Abstract

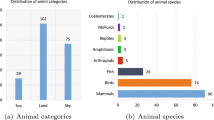

Multi-animal tracking (MAT), a multi-object tracking (MOT) problem, is crucial for animal motion and behavior analysis and has many important applications in areas such as biology, ecology, and animal conservation. Despite its importance, MAT is largely under-explored compared to other MOT problems such as multi-human tracking, owing to the scarcity of dedicated benchmarks. To address this problem, we introduce AnimalTrack, a dedicated benchmark for multi-animal tracking in the wild. Specifically, AnimalTrack consists of 58 sequences from a diverse selection of 10 common animal categories. On average, each sequence comprises 33 target objects for tracking. To ensure high quality, every frame in AnimalTrack is manually labeled with careful inspection and refinement. To the best of our knowledge, AnimalTrack is the first benchmark dedicated to multi-animal tracking. In addition, to understand how existing MOT algorithms perform on AnimalTrack and to provide baselines for future comparison, we extensively evaluate 14 state-of-the-art representative trackers. The evaluation results demonstrate that, not surprisingly, most of these trackers degrade on AnimalTrack because of the differences between pedestrians and animals in various aspects (e.g., pose, motion, and appearance), and that more effort is needed to improve multi-animal tracking. We hope that AnimalTrack, together with our evaluation and analysis, will foster further progress on multi-animal tracking. The dataset and evaluation, as well as our analysis, will be made available upon acceptance.

Notes

Each video sequence is collected under the Creative Commons license.

The annotation tool is available at https://github.com/darkpgmr/DarkLabel.

Acknowledgements

Libo Zhang was supported by the Key Research Program of Frontier Sciences, CAS (Grant No. ZDBSLY-JSC038), the CAAI-Huawei MindSpore Open Fund, and the Youth Innovation Promotion Association, CAS (2020111).

Additional information

Communicated by Angjoo Kanazawa.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Libo Zhang and Junyuan Gao contributed equally to this work. Heng Fan is the corresponding author.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, L., Gao, J., Xiao, Z. et al. AnimalTrack: A Benchmark for Multi-Animal Tracking in the Wild. Int J Comput Vis 131, 496–513 (2023). https://doi.org/10.1007/s11263-022-01711-8

DOI: https://doi.org/10.1007/s11263-022-01711-8