Abstract

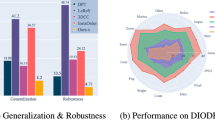

Wide field-of-view (FOV) cameras, which capture a larger scene area than narrow-FOV cameras, are used in many applications, including 3D reconstruction, autonomous driving, and video surveillance. However, wide-angle images contain distortions that violate the assumptions underlying pinhole camera models. These distortions deform objects, make it difficult to estimate scene distance, area, and direction, and prevent the use of off-the-shelf deep models trained on undistorted images for downstream computer vision tasks. Image rectification, which aims to correct these distortions, can address these problems. In this paper, we comprehensively survey progress in wide-angle image rectification, from transformation models to rectification methods. Specifically, we first present a detailed description and discussion of the camera models used in different approaches. Then, we summarize several distortion models, including radial distortion and projection distortion. Next, we review both traditional geometry-based image rectification methods and deep learning-based methods: the former formulate distortion parameter estimation as an optimization problem, while the latter treat it as a regression problem solved with deep neural networks. We evaluate the performance of state-of-the-art methods on public datasets and show that, although both kinds of methods can achieve good results, they only work well for specific camera models and distortion types. We also provide a strong baseline model and carry out an empirical study of different distortion models on synthetic datasets and real-world wide-angle images. Finally, we discuss several potential research directions that are expected to further advance this area.
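To make the geometry-based formulation concrete, here is a minimal sketch under stated assumptions; it is not code from the WAIR repository, and all function names are hypothetical. It assumes the one-parameter division model (a common radial distortion model) with the distortion centre known and placed at the origin of normalized image coordinates, and barrel distortion (λ ≤ 0). Parameter estimation is cast as an optimization problem: pick the λ that makes images of straight scene lines straight again. A brute-force grid search stands in for the nonlinear optimizers that practical methods use.

```python
import numpy as np


def undistort_division(points, lam, center=(0.0, 0.0)):
    """One-parameter division model: p_u = c + (p_d - c) / (1 + lam * r_d**2)."""
    c = np.asarray(center, dtype=float)
    d = np.asarray(points, dtype=float) - c
    r2 = np.sum(d**2, axis=1, keepdims=True)  # squared distorted radius r_d**2
    return c + d / (1.0 + lam * r2)


def distort_division(points, lam, center=(0.0, 0.0)):
    """Inverse of undistort_division, valid for lam <= 0 (barrel distortion).

    Solves lam*r_u*r_d**2 - r_d + r_u = 0 for r_d, using the rationalized root
    r_d / r_u = 2 / (1 + sqrt(1 - 4*lam*r_u**2)), which tends to 1 as lam -> 0
    and is well defined at the distortion centre (r_u == 0).
    """
    c = np.asarray(center, dtype=float)
    u = np.asarray(points, dtype=float) - c
    r2 = np.sum(u**2, axis=1, keepdims=True)  # squared undistorted radius r_u**2
    ratio = 2.0 / (1.0 + np.sqrt(1.0 - 4.0 * lam * r2))
    return c + u * ratio


def straightness_residual(points):
    """Sum of squared orthogonal distances to the total-least-squares line,
    i.e. the smallest eigenvalue of the centred 2x2 scatter matrix."""
    q = np.asarray(points, dtype=float)
    q = q - q.mean(axis=0)
    return float(np.linalg.eigvalsh(q.T @ q)[0])  # eigvalsh sorts ascending


def estimate_lambda(distorted_lines, candidates):
    """Grid search: the lambda whose undistortion makes all lines straightest."""
    costs = [
        sum(straightness_residual(undistort_division(line, lam)) for line in distorted_lines)
        for lam in candidates
    ]
    return float(candidates[int(np.argmin(costs))])


# Synthetic check: three straight lines that avoid the distortion centre,
# distorted with a known barrel parameter, then recovered by the search.
t = np.linspace(-0.8, 0.8, 25)
straight_lines = [
    np.stack([t, np.full_like(t, 0.5)], axis=1),            # y = 0.5
    np.stack([np.full_like(t, -0.6), 0.875 * t], axis=1),   # x = -0.6
    np.stack([t, 0.3 * t + 0.4], axis=1),                   # y = 0.3 x + 0.4
]
true_lam = -0.3
observed = [distort_division(line, true_lam) for line in straight_lines]
grid = np.linspace(-0.6, 0.0, 61)  # step 0.01
est = estimate_lambda(observed, grid)
print(f"estimated lambda = {est:.2f}")
```

The straightness cost only constrains λ when the scene contains lines that do not pass through the distortion centre, since lines through the centre stay straight under any radial distortion. A learning-based method would instead regress λ (or a dense displacement field) directly from image pixels, avoiding the explicit line extraction this sketch assumes.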


Notes

The source code, dataset, models, and more results will be released at: https://github.com/loong8888/WAIR.



Additional information

Communicated by Srinivasa Narasimhan.


This work was supported by Australian Research Council Projects FL-170100117, IH-180100002, IC-190100031.


About this article

Cite this article

Fan, J., Zhang, J., Maybank, S.J. et al. Wide-Angle Image Rectification: A Survey. Int J Comput Vis 130, 747–776 (2022). https://doi.org/10.1007/s11263-021-01562-9


DOI: https://doi.org/10.1007/s11263-021-01562-9