Abstract

Predictive models of student success in Massive Open Online Courses (MOOCs) are a critical component of effective content personalization and adaptive interventions. In this article we review the state of the art in predictive models of student success in MOOCs and present a categorization of MOOC research according to the predictors (features), prediction (outcomes), and underlying theoretical model. We critically survey work across each category, reporting the raw data source, feature engineering, statistical model, evaluation method, prediction architecture, and other aspects of these experiments. Such a review is particularly useful given the rapid expansion of predictive modeling research in MOOCs since the emergence of major MOOC platforms in 2012. This survey reveals several key methodological gaps: extensive filtering of experimental subpopulations, ineffective student model evaluation, and the use of experimental data that would be unavailable for real-world student success prediction and intervention, the ultimate goal of such models. Finally, we highlight opportunities for future research, including temporal modeling, research bridging predictive and explanatory student models, work that contributes to learning theory, and evaluation of long-term learner success in MOOCs.

Notes

Other work has emphasized the “openness” of MOOCs as reflective of open content and open-ended learning structures (e.g. Kennedy et al. 2015); this is highly debatable with current MOOC providers, where much of the content is under copyright and may follow strict instructivist designs, and we consider these senses of openness to be too constraining for the present work.

DiscourseDB (http://discoursedb.github.io/) and MOOCdb (https://github.com/MOOCdb) are both tools that bridge these different implementations and data sources across platforms, enabling research that encapsulates the full breadth of forum experiences. Both are now components of LearnSphere (http://learnsphere.org/).

educational-technology-collective.github.io/morf/.

We discuss concerns related to large numbers of comparisons, including with self-optimizing or auto-tuning machine learning toolkits, in a forthcoming work.

While the current survey is not specifically interested in the prediction of these outcomes, we include these works on the basis that they contain other, more direct predictions of student success in MOOCs or generate insights relevant to such predictions.

This concern is similar to that raised in Henrich et al. (2010) in the context of psychological research; as Henrich et al. argue, such sampling bias could have true and significant consequences for the generalizability of these findings.

Some corrections, such as the Bonferroni correction, can be applied by readers directly by simply multiplying the reported p value by the number of comparisons; however, even this depends on the researcher self-reporting the number of models considered. It is unlikely that the total number of models considered over the scope of an entire experiment is reported in most published research.

educational-technology-collective.github.io/morf/.
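As an illustration of the reader-applied Bonferroni correction mentioned in the notes above, the following is a minimal sketch rather than a procedure taken from the surveyed work. The function name `bonferroni_adjust`, the example p values, and the assumed count of ten comparisons are all hypothetical; the only step drawn from the note is multiplying each reported p value by the number of comparisons (capped at 1, since a p value cannot exceed 1).

```python
def bonferroni_adjust(p_values, n_comparisons):
    """Return Bonferroni-adjusted p values.

    Each reported p value is multiplied by the number of comparisons
    and capped at 1.0. `n_comparisons` must be supplied by the reader,
    which is exactly the quantity that often goes unreported.
    """
    if n_comparisons < 1:
        raise ValueError("n_comparisons must be at least 1")
    return [min(1.0, p * n_comparisons) for p in p_values]


# Hypothetical reported p values from a paper that (we assume)
# considered ten models in total.
reported = [0.004, 0.02, 0.30]
adjusted = bonferroni_adjust(reported, n_comparisons=10)

# Which results would remain significant at the 0.05 level?
still_significant = [p < 0.05 for p in adjusted]
print(adjusted, still_significant)
```

Under these assumed numbers only the first result stays below 0.05 after adjustment, which shows how sensitive the conclusion is to the reported number of comparisons.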

References

Adamopoulos, P.: What makes a great MOOC? an interdisciplinary analysis of student retention in online courses. In: Proceedings of the 34th International Conference on Information Systems, pp. 1–21 (2013)

Agudo-Peregrina, Á.F., Iglesias-Pradas, S., Conde-González, M.Á., Hernández-García, Á.: Can we predict success from log data in VLEs? Classification of interactions for learning analytics and their relation with performance in VLE-supported F2F and online learning. Comput. Hum. Behav. 31, 542–550 (2014)

Alexandron, G., Ruipérez-Valiente, J.A., Chen, Z., Muñoz-Merino, P.J., Pritchard, D.E.: Copying@ scale: using harvesting accounts for collecting correct answers in a MOOC. Comput. Educ. 108, 96–114 (2017)

Andres, J.M.L., Baker, R.S., Siemens, G., Gašević, D., Spann, C.A.: Replicating 21 findings on student success in online learning. Technol. Instr. Cognit. Learn. 10, 313–333 (2016)

Andres, J.M.L., Baker, R.S., Siemens, G., Gašević, D., Crossley, S.: Studying MOOC completion at scale using the MOOC replication framework. In: Proceedings of the International Conference on Learning Analytics and Knowledge, pp. 71–78 (2018)

Ashenafi, M.M., Riccardi, G., Ronchetti, M.: Predicting students’ final exam scores from their course activities. In: IEEE Frontiers in Education Conference (FIE), pp. 1–9 (2015)

Ashenafi, M.M., Ronchetti, M., Riccardi, G.: Predicting student progress from peer-assessment data. In: Proceedings of the 9th International Conference on Educational Data Mining, pp. 270–275 (2016)

Baehrens, D., Schroeter, T., Harmeling, S., Kawanabe, M., Hansen, K., Müller, K.R.: How to explain individual classification decisions. J. Mach. Learn. Res. 11(Jun), 1803–1831 (2010)

Baker, R.S., Lindrum, D., Lindrum, M.J., Perkowski, D.: Analyzing early at-risk factors in higher education e-learning courses. In: Proceedings of the 8th International Conference on Educational Data Mining, pp. 150–155 (2015)

Balakrishnan, G., Coetzee, D.: Predicting student retention in massive open online courses using hidden Markov models. Electrical Engineering and Computer Sciences, University of California at Berkeley, Technical report (2013)

Barber, R., Sharkey, M.: Course correction: using analytics to predict course success. In: Proceedings of the 2nd International Conference on Learning Analytics and Knowledge, LAK ’12, pp. 259–262. ACM, New York (2012)

Benavoli, A., Corani, G., Demsar, J., Zaffalon, M.: Time for a change: a tutorial for comparing multiple classifiers through Bayesian analysis. J. Mach. Learn. Res. 18(1), 2653–2688 (2017)

Benjamini, Y., Hochberg, Y.: Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. R. Stat. Soc. Ser. B Stat. Methodol. 57(1), 289–300 (1995)

Bote-Lorenzo, M.L., Gómez-Sánchez, E.: Predicting the decrease of engagement indicators in a MOOC. In: Proceedings of the Seventh International Learning Analytics and Knowledge Conference, LAK ’17, pp. 143–147. ACM, New York (2017)

Bouckaert, R.R., Frank, E.: Evaluating the replicability of significance tests for comparing learning algorithms. In: Advances in Knowledge Discovery and Data Mining, pp. 3–12. Springer, Berlin (2004)

Bouzayane, S., Saad, I.: Weekly predicting the at-risk MOOC learners using dominance-based rough set approach. In: Delgado, K.C., Jermann, P., Pérez-Sanagustín, M., Seaton, D., White, S. (eds.) Digital Education: Out to the World and Back to the Campus, pp. 160–169. Springer, Cham (2017)

Boyer, S., Veeramachaneni, K.: Transfer learning for predictive models in massive open online courses. In: Conati, C., Heffernan, N., Mitrovic, A., Verdejo, M. (eds.) Artificial Intelligence in Education, pp. 54–63. Springer, Cham (2015)

Breiman, L.: Statistical modeling: the two cultures (with comments and a rejoinder by the author). Stat. Sci. 16(3), 199–231 (2001)

Brinton, C.G., Chiang, M.: MOOC performance prediction via clickstream data and social learning networks. In: IEEE Conference on Computer Communications (INFOCOM), pp. 2299–2307 (2015)

Brinton, C.G., Buccapatnam, S., Chiang, M., Poor, H.V.: Mining MOOC clickstreams: on the relationship between learner video-watching behavior and performance (2015). https://arxiv.org/abs/1503.06489

Brooks, C., Thompson, C., Teasley, S.: A time series interaction analysis method for building predictive models of learners using log data. In: Proceedings of the Fifth International Conference on Learning Analytics and Knowledge, pp. 126–135. ACM, New York (2015a)

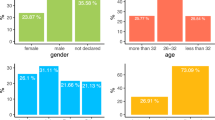

Brooks, C., Thompson, C., Teasley, S.: Who you are or what you do: comparing the predictive power of demographics vs. activity patterns in massive open online courses (MOOCs). In: Proceedings of the 2nd Conference on Learning @ Scale, L@S ’15, pp. 245–248. ACM, New York (2015b)

Champaign, J., Colvin, K.F., Liu, A., Fredericks, C., Seaton, D., Pritchard, D.E.: Correlating skill and improvement in 2 MOOCs with a student’s time on tasks. In: Proceedings of the First ACM Conference on Learning @ Scale Conference, L@S ’14, pp. 11–20. ACM, New York (2014)

Chaplot, D.S., Rhim, E., Kim, J.: Predicting student attrition in MOOCs using sentiment analysis and neural networks. In: Fourth Workshop on Intelligent Support for Learning in Groups, pp. 1–6 (2015a)

Chaplot, D.S., Rhim, E., Kim, J.: SAP: student attrition predictor. In: Proceedings of the 8th International Conference on Educational Data Mining, pp. 635–636 (2015b)

Chen, Y., Zhang, M.: MOOC student dropout: pattern and prevention. In: Proceedings of the ACM Turing 50th Celebration Conference—China, TUR-C ’17, pp. 4:1–4:6. ACM, New York (2017)

Cheng, J., Kulkarni, C., Klemmer, S.: Tools for predicting drop-off in large online classes. In: Proceedings of the 2013 Conference on Computer Supported Cooperative Work Companion, CSCW ’13, pp. 121–124. ACM, New York (2013)

Chuang, I., Ho, A.D.: HarvardX and MITx: four years of open online courses—fall 2012-summer 2016. Technical report, Harvard/MIT (2016)

Cocea, M., Weibelzahl, S.: Cross-system validation of engagement prediction from log files. In: Duval, E., Klamma, R., Wolpers, M. (eds.) Creating new learning experiences on a global scale. European conference on technology-enhanced learning (EC-TEL) 2007. Lecture notes in computer science, vol. 4753. Springer, Berlin (2007)

Coleman, C.A., Seaton, D.T., Chuang, I.: Probabilistic use cases: discovering behavioral patterns for predicting certification. In: Proceedings of the Second (2015) ACM Conference on Learning @ Scale, L@S ’15, pp. 141–148. ACM, New York (2015)

Coursera: Coursera Data Export Procedures. Coursera, Mountain View (2013)

Craven, M., Shavlik, J.W.: Extracting tree-structured representations of trained networks. In: Touretzky, D.S., Mozer, M.C., Hasselmo, M.E. (eds.) Advances in Neural Information Processing Systems, vol. 8, pp. 24–30. MIT Press, Cambridge (1996)

Crossley, S., Paquette, L., Dascalu, M., McNamara, D.S., Baker, R.S.: Combining click-stream data with NLP tools to better understand MOOC completion. In: Proceedings of the Sixth International Conference on Learning Analytics and Knowledge, LAK ’16, pp. 6–14. ACM, New York (2016)

DeBoer, J., Breslow, L.: Tracking progress: predictors of students’ weekly achievement during a circuits and electronics MOOC. In: Proceedings of the First ACM Conference on Learning @ Scale Conference, L@S ’14, pp. 169–170. ACM, New York (2014)

DeBoer, J., Stump, G.S., Seaton, D., Ho, A., Pritchard, D.E., Breslow, L.: Bringing student backgrounds online: MOOC user demographics, site usage, and online learning. In: Proceedings of the Sixth International Conference on Educational Data Mining, pp. 312–313 (2013)

DeBoer, J., Ho, A.D., Stump, G.S., Breslow, L.: Changing “course”: reconceptualizing educational variables for massive open online courses. Educ. Res. 43(2), 74–84 (2014)

Demšar, J.: Statistical comparisons of classifiers over multiple data sets. J. Mach. Learn. Res. 7(Jan), 1–30 (2006)

Dietterich, T.G.: Approximate statistical tests for comparing supervised classification learning algorithms. Neural Comput. 10(7), 1895–1923 (1998)

Dillon, J., Bosch, N., Chetlur, M., Wanigasekara, N., Ambrose, G.A., Sengupta, B., D’Mello, S.K.: Student emotion, co-occurrence, and dropout in a MOOC context. In: Proceedings of the 9th International Conference on Educational Data Mining, pp. 353–357 (2016)

Domingos, P.: Occam’s two razors: the sharp and the blunt. In: KDD, American Association for Artificial Intelligence, pp. 37–43 (1998)

Domingos, P.: The role of Occam’s razor in knowledge discovery. Data Min. Knowl. Discov. 3(4), 409–425 (1999)

Donoho, D.: 50 years of data science. In: Tukey Centennial Workshop, Princeton, pp. 1–41 (2015)

Dowell, N.M., Skrypnyk, O., Joksimovic, S., et al.: Modeling learners’ social centrality and performance through language and discourse. In: Proceedings of the 8th International Conference on Educational Data Mining, pp. 250–257 (2015)

Dowell, N.M.M., Brooks, C., Kovanović, V., Joksimović, S., Gašević, D.: The changing patterns of MOOC discourse. In: Proceedings of the Fourth (2017) ACM Conference on Learning @ Scale, L@S ’17, pp. 283–286. ACM, New York (2017)

Dupin-Bryant, P.A.: Pre-entry variables related to retention in online distance education. Am. J. Distance Educ. 18(4), 199–206 (2004)

Evans, B.J., Baker, R.B., Dee, T.S.: Persistence patterns in massive open online courses (MOOCs). J. High. Educ. 87(2), 206–242 (2016)

Fei, M., Yeung, D.Y.: Temporal models for predicting student dropout in massive open online courses. In: 2015 IEEE International Conference on Data Mining Workshop (ICDMW), pp. 256–263 (2015)

Fire, M., Katz, G., Elovici, Y., Shapira, B., Rokach, L.: Predicting student exam’s scores by analyzing social network data. In: Active Media Technology, pp. 584–595. Springer, Berlin (2012)

García-Saiz, D., Zorrilla, M.: A meta-learning based framework for building algorithm recommenders: an application for educational arena. J. Intell. Fuzzy Syst. 32(2), 1449–1459 (2017)

Gardner, J., Brooks, C.: Dropout model evaluation in MOOCs. In: Eighth AAAI Symposium on Educational Advances in Artificial Intelligence (EAAI-18), Association for the Advancement of Artificial Intelligence (AAAI), pp. 1–7 (2018)

Gardner, J., Brooks, C., Andres, J.M.L., Baker, R.: MORF: A framework for MOOC predictive modeling and replication at scale (2018). arXiv:1801.05236

Garman, G.: A logistic approach to predicting student success in online database courses. Am. J. Bus. Educ. 3(12), 1 (2010)

Gašević, D., Zouaq, A., Janzen, R.: “Choose your classmates, your GPA is at stake!” the association of cross-class social ties and academic performance. Am. Behav. Sci. 57(10), 1460–1479 (2013)

Gelman, A., Loken, E.: The garden of forking paths: why multiple comparisons can be a problem, even when there is no “fishing expedition” or “p-hacking” and the research hypothesis was posited ahead of time. Technical report (2013)

Gelman, A., Hill, J., Yajima, M.: Why we (usually) don’t have to worry about multiple comparisons. J. Res. Educ. Eff. 5(2), 189–211 (2012)

Glass, C.R., Shiokawa-Baklan, M.S., Saltarelli, A.J.: Who takes MOOCs? New Dir. Inst. Res. 2015(167), 41–55 (2016)

Greene, J.A., Oswald, C.A., Pomerantz, J.: Predictors of retention and achievement in a massive open online course. Am. Educ. Res. J. 52(5), 925–955 (2015)

Guo, P.J., Reinecke, K.: Demographic differences in how students navigate through MOOCs. In: Proceedings of the First ACM Conference on Learning @ Scale Conference, L@S ’14, pp. 21–30. ACM, New York (2014)

Gütl, C., Rizzardini, R.H., Chang, V., Morales, M.: Attrition in MOOC: lessons learned from drop-out students. In: Uden, L., Sinclair, J., Tao, Y.H., Liberona, D. (eds.) Learning Technology for Education in Cloud. MOOC and Big Data, pp. 37–48. Springer, Cham (2014)

Halawa, S., Greene, D., Mitchell, J.: Dropout prediction in MOOCs using learner activity features. In: Experiences and Best Practices in and Around MOOCs, vol. 7, pp. 3–12 (2014)

Hansen, J.D., Reich, J.: Socioeconomic status and MOOC enrollment: enriching demographic information with external datasets. In: Proceedings of the Fifth International Conference on Learning Analytics And Knowledge, LAK ’15, pp. 59–63. ACM, New York (2015)

He, J., Bailey, J., Rubinstein, B.I.P., Zhang, R.: Identifying at-risk students in massive open online courses. In: Proceedings of the Twenty-Ninth AAAI Conference on Artificial Intelligence, pp. 1749–1755 (2015)

Henrich, J., Heine, S.J., Norenzayan, A.: The weirdest people in the world? Behav. Brain Sci. 33(2–3), 61–83 (2010). (discussion 83–135)

Ho, A.: Advancing educational research and student privacy in the “big data” era. In: Workshop on Big Data in Education: Balancing the Benefits of Educational Research and Student Privacy, pp. 1–18. National Academy of Education, Washington (2017)

Hosseini, R., Brusilovsky, P., Yudelson, M., Hellas, A.: Stereotype modeling for Problem-Solving performance predictions in MOOCs and traditional courses. In: Proceedings of the 25th Conference on User Modeling, Adaptation and Personalization, UMAP ’17, pp. 76–84. ACM, New York (2017)

Ishwaran, H., Kogalur, U.B., Blackstone, E.H., Lauer, M.S.: Random survival forests. Ann. Appl. Stat. 2(3), 841–860 (2008)

Park, J.-H., Choi, H.J.: Factors influencing adult learners’ decision to drop out or persist in online learning. J. Educ. Technol. Soc. 12(4), 207–217 (2009)

Jiang, S., Fitzhugh, S.M., Warschauer, M.: Social positioning and performance in MOOCs. In: Proceedings of the 2014 Workshop on Graph-Based Educational Data Mining, pp. 55–58 (2014a)

Jiang, S., Williams, A., Schenke, K., Warschauer, M., O’dowd, D.: Predicting MOOC performance with week 1 behavior. In: Proceedings of the 7th International Conference on Educational Data Mining, pp. 273–275 (2014b)

Joksimović, S., Manataki, A., Gašević, D., Dawson, S., Kovanović, V., de Kereki, I.F.: Translating network position into performance: importance of centrality in different network configurations. In: Proceedings of the 6th International Conference on Learning Analytics and Knowledge, LAK ’16, pp. 314–323. ACM, New York (2016)

Jordan, K.: Initial trends in enrolment and completion of massive open online courses. Int. Rev. Res. Open Distrib. Learn. 15(1), 133–160 (2014)

Jordan, K.: MOOC completion rates: the data. http://www.katyjordan.com/MOOCproject. Accessed 2017-9-15 (2015)

Kennedy, G., Coffrin, C., de Barba, P., Corrin, L.: Predicting success: how learners’ prior knowledge, skills and activities predict MOOC performance. In: Proceedings of the Fifth International Conference on Learning Analytics and Knowledge, LAK ’15, pp. 136–140. ACM, New York (2015)

Khalil, H., Ebner, M.: MOOCs completion rates and possible methods to improve retention-a literature review. In: World Conference on Educational Multimedia, Hypermedia and Telecommunications, pp. 1305–1313 (2014)

Kitzes, J., Turek, D., Deniz, F.: The Practice of Reproducible Research: Case Studies and Lessons from the Data-Intensive Sciences. University of California Press, Berkeley (2017)

Kizilcec, R.F., Cohen, G.L.: Eight-minute self-regulation intervention raises educational attainment at scale in individualist but not collectivist cultures. Proc. Natl. Acad. Sci. USA 114(17), 4348–4353 (2017)

Kizilcec, R.F., Halawa, S.: Attrition and achievement gaps in online learning. In: Proceedings of the Second (2015) ACM Conference on Learning @ Scale, L@S ’15, pp. 57–66. ACM, New York (2015)

Kloft, M., Stiehler, F., Zheng, Z., Pinkwart, N.: Predicting MOOC dropout over weeks using machine learning methods. In: Proceedings of the EMNLP 2014 Workshop on Analysis of Large Scale Social Interaction in MOOCs, pp. 60–65 (2014)

Koedinger, K.R., Kim, J., Jia, J.Z., McLaughlin, E.A., Bier, N.L.: Learning is not a spectator sport: Doing is better than watching for learning from a MOOC. In: Proceedings of the Second (2015) ACM Conference on Learning @ Scale, L@S ’15, pp. 111–120. ACM, New York (2015)

Koller, D., Ng, A., Do, C., Chen, Z.: Retention and intention in massive open online courses: in depth. Educ. Rev. 48(3), 62–63 (2013)

Kotsiantis, S., Patriarcheas, K., Xenos, M.: A combinational incremental ensemble of classifiers as a technique for predicting students’ performance in distance education. Knowl. Based Syst. 23(6), 529–535 (2010)

Kotsiantis, S.B., Pierrakeas, C.J., Pintelas, P.E.: Preventing student dropout in distance learning using machine learning techniques. In: Palade, V., Howlett, R.J., Jain, L. (eds.) Knowledge-Based Intelligent Information and Engineering Systems. Lecture Notes in Computer Science, pp. 267–274. Springer, Berlin (2003)

Levy, Y.: Comparing dropouts and persistence in e-learning courses. Comput. Educ. 48(2), 185–204 (2007)

Li, W., Gao, M., Li, H., Xiong, Q., Wen, J., Wu, Z.: Dropout prediction in MOOCs using behavior features and multi-view semi-supervised learning. In: 2016 International Joint Conference on Neural Networks (IJCNN), pp. 3130–3137 (2016a)

Li, X., Xie, L., Wang, H.: Grade prediction in MOOCs. In: 2016 IEEE International Conference on Computational Science and Engineering (CSE) and IEEE International Conference on Embedded and Ubiquitous Computing (EUC) and 15th International Symposium on Distributed Computing and Applications for Business Engineering (DCABES), pp. 386–392 (2016b)

Li, X., Wang, T., Wang, H.: Exploring n-gram features in clickstream data for MOOC learning achievement prediction. In: Database Systems for Advanced Applications, pp. 328–339. Springer, Cham (2017)

Liang, J., Li, C., Zheng, L.: Machine learning application in MOOCs: dropout prediction. In: 2016 11th International Conference on Computer Science Education (ICCSE), pp. 52–57 (2016)

Luo, L., Koprinska, I., Liu, W.: Discrimination-aware classifiers for student performance prediction. In: Proceedings of the 8th International Conference on Educational Data Mining, pp. 384–387 (2015)

Lykourentzou, I., Giannoukos, I., Nikolopoulos, V., Mpardis, G., Loumos, V.: Dropout prediction in e-learning courses through the combination of machine learning techniques. Comput. Educ. 53(3), 950–965 (2009)

Makel, M.C., Plucker, J.A.: Facts are more important than novelty: replication in the education sciences. Educ. Res. 43(6), 304–316 (2014)

Molina, M.M., Luna, J.M., Romero, C., Ventura, S.: Meta-learning approach for automatic parameter tuning: a case study with educational datasets. In: Proceedings of the 5th International Conference on Educational Data Mining, pp. 180–183 (2012)

Nagrecha, S., Dillon, J.Z., Chawla, N.V.: MOOC dropout prediction: lessons learned from making pipelines interpretable. In: Proceedings of the 26th International Conference on World Wide Web Companion, International World Wide Web Conferences Steering Committee, Republic and Canton of Geneva, Switzerland, WWW ’17 Companion, pp. 351–359 (2017)

Ocumpaugh, J., Baker, R., Gowda, S., Heffernan, N., Heffernan, C.: Population validity for educational data mining models: a case study in affect detection. Br. J. Educ. Technol. 45(3), 487–501 (2014)

Pappano, L.: The year of the MOOC. NY Times 2(12) (2012)

Pardos, Z.A., Baker, R.S.J.D., San Pedro, M.O.C.Z., Gowda, S.M., Gowda, S.M.: Affective states and state tests: Investigating how affect throughout the school year predicts end of year learning outcomes. In: Proceedings of the 3rd International Conference on Learning Analytics and Knowledge, LAK ’13, pp. 117–124. ACM, New York (2013)

Pardos, Z.A., Tang, S., Davis, D., Le, C.V.: Enabling real-time adaptivity in MOOCs with a personalized Next-Step recommendation framework. In: Proceedings of the 4th ACM Conference on Learning @ Scale, L@S ’17, pp. 23–32. ACM, New York (2017)

Peng, R.D.: Reproducible research in computational science. Science 334(6060), 1226–1227 (2011)

Pennebaker, J.W., Booth, R.J., Boyd, R.L., Francis, M.E.: Linguistic Inquiry and Word Count: LIWC2015. Pennebaker Conglomerates, Austin (2015)

Perna, L.W., Ruby, A., Boruch, R.F., Wang, N., Scull, J., Ahmad, S., Evans, C.: Moving through MOOCs: understanding the progression of users in massive open online courses. Educ. Res. 43(9), 421–432 (2014)

Pham, P., Wang, J.: AttentiveLearner: improving mobile MOOC learning via implicit heart rate tracking. In: Proceedings of the International Conference on Artificial Intelligence in Education, pp. 367–376. Springer, Cham (2015)

Qiu, J., Tang, J., Liu, T.X., Gong, J., Zhang, C., Zhang, Q., Xue, Y.: Modeling and predicting learning behavior in MOOCs. In: Proceedings of the Ninth ACM International Conference on Web Search and Data Mining, WSDM ’16, pp. 93–102. ACM, New York (2016)

Ramesh, A., Goldwasser, D., Huang, B., Daumé, H. III, Getoor, L.: Modeling learner engagement in MOOCs using probabilistic soft logic. In: NIPS Workshop on Data Driven Education, vol. 21, p. 62 (2013)

Ramesh, A., Goldwasser, D., Huang, B., Daume, H. III, Getoor, L.: Uncovering hidden engagement patterns for predicting learner performance in MOOCs. In: Proceedings of the First ACM Conference on Learning @ Scale Conference, L@S ’14, pp. 157–158. ACM, New York (2014)

Ramos, C., Yudko, E.: “Hits” (not “discussion posts”) predict student success in online courses: a double cross-validation study. Comput. Educ. 50(4), 1174–1182 (2008)

Reich, J.: MOOC completion and retention in the context of student intent. EDUCAUSE Review Online (2014)

Ren, Z., Rangwala, H., Johri, A.: Predicting performance on MOOC assessments using multi-regression models (2016). arXiv:1605.02269

Ribeiro, M.T., Singh, S., Guestrin, C.: Why should I trust you? Explaining the predictions of any classifier. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 1135–1144. ACM, New York (2016)

Robinson, C., Yeomans, M., Reich, J., Hulleman, C., Gehlbach, H.: Forecasting student achievement in MOOCs with natural language processing. In: Proceedings of the Sixth International Conference on Learning Analytics and Knowledge, LAK ’16, pp. 383–387. ACM, New York (2016)

Romero, C., Olmo, J.L., Ventura, S.: A meta-learning approach for recommending a subset of white-box classification algorithms for Moodle datasets. In: Proceedings of the Sixth International Conference on Educational Data Mining, pp. 268–271 (2013)

Rosé, C.P., Carlson, R., Yang, D., Wen, M., Resnick, L., Goldman, P., Sherer, J.: Social factors that contribute to attrition in MOOCs. In: Proceedings of the First ACM Conference on Learning @ Scale Conference, L@S ’14, pp. 197–198. ACM, New York (2014)

Rosenthal, R.: The file drawer problem and tolerance for null results. Psychol. Bull. 86(3), 638 (1979)

Russo, T.C., Koesten, J.: Prestige, centrality, and learning: a social network analysis of an online class. Commun. Educ. 54(3), 254–261 (2005)

Sanchez-Santillan, M., Paule-Ruiz, M., Cerezo, R., Nuñez, J.: Predicting students’ performance: incremental interaction classifiers. In: Proceedings of the Third (2016) ACM Conference on Learning @ Scale, L@S ’16, pp. 217–220. ACM, New York (2016)

Seaton, D.T., Coleman, C., Daries, J., Chuang, I.: Enrollment in MITx MOOCs: are we educating educators. Educause Review (2015)

Shah, D.: By the numbers: MOOCS in 2017 (2018). https://www.class-central.com/report/mooc-stats-2017/. Accessed 2018-4-8

Sharkey, M., Sanders, R.: A process for predicting MOOC attrition. In: Proceedings of the EMNLP 2014 Workshop on Analysis of Large Scale Social Interaction in MOOCs, pp. 50–54 (2014)

Sinha, T., Cassell, J.: Connecting the dots: Predicting student grade sequences from bursty MOOC interactions over time. In: Proceedings of the Second (2015) ACM Conference on Learning @ Scale, L@S ’15, pp. 249–252. ACM, New York (2015)

Sinha, T., Jermann, P., Li, N., Dillenbourg, P.: Your click decides your fate: inferring information processing and attrition behavior from MOOC video clickstream interactions. In: Proceedings of the EMNLP 2014 Workshop on Analysis of Large Scale Social Interaction in MOOCs, pp. 3–13 (2014a)

Sinha, T., Li, N., Jermann, P., Dillenbourg, P.: Capturing “attrition intensifying” structural traits from didactic interaction sequences of MOOC learners (2014b). arXiv:1409.5887

Slade, S., Prinsloo, P.: Learning analytics: ethical issues and dilemmas. Am. Behav. Sci. 57(10), 1510–1529 (2013)

Stein, R.M., Allione, G.: Mass attrition: an analysis of drop out from a principles of microeconomics MOOC. Technical report, Penn Institute for Economic Research (2014)

Stodden, V., Miguez, S.: Best practices for computational science: software infrastructure and environments for reproducible and extensible research. J. Open Res. Softw. 2(1), 1–6 (2013)

Street, H.D.: Factors influencing a learner’s decision to drop-out or persist in higher education distance learning. Online J. Distance Learn. Adm. 13(4), 4 (2010)

Tang, J.K.T., Xie, H., Wong, T.L.: A big data framework for early identification of dropout students in MOOC. In: Lam, J., Ng, K., Cheung, S., Wong, T., Li, K., Wang, F. (eds.) Technology in Education. Technology-Mediated Proactive Learning, pp. 127–132. Springer, Berlin (2015)

Taylor, C.: Stopout prediction in massive open online courses. PhD thesis, Massachusetts Institute of Technology (2014)

Taylor, C., Veeramachaneni, K., O’Reilly, U.M.: Likely to stop? Predicting stopout in massive open online courses (2014). arXiv:1408.3382

Tinto, V.: Research and practice of student retention: what next? J. Coll. Stud. Retent. 8(1), 1–19 (2006)

Tucker, C., Pursel, B.K., Divinsky, A.: Mining student-generated textual data in MOOCs and quantifying their effects on student performance and learning outcomes. Comput. Educ. J. 5(4), 84–95 (2014)

Veeramachaneni, K., O’Reilly, U.M., Taylor, C.: Towards feature engineering at scale for data from massive open online courses (2014). arXiv:1407.5238

Vitiello, M., Walk, S., Hernández, R., Helic, D., Gutl, C.: Classifying students to improve MOOC dropout rates. In: Proceedings of the European MOOC Stakeholder Summit, pp. 501–507 (2016)

Vitiello, M., Gütl, C., Amado-Salvatierra, H.R., Hernández, R.: MOOC learner behaviour: attrition and retention analysis and prediction based on 11 courses on the TELESCOPE platform. In: Learning Technology for Education Challenges. Communications in Computer and Information Science, pp. 99–109. Springer, Cham (2017a)

Vitiello, M., Walk, S., Chang, V., Hernandez, R., Helic, D., Guetl, C.: MOOC dropouts: a multi-system classifier. In: Lavoué, É., Drachsler, H., Verbert, K., Broisin, J., Pérez-Sanagustín, M. (eds.) Data Driven Approaches in Digital Education. Lecture Notes in Computer Science, pp. 300–314. Springer, Cham (2017b)

Wang, F., Chen, L.: A nonlinear state space model for identifying at-risk students in open online courses. In: Proceedings of the 9th International Conference on Educational Data Mining, pp. 527–532 (2016)

Wang, X., Yang, D., Wen, M., Koedinger, K., Rosé, C.P.: Investigating how student’s cognitive behavior in MOOC discussion forums affect learning gains. In: Proceedings of the 8th International Conference on Educational Data Mining, pp. 226–233 (2015)

Wang, Y.: Demystifying learner success: before, during, and after a massive open online course. PhD thesis, Teachers College, Columbia University (2017)

Wen, M., Yang, D., Rose, C.: Sentiment analysis in MOOC discussion forums: what does it tell us? In: Proceedings of the 7th International Conference on Educational Data Mining, pp. 130–137 (2014a)

Wen, M., Yang, D., Rosé, C.P.: Linguistic reflections of student engagement in massive open online courses. In: Proceedings of the Eighth International AAAI Conference on Weblogs and Social Media, pp. 525–534 (2014b)

Whitehill, J., Williams, J., Lopez, G., Coleman, C., Reich, J.: Beyond prediction: Toward automatic intervention to reduce MOOC student stopout. In: Proceedings of the 8th International Conference on Educational Data Mining, pp. 171–178 (2015)

Whitehill, J., Mohan, K., Seaton, D., Rosen, Y., Tingley, D.: Delving deeper into MOOC student dropout prediction (2017). arXiv:1702.06404

Willging, P.A., Johnson, S.D.: Factors that influence students’ decision to dropout of online courses. J. Asynchronous Learn. Netw. 13(3), 115–127 (2009)

Wojciechowski, A., Palmer, L.B.: Individual student characteristics: can any be predictors of success in online classes? Online J. Distance Learn. Adm. 8(2), 1–21 (2005)

Wolpert, D.H., Macready, W.G.: No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1(1), 67–82 (1997)

Xiao, X., Pham, P., Wang, J.: AttentiveLearner: adaptive mobile MOOC learning via implicit cognitive states inference. In: Proceedings of the 2015 ACM International Conference on Multimodal Interaction, ICMI ’15, pp. 373–374. ACM, New York (2015)

Xing, W., Chen, X., Stein, J., Marcinkowski, M.: Temporal predication of dropouts in MOOCs: reaching the low hanging fruit through stacking generalization. Comput. Hum. Behav. 58, 119–129 (2016)

Xu, B., Yang, D.: Motivation classification and grade prediction for MOOCs learners. Comput. Intell. Neurosci. 2016, 2174613 (2016)

Yang, D., Sinha, T., Adamson, D., Rosé, C.P.: Turn on, tune in, drop out: anticipating student dropouts in massive open online courses. In: Proceedings of the 2013 NIPS Data-Driven Education Workshop, vol. 11, p. 14 (2013)

Yang, D., Wen, M., Kumar, A., Xing, E.P., Rose, C.P.: Towards an integration of text and graph clustering methods as a lens for studying social interaction in MOOCs. Int. Rev. Res. Open Distrib. Learn. 15(5), 215–234 (2014)

Yang, D., Wen, M., Howley, I., Kraut, R., Rose, C.: Exploring the effect of confusion in discussion forums of massive open online courses. In: Proceedings of the Second (2015) ACM Conference on Learning @ Scale, L@S ’15, pp. 121–130. ACM, New York (2015)

Ye, C., Biswas, G.: Early prediction of student dropout and performance in MOOCs using higher granularity temporal information. J. Learn. Anal. 1(3), 169–172 (2014)

Ye, C., Kinnebrew, J.S., Biswas, G., Evans, B.J., Fisher, D.H., Narasimham, G., Brady, K.A.: Behavior prediction in MOOCs using higher granularity temporal information. In: Proceedings of the Second (2015) ACM Conference on Learning @ Scale, L@S ’15, pp. 335–338. ACM, New York (2015)

Zafra, A., Ventura, S.: Multi-instance genetic programming for predicting student performance in web based educational environments. Appl. Soft Comput. 12(8), 2693–2706 (2012)

Zhou, Q., Mou, C., Yang, D.: Research progress on educational data mining a survey. J. Softw. Maint. Evol. Res. Pract. 26(11), 3026–3042 (2015)

Acknowledgements

This work was funded in part by the Michigan Institute for Data Science (MIDAS) Holistic Modeling of Education (HOME) project, and the University of Michigan Third Century Initiative. The authors would like to thank the four anonymous reviewers for their comments on the work.

Cite this article

Gardner, J., Brooks, C. Student success prediction in MOOCs. User Model User-Adap Inter 28, 127–203 (2018). https://doi.org/10.1007/s11257-018-9203-z

DOI: https://doi.org/10.1007/s11257-018-9203-z