Abstract

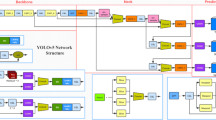

As autonomous vehicles gain popularity and intelligent transportation develops rapidly, pedestrian detection is being applied in an increasingly wide range of everyday scenarios and has growing practical value. Pedestrian detection underpins many human-centered tasks, including speed tracking, pedestrian motion detection, automatic pedestrian recognition, the selection of appropriate response measures, and the rejection of false-positive pedestrian detections. Although this area has been studied extensively, existing systems still make many errors when rejecting false-positive pedestrians. This article focuses on the design and implementation of a deep learning approach that identifies real pedestrians and rejects false ones. Our goal is to assess how well a current 2D detection system combined with a 3D convolutional neural network (CNN) rejects false-positive pedestrians, using images from the car’s on-board cameras and data from light detection and ranging (LiDAR) sensors. We evaluate single-stage (YOLOv3) and two-stage (Faster R-CNN) deep learning meta-architectures under different image resolutions and feature extractors (MobileNet). To address the false-positive problem, a data augmentation approach is applied to improve the performance of the framework. The implemented methods are evaluated on recent datasets. The experimental results show that the proposed method improves the accuracy of distinguishing true from false pedestrians while still meeting real-time requirements.
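The abstract does not state how false-positive pedestrians are counted. As an illustration only, the sketch below assumes a common convention from 2D detection benchmarks: a predicted box counts as a true positive if its intersection over union (IoU) with a not-yet-matched ground-truth box is at least 0.5, and as a false positive otherwise. The names `iou` and `count_detections`, the box format, and the threshold are assumptions made for this example, not the authors' implementation.

```python
from typing import List, Tuple

# Hypothetical box format for this sketch: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def count_detections(
    predictions: List[Box],
    ground_truth: List[Box],
    iou_threshold: float = 0.5,  # assumed threshold, not taken from the article
) -> Tuple[int, int, int]:
    """Return (true_positives, false_positives, false_negatives).

    Predictions are assumed to be sorted by descending confidence, so that
    higher-scoring boxes claim ground-truth pedestrians first.
    """
    matched = set()  # indices of ground-truth boxes already claimed
    tp = fp = 0
    for pred in predictions:
        best_iou, best_idx = 0.0, -1
        for idx, gt in enumerate(ground_truth):
            if idx in matched:
                continue
            overlap = iou(pred, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_iou >= iou_threshold:
            matched.add(best_idx)
            tp += 1
        else:
            fp += 1  # no sufficiently overlapping pedestrian: a false positive
    fn = len(ground_truth) - len(matched)
    return tp, fp, fn


if __name__ == "__main__":
    preds = [(0, 0, 10, 10), (50, 50, 60, 60)]
    truth = [(1, 1, 10, 10)]
    print(count_detections(preds, truth))
```

Under these assumptions, a detector that rejects more false pedestrians lowers the second count without reducing the first, which is the behaviour the abstract describes as the goal.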

Acknowledgements

This work was supported by the EIAS Data Science Lab, College of Computer and Information Sciences, Prince Sultan University, Riyadh, Saudi Arabia.

Funding

The authors have not disclosed any funding.

Ethics declarations

Competing Interests

The authors have not disclosed any competing interests.

About this article

Cite this article

Iftikhar, S., Asim, M., Zhang, Z. et al. Advance generalization technique through 3D CNN to overcome the false positives pedestrian in autonomous vehicles. Telecommun Syst 80, 545–557 (2022). https://doi.org/10.1007/s11235-022-00930-1

DOI: https://doi.org/10.1007/s11235-022-00930-1