Abstract

According to the hierarchy of models (HoM) account of scientific experimentation developed by Patrick Suppes and elaborated by Deborah Mayo, theoretical considerations about the phenomena of interest are involved in an experiment through theoretical models that in turn relate to experimental data through data models, via the linkage of experimental models. In this paper, I dispute the HoM account in the context of present-day high-energy physics (HEP) experiments. I argue that even though the HoM account aims to characterize experimentation as a model-based activity, it does not involve a modeling concept for the process of data acquisition, and it thus fails to provide a model-based characterization of the theory-experiment relationship underlying this process. In order to characterize the foregoing relationship, I propose the concept of a model of data acquisition and illustrate it in the case of the ATLAS experiment at CERN’s Large Hadron Collider, where the Higgs boson was discovered in 2012. I show that the process of data acquisition in the ATLAS experiment is performed according to a model of data acquisition that specifies and organizes the experimental procedures necessary to select the data according to a predetermined set of selection criteria. I also point out that this data acquisition model is theory-laden, in the sense that the underlying data selection criteria are determined by considering the testable predictions of the theoretical models that the ATLAS experiment is aimed to test. I take this sense of theory-ladenness to indicate that the relationship between the procedures of the ATLAS experiment and the theoretical models of the phenomena of interest is first established, prior to the formation of data models, through the data acquisition model of the experiment, thus not requiring the intermediary of other types of models as suggested by the HoM account. I therefore conclude that in the context of present-day HEP experiments, the HoM account does not consistently extend to the process of data acquisition so as to include models of data acquisition.

Similar content being viewed by others

1 Introduction

A scientific experiment consists of various stages ranging from the design and construction of experimental set-up and procedures to the acquisition and analysis of experimental data and the interpretation of experimental results. As is widely acknowledged in the philosophical literature, experimentation is theory-laden, because the various stages of an experiment involve, to varying extents, theoretical considerations, not only about the phenomena of interest but also about the working of the experimental set-up and procedures. It is therefore important for the epistemology of experimentation to understand how the various stages of an experiment are organized and coordinated with each other as well as how and to what extent theoretical considerations are involved in the stages of an experiment and what kinds of effects they have on experimental results. In the philosophical literature, the foregoing questions have been dealt with from a modeling perspective by what is today referred to as the hierarchy of models (HoM) account of scientific experimentation, which was developed by Patrick Suppes (1962) and elaborated by Deborah Mayo (1996). According to the HoM account, a scientific experiment is essentially a model-based activity, in the sense that it is performed by means of various types of models that relate to each other through a hierarchical structure. Over the years, the HoM account has been applied to various experimental contexts, including the case of electron microscopy (Harris 1999), the case of binary pulsar analysis (Mayo 2000), the case of the Collider Detector Experiment at Fermilab (Staley 2004), and the case of simulation studies (Winsberg 1999).

In this paper, I will dispute the HoM account in the context of present-day high-energy physics experiments (HEP). To this end, I will present a case study that examines the process of data acquisition as well as the statistical testing of the Higgs boson hypothesis in the ATLASFootnote 1 experiment at CERN’s Large Hadron Collider (LHC),Footnote 2 where the Higgs boson was discovered in 2012 (ATLAS Collaboration 2012a). The present case study is also aimed at elucidating the role of theoretical considerations in the production of experimental data in the context of present-day HEP experiments. This is an important issue for the epistemology of scientific experimentation but has not yet received due attention from philosophers of science, while the relevant philosophical literature has so far largely focused on the role of theoretical considerations in the production and interpretation of experimental results.Footnote 3

The plan of the present paper is as follows. In Sect. 2, I will revisit the HoM account and argue that it fails to provide a model-based characterization of the process of data acquisition. In Sect. 3, I will discuss the necessity of modeling the process of data acquisition in present-day HEP experiments. In Sect. 4, I will examine how data selection criteria are determined in the ATLAS experiment. In Sect. 5, I will show that the process of data acquisition in the ATLAS experiment is modeled as a three-level data selection process. In Sect. 6, I will examine the statistical testing of the Higgs boson hypothesis in the ATLAS experiment. Finally, in Sect. 7, I will present the conclusions of the present case study and discuss their implications for the HoM account.

2 Theory-experiment relationship according the HoM account

In an influential paper, entitled “Models of Data,” Suppes argued that “exact analysis of the relation between empirical theories and relevant data calls for a hierarchy of models of different logical type” (Suppes 1962, p. 253). At the top end of his proposed HoM lie what he calls “models of theory.” Suppes defines a model of a theory to be “a possible realization in which all valid sentences of the theory are satisfied” (ibid., p. 252).Footnote 4 One step down the proposed HoM lie what he calls “models of experiment,” which are primarily developed to confront testable conclusions of models of theory with experimental data. Models of experiment fulfill this task by specifying various factors in an experiment, such as the testing rule, the choice of experimental parameters, the number of trials, specific procedures by which data are to be collected, as well as the range of data. Therefore, models of experiment are linkage models between models of theory and what Suppes calls “models of data,” which constitute the third level of the proposed HoM. In Suppes’ account, each model of data includes a possible realization of experimental data, but not vice versa. In order for a set of data to count as a model of data, it is required to satisfy the statistical features of data (such as homogeneity and stationarity) that are demanded by the experimental model used for hypothesis testing. In this sense, in Suppes’s account, models of data offer canonical representations of experimental data and “incorporate all the information about the experiment which can be used in statistical tests of the adequacy of the theory” (ibid., p. 258). At the bottom of the proposed HoM lie two more levels, the first of which is what Suppes calls the level of “experimental design” that concerns experimental procedures, such as calibration of instruments and randomization of data, which directly relate to the formation of models of data. Below this level, one finds what Suppes calls ceteris paribus conditions; namely, auxiliary factors that contain “detailed information about the distribution of physical parameters characterizing the experimental environment” (ibid.). In Suppes’s account, ceteris paribus conditions in an experiment might include auxiliary factors such as control of loud noises, bad odors, wrong times of day or season and so forth, that involve no formal statistics.

Mayo provided an elaborated account of the HoM. In her account, both the order and the types of models constituting the claimed hierarchy are essentially the same as the ones in Suppes’s account (Mayo 1996, Chap. 5). It is important to note that unlike Suppes who aimed at uncovering set-theoretical connections that, he believed, exist between the different levels of his proposed HoM, Mayo does not adopt a set-theoretic approach to modeling. Rather, her interest in the HoM is to “offer a framework for canonical models of error, methodological rules, and theories of statistical testing” (ibid., p. 131). According to Mayo’s account, at the top end of the HoM lie “primary theoretical models” that serve to “[b]reak down inquiry into questions that can be addressed by canonical models for testing hypotheses and estimating values of parameters in equations and theories” as well as to “[t]est hypotheses by applying procedures of testing and estimation to models of data” (ibid. p. 140).Footnote 5 At the next level down the HoM lie models of experiment that are aimed at testing scientific hypotheses. To this end, a model of experiment serves two complementary functions. The “first function [...] involves specifying the key features of the experiment[, such as sample size, experimental variables and tests statistics,] and stating the primary question (or questions) with respect to it” (ibid., p. 134). According to Mayo, experimental models “serve a second function: to specify analytical techniques for linking experimental data to the questions of the experimental model” (ibid.). More specifically, experimental models serve to “[s]pecify analytical methods to answer questions framed in terms of the experiment: choice of testing or estimating procedure, specification of a measure of fit and of test characteristics (error probabilities), e.g., significance level” (ibid., p. 140). Therefore, like Suppes, Mayo suggests that experimental models play the role of linkage models between theoretical models and data models in two ways. While the “first function [of experimental models] addresses the links between the primary hypotheses and experimental hypotheses (or questions), the second function concerns links between the experimental hypotheses and experimental data or data models” (ibid., p. 134).

In Mayo’s account, at the bottom end of the HoM lie models of data that serve to “[p]ut raw data into a canonical form to apply analytical methods and run hypothesis tests” as well as to “[t]est whether assumptions of the experimental model hold for the actual data (remodel data, run statistical tests for independence and for experimental control), test for robustness” (ibid., p. 140). Unlike Suppes who separated the level of ceteris paribus conditions from the level of experimental design, Mayo combines these two levels into a single one that serves planning and executing data generation procedures,Footnote 6 such as “introduc[ing] statistical considerations via simulations and manipulations on paper or on computer[; applying] systematic procedures for producing data satisfying the assumptions of the experimental data model[; and insuring] the adequate control of extraneous factors or [estimating] their influence to subtract them out in the analysis” (ibid., p. 140).

Summarizing, the HoM account characterizes scientific experimentation as a model-based activity, in the sense that the relationship between theory and experimental data is established through the proposed HoM in such a way so as to test a scientific hypothesis. Yet, the HoM account does not involve a modeling concept for the acquisition of experimental data. As a result, it abstracts away all the operational details of the process of data acquisition and thus treats it as a black-box that produces certain outputs in the form of data sets given appropriate inputs about objects under investigation as well as theoretical considerations about these objects. This in turn indicates that the HoM account is merely an account of how scientific hypotheses are tested against experimental data. However, since the details of the process of data acquisition are crucial to understand how theory is involved and what kinds of roles it plays in experimental procedures before experimental data are obtained, an adequate account of the theory-experiment relationship should also account for the involvement of theory in the process of data acquisition.

In the next section, I will discuss the necessity of modeling the process of data acquisition in present-day HEP experiments as well as the role of theoretical models in this process. In light of these considerations, I will suggest a model-based account of the involvement of theory in the process of data acquisition in the context of present-day HEP experiments.

3 The necessity of modeling data acquisition process in present-day HEP experiments

The immense advances that have taken place over the last fifty years or so in collider technology have resulted in vast increases in collision energies and eventFootnote 7 rates in HEP experiments. At the particle colliders built in the fifties and sixties (see Pickering 1984, Chap. 2), it was only possible to reach collision energies of few dozen GeV and event rates of few dozen Hz, whereas at present-day particle colliders, such as the Tevatron Collider at Fermilab and the LHC at CERN, collision energies of the order of the TeV energy scale and event rates of the order of MHz could now be reached. For example, the LHC is currently operating at a (center-of-mass) collision energy of 13 TeV with an event rate of around 40 MHz. The acquisition of what are often called interesting events, namely, the collision events relevant to the objectives of an experiment, becomes increasingly difficult as increasingly higher event rates are reached in HEP experiments. For interesting events are typically characterized by very small production rates and thus swamped by the background of the events containing abundant and well-known physics processes. This is illustrated by Fig. 1 for the case of the ATLAS and CMS experiments at the LHC, where there is a large background due to the well-known processes of the Standard Model (SM)Footnote 8 of elementary particle physics. Therefore, in present-day HEP experiments, the process of data acquisition is essentially a selection process by which the interesting events are selected from the rest of the collision events, so that the objectives of the experiment could be achieved.

Cross-section and rates (for a luminosity of \(10^{34}\,{\hbox {cm}}^{-2}\,{\hbox {s}}^{-1})\) at the LHC for various processes in proton–proton collisions, as a function of the centre-of-mass energy (Source Ellis 2002, p. 4)

Moreover, technical limitations in terms of data-storage capacity and data-process time make it necessary to apply data selection criteria to collisions events themselves in real-time, i.e., during the course of particle collisions at the collider. This indicates that in present-day HEP experiments, selectivity is built, in the first place, into the data acquisition procedures. At the stage of data analysis, various other selection criteria are also applied to the acquired sets of interesting events in order to analyze the latter in relation to the objectives of the experiment.Footnote 9 It is important to note that in present-day HEP experiments, due to the aforementioned technological limitations, only a minute fraction of the interesting events (at a ratio of approximately \(5\times 10^{-6})\) could be selected for further evaluation. Therefore, the issue of how data selection criteria are to be applied to collision events in real-time is of utmost importance for the fulfillment of the objectives of the experiment, and this is achieved through what I shall call a model of data acquisition, whose primary function is to specify and organize the procedures through which data selection criteria are to be applied to collision events so as to select interesting events. In this regard, a model of data acquisition is an essential component of the experimental process in present-day HEP experiments, and thus needs to be designed and constructed prior to the stage of data acquisition.Footnote 10

In the remainder of this paper, I will illustrate the concept of a model of data acquisition in the case of the ATLAS experiment. As the above discussion indicates, since data selection criteria are essential to the process of data acquisition, in what follows, I shall first discuss how data selection criteria are determined in the ATLAS experiment.

4 Determining data selection criteria in the ATLAS experiment

The ATLAS experiment (ATLAS Collaboration 2008) is designed as a multipurpose experiment mainly to test the prediction of the Higgs boson by the SM and the (experimentally testable) predictions of what are called the models beyond the SM (the BSM models). The latter are a group of HEP models that have been offered as possible extensions of the SM, such as extra-dimensional and supersymmetric models (see, e.g., Ellis 2002; Borrelli and Stöltzner 2013). The ATLAS experiment is also aimed at searching for novel physics processes that are not predicted by the present HEP models.

The signatures, i.e., stable decay products, predicted by the SM for the Higgs boson are high transverse-momentum (\(p_T )\)Footnote 11 photons and leptons,Footnote 12 and the signatures predicted by the BSM models for the new particles, including new heavy gauge bosons \({W}'\) and \({Z}'\), super-symmetric particles and gravitons, are high \(p_T \) photons and leptons, high \(p_T \) jets as well as high missing and total transverse energy \(\left( {E_T } \right) \). Here, the term high refers to the \(p_T \) and \(E_T \) values that are approximately of the order of 10 GeV for particles, and 100 GeV for jets. At the LHC, the foregoing high \(p_T \) and \(E_T \) types of signatures might be produced as a result of the decay processes involving the Higgs boson and the aforementioned new particles predicted by the BSM models. This indicates that the collision events containing the aforementioned high \(p_T \) and \(E_T \) types of signatures are interesting for the process of data selection. Therefore, the data-selection strategy adopted in the ATLAS experiment requires the full set of selection criteria, called the trigger menu, to be mainly composed of the aforementioned high \(p_T \) and \(E_T \) types of signatures predicted by the aforementioned HEP models, which the ATLAS experiment is aimed to test (ATLAS Collaboration 2003, Sect. 4).Footnote 13 This is necessary for the ATLAS experiment to achieve its aforementioned testing objectives. In what follows, I shall illustrate how the foregoing strategy has been implemented in the ATLAS experiment to determine the data selection criteria relevant to the SM’s prediction of the Higgs boson as well as the predictions of the minimal super-symmetric extension of the SM (MSSM), which is the most studied BSM model in the HEP literature (Nilles 1984).Footnote 14

Table 1 shows some of the main selection signatures used in the trigger menu of the ATLAS experiment. Each selection signature given in the left column of Table 1 is represented by the label ‘NoXXi.’ Here, ‘N’ denotes the minimum number of particles, jets, and transverse energy, required for a particular selection, and ‘o’ denotes the type of signature; e.g., ‘e’ for electron; ‘\(\gamma \)’ for photon; ‘\(\mu \)’ for muon; ‘\(\tau \)’ for tau; ‘xE’ for missing \(E_T\); ‘E’ for total \(E_T \); and ‘jE’ for total \(E_T \) associated with jet(s). The label ‘XX’ above denotes the threshold of \(E_T\) (in units of GeV), i.e., the lowest\(E_T \) at or above which a given selection criterion operates, and ‘i’ denotes whether the given signature is isolated or not. The right column of Table 1 shows the processes to which the selection signatures in the left column of the same table are relevant (for details, see ATLAS Collaboration 2003, Sect. 4.4).

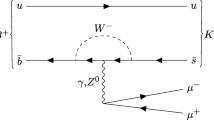

According to the SM, the decay processes of the Higgs boson(H) are as follows: \(H\rightarrow WW^{*}\); \(H\rightarrow ZZ^{*}\); and \(H\rightarrow \gamma \gamma \).Footnote 15 In these processes, the Higgs boson decays respectively into two W bosons, two Z bosons, and two photons. As indicated in the first line of the right column in Table 1, the W and Z bosons produced in the foregoing decays could subsequently decay into leptons (including electrons and electron neutrinos (\(\nu ))\) as well as top quarks.Footnote 16 Note that in the SM, the top quark could decay into a bottom quark, and a W boson that could subsequently decay into an electron and an electron neutrino. Therefore, the events that contain at least one electron with high \(E_T \) have the potential to contain the first two decay processes of the Higgs boson above, while the events that contain at least two photons with \(E_T \) have the potential to contain the third decay process of the Higgs boson above. This in turn means that selection signatures consisting of at least one electron with high \(E_T \) and those consisting of at least two photons with high \(E_T \) are appropriate for the testing of the SM’s prediction of the Higgs boson. In Table 1, the selection signature ‘e25i’, which requires at least one isolated electron with an \(E_T \) threshold of 25 GeV, and the selection signature ‘\(2\gamma 20i\)’, which requires at least two isolated photons each of which has an \(E_T \) threshold of 20 GeV, exemplify selection signatures appropriate for the testing of the SM’s prediction of the Higgs boson. Since the signatures predicted by some BSM models for the new heavy gauge bosons \({W}'\) and \({Z}'\) and those of the top quark also include leptons, selection signatures consisting of at least one electron with high \(E_T \) are also appropriate for the selection of the events relevant to the testing of these predictions (see, e.g., ATLAS Collaboration 2015) as well as to the study of the top quark related processes in the SM.

The selection signatures appropriate to select the events relevant to the testing of the MSSM are determined by taking into account the signatures predicted by this model for supersymmetric particles, including squarks, gluinos, charginos and neutralinos (Collaboration 2012c; ATLAS Collaboration 2016).Footnote 17 Since the signatures predicted by the MSSM for squarks or gluinos are jets and missing \(E_T \), selection signatures consisting of various combinations of these signatures are appropriate for the testing of the MSSM. This is illustrated, as shown in Table 1, by the following selection signatures: ‘j400’, ‘2j350’, ‘3j165’ and ‘4j110’, which consist of different numbers of high \(E_T \) jets. As indicated in the same table, the foregoing selection signatures are also appropriate for the study of the hadronic processes in QCD. According to the above consideration, selection signatures consisting of both jets and missing \(E_T \) are also appropriate for the testing of the MSSM. As given in Table 1, an example of such a selection signature is ‘\(j70+xE70\)’ that denotes the requirement of at least one jet with an \(E_T \) threshold of 70 GeV and a missing \(E_T \) at or above 70 GeV. Since the signatures predicted by the MSSM for charginos or neutralinos are leptons and missing \(E_T \), selection signatures consisting of various combinations of these signatures are also appropriate for the testing of the MSSM. This is exemplified in Table 1 by the selection signature ‘\(\mu 10+e15i\)’ that denotes the requirement of at least one muon with an \(E_T \) threshold of 10 GeV and one isolated electron with an \(E_T \) threshold of 15 GeV. The foregoing selection signature is also appropriate for the testing of the SM’s prediction of the Higgs boson, because the signatures predicted by the SM for the Higgs boson also include leptons.

The trigger menu of the ATLAS experiment is more diverse than the sample set of selection signatures given in Table 1. However, for my purposes in this paper, the above discussion is sufficient to illustrate that in the ATLAS experiment the main set of data selection criteria is established by considering the experimentally testable predictions of the HEP models that the ATLAS experiment is aimed to test.Footnote 18 In particular, what types of selection signatures, namely, whether they are based on particles or jets or missing or total energy, are to be used is determined by considering the conclusions of the foregoing HEP models about the decay processes of their predicted particles, e.g., the Higgs boson predicted by the SM, and the aforementioned supersymmetric particles predicted by the MSSM.

5 Modeling data acquisition in the ATLAS experiment

The acquisition of interesting events in the ATLAS experiment (ATLAS Collaboration 2003) is modeled as a three-level selection process through which a set of predetermined data selection criteria—namely, a trigger menu—are applied to the collision events in real-time, so as to select the interesting events, namely, the collision events relevant to the aforementioned objectives of the ATLAS experiment.Footnote 19 At each level of the selection process, the selection criteria are implemented by means of a trigger system (Linderstruth and Kisel 2004). The first level of the selection process is executed by the level-1 trigger system that provides a trigger decision within 2.5 microseconds, thereby reducing the LHC event rate of approximately 40 MHz to the range of 75–100 kHz. Since the level-1 trigger decision time is extremely short, the level-1 trigger system could identify only the regions in the ATLAS detector (in terms of the angular coordinates of the ATLAS detector which has the cylindrical geometry) that contain signals (for particles, jets, and missing and total energy) satisfying the energy threshold conditions specified by the chosen selection signatures. The foregoing regions are called regions of interest (RoIs), in the sense that they have the potential to contain the interesting events. The RoIs and the energy information associated with the signals detected in the RoIs are together called the RoI data. Note that ATLAS is a detector system that consists of several sub-detectors, including the calorimeter and tracking detectors. The RoI data are mainly determined by using the data coming from the calorimeter detector, which measures the energies of the impinging particles and jets as well as determines the regions in the ATLAS detector (in terms of the angular coordinates of the ATLAS detector) where they are detected. By using the calorimeter data, the level-1 trigger system determines the RoI data according to the data selection criteria.

It is to be noted that the tracking detectors are also used in the ATLAS experiment in order to determine the trajectories of particles and jets. However, since the level-1 trigger decision is too short to read out the relevant data from the tracking detectors, the data from these detectors are not useful for the level-1 selection purposes. As a result, it is technologically impossible to determine the trajectories, and thus the momenta, of the particles and jets quickly enough for the level-1 selection purposes. Therefore, at the end of the level-1 selection, the information regarding the location, momentum, and energy of particles and jets, or missing energy, contained in a selected event is fragmented across the different sub-detectors of the ATLAS detector, and the pieces of this fragmented information, called event fragments, are not assembled yet so as to fully describe a selected event.

The second level of the data selection process is aimed at assembling the event fragments identified at the level-1 selection in order to obtain the full descriptions of the selected events. The level-2 selection and the level-3 selection processes are executed by the level-2 and level-3 trigger systems, which are jointly called the high level trigger and data acquisition (HLT/DAQ) system. While the level-1 trigger system is hardware-based, the HLT/DAQ system is software-based, meaning that event selections are performed by using specialized software algorithms.Footnote 20 The level-2 selection consists of two sub-stages. In the first stage, selection is performed piecemeal, meaning that event fragments are accepted for selection in small amounts. In this way, the level-2 trigger system could have sufficient time for the selection process. If event fragments were accepted at once, this would considerably diminish the level-2 decision time and thus render the selection process ineffective. Through this mechanism, called the seeding mechanism, the event selection results of the level-1 trigger are transmitted to the level-2 trigger system for more refined trigger decisions. The level-2 selection is performed by using various specialized software algorithms that reduce the event accept rate from the range of 75–100 kHz down to approximately 2 kHz. In the second stage of the level-2 selection, which is called event building, the event fragments that satisfy the conditions specified by the selection criteria are assembled. Therefore, at the end of the second level of the data selection process, the information necessary to fully describe a selected event is available, meaning that the energy and momenta of the particles and jets, as well as missing transverse energy, contained in a selected event are known. At the third level of the data selection process, which is called event filtering, the events that have been built at the end of the level-2 selection undergo a filtering process through which specialized software algorithms further refine event selections according to the selection criteria with an event accept rate of approximately 200 Hz.Footnote 21 The events that have passed this event-filtering process are then sent to the data-storage unit for permanent storage for the offline data analysis, thus marking the end of the data acquisition process in the ATLAS experiment.

The discussion in this section shows that the data acquisition model of the ATLAS experiment contains the procedural information as to how the conclusions of the SM and the BSM models, namely those concerning the decay channels of the predicted particles and their associated signatures, are involved in the procedures carried out to acquire experimental data. The foregoing information is key to understand not only through what kinds of experimental procedures data are obtained in the context of present-day HEP experiments, but also, more importantly for the epistemology of experimentation, what kind of theory-experiment relationship is essential to the process of data acquisition as well as how this relationships is established during this process. In the next section, I will discuss how the acquired sets of collisions events are used in the testing of the SM Higgs boson hypothesis in the ATLAS experiment.

6 Modeling statistical testing and data in the Higgs boson search in the ATLAS experiment

The SM and the SM Higgs boson hypothesis are respectively, to use the terminology of Mayo’s version of the HoM account, the primary theoretical model and hypothesis in the Higgs boson search in the ATLAS experiment. The SM Higgs boson hypothesis was tested in the ATLAS experiment through a statistical model that allows extracting from the data the mass of the Higgs boson, which is not predicted by the SM. This model uses discriminating variables to distinguish the background processes from the Higgs boson signal in a given decay channel of the Higgs boson. The measurable quantities invariant (or rest) mass and transverse mass are taken to be discriminating variables, because novel processes are expected to arise in observed excesses of events with either invariant or transverse mass relative to the background expectation. In the adopted statistical model (ATLAS Collaboration 2012b), the term data is used to refer to the values of the discriminating variables. Accordingly, for a channel c with n selected events, the data D consists of the values of the discriminating variable(s) (x) for each event as follows: \(D_c =\left\{ {x_1 ,\ldots ,x_n } \right\} .\) The combined data \(D_{com} \) thus consists of the data sets from the individual channels of the Higgs boson: \(D_{com} =\left\{ {D_1 ,\ldots ,D_{c_{max} } } \right\} \). Therefore, given that collision events occurring at the LHC are discrete and independent from each other, the probability density function (pdf) of the data in the statistical model is taken to be of Poisson distribution and defined as follows:

where \(n_c \) is the number of selected events in the cth channel; \(x_e \) is the value of the discriminating variable x for the eth event in channels 1 to \(\hbox {c}_\mathrm{max} \); \(v_c \) is the total rate of production of events in the cth channel expected from the Higgs boson related processes and background processes (ibid.). The term \(f_c (x_{ce} |\alpha )\) above represents the pdf of the discriminating variable and depends on \(\alpha =\alpha \left( {\mu ,m_H ,\theta } \right) ,\)which represents the full list of parameters, namely, the global signal strength factor \(\mu \); the Higgs boson mass \(m_H \), and the nuisance parameters\(\theta \).Footnote 22 Note that \(\mu \) is defined such that the case in which \(\mu =1\) corresponds to the possibility that the Higgs boson hypothesis is true, meaning that the selected events are produced by the SM Higgs boson and the background processes, and the case in which \(\mu =0\) corresponds to the possibility where the background-only hypothesis (traditionally called the null hypothesis in statistical testing) is true, meaning that the selected events are produced only by background processes. The term \(\mathop \prod \nolimits _{p\in S} f_p (a_p |\alpha _p ,\sigma _p )\) above represents the product of the pdfs of the nuisance parameters that are estimated from auxiliary measurements, such as control regions and calibration measurements. The set of these nuisance parameters is denoted as S, and the set of their estimates is denoted as \(G=\left\{ {a_p } \right\} \) with \(p\in S\), \(\sigma _p \) being a standard error.

In the adopted model, the statistical test of the SM Higgs boson hypothesis is based on the likelihood function\(L\left( {\mu ,\theta } \right) \), which is defined, so as to reflect the dependence on the data, as follows: \(L\left( {\mu ,\theta ;m_H ,D_{com} ,G} \right) =\hbox {f}_{\text {tot}} (D_{com} ,G|\mu ,m_H ,\theta )\). The level of compatibility between the data and \(\mu \) is characterized by the profile likelihood ratio that is defined by the method of maximum likelihood as follows: \(\lambda \left( \mu \right) =L\left( {\mu , \hat{\hat{\theta }} \left( \mu \right) } \right) {\big /}L\left( {\hat{\mu } ,\hat{\theta } } \right) \), where \( {\hat{\mu } } \) and \(\hat{\theta } \), called the maximum likelihood estimates, are the values of \(\mu \) and \(\theta \) that maximize \(L\left( {\mu ,\theta } \right) \); and \(\hat{\hat{\theta }} \left( \mu \right) \), called the conditional maximum likelihood estimate, is the value of \(\theta \) that maximizes \(L\left( {\mu ,\theta } \right) \) with \(\mu \) fixed. The definition of \(\lambda \left( \mu \right) \) indicates that \(0\le \lambda \le 1\), where \(\lambda \) equals 1 if the hypothesized value of \(\mu \) equals \( \hat{\mu } \), and \(\lambda \) tends to 0 if there is an increasing incompatibility between \(\hat{\mu }\) and the hypothesized value of \(\mu \). Therefore, given that the natural logarithm is a monotonically increasing function of its argument, the test statistic is conveniently defined as \(t_\mu =-2\ln \lambda \left( {\mu ,m_H } \right) .\hbox {}\) Here, \(\lambda \left( {\mu ,m_H } \right) \), rather than \(\lambda \left( \mu \right) \), is used in order to test which values of \(\mu \) and mass of a signal hypothesis are simultaneously consistent with the data. In this way, it is possible to carry out tests of the SM Higgs boson hypothesis for a range of hypothesized Higgs boson masses (ATLAS Collaboration 2012b). The statistical model of testing quantifies the level of disagreement between the data and the SM Higgs boson hypothesis through the p value, i.e., the probability of the background-only hypothesis being true given the data, which is defined as follows: \(p_\mu =\mathop \smallint \nolimits _{t_{\mu ,obs} }^\infty f\left( {t_\mu \hbox {|}\mu } \right) dt_\mu ,\) “where \(t_{\mu ,obs} \) is the value of the statistic \(t_\mu \) observed from the data and \(f\left( {t_\mu \hbox {|}\mu } \right) \) denotes the pdf of \(t_\mu \) under the assumption of the signal strength \(\mu \)” (Cowan et al 2011, p. 3).

In the ATLAS experiment, the statistical testing of the SM Higgs boson hypothesis was carried out by using the sets of proton-proton collision events produced at a center-of-mass energy of \(\sqrt{s}=7\) TeV in 2011 and those produced at \(\sqrt{s}=8\) TeV in 2012 for the following Higgs boson decay channels: \(H\rightarrow ZZ^{*}\rightarrow 4l\), where l stands for electron or muon; \(H\rightarrow \gamma \gamma \); and \(H\rightarrow WW^{*}\rightarrow e\nu \mu \nu \) (ATLAS Collaboration 2012a). The discriminating variable in the aforementioned statistical model is taken to be the four-lepton invariant mass \(m_{4l} \) for the channel \(H\rightarrow ZZ^{*}\rightarrow 4l\), and the diphoton invariant mass (\(m_{\gamma \gamma } )\) for the channel \(H\rightarrow \gamma \gamma \). The distributions of the invariant mass in the four-lepton and diphoton events for the foregoing two channels are shown in Figs. 2 and 3, respectively. Instead of invariant mass, transverse mass (\(m_T )\) is taken to be the discriminating variable for the channel \(H\rightarrow WW^{*}\rightarrow e\nu \mu \nu \), because one cannot reconstruct the mass of the W bosons from the invariant masses of their decay products due to the fact that the neutrino is invisible in the detector (see Barr et al. 2009, p. 1). The transverse mass distribution in the foregoing channel is shown in Fig. 4. It is to be noted that the mass distributions in the foregoing figures show an excess of events with respect to the background expectation. In particular, Fig. 2 indicates an excess of events near \(m_{4l} =125\) GeV in the decay channel \(H\rightarrow ZZ^{*}\rightarrow 4l\). Similarly, Fig. 3 indicates an excess of events near \(m_{\gamma \gamma } =126.5\) GeV in the channel \(H\rightarrow \gamma \gamma \). Whereas, the mass resolution of the observed peak in the mass distribution associated with the channel \(H\rightarrow WW^{*}\rightarrow e\nu \mu \nu \) is low as shown in Fig. 4.

The distribution of the four-lepton invariant mass for the selected events in the decay channel \(H\rightarrow ZZ^{*}\rightarrow 4l.\) The signal expectation for a SM Higgs with \(m_H =125\) GeV is also shown (SourceATLAS Collaboration 2012a, p. 5)

The unweighted (a) and weighted (c) distributions of the diphoton invariant mass for the selected events in the channel \(H\rightarrow \gamma \gamma \). The dashed lines represent the fitted background (Source ATLAS Collaboration 2012a, p. 8)

The transverse mass distribution for the selected events in the channel \(H\rightarrow WW^{*}\rightarrow e\nu \mu \nu \). The hashed area indicates the total uncertainty on the background prediction. The expected signal for \(m_H =125\) GeV is negligible and therefore not visible (Source ATLAS Collaboration 2012a, p. 10)

For the results combined from the aforementioned channels, the adopted statistical model yields the best-fit signal strength \(\hat{\mu }\) as shown in Fig. 5c as a function of \(m_H \) in the mass range of 110–600 GeV. In this mass range, local p values are shown in Fig. 5b, together with the confidence levels in Fig. 5a. Here, local p value means “the probability that the background can produce a fluctuation greater than or equal to the excess observed in data” (ATLAS Collaboration 2012a, p. 11). Based on the aforementioned results, the ATLAS Collaboration concluded that this “observation ... has a significance of 5.9 standard deviations, corresponding to a background fluctuation probability of \(1.7\times 10^{-9}\)... [and that it] is compatible with the production and decay of the [SM] Higgs boson” (ibid., p. 1).

Combined search results: (a) The observed (solid) 95% confidence level (CL) limits on the signal strength as a function of \(m_H\) and the expectation (dashed) under the background-only hypothesis. The dark and light shaded bands show the \(\pm 1\sigma \) and \(\pm 2\sigma \) uncertainties on the background-only expectation. (b) The observed (solid) local \(p_0\) as a function of \(m_H \) and the expectation (dashed) for a SM Higgs boson signal hypothesis (\(\mu =1)\) at the given mass. (c) The best-fit signal strength \(\hat{\mu }\) as a function of \(m_H\). The band indicates the approximate 68% CL interval around the fitted value (Source ATLAS Collaboration 2012a, p. 13)

An important epistemological consequence of the testing of the SM Higgs boson hypothesis in the ATLAS experiment concerns the modeling of experimental data. According to the adopted model of statistical testing, the distributions of the aforementioned discriminating variables in the relevant sets of selected events need to be determined for the statistical testing of the Higgs boson hypothesis. This makes it necessary to analyze the relevant sets of selected events so as to determine the mass distributions in these sets over a range of energy values. The results of this analysis are shown in Figs. 2, 3 and 4. At this point, let us remember that according to the HoM account, data sets are, by themselves, not usable for hypothesis testing. In order for data sets to be used for this purpose, they must be put into data models that satisfy the requirements of statistical testing. The distinction between the sets of selected events relevant to the testing of the Higgs boson hypothesis and the corresponding mass distributions fits the distinction drawn by the HoM account between data sets and models of data, in that the Higgs boson hypothesis is tested not against the sets of selected events, but against the mass distributions in these sets. It is to be noted that in the publications of the ATLAS Collaboration, the term data is used to refer to the sets of selected collision events (ATLAS Collaboration 2012a) as well as the mass distributions in these sets (ATLAS Collaboration 2012b). The former sense illustrates the usual sense of the term data that refers to as yet unanalyzed measurement results, whereas the latter sense is restricted to the statistical testing of the SM Higgs boson hypothesis and peculiarly used to refer to analyzed measurement results. In the rest of this paper, I shall stick to the aforementioned former sense of the term data to refer to selected collision events. I shall also use the terminology of the HoM account to refer to the foregoing mass distributions as the data models used in the testing of the SM Higgs boson, in the sense that they are the data forms that bring out the features of the LHC data that are relevant to the statistical testing of the SM Higgs boson hypothesis and that also satisfy the requirements imposed by the adopted statistical model of testing.

The above considerations suggest that the testing of the SM Higgs boson hypothesis in the ATLAS experiment was driven by a statistical model of testing that prescribes how to analyze the LHC data, namely the sets of selected collision events, and put them into the data models, namely the invariant and transverse mass distributions, as well as how to extract from the latter the information regarding the extent of compatibility between the SM Higgs boson hypothesis and the foregoing data models. This in turn shows that the statistical testing of the SM Higgs boson hypothesis in the ATLAS experiment requires the relationship between the SM, which is the primary theoretical model of inquiry in the Higgs boson search in the ATLAS experiment, and the LHC data to be established through the intermediary of a statistical model of testing in the way suggested by the HoM account.

I will conclude this section with some remarks on a paper by Todd Harris, where he argues that “[f]rom the outset the data must be considered to be the product of a certain amount of purposeful manipulation” (Harris 2003, p. 1512). Harris reaches this conclusion in the context of a case study concerning electron micrographs:

[A]n electron micrograph is the product of an astonishingly complex instrument that requires a specimen to undergo a lengthy preparation procedure. Scientists will change many aspects of this specimen preparation procedure as well as settings on the microscope in order to achieve a desired effect in the resulting micrograph. Because of this purposeful manipulation of both the microscope and the specimen, the electron micrograph cannot be said to be unprocessed data. (Ibid., p. 1511)

This part of Harris’s argument is supported by the present case study, in that the data used in the ATLAS experiment are not raw but rather consist of processed collision events (associated with the decay channels of the Higgs boson) that have been selected from the rest of the collision events through the selection procedures as prescribed by the data acquisition model of the experiment. In the same paper, Harris further remarks:

When one moves from [...] relatively simple instruments to an instrument such as an electron microscope, it becomes obvious that the instrument is not producing raw data (in the sense of being unprocessed), but data that has been interpreted and manipulated, in short, a data model. (ibid., p. 1512)

Contrary to Harris’s claim in the above passage, what I have called data models in the case of the ATLAS experiment, i.e., the invariant and transverse mass distributions shown in Figs. 2, 3 and 4, are not produced directly by the experimental instruments, namely, the LHC and the ATLAS detector and trigger systems, but rather by analyzing the collision events produced by means of these instruments. This illustrates that in present-day HEP experiments, data, i.e., selected collision events, are produced by means of highly complex experimental instruments, such as colliders, detectors and trigger systems; unlike data models that are produced through the analysis of selected events.Footnote 23

7 Conclusions

The present case study shows that both the data acquisition and the statistical testing of the SM Higgs boson hypothesis in the ATLAS experiment are model-based processes. The HoM account correctly captures the way the foregoing statistical testing process is modeled, whereas, due to the lack of the concept of a model of data acquisition, it fails to provide a model-based characterization of the process of data acquisition. For it is solely focused to account for how the relationship between theory and data is established and thereby scientific hypotheses are tested in experimentation. The present case study illustrates that in present-day HEP experiments, while the relationship between data and theory (or more generally theoretical considerations), which is essential to data analysis and interpretation of experimental results, is established through the proposed HoM, the relationship between theory and procedures of data acquisition, which is necessary for the data to be acquired in accordance with the objectives of the experiment, is established through a model of data acquisition.

The concept of a model of data acquisition has the potential to be applied broadly to cases outside the context of HEP experiments where the process of data acquisition requires the modeling of procedures used to extract data from an experimental set-up or measurement device. The case of the ATLAS experiment illustrates that a model of data acquisition is a different type of model than the types of models suggested by the HoM account, namely, models of primary scientific hypotheses, models of experiment and models of data. Remember that models of primary scientific hypotheses are theoretical models that account for the phenomena of interest and yield experimentally testable hypotheses about those phenomena. Models of experiment are statistical models that specify procedures necessary to test scientific hypotheses. Data models are forms of analyzed data that represent measured quantities relevant to hypothesis testing. Unlike the foregoing types of models, as the case of the ATLAS experiment illustrates, a data acquisition model in the context of present-day HEP experiments is basically a system of data selection, with hardware and software components, that is designed and implemented in order to select the data according to a set of predetermined criteria.Footnote 24 The present case study therefore shows that the sets of procedures involved in the statistical testing of the SM Higgs boson hypothesis and those in the acquisition of the data in the ATLAS experiment are distinct from each other and thus modeled through different types of models, namely, a statistical model of testing and a model of data acquisition, respectively. This in turn means that in the ATLAS experiment, there exists no overarching model that encompasses both statistical testing and data acquisition procedures of the experiment, which we could refer to as the model of the experiment. However, in the HoM account, statistical hypothesis testing is incorrectly taken to be the only model-based process in an experiment, and as a result, a statistical model of testing is referred to as the model of the experiment. The above considerations therefore suggest that the term model of experiment is a misnomer in the context of present-day HEP experiments.

The previous discussion also indicates that the ATLAS data acquisition model is theory-laden, in the sense that the procedures at each level of the data selection process are implemented in accordance with the set of selection criteria established by considering the conclusions of the SM and the BSM models concerning what types of events (in terms of types of signatures contained, namely photons, leptons, jets, and total and missing energy, as well as associated energy thresholds) are relevant to the intended objectives of the ATLAS experiment. The theory-ladenness in the foregoing sense should be seen as providing the theoretical guidance that is necessary to determine the data selection criteria in such a way that they are appropriate for the various objectives of the ATLAS experiment. It is to be noted that since the production rates of the events associated with the well-known physics processes of the SM are much higher than those of the events considered interesting, in the absence of the foregoing theoretical guidance, the process of data selection would be dominated by the abundant and well-known events of the SM and thus biased against the interesting ones. Therefore, the theory-ladenness of the data acquisition model in the foregoing sense is essential to the acquisition of data in the ATLAS experiment. It is also important to note that in the ATLAS experiment since the selection criteria are applied only to collision events themselves, the functioning of the experimental apparatus (i.e., the LHC and the ATLAS detector) used to produce the experimental phenomena (i.e., inelastic proton-proton collision events) is by no means affected by the use of data selection criteria. This means that data selection criteria have no causal effect whatsoever on the production of experimental phenomena, thus ruling out the possibility of a vicious circularity in testing due to the theory-ladenness of the data acquisition model of the ATLAS experiment.

The theory-ladenness of the ATLAS data acquisition model indicates that the SM and the BSM models are involved in the data acquisition process through the chosen data selection criteria. It is to be noted that according to the terminology of Mayo’s version of the HoM account, the SM and the BSM models are the primary theoretical models in the case of the ATLAS experiment, in that they provide the theoretical predictions being tested in this experiment. Mayo characterizes the relationship between primary theoretical models and procedures of data acquisition as follows:

A wide gap exists between the nitty-gritty details of the data gathering experience and the primary theoretical model of the [experimental] inquiry... [A]s one descends the [HoM], one gets closer to the data and the actual details of the experimental experience. (Mayo 1996, p. 133)

Contrary to Mayo’s assertion, the above considerations show that there exists no such a gap between the primary theoretical models and the actual details of the process of data acquisition in the case of the ATLAS experiment. Instead, the testable predictions of the SM and the BSM models directly bear upon the nitty-gritty details of the process of data selection through the chosen data-selection criteria. This in turn means that in the ATLAS experiment the primary theoretical models relate to the model of data acquisition without the intermediary of the proposed HoM.

The above discussion indicates that there is even a more fundamental problem with the HoM account than the lack of the concept of a data acquisition model. The following two considerations are relevant in this regard. First, theoretical models and data models lie respectively at the top and the bottom ends of the HoM proposed by Suppes and Mayo. Second, a model of data acquisition precede models of data in the course of experimentation, as the former is used to obtain experimental data from which data models are formed through the use of an experimental model. These considerations suggest that adding models of data to the HoM in such a way that they form a separate level below the level of data models seems to be a natural way to amend it into a larger hierarchy whose top and bottom ends consist of theoretical models and models of data acquisition, respectively. However, no hierarchy results from amending the HoM in the foregoing way, because, as the case of the ATLAS experiment illustrates, in the context of present-day HEP experiments, the relationship between theoretical models and data acquisition models is a direct one that does not involve any intermediate model. Therefore, in the foregoing context, the HoM proposed by Suppes and Mayo holds true only for the process of statistical testing of scientific hypotheses but does not consistently extend to the process of data acquisition so as to include models of data acquisition.

Notes

The ATLAS experiment derives its name from the ATLAS (“A Toroidal LHC ApparatuS”) detector.

The CMS (“Compact Muon Solenoid”) detector is the other LHC experiment that also detected the Higgs boson in 2012 (CMS Collaboration 2012).

For a survey, see Franklin and Perovic (2015).

Here, a “possible realization” is characterized as an entity of the appropriate set-theoretical structure, and “valid sentences” are taken to be those sentences that are logical consequences of the axioms of the theory (Suppes 1962, p. 252).

The second function stated here is rather misleading, because, as shall be discussed in what follows, in Mayo’s account hypothesis testing is undertaken by models of experiment, instead of primary theoretical models.

For Mayo’s considerations on this issue, see ibid., p. 139.

In the terminology of modern experimental HEP, “the term ‘event’ is used to refer to the record of all the products from a given bunch crossing,” (Ellis 2010, p. 6) which occurs when two beams of particles collide with each other inside the collider.

The SM consists of two gauge theories, namely, the electroweak theory of the weak and electromagnetic interactions, and the theory of quantum chromo-dynamics (QCD) that describes strong interactions.

Franklin (1998) illustrated selectivity in data analysis in a number of case studies concerning earlier HEP experiments, including the branching ratio experiment (Bowen et al 1967), 17 keV neutrino experiments (Morrison 1992) and the experiments that searched for low-mass electron-positron states (Ganz 1996). Franklin points out that “[s]election criteria, usually referred to as “cuts,” are applied to either the data themselves or to the analysis procedures and are designed to maximize the desired signal and to eliminate or minimize background that might mask or mimic the desired effect” (ibid., p. 399). However, this does not mean that selectivity built into the data acquisition procedures was absent in previous HEP experiments. To illustrate the last point, Franklin notes that in the branching ratio experiment “the experimenters required that the decay particle give a signal in a Cerenkov counter set to detect positrons. This was designed to exclude events resulting from decay modes such as \(K^{+}_{\mu 2}, K^{+}_{\pi 2}\), and \(K^{+}_{\mu 3}\) that did not include a positron” (ibid.).

The design information is typically provided in the technical design reports, which are reviewed and approved by the managements of HEP experiments; see, e.g., ATLAS Collaboration (2003), CMS Collaboration (2002) for the technical design reports of the ATLAS and CMS experiments for data acquisition.

Transverse-momentum is the component of the momentum of a particle that is transverse to the proton-proton collision axis, and transverse-energy is obtained from energy measurements in the calorimeter detector.

A lepton is a spin 1/2 particle that interacts through electromagnetic and weak interactions, but not through strong interaction. In the SM, leptons are the following elementary particles: electron, muon, tau, and their respective neutrinos.

In addition, the trigger menu contains high \(p_{T}\) and \(E_{T}\) types of triggers appropriate for the search for novel physics processes that are not predicted by the present HEP models. The trigger menu also contains prescaled triggers that are determined by prescaling high \(p_{T}\) and \(E_{T}\) triggers with lower thresholds, i.e., below (see ATLAS Collaboration 2003, Sect. 4.4.2). Here, prescaling means that the amount of events that a trigger could accept is suppressed by what is called a prescale factor in order for the selection process not to be swamped by the events containing vastly abundant low \(p_{T}\) and \(E_{T}\) signatures. Prescaled triggers are used especially to select events that have the potential to serve the detection of novel physics processes at low energy scale (\(<10\) GeV), such as possible deviations from the SM. It is also to be noted that the trigger menu of the ATLAS experiment is constantly updated.

To this end, I shall follow the relevant discussion in Karaca (2017a), which provides a more detailed account on how data selection signatures are determined in the ATLAS experiment.

Here, “*” denotes an off-shell boson, i.e., not satisfying classical equations of motions

For a thorough discussion of the decay processes and associated selection signatures relevant to the Higgs boson prediction by the SM, see, e.g., ATLAS Collaboration (2012b).

See also Pralavorio (2013) for a short survey of supersymmetry searches in the ATLAS experiment.

At this point, it is also worth noting the essential role of computer simulations in determining data selection criteria. For an extensive examination of the use of computer simulations in the context of the LHC experiments, see Morrison (2015).

A detailed description of the ATLAS data acquisition system can also be found in Karaca (2017b).

The abovementioned event accept rates are for early data taking at the LHC, and they have later changed significantly.

Nuisance parameters are the parameters of the model that must be accounted for the analysis but that are not of immediate interest. “There are three types of nuisance parameters: those corresponding to systematic uncertainties, the fitted parameters of the background models, and any unconstrained signal model parameters not relevant to the particular hypothesis under test” (ATLAS Collaboration 2015, p. 2).

According to Daniela Bailer-Jones and Coryn Bailer-Jones, the analysis of large amounts of data requires what they call “data analysis models” (Bailer-Jones and Bailer-Jones 2002). In their account, “various computational data analysis techniques [(such as artificial neural networks, simulated annealing and genetic algorithms)] ... can be assembled into [data analysis] models of how to solve a certain kind of problem associated with a set of data (ibid., p. 148). For instance, the aforementioned data analysis techniques are used in the analysis of data in present-day HEP experiments. It is to be noted that Bailer-Jones and Bailer-Jones do not discuss data analysis models in relation to the HoM account. But, their account is relevant to the HoM account, in that they suggest that in cases of experiments where large amounts of data are analyzed the transition from data to data models is mediated through models of data analysis, because the latter provide various techniques designed to handle various problems encountered in the analysis of data sets before they are represented in the form of data models.

References

ATLAS Collaboration. (2003). ATLAS high-level trigger, data-acquisition and controls: Technical design report. CERN-LHCC-2003-022.

ATLAS Collaboration. (2008). The ATLAS experiment at the CERN Large Hadron Collider. Journal of Instrumentation, 3, S08003.

ATLAS Collaboration. (2012a). Observation of a new particle in the search for the Standard Model Higgs boson with the ATLAS detector at the LHC. Physics Letters B, 716, 1–29.

ATLAS Collaboration. (2012b). Combined search for the Standard Model Higgs boson in pp collisions at \(\sqrt{s}=7\) TeV with the ATLAS detector. Physical Review D, 86, 032003.

ATLAS Collaboration. (2012c). Further search for supersymmetry at \(\sqrt{s}=7\) TeV in final states with jets, missing transverse momentum, and isolated leptons with the ATLAS detector. Physical Review D, 86, 092002.

ATLAS Collaboration. (2015). Search for high-mass diboson resonances with boson-tagged jets in proton-proton collisions at \(\sqrt{s}=8\) TeV with the ATLAS detector. Journal of High Energy Physics, 12, 55.

ATLAS Collaboration. (2016). Search for supersymmetry at \(\sqrt{s}=13\) TeV in final states with jets and two same-sign leptons or three leptons with the ATLAS detector. European Physical Journal C, 76, 259.

ATLAS Collaboration and CMS Collaboration. (2015). Combined measurement of the Higgs boson mass in pp collisions at \(\sqrt{s}=7\) and \(8\) with the ATLAS and CMS experiments. Physical Review Letters, 114, 191803.

Bailer-Jones, D., & Bailer-Jones, C. A. L. (2002). Modeling data: Analogies in neural networks, simulated annealing and genetic algorithms. In L. Magnani & N. Nersessian (Eds.), Model-based reasoning: Science, technology, values (pp. 147–165). New York: Kluwer Academic/Plenum Publishers.

Barr, A. J., Gripaios, B., & Lester, C. G. (2009). Measuring the Higgs boson mass in dileptonic W-boson decays at hadron colliders. Journal of High Energy Physics, 0907, 072.

Borrelli, A., & Stöltzner M. (2013). Model landscapes in the Higgs sector. In V. Karakostas, & D. Dieks (Eds.), EPSA11 Proceedings: Perspectives and foundational problems in philosophy of science (pp. 241–252). New York: Springer.

Bowen, D. R., Mann, A. K., McFarlane, W. K., et al. (1967). Measurement of the \(K_{e2}^+\) branching ratio. Physical Review, 154, 1314–1322.

CMS Collaboration. (2002). Technical design report, vol. 2: Data acquisition and high-level trigger. CERN/LHCC 02-026.

CMS Collaboration. (2012). Observation of a new boson at a mass of 125 GeV with the CMS experiment at the LHC. Physics Letters B, 716, 30–61.

Cowan, G., Cranmer, K., Gross, E., & Vitells, O. (2011). Asymptotic formulae for likelihood-based tests of new physics. European Physical Journal C, 71, 1554.

Ellis, N. (2002). The ATLAS years and the future. Talk given at a half-day symposium at the University of Birmingham, July 3rd, 2002. http://www.ep.ph.bham.ac.uk/general/outreach/dowellfest/.

Ellis, N. (2010). Trigger and data acquisition. Lecture given at the 5th CERN-Latin-American School of High-Energy Physics, Recinto Quirama, Colombia, 15–28 Mar 2009. CERN Yellow Report CERN-2010-001, pp. 417–449. http://lanl.arxiv.org/abs/1010.2942.

Ellis, J. (2012). Outstanding questions: Physics beyond the standard model. Philosophical Transactions of the Royal Society A, 370, 818–830.

Franklin, A. (1998). Selectivity and the production of experimental results. Archive for History of Exact Sciences, 53, 399–485.

Franklin, A., & Perovic, S. (2015). Experiments in physics. In E. N. Zalta (Ed.), Stanford encyclopedia of philosophy. http://plato.stanford.edu/entries/physics-experiment/#TheLadHEP.

Ganz, R., Bär, R., Balanda, A., et al. (1996). Search for e+ e– pairs with narrow sum-energy distributions in heavy-ion collisions. Physics Letters B, 389, 4–12.

Harris, T. (1999). A hierarchy of models and electron microscopy. In L. Magnani, N. J. Nersessian, & P. Thagard (Eds.), Model-based reasoning in scientific discovery (pp. 139–148). New York: Kluwer Academic/Plenum Publishers.

Harris, T. (2003). Data models and the acquisition and manipulation of data. Philosophy of Science, 70, 1508–1517.

Karaca, K. (2017a). A case study in experimental exploration: Exploratory data selection at the Large Hadron Collider. Synthese, 194, 333–354.

Karaca, K. (2017b). Representing experimental procedures through diagrams at CERN’s Large Hadron Collider: The communicatory value of diagrammatic representations in collaborative research. Perspectives on Science, 25, 177–203.

Linderstruth, V., & Kisel, I. (2004). Overview of trigger systems. Nuclear Instruments and Methods in Physics Research A, 535, 48–56.

Mayo, D. (1996). Error and growth of experimental knowledge. Chicago: University of Chicago Press.

Mayo, D. (2000). Experimental practice and an error statistical account of evidence. Philosophy of Science, 67, 193–207.

Morrison, D. (1992). Review of 17 keV neutrino experiments. In S. Hegarty, K. Potter, & E. Quercigh (Eds.), Proceedings of joint international lepton-photon symposium and europhysics conference on high energy physics (pp. 599–605). Geneva: World Scientific.

Morrison, M. (2015). Reconstructing reality: Models, mathematics, and simulations. Oxford: Oxford University Press.

Nilles, H. P. (1984). Supersymmetry, supergravity and particle physics. Physics Reports, 110, 1–162.

Pickering, A. (1984). Constructing quarks: A sociological history of particle physics. Chicago: University of Chicago Press.

Pralavorio, P. (2013). SUSY searches at ATLAS. Frontiers of Physics, 8, 248–256.

Staley, K. (2004). The evidence for the top quark: Objectivity and bias in collaborative experimentation. Cambridge: Cambridge University Press.

Suppes, P. (1962). Models of data. In E. Nagel, P. Suppes, & A. Tarski (Eds.), Logic, methodology, and philosophy of science (pp. 252–261). Stanford: Stanford University Press.

Winsberg, E. (1999). The hierarchy of models in simulation. In L. Magnani, N. J. Nersessian, & P. Thagard (Eds.), Model-based reasoning in scientific discovery (pp. 255–269). New York: Kluwer Academic/Plenum Publishers.

Acknowledgements

I am grateful to Kent Staley for his constructive comments on an earlier version of this paper. I also acknowledge helpful discussions with Allan Franklin, James Griesemer, Wendy Parker, Sabina Leonelli, Margaret Morrison, Paul Teller and Christian Zeitnitz. Earlier versions of this paper have been presented at conferences in Barcelona, Exeter, San Sebastián and Wuppertal. I thank the audiences at these conferences for their feedback. This research is funded by the German Research Foundation (DFG) under project reference: Epistemologie des LHC (PAK 428)—GZ: STE 717/3-1.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Karaca, K. Lessons from the Large Hadron Collider for model-based experimentation: the concept of a model of data acquisition and the scope of the hierarchy of models. Synthese 195, 5431–5452 (2018). https://doi.org/10.1007/s11229-017-1453-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11229-017-1453-5