Abstract

Several recent works have developed a new, probabilistic interpretation for numerical algorithms solving linear systems in which the solution is inferred in a Bayesian framework, either directly or by inferring the unknown action of the matrix inverse. These approaches have typically focused on replicating the behaviour of the conjugate gradient method as a prototypical iterative method. In this work, surprisingly general conditions for equivalence of these disparate methods are presented. We also describe connections between probabilistic linear solvers and projection methods for linear systems, providing a probabilistic interpretation of a far more general class of iterative methods. In particular, this provides such an interpretation of the generalised minimum residual method. A probabilistic view of preconditioning is also introduced. These developments unify the literature on probabilistic linear solvers and provide foundational connections to the literature on iterative solvers for linear systems.

1 Introduction

Consider the linear system

\[ A\varvec{x}^* = \varvec{b} \tag{1} \]

where \(A \in \mathbb {R}^{d \times d}\) is an invertible matrix, \(\varvec{b} \in \mathbb {R}^d\) is a given vector, and \(\varvec{x}^*\in \mathbb {R}^d\) is an unknown to be determined. Recent work (Hennig 2015; Cockayne et al. 2018) has constructed iterative solvers for this problem which output probability measures, constructed to quantify uncertainty due to terminating the algorithm before the solution has been identified completely. On the surface the approaches in these two works appear different: in the matrix-based inference (MBI) approach of Hennig (2015), a posterior is constructed on the matrix \(A^{-\!1}\), while in the solution-based inference (SBI) method of Cockayne et al. (2018) a posterior is constructed on the solution vector \(\varvec{x}^*\).

These algorithms are instances of probabilistic numerical methods (PNM) in the sense of Hennig et al. (2015) and Cockayne et al. (2017). PNM are numerical methods which output posterior distributions that quantify uncertainty due to discretisation error. An interesting property of PNM is that they often result in a posterior distribution whose mean element coincides with the solution given by a classical numerical method for the problem at hand. The relationship between PNM and classical solvers has been explored for integration (e.g. Karvonen and Sarkka 2017), ODE solvers (Schober et al. 2014, 2019; Kersting et al. 2018) and PDE solvers (Cockayne et al. 2016) in some generality. For linear solvers, attention has thus far been restricted to the conjugate gradient (CG) method. Since CG is but a single member of a larger class of iterative solvers, and applicable only if the matrix A is symmetric and positive definite, extending the probabilistic interpretation is an interesting endeavour. Probabilistic interpretations provide an alternative perspective on numerical algorithms and can also provide extensions such as the ability to exploit noisy or corrupted observations. The probabilistic view has also been used to develop new numerical methods (Xi et al. 2018), and Bayesian PNM can be incorporated rigorously into pipelines of computation (Cockayne et al. 2017).

Preconditioning—mapping Eq. (1) to a better conditioned system with the same solution—is key to the fast convergence of iterative linear solvers, particularly those based upon Krylov methods (Liesen and Strakos 2012). The design of preconditioners has been referred to as “a combination of art and science” (Saad 2003, p. 283). In this work, we also provide a new, probabilistic interpretation of preconditioning as a form of prior information.

1.1 Contribution

This text contributes three primary insights:

1. It is shown that, for particular choices of the generative model, matrix-based inference (MBI) and solution-based inference (SBI) can be equivalent (Sect. 2).

2. A general probabilistic interpretation of projection methods (Saad 2003) is described (Sect. 3.1), leading to a probabilistic interpretation of the generalised minimum residual method (GMRES; Saad and Schultz (1986), Sect. 6). The connection to CG is expanded and made more concise in Sect. 5.

3. A probabilistic interpretation of preconditioning is presented in Sect. 4.

Most of the proofs are presented inline; lengthier proofs are deferred to “Appendix B”. The impact of finite numerical precision, while an important consideration, is not addressed in this predominantly theoretical paper.

1.2 Notation

For a symmetric positive-definite matrix \(M \in \mathbb {R}^{d\times d}\) and two vectors \(\varvec{v}, \varvec{w} \in \mathbb {R}^d\), we write \(\langle \varvec{v}, \varvec{w} \rangle _M = \varvec{v}^{\top }M \varvec{w}\) for the inner product induced by \(M\), and \(\Vert \varvec{v}\Vert _M^2 = \langle \varvec{v}, \varvec{v} \rangle _M\) for the corresponding norm.

A set of vectors \(\varvec{s}_1, \dots , \varvec{s}_m\) is called \(M\)-orthogonal or M-conjugate if \(\langle \varvec{s}_i, \varvec{s}_j \rangle _M = 0\) for \(i\ne j\), and \(M\)-orthonormal if, in addition, \(\Vert \varvec{s}_i\Vert _M = 1\) for \(1\le i\le m\).

For a square matrix \(A =\begin{bmatrix} \varvec{a}_1&\ldots&\varvec{a}_d\end{bmatrix}^{\top }\in \mathbb {R}^{d\times d}\), the vectorisation operator \(\text {vec} : \mathbb {R}^{d\times d} \rightarrow \mathbb {R}^{d^2}\) stacks the rows of A into one long vector:

\[ \overrightarrow{A} = \text {vec}(A) = \begin{bmatrix} \varvec{a}_1 \\ \vdots \\ \varvec{a}_d \end{bmatrix}. \]

The Kronecker product of two matrices \(A, B \in \mathbb {R}^{d\times d}\) is \(A \otimes B\) with \([A\otimes B]_{(ij),(k\ell )} = [A]_{ik}[B]_{j\ell }\). A list of its properties is provided in “Appendix A”.
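The index convention above pairs naturally with row-stacking vectorisation. The following minimal sketch checks the standard identity \(\text {vec}(XYZ) = (X \otimes Z^{\top })\,\text {vec}(Y)\) numerically, together with the special case \(Y\varvec{b} = (I \otimes \varvec{b}^{\top })\,\text {vec}(Y)\) that is used later for linear solvers. The matrices `X`, `Y`, `Z` and the helper `vec` are illustrative names, not part of the original text, and the example assumes NumPy is available.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 3
X, Y, Z = (rng.standard_normal((d, d)) for _ in range(3))
b = rng.standard_normal(d)


def vec(M):
    """Stack the rows of M into one long vector (row-major order)."""
    return M.reshape(-1)


# np.kron follows [A kron B]_{(ij),(kl)} = A_ik * B_jl, matching the text.
lhs = vec(X @ Y @ Z)
rhs = np.kron(X, Z.T) @ vec(Y)

# Special case: a matrix-vector product written as a linear map of vec(Y).
Yb = np.kron(np.eye(d), b[None, :]) @ vec(Y)
```

Both sides agree up to floating-point rounding, which is why a Gaussian prior on \(\overrightarrow{A^{-\!1}}\) induces a Gaussian marginal on \(A^{-\!1}\varvec{b}\).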

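The Krylov space of order m generated by the matrix \(A\in \mathbb {R}^{d\times d}\) and the vector \(\varvec{b}\in \mathbb {R}^d\) is

\[ K_m(A, \varvec{b}) = \text {span}(\varvec{b}, A\varvec{b}, A^2\varvec{b}, \dots , A^{m-1}\varvec{b}). \]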

We will slightly abuse notation to describe shifted and scaled subspaces of \(\mathbb {R}^d\): let \({\mathbb {S}}\) be an m-dimensional linear subspace of \(\mathbb {R}^d\) with basis \(\{\varvec{s}_1, \dots , \varvec{s}_m\}\). Then, for a vector \(\varvec{v} \in \mathbb {R}^d\) and a matrix \(M \in \mathbb {R}^{d\times d}\), let

2 Probabilistic linear solvers

Several probabilistic frameworks describing the solution of Eq. (1) have been constructed in recent years. They primarily differ in the subject of inference: SBI approaches such as Cockayne et al. (2018), of which BayesCG is an example, place a prior distribution on the solution \(\varvec{x}^*\) of Eq. (1). Conversely, the MBI approach of Hennig (2015) and Bartels and Hennig (2016) places a prior on \(A^{-\!1}\), treating the action of the inverse operator as an unknown to be inferred. This section reviews each approach and adds some new insights. In particular, SBI can be viewed as a strict special case of MBI (Sect. 2.4).

Throughout this section, we will assume that the search directions \(S_m\) in \(S_m^\top A\varvec{x}^*= S_m^\top \varvec{b}\) are independent of \(\varvec{x}^*\). Generally speaking, this is not the case for projection methods, in which the solution space often depends strongly on \(\varvec{b}\), as described in Sects. 5 and 6. This disconnect is the source of the poor uncertainty quantification reported in Cockayne et al. (2018) and shown also to hold for the methods in this work in Sect. 6.4. This will not be examined in further detail in this work, though it remains an important area of development for probabilistic linear solvers.

2.1 Background on Gaussian conditioning

The propositions in this section rely on two classic properties of Gaussian distributions.

Lemma 1

Let \(\varvec{x} \in \mathbb {R}^d\) be Gaussian distributed with density \(p(\varvec{x})=\mathcal {N}(\varvec{x}; \varvec{x}_0, \varSigma )\) for \(\varvec{x}_0 \in \mathbb {R}^d\) and \(\varSigma \in \mathbb {R}^{d\times d}\) a positive semi-definite matrix. Let \(M \in \mathbb {R}^{n \times d}\) and \(\varvec{z} \in \mathbb {R}^n\). Then, \(\varvec{v} = M \varvec{x} + \varvec{z}\) is also Gaussian, with

\[ p(\varvec{v}) = \mathcal {N}(\varvec{v}; M\varvec{x}_0 + \varvec{z}, M\varSigma M^{\top }). \]

Lemma 2

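Let \(\varvec{x} \in \mathbb {R}^d\) be distributed as in Lemma 1, and let observations \(\varvec{y} \in \mathbb {R}^n\) be generated from the conditional density

\[ p(\varvec{y} \mid \varvec{x}) = \mathcal {N}(\varvec{y}; M\varvec{x} + \varvec{z}, \varLambda ) \]

with \(M \in \mathbb {R}^{n \times d}\), \(\varvec{z} \in \mathbb {R}^n\), and \(\varLambda \in \mathbb {R}^{n \times n}\) again positive semi-definite. Then, the associated conditional distribution on \(\varvec{x}\) after observing \(\varvec{y}\) is again Gaussian, with

\[ p(\varvec{x} \mid \varvec{y}) = \mathcal {N}(\varvec{x}; \bar{\varvec{x}}, \bar{\varSigma }), \]

\[ \bar{\varvec{x}} = \varvec{x}_0 + \varSigma M^{\top }(M\varSigma M^{\top } + \varLambda )^{-1}(\varvec{y} - M\varvec{x}_0 - \varvec{z}), \qquad \bar{\varSigma } = \varSigma - \varSigma M^{\top }(M\varSigma M^{\top } + \varLambda )^{-1}M\varSigma . \]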

This formula also applies if \(\varLambda = 0\), i.e. observations are made without noise, with the caveat that if \(M\varSigma M^{\top }\) is singular, the inverse should be interpreted as a pseudo-inverse.
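As a hedged illustration of Lemma 2, the sketch below implements the standard Gaussian conditioning formulas for the model \(\varvec{y} = M\varvec{x} + \varvec{z} + \varvec{\varepsilon }\), \(\varvec{\varepsilon } \sim \mathcal {N}(\varvec{0}, \varLambda )\), using a pseudo-inverse so that the noise-free case \(\varLambda = 0\) is also covered. The function name `condition_gaussian` and the test problem are assumptions made for this example only.

```python
import numpy as np


def condition_gaussian(x0, Sigma, M, z, Lam, y):
    """Mean and covariance of x ~ N(x0, Sigma) after observing y = M x + z + noise."""
    G = M @ Sigma @ M.T + Lam            # covariance of the observations
    K = Sigma @ M.T @ np.linalg.pinv(G)  # gain; pinv also handles a singular G
    mean = x0 + K @ (y - M @ x0 - z)
    cov = Sigma - K @ M @ Sigma
    return mean, cov


rng = np.random.default_rng(1)
d, n = 4, 2
x0, Sigma = np.zeros(d), np.eye(d)
M = rng.standard_normal((n, d))
z = rng.standard_normal(n)
x_true = rng.standard_normal(d)
y = M @ x_true + z                       # noise-free observations (Lam = 0)

mean, cov = condition_gaussian(x0, Sigma, M, z, np.zeros((n, n)), y)
```

With noise-free observations the posterior mean reproduces the data exactly, and the posterior covariance vanishes in the observed directions, i.e. \(M \bar{\varSigma } M^{\top } = 0\).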

2.2 Solution-based inference

To phrase the solution of Eq. (1) as a form of probabilistic inference, Cockayne et al. (2018) consider a Gaussian prior over the solution \(\varvec{x}^*\), and condition on observations provided by a set of search directions \(\varvec{s}_1, \dots , \varvec{s}_m\), \(m < d\). Let \(S_m \in \mathbb {R}^{d \times m}\) be given by \(S_m = [\varvec{s}_1, \ldots , \varvec{s}_m]\), and let information be given by \(\varvec{y}_m:=S_m^{\top }A\varvec{x}^*=S_m^{\top }\varvec{b}\). Since the information is a linear projection of \(\varvec{x}^*\), the posterior distribution is a Gaussian distribution on \(\varvec{x}^*\):

Lemma 3

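(Cockayne et al. 2018) Assume that the columns of \(S_m\) are linearly independent. Consider the prior

\[ p(\varvec{x}) = \mathcal {N}(\varvec{x}; \varvec{x}_0, \varSigma _0). \]

The posterior from SBI is then given by

\[ p(\varvec{x} \mid \varvec{y}_m) = \mathcal {N}(\varvec{x}; \varvec{x}_m, \varSigma _m), \]

where

\[ \varvec{x}_m = \varvec{x}_0 + \varSigma _0 A^{\top }S_m (S_m^{\top }A\varSigma _0 A^{\top }S_m)^{-1} S_m^{\top }\varvec{r}_0, \tag{2} \]

\[ \varSigma _m = \varSigma _0 - \varSigma _0 A^{\top }S_m (S_m^{\top }A\varSigma _0 A^{\top }S_m)^{-1} S_m^{\top }A\varSigma _0, \]

and \(\varvec{r}_0=\varvec{b}-A\varvec{x}_0\).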
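The posterior of Lemma 3 follows from Lemma 2 with \(M = S_m^{\top }A\), \(\varvec{z} = \varvec{0}\) and \(\varLambda = 0\). A minimal sketch of the resulting computation is given below; the function name `sbi_posterior` and the random test problem are illustrative assumptions rather than part of the original method description.

```python
import numpy as np


def sbi_posterior(A, b, x0, Sigma0, S):
    """SBI posterior mean and covariance after observing S^T A x = S^T b."""
    r0 = b - A @ x0
    X = Sigma0 @ A.T @ S                 # d x m, spans the update directions
    G = S.T @ A @ X                      # m x m matrix S^T A Sigma0 A^T S
    mean = x0 + X @ np.linalg.solve(G, S.T @ r0)
    cov = Sigma0 - X @ np.linalg.solve(G, X.T)
    return mean, cov


rng = np.random.default_rng(0)
d, m = 5, 2
A = rng.standard_normal((d, d)) + d * np.eye(d)   # generic invertible matrix
x_true = rng.standard_normal(d)
b = A @ x_true
x0, Sigma0 = np.zeros(d), np.eye(d)

# m < d search directions: the mean satisfies the observed projections.
S = rng.standard_normal((d, m))
x_m, Sigma_m = sbi_posterior(A, b, x0, Sigma0, S)

# Limiting case of d independent directions: the solution is fully identified.
x_d, Sigma_d = sbi_posterior(A, b, x0, Sigma0, np.eye(d))
```

The \(m \times m\) solve in this sketch is the cost that BayesCG later avoids by choosing \(A\varSigma _0A^{\top }\)-orthonormal search directions.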

The following proposition establishes an optimality property of the posterior mean \(\varvec{x}_m\). This is a relatively well-known property of Gaussian inference, which will prove useful in subsequent sections.

Proposition 4

If \({\mathbb {S}}_m = \text {range}(S_m)\), then the posterior mean in Lemma 3 satisfies the optimality property

Proof

With the abbreviations \(X=\varSigma _0A^\top S_m\) and \(\varvec{y}=\varvec{x}^*-\varvec{x}_0\) the mean in Lemma 3 can be written as

where

is the solution of the weighted least squares problem (Golub and Van Loan 2013, Section 6.1)

This is equivalent to the desired statement. \(\square \)

2.3 Matrix-based inference

In contrast to SBI, the MBI approach of Hennig (2015) treats the matrix inverse \(A^{-\!1}\) as the unknown in the inference procedure. As in the previous section, search directions \(S_m\) yield matrix-vector products \(Y_m \in \mathbb {R}^{d\times m}\). In Hennig (2015), these arise from right-multiplying \(A\) with \(S_m\), i.e. \(Y_m = AS_m\). Note that

Thus, \(S_m\) is a linear transformation of \(A^{-\!1}\) and Lemma 2 can again be applied:

Lemma 5

(Lemma 2.1 in Hennig (2015)) Consider the prior

Then, the posterior given the observations \(\overrightarrow{S_m}= A^{-\!1}Y_m\) is given by

with

For linear solvers, the object of interest is \(\varvec{x}^*=A^{-\!1}\varvec{b}\). Writing \(A^{-\!1}\varvec{b}=(I\otimes \varvec{b}^{\top })\overrightarrow{A^{-\!1}}\), and again using Lemma 1, we see that the associated marginal is also Gaussian and given by

In the Kronecker product specification for the prior covariance on \(A^{-\!1}\) from Eq. (4), the matrix \(\varSigma _0\) describes the dependence between the columns of \(A^{-\!1}\). The matrix \(W_0\) captures the dependency between the rows of \(A^{-\!1}\). Note that in Lemma 5, the posterior covariance has the form \(\varSigma _0 \otimes W_m\). When compared to the prior covariance, \(\varSigma _0 \otimes W_0\), it is clear that the observations have conveyed no new information to the first term of the Kronecker product covariance.

The Kronecker structure of the prior covariance matrix in Eq. (4) is by no means the only option that facilitates tractable inference. However, in the absence of literature exploring other approaches within MBI, we will assume throughout that MBI refers to the use of the Kronecker product prior covariance.

2.4 Equivalence of MBI and SBI

In practice, Hennig (2015) notes that inference on \(A^{-\!1}\) should be performed only implicitly, avoiding the \(d^2\) storage cost and the mathematical complexity of the operations involved in Lemma 5. This raises the question of when MBI is equivalent to SBI. Although, based on Lemma 1, one might suspect SBI and MBI to be equivalent, in fact the posterior from Lemma 5 is structurally different to the posterior in Lemma 3: after projecting into solution space, the posterior covariance in Lemma 5 is a scalar multiple of the matrix \(\varSigma _0\), which is not the case in general in Lemma 3.

However, the implied posterior over the solution vector can be made to coincide with the posterior from SBI if one considers observations in MBI as

That is, as left-multiplications of A. We will refer to the observation model of Eq. (3) as right-multiplied information, and to Eq. (6) as left-multiplied information.

Proposition 6

Consider a Gaussian MBI prior

conditioned on the left-multiplied information of Eq. (6). The associated marginal on \(\varvec{x}\) is identical to the posterior on \(\varvec{x}\) arising in Lemma 3 from \(p(\varvec{x})= {\mathcal {N}}(\varvec{x};\varvec{x}_0, \varSigma _0)\) under the conditions

Proof

See “Appendix B”. \(\square \)

The first of the two conditions requires that the prior mean on the matrix inverse be consistent with the prior mean on the solution, which is natural. The second condition demands that, after projection into solution space, the relationship between the rows of \(A^{-\!1}\) modelled by \(W_0\) does not inflate the covariance \(\varSigma _0\). Note that this condition is trivial to enforce for an arbitrary covariance \(\bar{W_0}\) by setting \(W_0 = (\varvec{b}^{\top }\bar{W_0} \varvec{b})^{-1} \bar{W_0}\).
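The rescaling described above can be sketched numerically. The example below assumes the normalisation \(\varvec{b}^{\top }W_0\varvec{b} = 1\) (the form in which the condition is used in Proposition 15) and checks, via Lemma 1 and the row-stacking convention for \(\text {vec}\), that the prior marginal covariance of \(A^{-\!1}\varvec{b}\) under \(\varSigma _0 \otimes W_0\) is then exactly \(\varSigma _0\). The helper `random_spd` and all variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
d = 3
b = rng.standard_normal(d)


def random_spd(rng, d):
    """Random symmetric positive-definite matrix (illustrative helper)."""
    B = rng.standard_normal((d, d))
    return B @ B.T + d * np.eye(d)


Sigma0 = random_spd(rng, d)
W_bar = random_spd(rng, d)

# Rescale an arbitrary covariance so that b^T W0 b = 1.
W0 = W_bar / (b @ W_bar @ b)

# x = A^{-1} b = (I kron b^T) vec(A^{-1}); push the prior covariance through.
P = np.kron(np.eye(d), b[None, :])
marginal_cov = P @ np.kron(Sigma0, W0) @ P.T
```

Without the rescaling, the same computation returns \((\varvec{b}^{\top }\bar{W_0}\varvec{b})\,\varSigma _0\), which is the inflation of \(\varSigma _0\) that the second condition rules out.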

2.5 Remarks

The result in Proposition 6 shows that any result proven for SBI applies immediately to MBI with left-multiplied observations. Though MBI has more model parameters than SBI, there are situations in which this point of view is more appropriate. First, unlike in SBI, the information obtained in MBI need not be specific to a particular solution vector \(\varvec{x}^*\) and thus can be propagated and recycled over several linear problems, similar to the notion of subspace recycling (Soodhalter et al. 2014). Second, MBI is able to utilise both left- and right-multiplied information, while SBI is restricted to left-multiplied information. This additional generality may prove useful in some applications.

3 Projection methods as inference

This section discusses a connection between probabilistic numerical methods for linear systems and the classic framework of projection methods for the iterative solution of linear problems. Section 3.1 reviews this established class of solvers, while Sect. 3.2 presents the novel results.

3.1 Background

Many iterative methods for linear systems, including CG and GMRES, belong to the class of projection methods (Saad 2003, p. 130f.). Saad describes a projection method as an iterative scheme in which, at each iteration, a solution vector \(\varvec{x}_m\) is constructed by projecting \(\varvec{x}^*\) into a solution space \({\mathbb {X}}_m\subset \mathbb {R}^d\), subject to the restriction that the residual \(\varvec{r}_m = \varvec{b} - A\varvec{x}_m\) is orthogonal to a constraint space \({\mathbb {U}}_m\subset \mathbb {R}^d\).

More formally, each iteration of a projection method is defined by two matrices \(X_m, U_m \in \mathbb {R}^{d\times m}\), and by a starting point \(\varvec{x}_0\). The matrices \(X_m\) and \(U_m\) respectively encode the solution and constraint spaces as \({\mathbb {X}}_m=\mathrm {range}(X_m)\) and \({\mathbb {U}}_m=\mathrm {range}(U_m)\). The projection method then constructs \(\varvec{x}_m\) as \(\varvec{x}_m = \varvec{x}_0 + X_m\varvec{\alpha }_m\) with \(\varvec{\alpha }_m\in \mathbb {R}^m\) determined by the constraint \(U_m^\top \varvec{r}_m = \varvec{0}\). This is possible only if \(U_m^{\top }AX_m\) is nonsingular, in which case one obtains

\[ \varvec{x}_m = \varvec{x}_0 + X_m (U_m^{\top }AX_m)^{-1} U_m^{\top }\varvec{r}_0. \tag{8} \]

From this perspective, CG and GMRES perform only a single step with the number of iterations m fixed and determined in advance. For CG, the spaces are \({\mathbb {U}}_m = {\mathbb {X}}_m = K_m(A, \varvec{b})\), while for GMRES they are \({\mathbb {X}}_m=K_m(A, \varvec{b})\) and \({\mathbb {U}}_m=AK_m(A, \varvec{b})\) (Saad 2003, Proposition 5.1).
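A single step of a generic projection method can be sketched as follows. The coefficients \(\varvec{\alpha }_m\) solve the \(m \times m\) system \(U_m^{\top }AX_m\varvec{\alpha }_m = U_m^{\top }\varvec{r}_0\) implied by the constraint \(U_m^{\top }\varvec{r}_m = \varvec{0}\). The function name `projection_iterate` and the random spaces are assumptions for illustration only.

```python
import numpy as np


def projection_iterate(A, b, x0, X, U):
    """Iterate x0 + X alpha whose residual is orthogonal to range(U)."""
    r0 = b - A @ x0
    alpha = np.linalg.solve(U.T @ A @ X, U.T @ r0)  # requires U^T A X nonsingular
    return x0 + X @ alpha


rng = np.random.default_rng(3)
d, m = 6, 3
A = rng.standard_normal((d, d)) + d * np.eye(d)
b = rng.standard_normal(d)
x0 = rng.standard_normal(d)
X = rng.standard_normal((d, m))   # basis of the solution space
U = rng.standard_normal((d, m))   # basis of the constraint space

x_m = projection_iterate(A, b, x0, X, U)
r_m = b - A @ x_m
```

By construction the residual `r_m` is orthogonal to every column of `U`, and the update `x_m - x0` lies in the range of `X`.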

3.2 Probabilistic perspectives

In this section, we first show, in Proposition 7, that the conditional mean from SBI after m steps corresponds to some projection method. Then, in Proposition 8 we prove the converse: that each projection method is also the posterior mean of a probabilistic method, for some prior covariance and choice of information.

Proposition 7

Let the columns of \(S_m\) be linearly independent. Consider SBI under the prior

and with observations \(\varvec{y}_m=S_m^{\top }\varvec{b}\). Then, the posterior mean \(\varvec{x}_m\) in Lemma 3 is identical to the iterate from a projection method defined by the matrices \(U_m=S_m\) and \(X_m=\varSigma _0A^{\top }S_m\), and the starting vector \(\varvec{x}_0\).

Proof

Substituting \(U_m = S_m\) and \(X_m = \varSigma _0 A^{\top }S_m\) into Lemma 3 gives Eq. (8), as required. \(\square \)

The converse to this also holds:

Proposition 8

Consider a projection method defined by the matrices \(X_m,U_m\in \mathbb {R}^{d\times m}\), each with linearly independent columns, and the starting vector \(\varvec{x}_0 \in \mathbb {R}^d\). Then, the iterate \(\varvec{x}_m\) in Eq. (8) is identical to the SBI posterior mean in Lemma 3 under the prior

when search directions \(S_m = U_m\) are used.

Proof

Abbreviate \(Z=X_m^\top A^\top U_m\) and write the projection method iterate from Eq. (8) as

Multiply the middle matrix by the identity,

and insert this into the expression for \(\varvec{x}_0\),

Setting \(U_m=S_m\) gives the mean in Lemma 3. \(\square \)

A direct way to enforce the posterior occupying the solution space is by placing a prior on the coefficients \(\varvec{\alpha }\) in \(\varvec{x} = \varvec{x}_0 + X_m \varvec{\alpha }\). Under a unit Gaussian prior \(\varvec{\alpha }\sim \mathcal {N}(\varvec{0}, I)\), the implied prior on \(\varvec{x}\) naturally has the form of Eq. (9).

However, this prior is unsatisfying since it requires the solution space to be specified a priori, precluding adaptivity in the algorithm and perhaps more worryingly, the posterior uncertainty over the solution is a matrix of zeros even though the solution is not fully identified. Again taking \(Z = X_m^{\top }A^{\top }U_m\):

Concerning this issue, Hennig (2015) and Bartels and Hennig (2016) each proposed adding additional uncertainty in the null space of \(X_m\). This empirical uncertainty calibration step has not yet been analysed in detail. Such analysis is left for future work.
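Both observations can be checked numerically. The sketch below assumes the prior covariance \(\varSigma _0 = X_mX_m^{\top }\) implied by a unit Gaussian prior on the coefficients \(\varvec{\alpha }\), uses search directions \(S_m = U_m\), and compares the SBI posterior of Lemma 3 with the projection iterate. All names and the random test problem are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(4)
d, m = 6, 3
A = rng.standard_normal((d, d)) + d * np.eye(d)
b = rng.standard_normal(d)
x0 = rng.standard_normal(d)
X = rng.standard_normal((d, m))   # solution space basis
U = rng.standard_normal((d, m))   # constraint space basis
r0 = b - A @ x0

# Projection method iterate: residual orthogonal to range(U).
x_proj = x0 + X @ np.linalg.solve(U.T @ A @ X, U.T @ r0)

# SBI with the rank-m prior covariance X X^T and search directions S = U.
Sigma0 = X @ X.T
S = U
V = Sigma0 @ A.T @ S
G = S.T @ A @ V                   # equals Z^T Z with Z = X^T A^T U
x_sbi = x0 + V @ np.linalg.solve(G, S.T @ r0)
Sigma_post = Sigma0 - V @ np.linalg.solve(G, V.T)
```

The two means agree, while `Sigma_post` is zero up to rounding even though only \(m < d\) projections of the solution have been observed.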

Including the solution space \(X_m\) in the prior covariance matrix requires it to be specified a priori. For solvers like CG and GMRES which construct \(X_m\) adaptively, this assumption may appear problematic: a probabilistic interpretation should use for inference only quantities that have already been computed. The computation of \(X_m\) could be seen as part of the initialisation, but this requires the number of iterations m to be fixed a priori, whereas typically such methods choose m adaptively by examining the norm of the residual. Nevertheless, the proposition provides a probabilistic view for arbitrary projection methods and does not involve \(A^{-\!1}\), unlike the results presented in Hennig (2015) and Cockayne et al. (2017).

The above prior is not unique. The next proposition establishes probabilistic interpretations of projection methods under priors that are independent of solution- and constraint space, albeit under more restrictive conditions. The benefit of this is that m need not be fixed a priori.

Proposition 9

Consider a projection method defined by \(X_m, U_m\in \mathbb {R}^{d\times m}\) and the starting vector \(\varvec{x}_0\). Further suppose that \(U_m = R X_m\) for some invertible \(R \in \mathbb {R}^{d\times d}\), and that \(A^\top R\) is symmetric positive definite. Then, under the prior

and the search directions \(S_m = U_m = R X_m\), the iterate in the projection method is identical to the posterior mean in Lemma 3.

Proof

First substitute \(X_m=R^{-1}U_m\) into Eq. (8) to obtain

The third line uses \(\varSigma _0 = (A^{\top }R)^{-1} = R^{-1}A^{-T}\). This is equivalent to the posterior mean in Eq. (2) with \(S_m = U_m\). \(\square \)

A corollary which provides further insight arises when one considers the polar decomposition of A. Recall that an invertible matrix A has a unique polar decomposition \(A = PH\), where \(P \in \mathbb {R}^{d \times d}\) is orthogonal and \(H \in \mathbb {R}^{d\times d}\) is symmetric positive definite.

Corollary 10

Consider a projection method defined by \(X_m, U_m\in \mathbb {R}^{d\times m}\) and the starting vector \(\varvec{x}_0\), and suppose that \(U_m = P X_m\), where P arises from the polar decomposition \(A = PH\). Then, under the prior

and the search directions \(S_m = U_m = P X_m\), the iterate in the projection method is identical to the posterior mean in Lemma 3.

Proof

This follows from Proposition 9. Setting \(R = P\) aligns the search directions in Corollary 10 with those in Proposition 9. Since P is orthogonal, \(P^{-1} = P^\top \), and since H is symmetric positive definite, \(A^\top P = P^\top A = H\) by definition of the polar decomposition, which gives the prior covariance required for Proposition 9.\(\square \)

This is an intuitive analogue of similar results in Hennig (2015) and Cockayne et al. (2017) which show that CG is recovered under certain conditions involving a prior \(\varSigma _0 = A^{-\!1}\). When \(A\) is not symmetric and positive definite, it cannot be used as a prior covariance. This corollary suggests a natural way to select a prior covariance still linked to the linear system, though this choice is still not computationally convenient. Furthermore, in the case that \(A\) is symmetric positive definite, this recovers the prior which replicates CG described in Cockayne et al. (2018). Note that each of H and P can be stated explicitly as \(H = (A^{\top }A)^\frac{1}{2}\) and \(P = A(A^{\top }A)^{-\frac{1}{2}}\). Thus, in the case of symmetric positive-definite A we have that \(H = A\) and \(P = I\), so that the prior covariance \(\varSigma _0 = A^{-1}\) arises naturally from this interpretation.
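The construction in Corollary 10 can be illustrated with a small numerical sketch. It computes the polar factors from a singular value decomposition, takes the prior covariance \(\varSigma _0 = (A^{\top }P)^{-1} = H^{-1}\) obtained by setting \(R = P\) in Proposition 9, and compares the SBI posterior mean with the projection iterate for \(U_m = PX_m\). Variable names and the test matrix are assumptions for this example.

```python
import numpy as np

rng = np.random.default_rng(5)
d, m = 5, 2
A = rng.standard_normal((d, d)) + d * np.eye(d)   # nonsymmetric, invertible
b = rng.standard_normal(d)
x0 = np.zeros(d)
r0 = b - A @ x0

# Polar decomposition A = P H from the SVD A = W diag(s) Vt.
W, s, Vt = np.linalg.svd(A)
P = W @ Vt                        # orthogonal factor
H = Vt.T @ np.diag(s) @ Vt        # symmetric positive-definite factor

X = rng.standard_normal((d, m))   # solution space basis
U = P @ X                         # constraint space, as in Corollary 10

# Projection method iterate.
x_proj = x0 + X @ np.linalg.solve(U.T @ A @ X, U.T @ r0)

# SBI posterior mean under Sigma0 = H^{-1} with search directions S = U.
Sigma0 = np.linalg.inv(H)
V = Sigma0 @ A.T @ U
x_sbi = x0 + V @ np.linalg.solve(U.T @ A @ V, U.T @ r0)
```

As the text notes, forming \(H^{-1}\) is not computationally convenient; the sketch does so explicitly only to make the equivalence visible.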

4 Preconditioning

This section discusses probabilistic views on preconditioning. Preconditioning is a widely used technique for accelerating the convergence of iterative methods (Saad 2003, Sections 9 and 10). A preconditioner P is a nonsingular matrix satisfying two requirements:

1. Linear systems \(Pz=c\) can be solved at low computational cost.

2. P is “close” to A in some sense.

In this sense, solving systems based upon a preconditioner can be viewed as approximately inverting A, and indeed, many preconditioners are constructed based upon this intuition. One distinguishes between right preconditioners \(P_r\) and left preconditioners \(P_l\), depending on whether they act on A from the right or the left. Two-sided preconditioning with nonsingular matrices \(P_l\) and \(P_r\) implicitly transforms Eq. (1) into a new linear problem

\[ P_l A P_r \varvec{z}^* = P_l \varvec{b}, \qquad \varvec{x}^* = P_r \varvec{z}^*. \]

The preconditioned system can then be solved using arbitrary projection methods as described in Sect. 3.1, from the starting point \(\varvec{z}_0\) defined by \(\varvec{x}_0 = P_r \varvec{z}_0\). The probabilistic view can be used to create a nuanced description of preconditioning as a form of prior information. In the SBI framework, Proposition 11 below shows that solving a right-preconditioned system is equivalent to modifying the prior, while Proposition 12 shows that left preconditioning is equivalent to making a different choice of observations.

Proposition 11

(Right preconditioning) Consider the right-preconditioned system

SBI on Eq. (11) under the prior

is equivalent to solving Eq. (1) under the prior

Proof

Let \(p(x)=\mathcal {N}(\varvec{x}; \varvec{x}_0, \varSigma _r)\). Lemma 3 implies that after observing information from search directions \(S_m\), the posterior mean equals

where \(\varvec{r}_0 = \varvec{b}-A\varvec{x}_0\). Setting \(\varvec{x}_0=P_r \varvec{z}_0\) and letting \(\varSigma _r=P_r\varSigma _0P_r^\top \) gives

where \(B:=AP_r\) and \(\hat{\varvec{r}}_0 = \varvec{b}-B\varvec{z}_0\). Left multiplying by \(P_r^{-1}\) shows that this is equivalent to

Thus, \(\varvec{z}_m\) is the posterior mean of the system \(B\varvec{z}^* = \varvec{b}\) with prior Eq. (12) after observing search directions \(S_m\). \(\square \)

Proposition 12

(Left preconditioning) Consider the left-preconditioned system

and the SBI prior

Then, the posterior from SBI on Eq. (13) under search directions \(S_m\) is equivalent to the posterior from SBI applied to the system Eq. (1) under search directions \(P_l^{\top }S_m\).

Proof

Lemma 3 implies that after observing search directions \(T_m\), the posterior mean over the solution of Eq. (1) equals

where \(\varvec{r}_0 = \varvec{b}-A\varvec{x}_0\). Setting \(T_m= P_l^\top S_m\) gives

where \(B :=P_l A\) and \(\hat{\varvec{r}}_0 = P_l \varvec{b}-P_lA\varvec{x}_0\). Thus, \(\varvec{x}_m\) is the posterior mean of the system \(B\varvec{x}^*= P_l \varvec{b}\) after observing search directions \(S_m\). \(\square \)

If a probabilistic linear solver has a posterior mean which coincides with a projection method (as discussed in Sect. 3.1), Propositions 11 and 12 show how to obtain a probabilistic interpretation of the preconditioned version of that algorithm. Furthermore, the equivalence demonstrated in Sect. 2.4 shows that the reasoning from Propositions 11 and 12 carries over to MBI based on left-multiplied observations: right preconditioning corresponds to a change in prior belief, while left preconditioning corresponds to a change in observations.
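Both propositions can be checked on a small example. The sketch below follows the proofs: for right preconditioning it uses \(\varvec{x}_0 = P_r\varvec{z}_0\) and \(\varSigma _r = P_r\varSigma _0P_r^{\top }\), and for left preconditioning it replaces the search directions \(S_m\) by \(P_l^{\top }S_m\). The helper `sbi_mean` and the randomly generated preconditioners are hypothetical and are not intended as practical preconditioner choices.

```python
import numpy as np


def sbi_mean(A, b, x0, Sigma0, S):
    """SBI posterior mean after observing S^T A x = S^T b (Lemma 3)."""
    r0 = b - A @ x0
    V = Sigma0 @ A.T @ S
    return x0 + V @ np.linalg.solve(S.T @ A @ V, S.T @ r0)


rng = np.random.default_rng(6)
d, m = 5, 2
A = rng.standard_normal((d, d)) + d * np.eye(d)
b = rng.standard_normal(d)
S = rng.standard_normal((d, m))
Sigma0 = np.eye(d)
P_r = rng.standard_normal((d, d)) + d * np.eye(d)   # stand-in right preconditioner
P_l = rng.standard_normal((d, d)) + d * np.eye(d)   # stand-in left preconditioner
z0 = rng.standard_normal(d)
x0 = P_r @ z0

# Right preconditioning: solve (A P_r) z = b, then map back with x = P_r z.
z_m = sbi_mean(A @ P_r, b, z0, Sigma0, S)
x_right = sbi_mean(A, b, x0, P_r @ Sigma0 @ P_r.T, S)   # modified prior instead

# Left preconditioning: solve (P_l A) x = P_l b with directions S.
x_left = sbi_mean(P_l @ A, P_l @ b, x0, Sigma0, S)
x_obs = sbi_mean(A, b, x0, Sigma0, P_l.T @ S)           # modified directions instead
```

In both cases the preconditioned solve and the modified unpreconditioned solve return the same posterior mean.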

We do not claim that this probabilistic interpretation of preconditioning is unique. For example, when using MBI with right-multiplied observations, the same line of reasoning can be used to show the converse: right preconditioning corresponds to a change in the observations and left preconditioning to a change in the prior.

5 Conjugate gradients

The conjugate gradient method has previously been studied from a probabilistic point of view by Hennig (2015) and Cockayne et al. (2018). This section generalises the results of Hennig (2015) and leverages Proposition 6 for new insights into BayesCG. For this section (but not thereafter), assume that \(A\) is a symmetric and positive definite matrix.

5.1 Left-multiplied view

The BayesCG algorithm proposed by Cockayne et al. (2018) encompasses conjugate gradients as a special case. BayesCG uses left-multiplied observations and was derived in the solution-based perspective.

The posterior in Lemma 3 does not immediately result in a practical algorithm as it involves the solution of a linear system based on the matrix \(S_m^{\top }A\varSigma _0 A^{\top }S_m\in \mathbb {R}^{m\times m}\), which requires \({\mathcal {O}}(m^3)\) arithmetic operations. BayesCG avoids this cost by constructing search directions that are \(A\varSigma _0A^\top \)-orthonormal, as shown below (Cockayne et al. 2018, Proposition 7).

Proposition 13

[Proposition 7 of Cockayne et al. 2018 (BayesCG)] Let \(\tilde{\varvec{s}}_1 = \varvec{b} - A \varvec{x}_0\), and let \(\varvec{s}_1 = \tilde{\varvec{s}}_1 / \Vert \tilde{\varvec{s}}_1\Vert \). For \(j = 2,\dots ,m\) let

Then, the set \(\{\varvec{s}_1, \dots , \varvec{s}_m\}\) is \(A\varSigma _0 A^{\top }\)-orthonormal, and consequently \(S_m^{\top }A\varSigma _0 A^{\top }S_m = I\).

With these search directions constructed, BayesCG becomes an iterative method:

Proposition 14

(Proposition 6 of Cockayne et al. 2018) Using the search directions from Proposition 13, the posterior from Lemma 3 reduces to:

In Proposition 4 of Cockayne et al. (2018), it was shown that the BayesCG posterior mean corresponds to the CG solution estimate when the prior covariance is taken to be \(\varSigma _0 = A^{-\!1}\), though this is not a practical choice of prior covariance as it requires access to the unavailable \(A^{-1}\). Furthermore, in Proposition 9 of the same work it was shown that when using the search directions from Proposition 13, the posterior mean from BayesCG has the following optimality property:

Note that this is now a trivial special case of Proposition 4.
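The correspondence with CG under \(\varSigma _0 = A^{-\!1}\) can be sketched numerically. The SBI posterior mean depends on \(S_m\) only through its range, so the example below assumes search directions that span \(K_m(A, \varvec{b})\) with \(\varvec{x}_0 = \varvec{0}\) (the CG solution space from Sect. 3.1) and uses an orthonormal basis obtained by a QR factorisation instead of the BayesCG recursion. The routine `conjugate_gradient` is a textbook implementation written for this example; forming \(A^{-\!1}\) explicitly is for illustration only.

```python
import numpy as np


def conjugate_gradient(A, b, x0, m):
    """Textbook CG for symmetric positive-definite A, run for m iterations."""
    x = x0.copy()
    r = b - A @ x
    p = r.copy()
    for _ in range(m):
        Ap = A @ p
        alpha = (r @ r) / (p @ Ap)
        x = x + alpha * p
        r_new = r - alpha * Ap
        p = r_new + ((r_new @ r_new) / (r @ r)) * p
        r = r_new
    return x


rng = np.random.default_rng(7)
d, m = 6, 3
B = rng.standard_normal((d, d))
A = B @ B.T + d * np.eye(d)       # symmetric positive definite
b = rng.standard_normal(d)
x0 = np.zeros(d)
r0 = b - A @ x0

# Orthonormal basis of the Krylov space K_m(A, r0).
K = np.column_stack([np.linalg.matrix_power(A, j) @ r0 for j in range(m)])
S, _ = np.linalg.qr(K)

# SBI posterior mean (Lemma 3) under the prior covariance Sigma0 = A^{-1}.
Sigma0 = np.linalg.inv(A)
V = Sigma0 @ A.T @ S
x_sbi = x0 + V @ np.linalg.solve(S.T @ A @ V, S.T @ r0)

x_cg = conjugate_gradient(A, b, x0, m)
```

Up to rounding, `x_sbi` and `x_cg` coincide, in line with Proposition 7: for this prior the solution and constraint spaces are both the Krylov space.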

The following proposition leverages these results along with Proposition 6 to show that there exists an MBI method which, under a particular choice of prior and with a particular methodology for the generation of search directions, is consistent with CG.

Proposition 15

Consider the MBI prior

where \(W_0 \in \mathbb {R}^{d\times d}\) is symmetric positive definite and such that \(\varvec{b}^{\top }W_0 \varvec{b}= 1\). Suppose left-multiplied information is used, and that the search directions are generated sequentially according to:

and for \(j=2,\dots ,m\)

Then, it holds that the implied posterior mean on solution space, given by \(A_m^{-1} \varvec{b}\), corresponds to the CG solution estimate after m iterations, with starting point \(\varvec{x}_0 = A_0^{-1} \varvec{b}\).

Proof

First note that, by Proposition 6, since left-multiplied observations are used and since \(\varvec{b}^{\top }W_0 \varvec{b}= 1\), the implied posterior distribution on solution space from MBI is identical to the posterior distribution from SBI under the prior

It thus remains to show that the sequence of search directions generated is identical to those in Proposition 13 for this prior. For \(\tilde{\varvec{s}_1}\):

as required. For \(\tilde{\varvec{s}_j}\):

where the second line uses that \(A^{-\!1}_{j-1} \varvec{b}= \varvec{x}_{j-1}\). Thus, the search directions coincide with those in Proposition 13. It therefore holds that the implied posterior mean on solution space, \(A^{-\!1}_m \varvec{b}\), coincides with the solution estimate produced by CG. \(\square \)

5.2 Right-multiplied view

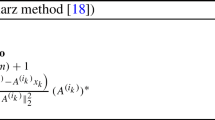

Interpretations of CG (and general projection methods) that use right-multiplied observations seem to require more care than those based on left-multiplied observations. Nevertheless, Hennig (2015) provided an interpretation for CG in this framework, essentially showingFootnote 7 that Algorithm 1 reproduces both the search directions and solution estimates from CG under the prior

where \(\alpha \in \mathbb {R}\setminus \{0\}\), \(\beta \in \mathbb {R}^+\) and the product appearing in the prior denotes the symmetric Kronecker product (see Section A.1). The posterior under such a prior is described in Lemma 2.2 of Hennig (2015) (see Lemma 21), though we note that the sense in which the solution estimate \(\varvec{x}_m\) output by this algorithm is related to the posterior over \(A^{-1}\) differs from that in the previous section, in the sense that \(A^{-\!1}_m \varvec{b}\ne \varvec{x}_m\). (More precisely, \(\varvec{x}_m=A^{-\!1}_m (\varvec{b}-A\varvec{x}_0)-\varvec{x}_0 - (1-\alpha _m) \varvec{d}_m\), as the CG estimate is corrected by the step size computed in line 6. Fixing this rank-1 discrepancy would complicate the exposition of Algorithm 1 and yield a more cumbersome algorithm.) The following proposition generalises this result.

Proposition 16

Consider the prior

where \(W:=A^{-\!1}\). For all choices \(\alpha \in \mathbb {R}\setminus \{0\}\) and \(\beta ,\gamma \in \mathbb {R}_{+,0}\) with \(\beta + \gamma >0\), Algorithm 1 is equivalent to CG, in the sense that it produces the exact same sequence of estimates \(\varvec{x}_i\) and scaled search directions \(\varvec{s}_i\).

Proof

The proof is extensive and has been moved to “Appendix B”. \(\square \)

Note that, unlike previous propositions, Proposition 16 proposes a prior that does not involve \(A^{-\!1}\) for the case when \(\gamma = 0\).

6 GMRES

The generalised minimal residual method (Saad 2003, Section 6.5) applies to general nonsingular matrices A. At iteration m, GMRES minimises the residual over the affine space \(\varvec{x}_0 + K_m(A,\varvec{r}_0)\). That is, \(\varvec{r}_m = \varvec{b} - A\varvec{x}_m\) satisfies

Since \(A \varvec{x}-\varvec{b}= A (\varvec{x}- \varvec{x}^*)\), this corresponds to minimising the error in the \(A^\top A\) norm.
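
To make this step explicit, the following short derivation uses only \(A\varvec{x}^* = \varvec{b}\); the weighted-norm notation \(\Vert \cdot \Vert _{A^\top A}\) is introduced here for illustration.

```latex
\begin{aligned}
\Vert \varvec{b} - A\varvec{x} \Vert_2^2
  &= \Vert A(\varvec{x}^* - \varvec{x}) \Vert_2^2 \\
  &= (\varvec{x} - \varvec{x}^*)^\top A^\top A \, (\varvec{x} - \varvec{x}^*)
   = \Vert \varvec{x} - \varvec{x}^* \Vert_{A^\top A}^2 .
\end{aligned}
```

Since \(A\) is nonsingular, \(A^\top A\) is symmetric positive definite, so the right-hand side is a genuine norm of the error.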

We present a brief development of GMRES, starting with Arnoldi’s method (Sect. 6.1) and the GMRES algorithm (Sect. 6.2), before presenting our Bayesian interpretation (Sect. 6.3).

6.1 Arnoldi’s method

GMRES uses Arnoldi’s method (Saad 2003, Section 6.3) to construct orthonormal bases for Krylov spaces of general, nonsingular matrices A. Starting with \(\varvec{q}_1 = \varvec{r}_0/\Vert \varvec{r}_0\Vert _2\), Arnoldi’s method recursively computes the orthonormal basis

for \(K_m(A, \varvec{r}_0)\). The basis vectors satisfy the relations

and \(Q_m^\top AQ_m = H_m\), where the upper Hessenberg matrix \(H_m\) is defined as

and

6.2 GMRES

GMRES computes the iterate

based on the optimality condition in Eq. (14), which can equivalently be expressed as

Thus,

confirming that GMRES is a projection method with \(X_m=Q_m\) and \(U_m=AQ_m\).

GMRES solves the least squares problem in Eq. (16) efficiently by projecting it to a lower-dimensional space via Arnoldi’s method. To this end, express the starting vector in the Krylov basis,

and exploit the Arnoldi recursion from Eq. (15),

followed by the unitary invariance of the two-norm,

Thus, instead of solving the least squares problem in Eq. (16) with d rows, GMRES solves a problem with only \(m+1\) rows,

The computations are summarised in Algorithm 2.

6.3 Bayesian interpretation of GMRES

We now present probabilistic linear solvers with posterior means that coincide with the solution estimate from GMRES.

6.3.1 Left-multiplied view

Proposition 17

Under the SBI prior

and the search directions \(U_m = A Q_m\), the posterior mean is identical to the GMRES iterate \(\varvec{x}_m\) in Eq. (17).

Proof

Substitute \(R=A\) and \(U_m = AQ_m\) into Proposition 9.\(\square \)

Proposition 17 is intuitive in the context of Proposition 4: setting \(\varSigma _0 = (A^{\top }A)^{-1}\) ensures that the norm being minimised coincides with that of GMRES, as does the solution space \(X_m = Q_m\). This interpretation exhibits an interesting duality with CG, for which \(\varSigma _0=A^{-\!1}\).
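
The coincidence stated in Proposition 17 can be checked numerically. The sketch below is illustrative only: it assumes the usual Gaussian conditioning formulas for the SBI posterior given the information \(U_m^\top A\varvec{x}^* = U_m^\top \varvec{b}\), and it builds \(Q_m\) from a QR factorisation of the Krylov matrix, which is adequate for small m but is not how GMRES constructs the basis. All variable names are choices made here.

```python
import numpy as np

rng = np.random.default_rng(1)
d, m = 12, 4
A = rng.standard_normal((d, d)) + d * np.eye(d)
b = rng.standard_normal(d)
x0 = rng.standard_normal(d)
r0 = b - A @ x0

# Orthonormal basis Q_m of the Krylov space K_m(A, r0).
K = np.column_stack([np.linalg.matrix_power(A, j) @ r0 for j in range(m)])
Q_m, _ = np.linalg.qr(K)

# SBI posterior under the prior N(x0, (A^T A)^{-1}) with directions U = A Q_m.
Sigma0 = np.linalg.inv(A.T @ A)
U = A @ Q_m
Lam = U.T @ A @ Sigma0 @ A.T @ U
x_post = x0 + Sigma0 @ A.T @ U @ np.linalg.solve(Lam, U.T @ r0)
Sigma_post = Sigma0 - Sigma0 @ A.T @ U @ np.linalg.solve(Lam, U.T @ A @ Sigma0)

# GMRES iterate: least squares minimiser of the residual over x0 + range(Q_m).
c, *_ = np.linalg.lstsq(A @ Q_m, r0, rcond=None)
x_gmres = x0 + Q_m @ c

print(np.linalg.norm(x_post - x_gmres))
```

Note that forming `Sigma0` requires inverting \(A^\top A\), so this construction is useful for verification but not as a practical solver.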

Another probabilistic interpretation follows from Proposition 8.

Corollary 18

Under the prior

and with observations \(\varvec{y}_m =Q_m^\top \varvec{b}\), the posterior mean from SBI is identical to the GMRES iterate \(\varvec{x}_m\) in Eq. (17).

Note that Proposition 17 has a posterior covariance which is not practical, as it involves \(A^{-\!1}\). Cockayne et al. (2018) proposed replacing \(A^{-\!1}\) in the prior covariance with a preconditioner to address this, which does yield a practically computable posterior, but this extension was not explored here. Furthermore, that approach yields unsatisfactorily calibrated posterior uncertainty, as described in that work. Corollary 18 does not have this drawback, but the posterior covariance is a matrix of zeroes.

6.3.2 Right-multiplied view

As for CG in Sect. 5.2, finding interpretations of GMRES that use right-multiplied observations appears to be more difficult.

Proposition 19

Under the prior

and given \(Y_m=AQ_m\), the implied posterior mean on the solution space given by \(A_m^{-1} \varvec{b}\) is equivalent to the GMRES solution. This correspondence breaks when \(\varvec{x}_0\ne \varvec{0}\).

Proof

Under this prior, the posterior mean applied to \(\varvec{b}\) is

which is the GMRES projection step if \(\varvec{x}_0=\varvec{0}\). \(\square \)

6.4 Simulation study

In this section, the simulation study of Cockayne et al. (2018) is replicated to demonstrate that the uncertainty produced by GMRES under Proposition 17 is as poorly calibrated as that of CG, owing to the dependence of \(Q_m\) on \(\varvec{x}^*\) by way of its dependence on \(\varvec{b}\). Throughout, the size of the test problems is set to \(d=100\). The eigenvalues of \(A\) were drawn from an exponential distribution with parameter \(\gamma =10\), and the eigenvectors were drawn uniformly from the Haar measure over rotation matrices (see Diaconis and Shahshahani 1987). In contrast to Cockayne et al. (2018), the entries of \(\varvec{b}\), rather than those of \(\varvec{x}^*\), are drawn from a standard Gaussian distribution. By Lemma 1, the prior is then perfectly calibrated for this scenario, which justifies the expectation that the posterior should be equally well calibrated for \(m\ge 1\).
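
A test problem of this kind could be generated as sketched below. Two points are assumptions made for illustration: the "parameter" of the exponential distribution is read as a rate (so the mean eigenvalue is 1/10), and the Haar-distributed rotation is obtained from a sign-corrected QR factorisation of a Gaussian matrix rather than from the subgroup algorithm of the cited reference. The helper names `haar_rotation` and `test_problem` are hypothetical.

```python
import numpy as np


def haar_rotation(d, rng):
    """Draw a d x d rotation matrix from the Haar measure on SO(d)."""
    Z = rng.standard_normal((d, d))
    Q, R = np.linalg.qr(Z)
    # Fix column signs so that Q is Haar-distributed on O(d).
    Q = Q * np.sign(np.diag(R))
    # Flip one column if needed so that det(Q) = +1 (a rotation).
    if np.linalg.det(Q) < 0:
        Q[:, 0] = -Q[:, 0]
    return Q


def test_problem(d=100, rate=10.0, seed=0):
    rng = np.random.default_rng(seed)
    # Assumption: "parameter 10" is the rate, i.e. scale = 1 / rate.
    eigvals = rng.exponential(scale=1.0 / rate, size=d)
    V = haar_rotation(d, rng)
    A = (V * eigvals) @ V.T      # V diag(eigvals) V^T, symmetric positive definite
    b = rng.standard_normal(d)   # entries of b are standard Gaussian
    return A, b, eigvals


A, b, eigvals = test_problem()
```

If the parameter is instead meant as a scale, replacing `scale=1.0 / rate` with `scale=rate` changes only the spread of the spectrum, not the structure of the experiment.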

Fig. 1 Convergence of posterior mean and variance of the probabilistic interpretation of GMRES from Proposition 17

Cockayne et al. (2018) argue that if the uncertainty is well calibrated, then \(\varvec{x}^*\) can be considered as a draw from the posterior. Under this assumption, i.e. \(\varSigma _m^{-\nicefrac {1}{2}}(\varvec{x}^*-\varvec{x}_m) \sim \mathcal {N}(\varvec{0}, \varvec{I})\), they derive the test statistic:

Figure 1 shows on the left the convergence of GMRES and on the right the convergence rate of the trace of the posterior covariance. Figure 2 displays the test statistic. It can be seen that the same poor uncertainty quantification occurs in BayesGMRES; even after just 10 iterations, the empirical distribution of the test statistic exhibits a profound left shift, indicating an overly conservative posterior distribution. Producing well-calibrated posteriors remains an open issue in the field of probabilistic linear solvers.
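
For contrast, the sketch below shows what a calibrated posterior looks like under such a test. It assumes the statistic has the form \((\varvec{x}^*-\varvec{x}_m)^\top \varSigma _m^{\dagger }(\varvec{x}^*-\varvec{x}_m)\), with a pseudo-inverse because \(\varSigma _m\) has rank \(d-m\), and that the reference distribution is \(\chi ^2\) with \(d-m\) degrees of freedom. The search directions here are fixed in advance and independent of \(\varvec{x}^*\), unlike in Krylov methods; the identity prior covariance and all variable names are illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(2)
d, m, n_draws = 20, 5, 2000

M = rng.standard_normal((d, d))
A = M @ M.T + d * np.eye(d)        # symmetric positive definite
Sigma0 = np.eye(d)                 # prior covariance (illustrative choice)
x0 = np.zeros(d)
S = rng.standard_normal((d, m))    # fixed directions, independent of x*

# The posterior covariance and its pseudo-inverse do not depend on the data.
Lam = S.T @ A @ Sigma0 @ A.T @ S
gain = Sigma0 @ A.T @ S @ np.linalg.inv(Lam)
Sigma_m = Sigma0 - gain @ S.T @ A @ Sigma0
Sigma_m_pinv = np.linalg.pinv(Sigma_m, rcond=1e-8, hermitian=True)

z = np.empty(n_draws)
for k in range(n_draws):
    x_star = x0 + rng.standard_normal(d)    # truth drawn from the prior
    b = A @ x_star
    x_m = x0 + gain @ (S.T @ (b - A @ x0))  # posterior mean
    err = x_star - x_m
    z[k] = err @ Sigma_m_pinv @ err         # test statistic

print(z.mean(), d - m)
```

With data-independent directions the empirical mean of the statistic should be close to \(d-m\); the left shift reported for BayesGMRES arises precisely because \(Q_m\) depends on \(\varvec{b}\).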

7 Discussion

We have established many new connections between probabilistic linear solvers and a broad class of iterative methods. Matrix-based and solution-based inference were shown to be equivalent in a particular regime, showing that results from SBI transfer to MBI with left-multiplied observations. Since SBI is a special case of MBI, future research will establish what additional benefits the increased generality of MBI can provide.

We also established a connection between the wide class of projection methods and probabilistic linear solvers. The common practice of preconditioning has an intuitive probabilistic interpretation, and all probabilistic linear solvers can be interpreted as projection methods. While the converse was shown to hold, the conditions under which generic projection methods can be reproduced are somewhat restrictive; however, GMRES and CG, which are among the most commonly used projection methods, have a well-defined probabilistic interpretation. Probabilistic interpretations of other widely used iterative methods can, we anticipate, be established from the results presented in this work.

Posterior uncertainty remains a challenge for probabilistic linear solvers. Direct probabilistic interpretations of CG and GMRES yield posterior covariance matrices which are not always computable, and even when the posterior can be computed, the uncertainty remains poorly calibrated. This is due to the dependence of the search directions in Krylov methods on \(A\varvec{x}^*= \varvec{b}\), resulting in an algorithm which is not strictly Bayesian. Mitigating this issue without sacrificing the fast rate of convergence provided by Krylov methods remains an important focus for future work.

Notes

Stacking the columns is equivalently possible and common. It is associated with a permutation in the definition of the Kronecker product, but the resulting inferences are equivalent.

Hennig (2015) also discusses inference over \(A\). This model class will not be discussed further in the present work. It has the disadvantage that the associated marginal on \(\varvec{x}^*\) is nonanalytic, but more easily lends itself to situations with noisy or otherwise perturbed matrix-vector products as observations.

This corrects a printing error in Hennig (2015). The notation has been adapted to fit the context.

A diagonal covariance matrix would allow efficient inference, as well.

Sometimes m is fixed a priori, due to memory or computation time limits.

Algorithm 1 is not included in this form in the op.cit.

References

Bartels, S., Hennig, P.: Probabilistic approximate least-squares. In: Proceedings of Artificial Intelligence and Statistics (AISTATS) (2016)

Cockayne, J., Oates, C., Sullivan, T.J., Girolami, M.: Probabilistic numerical methods for partial differential equations and Bayesian inverse problems. arXiv:1605.07811 (2016)

Cockayne, J., Oates, C., Sullivan, T.J., Girolami, M.: Bayesian probabilistic numerical methods. arXiv:1702.03673 (2017)

Cockayne, J., Oates, C., Ipsen, I.C.F., Girolami, M.: A Bayesian conjugate gradient method. arXiv:1801.05242 (2018)

Diaconis, P., Shahshahani, M.: The subgroup algorithm for generating uniform random variables. Probab. Eng. Inf. Sci. 1(01), 15 (1987). https://doi.org/10.1017/s0269964800000255

Golub, G.H., Van Loan, C.F.: Matrix Computations. Johns Hopkins Studies in the Mathematical Sciences, 4th edn. Johns Hopkins University Press, Baltimore (2013)

Hennig, P.: Probabilistic interpretation of linear solvers. SIAM J. Optim. 25(1), 234–260 (2015). https://doi.org/10.1137/140955501

Hennig, P., Osborne, M.A., Girolami, M.: Probabilistic numerics and uncertainty in computations. Proc. R. Soc. Lond. A Math. Phys. Eng. Sci. 471, 20150142 (2015)

Karvonen, T., Särkkä, S.: Classical quadrature rules via Gaussian processes. In: IEEE 27th International Workshop on Machine Learning for Signal Processing (MLSP). IEEE (2017). https://doi.org/10.1109/mlsp.2017.8168195

Kersting, H., Sullivan, T.J., Hennig, P.: Convergence rates of Gaussian ODE filters. arXiv:1807.09737 (2018)

Liesen, J., Strakos, Z.: Krylov Subspace Methods. Principles and Analysis. Oxford University Press, Oxford (2012). https://doi.org/10.1093/acprof:oso/9780199655410.001.0001

Nocedal, J., Wright, S.J.: Numerical Optimization. Springer, Berlin (1999)

Saad, Y.: Iterative Methods for Sparse Linear Systems. Society for Industrial and Applied Mathematics, 2nd edn. SIAM, Philadelphia (2003)

Saad, Y., Schultz, M.H.: GMRES: a generalized minimal residual algorithm for solving nonsymmetric linear systems. SIAM J. Sci. Stat. Comput. 7(3), 856–869 (1986). https://doi.org/10.1137/0907058

Schober, M., Duvenaud, D., Hennig, P.: Probabilistic ODE solvers with Runge–Kutta means. In: Advances in Neural Information Processing Systems, vol. 27, pp. 739–747. Curran Associates, Inc. (2014). http://papers.nips.cc/paper/5451-probabilistic-ode-solvers-with-runge-kutta-means.pdf

Schober, M., Särkkä, S., Hennig, P.: A probabilistic model for the numerical solution of initial value problems. Stat. Comput. 29(1), 99–122 (2019)

Soodhalter, K.M., Szyld, D.B., Xue, F.: Krylov subspace recycling for sequences of shifted linear systems. Appl. Numer. Math. 81, 105–118 (2014). https://doi.org/10.1016/j.apnum.2014.02.006

Xi, X., Briol, F.-X., Girolami, M.: Bayesian quadrature for multiple related integrals. In: Proceedings of the 35th International Conference on Machine Learning (ICML) (2018). arXiv:8010.4153

Acknowledgements

Open access funding provided by Max Planck Society. Ilse Ipsen was supported by NSF grant DMS-1760374. Mark Girolami was supported by EPSRC grants [EP/R034710/1, EP/R018413/1, EP/R004889/1, EP/P020720/1], an EPSRC Established Career Fellowship EP/J016934/3, a Royal Academy of Engineering Research Chair, and The Lloyds Register Foundation Programme on Data Centric Engineering. Philipp Hennig was supported by an ERC grant [757275/PANAMA].

Appendices

Appendix A: Properties of Kronecker products

The following identities about Kronecker products and the vectorisation operator are easily derived, but recalled here for the convenience of the reader:
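
As a quick numerical sanity check, the sketch below verifies several standard Kronecker identities under the row-stacking vectorisation. These are well-known facts chosen for illustration; whether they match the identities labelled (K1) to (K5) one-to-one is not asserted here, and the helper `vec` is a name introduced for this example.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 4
A, B, C, D = (rng.standard_normal((n, n)) + n * np.eye(n) for _ in range(4))


def vec(M):
    """Row-stacking vectorisation (NumPy's default C order)."""
    return M.reshape(-1)


# (A kron B) vec(C) = vec(A C B^T) for the row-stacking convention.
lhs_vec = np.kron(A, B) @ vec(C)
rhs_vec = vec(A @ C @ B.T)

# Mixed-product rule: (A kron B)(C kron D) = (AC) kron (BD).
lhs_mix = np.kron(A, B) @ np.kron(C, D)
rhs_mix = np.kron(A @ C, B @ D)

# Inverse and transpose distribute over the Kronecker product.
lhs_inv = np.linalg.inv(np.kron(A, B))
rhs_inv = np.kron(np.linalg.inv(A), np.linalg.inv(B))
lhs_T = np.kron(A, B).T
rhs_T = np.kron(A.T, B.T)
```

With column stacking instead, the first identity becomes \((A\otimes B)\,\mathrm{vec}(C) = \mathrm{vec}(BCA^\top )\), which is the permutation mentioned in the notes.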

A.1 The symmetric Kronecker product

Definition 20

(symmetric Kronecker product) The symmetric Kronecker product for two square matrices \(A,B\in \mathbb {R}^{N\times N}\) of equal size is defined as

where \([\varGamma ]_{ij,kl}:=\nicefrac {1}{2}\delta _{ik}\delta _{jl}+\nicefrac {1}{2}\delta _{il}\delta _{jk}\) satisfies

for all square matrices \(C\in \mathbb {R}^{N\times N}\).
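
The operator \(\varGamma \) can be built directly from its entries. The sketch below does so and checks that it maps a row-stacked matrix to its symmetric part. The function `sym_kron` assumes the definition \(\varGamma (A\otimes B)\varGamma \) for the symmetric Kronecker product; this form and both function names are assumptions made for illustration.

```python
import numpy as np


def gamma_matrix(N):
    """Matrix with entries [Gamma]_{ij,kl} = (d_ik d_jl + d_il d_jk) / 2."""
    I = np.eye(N)
    G = 0.5 * (np.einsum("ik,jl->ijkl", I, I) + np.einsum("il,jk->ijkl", I, I))
    return G.reshape(N * N, N * N)


def sym_kron(A, B):
    """Assumed definition: Gamma (A kron B) Gamma."""
    Gamma = gamma_matrix(A.shape[0])
    return Gamma @ np.kron(A, B) @ Gamma


rng = np.random.default_rng(4)
N = 3
Gamma = gamma_matrix(N)
C = rng.standard_normal((N, N))
W = rng.standard_normal((N, N))
W = W @ W.T + N * np.eye(N)  # symmetric positive definite

# Gamma applied to a row-stacked matrix returns its symmetric part.
sym_part = (Gamma @ C.reshape(-1)).reshape(N, N)
# The symmetric Kronecker product maps any matrix to a symmetric one.
X = (sym_kron(W, W) @ C.reshape(-1)).reshape(N, N)
```

Because \(\varGamma \) is a projector onto symmetric matrices, a Gaussian with such a covariance places mass only on symmetric matrices, which is the property used in Remark 22.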

Proposition 21

(Theorem 2.3 in Hennig 2015) Let \(W\in \mathbb {R}^{d\times d}\) be symmetric and positive definite. Assume a Gaussian prior with symmetric mean \(A^{-\!1}_0\) and covariance given by the symmetric Kronecker product of \(W\) with itself on the elements of a symmetric matrix \(A^{-\!1}\). After m linearly independent noise-free observations of the form \(S = A^{-\!1}Y\), \(Y\in \mathbb {R}^{d\times m}, {\text {rk}}(Y)=m\), the posterior belief over \(A^{-\!1}\) is a Gaussian with mean

and posterior covariance

where \(G:=(Y^{\top }WY)^{-1}\).
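
As a numerical sanity check, the sketch below evaluates a posterior mean of the symmetric form commonly associated with this model, \(A_m^{-1} = A_0^{-1} + \varDelta G Y^\top W + W Y G \varDelta ^\top - W Y G Y^\top \varDelta G Y^\top W\) with \(\varDelta := S - A_0^{-1}Y\). This expression is an assumption made for illustration and should be compared against the statement of the proposition; the check only confirms that it is symmetric and consistent with the observations, \(A_m^{-1}Y = S\). The particular choices of \(\alpha \) and \(W\) are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(5)
d, m = 8, 3
M = rng.standard_normal((d, d))
A = M @ M.T + d * np.eye(d)      # symmetric positive definite
A_inv = np.linalg.inv(A)

alpha = 0.5
A0_inv = alpha * np.eye(d)       # symmetric prior mean
W = np.eye(d) + A_inv            # symmetric positive definite W

Y = rng.standard_normal((d, m))  # rank m with probability one
S = A_inv @ Y                    # noise-free observations S = A^{-1} Y

G = np.linalg.inv(Y.T @ W @ Y)
Delta = S - A0_inv @ Y
WYG = W @ Y @ G
# Assumed form of the symmetric posterior mean (see the caveat above).
Am_inv = A0_inv + Delta @ WYG.T + WYG @ Delta.T - WYG @ (Y.T @ Delta) @ WYG.T
```

The consistency check relies on \(Y^\top \varDelta \) being symmetric, which holds here because both \(A\) and \(A_0^{-1}\) are symmetric.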

Remark 22

Since \(A_0^{-1}\) is symmetric and the symmetric prior places mass only on symmetric matrices, the posterior mean \(A_m^{-1}\) is also symmetric.

Appendix B: Proofs

B.1 Proposition 6

Proof of Proposition 6

Let \(H = A^{-1}\) and let \(A_0^{-1} = H_0\). First note that by right-multiplying the information in Eq. (6) by H:

Now the implied posterior on \(\overrightarrow{H}\) can be computed using the standard laws of Gaussian conditioning:

Let \(\varOmega _0 = \varSigma _0 \otimes W_0\) and let \(P = Y_m^\top \otimes I\). Then,

Now note that

where the second line uses Eq. (K2) and the third uses Eq. (K4). Thus,

where the second line again uses Eq. (K2) and Eq. (K4), while the third line uses Eq. (K3). We conclude that

From these expressions, it is straightforward to simplify the expressions for \(\overrightarrow{H_m}\):

where the last line follows from Eq. (K1). For \(\varOmega _m\):

where the last line follows from application of Eq. (K5).

It remains to project the posterior into \(\mathbb {R}^d\) by performing the matrix-vector product \(H\varvec{b}\).

Thus, the implied posterior is \(\varvec{x} \sim {\mathcal {N}}(\bar{\varvec{x}}_m, {\bar{\varSigma }}_m)\), with

where in the last line we have used that \(H_0 \varvec{b} = \varvec{x}_0\) and that \(Y_m = A^\top S_m\). Furthermore,

where in the second line we have used Eq. (K2) and the fact that \(\varvec{b}^\top W_0 \varvec{b}\) is a scalar, while in the third line we have used that \(\varvec{b}^\top W_0 \varvec{b} = 1\) and that \(Y_m = A^\top S_m\).

Note that \(\varvec{x}_m = \bar{\varvec{x}}_m\) and \(\varSigma _m = {\bar{\varSigma }}_m\), as defined in Cockayne et al. (2018). Thus, the proof is complete. \(\square \)

B.2 Proposition 16

Proof of Proposition 16

Denote by \(\varvec{x}_i^{CG}\) the conjugate gradient estimate in iteration i and by \(\varvec{p}_i\) the search direction in that iteration. From one iteration to the next, the update to the solution can be written as (Nocedal and Wright 1999, p. 108)

Comparing this update to lines 7 to 10 in Algorithm 1, it is sufficient to show that \(\varvec{d}_i\propto \varvec{p}_i\) which follows from Lemma 23. \(\square \)

Lemma 23

Assume that CG does not terminate before d iterations. Using the prior of Proposition 16 in Algorithm 1, the directions \(\varvec{d}_i\) are scaled conjugate gradient search directions, i.e.

where \(\varvec{p}_{i}^{CG}\) is the CG search direction in iteration i and \(\gamma _i\in \mathbb {R}\setminus \{0\}\).

Proof

The proof proceeds by induction. Throughout we will suppress the superscript CG on the CG search directions, i.e. \(\varvec{p}_i^\text {CG} = \varvec{p}_i\). For \(i=1\), \(A^{-\!1}_{i-1}=\alpha I\) by assumption and therefore \(\varvec{d}_i=\alpha \varvec{r}_0\) which is the first CG search direction scaled by \(\gamma _1=\alpha \ne 0\).

For the inductive step, suppose that the search directions \(\varvec{s}_1, ..., \varvec{s}_{i-1}\) are scaled CG directions and that the vectors \(\varvec{x}_1, \dots , \varvec{x}_{i-1}\) are the same as the first \(i-1\) solution estimates produced by CG. We will prove that \(\varvec{s}_i\) is the ith CG search direction, and that \(\varvec{x}_i\) is the ith solution estimate from CG. Lemma 25 states that \(\varvec{d}_i\) can be written as

where \(\nu _j \in \mathbb {R}, j=1,\dots ,i\). Under the prior, the posterior mean \(A^{-\!1}_i\) is always symmetric as stated in Remark 22. This allows application of Lemma 24, so that \(\{\varvec{s}_1, \dots , \varvec{s}_{i-1}, \varvec{d}_i \}\) is an A-conjugate set. Thus, we have, for \(\ell <i\):

Now note that

This follows from line 10 of Algorithm 1, from which it is clear that \(\varvec{y}_\ell =\varvec{r}_\ell - \varvec{r}_{\ell -1}\). Recall that the CG residuals \(\varvec{r}_j\) are orthogonal (Nocedal and Wright 1999, p. 109) and that, by the inductive assumption, Algorithm 1 is equivalent to CG up to iteration \(i-1\). Thus, for \(\ell < i-1\) we have that

where the second line is from application of the first line in Eq. (K25). However, \(A\) is positive definite and by assumption the algorithm has not converged, so \(\varvec{d}_\ell \ne \varvec{0}\). Furthermore, clearly \(\varvec{s}_\ell ^{\top }A\varvec{s}_\ell \ne 0\). Hence, we must have that

Equation (K24) thus simplifies to

Now, again by Lemma 24, \(\varvec{d}_i\) must be conjugate to \(\varvec{s}_{i-1}\) which implies \(\nu _i\ne 0\). Pre-multiplying Eq. (K26) by \(\varvec{s}_{i-1}^{\top }A\) gives

Thus, \(\varvec{d}_i\) can be written as

where the second line again applies the inductive assumption that \(\varvec{d}_{i-1}\) and \(\varvec{s}_{i-1}\) are proportional to the CG search direction \(\varvec{p}_{i-1}\), noting that the proportionality constants on numerator and denominator cancel. The term inside the brackets is precisely the ith CG search direction. This completes the result. \(\square \)

Lemma 24

If the belief over \(A^{-\!1}_m\) is symmetric for all \(m=0,\dots ,d\) and \(A\) is symmetric and positive definite, then Algorithm 1 produces \(A\)-conjugate directions.

Proof

The proof is by induction. Note that the case \(i = 1\) is irrelevant since a set consisting of one element is trivially A-conjugate. On many occasions, the proof relies on the consistency of the MBI belief, i.e. \(A^{-\!1}_i \varvec{z}_k=\varvec{d}_k\) for \(k\le i\) and by symmetry \(\varvec{z}_k^{\top }A^{-\!1}_i=\varvec{d}_k^{\top }\). Thus, for the base case \(i=2\) we have:

where the second line is by line 10 of Algorithm 1. Now recall that \(\alpha _1=-\nicefrac {\varvec{d}_1^{\top }\varvec{r}_0}{\varvec{d}_1^{\top }A\varvec{d}_1}\) to give:

Here, the symmetry of the estimator \(A^{-\!1}_i\) is used in Eq. (K28). For the inductive step, assume \(\{\varvec{d}_0,\dots ,\varvec{d}_{i-1}\}\) are pairwise \(A\)-conjugate. For any \(k<i\), we have:

where the second line follows from the fact that \(\varvec{r}_i = \varvec{r}_{i-1} + \varvec{y}_i\). Thus, we have:

Now, applying the conjugacy from the inductive assumption:

where the second line rearranges line 6 of the algorithm to obtain \(\alpha _i \varvec{d}_i^{\top }\varvec{z}_i = -\varvec{d}_i^{\top }\varvec{r}_{i-1}\). The third line again uses that \(\varvec{r}_i = \varvec{r}_{i-1} + \varvec{y}_i\), while the fourth line is from the assumed conjugacy. \(\square \)
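
The two classical facts used in these proofs, \(A\)-conjugacy of the search directions and mutual orthogonality of the residuals, can be observed numerically. The sketch below uses textbook CG with the residual convention \(\varvec{r} = A\varvec{x} - \varvec{b}\), not Algorithm 1 of this paper; the function name `conjugate_gradient` and the test matrix are illustrative choices.

```python
import numpy as np


def conjugate_gradient(A, b, x0, n_iter):
    """Textbook CG; returns the iterate, search directions and residuals."""
    x = x0.copy()
    r = A @ x - b  # residual convention r = A x - b
    p = -r
    directions, residuals = [], [r.copy()]
    for _ in range(n_iter):
        Ap = A @ p
        step = (r @ r) / (p @ Ap)
        x = x + step * p
        r_new = r + step * Ap
        beta = (r_new @ r_new) / (r @ r)
        directions.append(p.copy())
        residuals.append(r_new.copy())
        p = -r_new + beta * p
        r = r_new
    return x, np.array(directions), np.array(residuals)


rng = np.random.default_rng(6)
d, n_iter = 15, 6
M = rng.standard_normal((d, d))
A = M @ M.T + d * np.eye(d)  # symmetric positive definite
b = rng.standard_normal(d)
x, P, R = conjugate_gradient(A, b, np.zeros(d), n_iter)

conj = P @ A @ P.T  # off-diagonal entries should vanish (A-conjugacy)
gram = R @ R.T      # off-diagonal entries should vanish (orthogonality)
```

In floating point both properties degrade gradually as the iteration count grows, so the check is meaningful only for a modest number of iterations on a well-conditioned matrix.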

Lemma 25

Under the prior in Proposition 16 and given scaled CG search directions \(\varvec{p}_1, ..., \varvec{p}_i\), it holds that \(A^{-\!1}_{i}\varvec{r}_i\in {\text {span}}\{\varvec{p}_1, ..., \varvec{p}_{i}, \varvec{r}_{i}\}.\)

Proof

Recall first that under the prior in Proposition 16, \(A^{-\!1}_0 = \alpha I\). Then, by inspection of Eq. (21) we have \(A^{-\!1}_i \varvec{r}_i \in {\mathcal {S}}\) where

By choice of \(W=\beta I+\gamma A^{-\!1}\), \({\mathcal {S}}={\text {span}}\{\varvec{r}_{i}, \varvec{p}_1, ..., \varvec{p}_{i}, \varvec{y}_1, ..., \varvec{y}_i\}\). From line 10 of Algorithm 1, \(\varvec{y}_i = \varvec{r}_i - \varvec{r}_{i-1}\) and therefore \({\mathcal {S}}={\text {span}}\{\varvec{r}_1, ..., \varvec{r}_{i}, \varvec{p}_1, ..., \varvec{p}_{i}\}\). By Theorem 5.3 in Nocedal and Wright (1999, p. 109), the spans of the conjugate gradient residuals and search directions coincide. Therefore, \({\mathcal {S}}\subseteq {\text {span}}\{\varvec{r}_i, \varvec{p}_1, ..., \varvec{p}_i\}\). \(\square \)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Bartels, S., Cockayne, J., Ipsen, I.C.F. et al. Probabilistic linear solvers: a unifying view. Stat Comput 29, 1249–1263 (2019). https://doi.org/10.1007/s11222-019-09897-7

DOI: https://doi.org/10.1007/s11222-019-09897-7