Abstract

We provide a method for fitting monotone polynomials to data with both fixed and random effects. In pursuit of such a method, a novel approach to least squares regression is proposed for models with functional constraints. The new method is able to fit models with constrained parameter spaces that are closed and convex, and is used in conjunction with an expectation–maximisation algorithm to fit monotone polynomials with mixed effects. The resulting mixed effects models have constrained mean curves and have the flexibility to include either unconstrained or constrained subject-specific curves. This new methodology is demonstrated on real-world repeated measures data with an application from sleep science. Code to fit the methods described in this paper is available online.


Notes

1. After multiple M-steps there is dependence through the use of updated parameters in the E-step of the EM algorithm.

2. Box constraints occur when \(T(\varvec{\beta }) = \left\{ (u_{1},u_{2},\ldots ,u_{rg}) \in {\mathbb {R}}^{rg}: a_{i,1} \le u_{i} \le a_{i,2}, i = 1,2,\ldots ,rg \right\} \) where the \(a_{i,j}\)’s are constants.

3. For a detailed discussion on the degrees of freedom in a mixed effects model, see Chapter 2.2 of Hodges (2013).

4. Appendix E contains details on the envelope theorem.

5. The full derivative is used here because \(c(\varvec{\beta })\) is a multivariate composite function where each component is dependent on \(\varvec{\beta }\). See Appendix E for more details.

6. In a review, Schmidt (2004) attributes the origins of the envelope theorem to Auspitz and Lieben (1889, in German) and acknowledges that these theorems are not well known outside of economics and sensitivity analysis. An early English-language publication containing the envelope theorem is Samuelson (1947); the theorem was further extended by Afriat (1971) and others.

References

Afriat, S.: Theory of maxima and the method of Lagrange. SIAM J. Appl. Math. 20(3), 343–357 (1971)

Auspitz, R., Lieben, R.: Untersuchungen über die Theorie des Preises. Duncker & Humblot, Berlin (1889)

Barlow, R.E., Brunk, H.D.: The isotonic regression problem and its dual. J. Am. Stat. Assoc. 67(337), 140–147 (1972)

Barlow, R.E., Bartholomew, D.J., Bremner, J., Brunk, H.D.: Statistical Inference Under Order Restrictions: The Theory and Application of Isotonic Regression. Wiley, New York (1972)

Bates, D., Mächler, M., Bolker, B., Walker, S.: Fitting linear mixed-effects models using lme4. J. Stat. Softw. 67(1), 1–48 (2015)

Belenky, G., Wesensten, N.J., Thorne, D.R., Thomas, M.L., Sing, H.C., Redmond, D.P., Russo, M.B., Balkin, T.J.: Patterns of performance degradation and restoration during sleep restriction and subsequent recovery: a sleep dose-response study. J. Sleep Res. 12(1), 1–12 (2003)

Booth, J.G., Hobert, J.P.: Maximizing generalized linear mixed model likelihoods with an automated Monte Carlo EM algorithm. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 61(1), 265–285 (1999)

Bradley, R.A., Srivastava, S.S.: Correlation in polynomial regression. Am. Stat. 33(1), 11–14 (1979)

Cassioli, A., Di Lorenzo, D., Sciandrone, M.: On the convergence of inexact block coordinate descent methods for constrained optimization. Eur. J. Oper. Res. 231(2), 274–281 (2013)

Chen, J., Zhang, D., Davidian, M.: A Monte Carlo EM algorithm for generalized linear mixed models with flexible random effects distribution. Biostatistics 3(3), 347–360 (2002)

Damien, P., Walker, S.G.: Sampling truncated normal, beta, and gamma densities. J. Comput. Graph. Stat. 10(2), 206–215 (2001)

De Boor, C.: A Practical Guide to Splines. Springer, New York (1978)

Dette, H., Neumeyer, N., Pilz, K.F., et al.: A simple nonparametric estimator of a strictly monotone regression function. Bernoulli 12(3), 469–490 (2006)

Dierckx, I.P.: An algorithm for cubic spline fitting with convexity constraints. Computing 24(4), 349–371 (1980)

Elphinstone, C.D.: A target distribution model for nonparametric density estimation. Commun. Stat. Theory Methods 12(2), 161–198 (1983)

Emerson, P.L.: Numerical construction of orthogonal polynomials from a general recurrence formula. Biometrics 24(3), 695–701 (1968)

Forsythe, G.E.: Generation and use of orthogonal polynomials for data-fitting with a digital computer. J. Soc. Ind. Appl. Math. 5(2), 74–88 (1957)

Friedman, J., Tibshirani, R.: The monotone smoothing of scatterplots. Technometrics 26(3), 243–250 (1984)

Hastings, W.K.: Monte Carlo sampling methods using Markov chains and their applications. Biometrika 57(1), 97–109 (1970)

Hawkins, D.M.: Fitting monotonic polynomials to data. Comput. Stat. 9(3), 233–247 (1994)

Hazelton, M.L., Turlach, B.A.: Semiparametric regression with shape-constrained penalized splines. Comput. Stat. Data Anal. 55(10), 2871–2879 (2011)

Hodges, J.S.: Richly Parameterized Linear Models: Additive, Time Series, and Spatial Models Using Random Effects. CRC Press, Boca Raton (2013)

Holland, P.W., Welsch, R.E.: Robust regression using iteratively reweighted least-squares. Commun. Stat. Theory Methods 6(9), 813–827 (1977)

Hornung, U.: Monotone spline interpolation. In: Collatz, L., Meinardus, G., Werner, H. (eds.) Numerische Methoden der Approximationstheorie, pp. 172–191. Birkhäuser, Basel (1978)

Horrace, W.C.: Some results on the multivariate truncated normal distribution. J. Multivar. Anal. 94(1), 209–221 (2005)

Kelly, C., Rice, J.: Monotone smoothing with application to dose-response curves and the assessment of synergism. Biometrics 46(4), 1071–1085 (1990)

Laird, N., Lange, N., Stram, D.: Maximum likelihood computations with repeated measures: application of the EM algorithm. J. Am. Stat. Assoc. 82(397), 97–105 (1987)

Lee, L.F.: On the first and second moments of the truncated multi-normal distribution and a simple estimator. Econ. Lett. 3(2), 165–169 (1979)

Leppard, P., Tallis, G.M.: Algorithm AS 249: evaluation of the mean and covariance of the truncated multinormal distribution. J. R. Stat. Soc. Ser. C (Appl. Stat.) 38(3), 543–553 (1989)

Levine, R.A., Casella, G.: Implementations of the Monte Carlo EM algorithm. J. Comput. Graph. Stat. 10(3), 422–439 (2001)

Lindstrom, M.J., Bates, D.M.: Newton-Raphson and EM algorithms for linear mixed-effects models for repeated-measures data. J. Am. Stat. Assoc. 83(404), 1014–1022 (1988)

Meng, X.L., Rubin, D.B.: Maximum likelihood estimation via the ECM algorithm: a general framework. Biometrika 80(2), 267–278 (1993)

Metropolis, N., Rosenbluth, A.W., Rosenbluth, M.N., Teller, A.H., Teller, E.: Equation of state calculations by fast computing machines. J. Chem. Phys. 21(6), 1087–1092 (1953)

Milgrom, P., Segal, I.: Envelope theorems for arbitrary choice sets. Econometrica 70(2), 583–601 (2002)

Murray, K., Müller, S., Turlach, B.A.: Revisiting fitting monotone polynomials to data. Comput. Stat. 28(5), 1989–2005 (2013)

Murray, K., Müller, S., Turlach, B.A.: Fast and flexible methods for monotone polynomial fitting. J. Stat. Comput. Simul. 86(15), 2946–2966 (2016)

Narula, S.C.: Orthogonal polynomial regression. Int. Stat. Rev. 47(1), 31–36 (1979)

Pinheiro, J.C., Bates, D.M.: Unconstrained parametrizations for variance-covariance matrices. Stat. Comput. 6(3), 289–296 (1996)

R Core Team: R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.r-project.org (2016)

Ramsay, J.O.: Estimating smooth monotone functions. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 60(2), 365–375 (1998)

Samuelson, P.A.: Foundations of Economic Analysis. Harvard University Press, Cambridge (1947)

Schmidt, T.: Really pushing the envelope: early use of the envelope theorem by Auspitz and Lieben. Hist. Polit. Econ. 36(1), 103–129 (2004)

Tallis, G.M.: The moment generating function of the truncated multi-normal distribution. J. R. Stat. Soc. Ser. B (Methodol.) 23(1), 223–229 (1961)

Tuddenham, R.D., Snyder, M.M.: Physical growth of California boys and girls from birth to eighteen years. Publ. Child Dev. Univ. Calif. Berkeley 1(2), 183–364 (1954)

Turlach, B.A.: Shape constrained smoothing using smoothing splines. Comput. Stat. 20(1), 81–104 (2005)

Turlach, B.A., Murray, K.: MonoPoly: Functions to Fit Monotone Polynomials. http://cran.r-project.org/package=MonoPoly (2016). R package version 0.3-8

Utreras, F.I.: Convergence rates for monotone cubic spline interpolation. J. Approx. Theory 36(1), 86–90 (1982)

Utreras, F.I.: Smoothing noisy data under monotonicity constraints existence, characterization and convergence rates. Numer. Math. 47(4), 611–625 (1985)

Wilhelm, S., Manjunath, B.G.: tmvtnorm: Truncated Multivariate Normal and Student t Distribution. http://cran.r-project.org/package=tmvtnorm (2015). R package version 1.4-10

Wong, Y.: An application of orthogonalization process to the theory of least squares. Ann. Math. Stat. 6(2), 53–75 (1935)

Zadrozny, B., Elkan, C.: Transforming classifier scores into accurate multiclass probability estimates. In: Proceedings of the Eighth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 694–699. ACM (2002)

Zimmerman, D.L., Núñez-Antón, V.: Parametric modelling of growth curve data: an overview. Test 10(1), 1–73 (2001)


Appendices

Appendix A: Penalised constrained orthogonal least squares regression

A generalisation of Algorithm 2 can be useful for constrained penalised regression models and within a mixed effects model framework. Specifically, in mixed effects models the \({\hat{\beta }}_{i}^{U}\) are not available in closed form. For notational simplicity, we discuss the necessary changes to Algorithm 2 within a penalised regression framework.

For our purposes, the penalised regression takes the form

where \(\eta (\varvec{\beta })\) is a continuously differentiable penalty function. Let \(\text {RSS}^{*}(\varvec{\beta }) = \text {RSS}(\varvec{\beta }) + \eta (\varvec{\beta })\) be the function to be minimised. The partial derivative of \(\text {RSS}^{*}\) with respect to \(\beta _{i}\) is

\[\frac{\partial \text {RSS}^{*}(\varvec{\beta })}{\partial \beta _{i}} = \frac{\partial \text {RSS}(\varvec{\beta })}{\partial \beta _{i}} + \frac{\partial \eta (\varvec{\beta })}{\partial \beta _{i}},\]

for which we seek the roots, i.e. the value of \(\beta _{i}\) for which

\[\frac{\partial \text {RSS}^{*}(\varvec{\beta })}{\partial \beta _{i}} = 0, \tag{26}\]

for each of the \(\beta _{i}\). If the penalty term, \(\eta (\varvec{\beta })\), prevents a closed-form solution to (26), we must adjust Algorithm 2 to handle this additional complexity. In essence, this adjustment can be implemented by taking a Newton–Raphson (NR) type step before conducting the line search. Because this step is inexact, more iterations are needed for convergence. This algorithm is a simple but effective extension of COLS and is detailed in Algorithm 3. As an alternative, the NR algorithm could be run before conducting any line search. However, Algorithm 3 does not require the unconstrained solution, so this may be unnecessary.

The Newton–Raphson step uses the function h(b), see (27) for example, which may be approximated depending on how difficult it is to find the second derivative, \(\partial ^{2}\text {RSS}^{*}(\varvec{\beta })/\partial \beta _{i}^{2}\). If the second derivative is available, then h(b) can take the form

\[h(b) = b - \frac{\partial \text {RSS}^{*}(\varvec{\beta })/\partial \beta _{i}}{\partial ^{2}\text {RSS}^{*}(\varvec{\beta })/\partial \beta _{i}^{2}}\bigg |_{\beta _{i} = b}, \tag{27}\]

where \(\partial ^{2}\text {RSS}^{*}(\varvec{\beta })/\partial \beta _{i}^{2} = 2 + \partial ^{2}\eta (\varvec{\beta })/\partial \beta _{i}^{2}\) when \({\varvec{X}}\) is an orthonormal design matrix. If \(\partial ^{2}\eta (\varvec{\beta })/\partial \beta _{i}^{2}\) is difficult to evaluate, a suitable approximation can be made. For example, it may be known that \(\partial ^{2}\eta (\varvec{\beta })/\partial \beta _{i}^{2}\) is small compared to 2, so we may express h(b) in a simplified form involving a step-size parameter e, a positive number used to shrink the step size so that we do not overshoot the optimal value or get stuck on a boundary. A quasi-NR step is also possible. In testing, values of e between 1 and 2 performed best. Reducing the step size was also effective for Algorithm 2. Henceforth, we refer to the optimisation procedure in Algorithm 3 as penalised constrained orthogonal least squares (pCOLS).
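To make the NR-type coordinate update concrete, the following Python sketch applies it to a penalised least squares problem with an orthonormal design, for which \(\text {RSS}(\varvec{\beta }) = \Vert \varvec{\beta } - {\hat{\varvec{\beta }}}^{U}\Vert ^{2}\) up to a constant. It is a minimal illustration under stated assumptions, not Algorithm 3: the quartic penalty, the function names and the damped step that divides the gradient by 2e are our own hypothetical choices, and the constrained line search of COLS is omitted entirely.

```python
import numpy as np


def grad_rss_star(beta, beta_u, grad_eta):
    """Gradient of RSS*(beta) = RSS(beta) + eta(beta) for an orthonormal design.

    With X^T X = I, RSS(beta) = ||beta - beta_u||^2 + const, where beta_u = X^T Y.
    """
    return 2.0 * (beta - beta_u) + grad_eta(beta)


def coordinate_nr(beta_u, grad_eta, hess_eta_diag=None, e=1.5,
                  max_sweeps=500, tol=1e-12):
    """Cyclic coordinate-wise NR steps on RSS*(beta); no constraints imposed.

    If hess_eta_diag is given, the exact curvature 2 + d^2 eta / d beta_i^2 is
    used. Otherwise the curvature is replaced by the constant 2 * e, a
    hypothetical damped approximation used here for illustration only.
    """
    beta = np.array(beta_u, dtype=float)
    for _ in range(max_sweeps):
        largest_step = 0.0
        for i in range(beta.size):
            g_i = grad_rss_star(beta, beta_u, grad_eta)[i]
            if hess_eta_diag is not None:
                curvature = 2.0 + hess_eta_diag(beta)[i]
            else:
                curvature = 2.0 * e
            step = g_i / curvature
            beta[i] -= step
            largest_step = max(largest_step, abs(step))
        if largest_step < tol:
            break
    return beta


# Illustrative smooth penalty eta(beta) = lam * sum(beta_i^4).
lam = 0.1
grad_eta = lambda b: 4.0 * lam * b ** 3
hess_eta_diag = lambda b: 12.0 * lam * b ** 2

beta_u = np.array([1.0, -0.5, 0.3])
beta_exact_nr = coordinate_nr(beta_u, grad_eta, hess_eta_diag)
beta_damped = coordinate_nr(beta_u, grad_eta, e=1.5)
print(beta_exact_nr)
print(beta_damped)
```

Both variants converge to the same root of (26) here; the damped version simply takes more sweeps, which mirrors the trade-off described above.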

Appendix B: Conditional truncated normal distribution

The first- and second-order moments of the conditional distributions \({\varvec{U}}\mid {\varvec{Y}}\) and \({\varvec{U}}_{T}\mid {\varvec{Y}}\) are needed for use in the EM algorithm, for the unconstrained and constrained random effects, respectively. Equation (9) gives the mean and variance in the unconstrained scenario. Finding the moments when the random effects are truncated is a non-trivial problem. We consider the general case of an arbitrary number of random effects, although generally \(rg < d \ll n\). We start by finding the joint distribution of \({\varvec{Y}}\) and \({\varvec{U}}_{T}\), denoted here by \(f_{{\varvec{Y}},{\varvec{U}}_{T}}\), where the model for \({\varvec{Y}}\) and \({\varvec{U}}_{T}\) is as in Eq. (14) and \(T(\varvec{\beta }) \subset {\mathbb {R}}^{rg}\). We note that \({\varvec{U}}_{T}\) may be defined using the underlying normal distribution of \({\varvec{U}}\) by restricting \({\varvec{U}}\) to \(T(\varvec{\beta })\), and we write \(f_{{\varvec{U}}_{T}}\) for the density of \({{\varvec{U}}}_{T}\). Let \({\varvec{Y}}\mid {\varvec{U}}\) and \({\varvec{U}}\) have the densities \(f_{{\varvec{Y}}\mid {\varvec{U}}}\) and \(f_{{\varvec{U}}}\), respectively. The density \(f_{{\varvec{Y}},{\varvec{U}}_{T}}\) of \(({\varvec{Y}},{\varvec{U}}_{T})\) can be written as the product of the density of \({\varvec{Y}}\) given \({\varvec{U}}_{T}\) with the density of \({\varvec{U}}_{T}\) as

\[f_{{\varvec{Y}},{\varvec{U}}_{T}}({\varvec{Y}},{\varvec{U}}) = f_{{\varvec{Y}}\mid {\varvec{U}}_{T}}({\varvec{Y}}\mid {\varvec{U}})\, f_{{\varvec{U}}_{T}}({\varvec{U}}), \tag{29}\]

with \({\varvec{U}}\in T(\varvec{\beta })\). We may rewrite the density \(f_{{\varvec{U}}_{T}}\), the truncated normal distribution, in terms of the non-truncated density of \({\varvec{U}}\) by

\[f_{{\varvec{U}}_{T}}({\varvec{U}}) = \frac{f_{{\varvec{U}}}({\varvec{U}})}{\int _{T(\varvec{\beta })} f_{{\varvec{U}}}({\varvec{W}})\,\mathrm {d}{\varvec{W}}}, \tag{30}\]

with \({\varvec{U}}\in T(\varvec{\beta })\) and where \({\varvec{W}}\) is a dummy variable for the rg-dimensional integration. Substituting Eq. (30) into (29) allows the joint density to be written as

\[f_{{\varvec{Y}},{\varvec{U}}_{T}}({\varvec{Y}},{\varvec{U}}) = \frac{f_{{\varvec{Y}}\mid {\varvec{U}}_{T}}({\varvec{Y}}\mid {\varvec{U}})\, f_{{\varvec{U}}}({\varvec{U}})}{\int _{T(\varvec{\beta })} f_{{\varvec{U}}}({\varvec{W}})\,\mathrm {d}{\varvec{W}}}, \tag{31}\]

with \({\varvec{U}}\in T(\varvec{\beta })\). Consider the functional form of \(f_{{\varvec{Y}}\mid {\varvec{U}}_{T}}\). It is a normal density without truncation, since the random effects are given. Therefore, it has the same distribution (and density) as \({\varvec{Y}}\mid {\varvec{U}}\), where the random effects are not truncated. Using this identity, we may rewrite Eq. (31) as

\[f_{{\varvec{Y}},{\varvec{U}}_{T}}({\varvec{Y}},{\varvec{U}}) = \frac{f_{{\varvec{Y}}\mid {\varvec{U}}}({\varvec{Y}}\mid {\varvec{U}})\, f_{{\varvec{U}}}({\varvec{U}})}{\int _{T(\varvec{\beta })} f_{{\varvec{U}}}({\varvec{W}})\,\mathrm {d}{\varvec{W}}} = \frac{f_{{\varvec{Y}},{\varvec{U}}}({\varvec{Y}},{\varvec{U}})}{\int _{T(\varvec{\beta })} f_{{\varvec{U}}}({\varvec{W}})\,\mathrm {d}{\varvec{W}}}, \tag{32}\]

hence the joint distribution of \({\varvec{Y}}\) and \({\varvec{U}}_{T}\) is a truncated normal distribution, with \({\varvec{U}}\in T(\varvec{\beta })\). The second step in Eq. (32) follows from recognising the product \(f_{{\varvec{Y}}\mid {\varvec{U}}}({\varvec{Y}}\mid {\varvec{U}})\, f_{{\varvec{U}}}({\varvec{U}})\) as the unconstrained joint density, \(f_{{\varvec{Y}},{\varvec{U}}}\). Note that in \(f_{{\varvec{Y}},{\varvec{U}}_{T}}\) no truncation is imposed on \({\varvec{Y}}\), nor potentially on some elements of \({\varvec{U}}\). At a minimum, the random intercept has no effect on the monotonicity and so can be integrated out of the denominator of Eq. (32).

Conditioning \(f_{{\varvec{Y}},{\varvec{U}}_{T}}\) in Eq. (32) on \({\varvec{Y}}\), the density \(f_{{\varvec{U}}_{T}\mid {\varvec{Y}}}({\varvec{U}}\mid {\varvec{Y}})\) (written to emphasise conditioning on \({\varvec{Y}}\)) can be described up to proportionality as

\[f_{{\varvec{U}}_{T}\mid {\varvec{Y}}}({\varvec{U}}\mid {\varvec{Y}}) \propto f_{{\varvec{Y}},{\varvec{U}}_{T}}({\varvec{Y}},{\varvec{U}}) \propto f_{{\varvec{U}}\mid {\varvec{Y}}}({\varvec{U}}\mid {\varvec{Y}}), \tag{34}\]

since both \(f_{{\varvec{Y}},{\varvec{U}}_{T}}({\varvec{Y}},{\varvec{U}})\) and \(f_{{\varvec{U}}\mid {\varvec{Y}}}({\varvec{U}}\mid {\varvec{Y}})\) are proportional to \(f_{{\varvec{Y}},{\varvec{U}}}({\varvec{Y}},{\varvec{U}})\) for fixed \({\varvec{Y}}\). Hence, \(f_{{\varvec{U}}_{T}\mid {\varvec{Y}}}\) can be written as

\[f_{{\varvec{U}}_{T}\mid {\varvec{Y}}}({\varvec{U}}\mid {\varvec{Y}}) = \frac{f_{{\varvec{U}}\mid {\varvec{Y}}}({\varvec{U}}\mid {\varvec{Y}})}{\int _{T(\varvec{\beta })} f_{{\varvec{U}}\mid {\varvec{Y}}}({\varvec{W}}\mid {\varvec{Y}})\,\mathrm {d}{\varvec{W}}}, \tag{35}\]

by re-normalising the density in Eq. (34), for \({\varvec{U}}\in T(\varvec{\beta })\). This indicates that the conditional distribution of \({\varvec{U}}_{T}\mid {\varvec{Y}}\) is also a truncated multivariate normal distribution, where the distribution before truncation has the same properties as in the unconstrained (non-truncated) case, \({\varvec{U}}\mid {\varvec{Y}}\). A similar result for a conditional point-truncated multivariate normal distribution appears in Horrace (2005).
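The renormalisation result above can be checked numerically in the simplest case of one scalar random effect. The following Python sketch assumes a toy model that does not come from the paper: \(u \sim N(0,1)\) truncated to \(u \ge a\), and \(y \mid u \sim N(u,1)\). The posterior moments obtained directly from the truncated prior are compared with the moments of the unconstrained posterior \(u \mid y \sim N(y/2, 1/2)\) truncated to the same region, using `scipy.stats.truncnorm`.

```python
import numpy as np
from scipy import integrate, stats

y, a = 0.8, 0.5  # one observation and the lower truncation point for u


def unnormalised_posterior(u):
    # N(0, 1) prior density (truncation enforced by the integration limits)
    # multiplied by the likelihood N(y; u, 1).
    return stats.norm.pdf(u) * stats.norm.pdf(y, loc=u, scale=1.0)


# Direct route: normalise prior x likelihood over the truncation region u >= a.
z, _ = integrate.quad(unnormalised_posterior, a, np.inf)
m1, _ = integrate.quad(lambda u: u * unnormalised_posterior(u), a, np.inf)
m2, _ = integrate.quad(lambda u: u ** 2 * unnormalised_posterior(u), a, np.inf)
direct_mean = m1 / z
direct_var = m2 / z - direct_mean ** 2

# Route via the result above: truncate the unconstrained posterior N(y/2, 1/2).
loc, scale = y / 2.0, np.sqrt(0.5)
truncated = stats.truncnorm((a - loc) / scale, np.inf, loc=loc, scale=scale)

print(direct_mean, truncated.mean())
print(direct_var, truncated.var())
```

The two routes agree to numerical precision, which is exactly the statement that conditioning a truncated prior on the data equals truncating the unconstrained conditional distribution.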

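The well-known result from Eq. (9) is that \({\varvec{U}}\mid {\varvec{Y}} \sim N\{\mathrm {E}({\varvec{U}}\mid {\varvec{Y}}), \mathrm {Var}({\varvec{U}}\mid {\varvec{Y}})\}\), with the mean and variance as given there. Using the corresponding normal density in place of \(f_{{\varvec{U}}\mid {\varvec{Y}}}\) in Eq. (35), we obtain the general constrained conditional density of the random effects as

\[f_{{\varvec{U}}_{T}\mid {\varvec{Y}}}({\varvec{U}}\mid {\varvec{Y}}) = \frac{\phi _{rg}\{{\varvec{U}}; \mathrm {E}({\varvec{U}}\mid {\varvec{Y}}), \mathrm {Var}({\varvec{U}}\mid {\varvec{Y}})\}}{\int _{T(\varvec{\beta })} \phi _{rg}\{{\varvec{W}}; \mathrm {E}({\varvec{U}}\mid {\varvec{Y}}), \mathrm {Var}({\varvec{U}}\mid {\varvec{Y}})\}\,\mathrm {d}{\varvec{W}}}, \quad {\varvec{U}}\in T(\varvec{\beta }),\]

where \(\phi _{rg}(\cdot \,;\varvec{\mu },\varvec{\Sigma })\) denotes the rg-variate normal density with mean \(\varvec{\mu }\) and variance \(\varvec{\Sigma }\). The evaluation of both this density and the moments of \({\varvec{U}}_{T}\mid {\varvec{Y}}\) is hindered by the form of the truncation \(T(\varvec{\beta })\). In general, the integrals \(\int _{T(\varvec{\beta })} f_{{\varvec{U}}}({\varvec{W}})\,\mathrm {d}{\varvec{W}}\) and \(\int _{T(\varvec{\beta })} f_{{\varvec{U}}\mid {\varvec{Y}}}({\varvec{W}}\mid {\varvec{Y}})\,\mathrm {d}{\varvec{W}}\) are rg-dimensional integrals with dependent components. The independence of individuals helps, but the problem remains difficult because of the complex nature of \(T(\varvec{\beta })\).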

If \(T(\varvec{\beta })\) could be defined by a set of box constraints (Footnote 2), the analytical results of Tallis (1961) and Lee (1979) for the moments of point-truncated multivariate normal densities, together with their numerical calculation (Leppard and Tallis 1989), could be used. As discussed in Sect. 4, this occurs only for \(r = 2\). In this case, the R package tmvtnorm (Wilhelm and Manjunath 2015) can provide the truncated distribution’s mean and variance based on the unconstrained distribution of \({\varvec{U}}\mid {\varvec{Y}}\), with mean \(\mathrm {E}({\varvec{U}}\mid {\varvec{Y}})\) and variance \(\mathrm {Var}({\varvec{U}}\mid {\varvec{Y}})\).

For \(r>2\), Monte Carlo simulation is necessary. One could implement a routine similar to that of Damien and Walker (2001), replacing the indicator function for standard truncation with one that adheres to the constraints of our application. This would simulate a \(T(\varvec{\beta })\)-truncated multivariate normal distribution, from which the mean and variance could then be estimated. We outline our proposed methods in Appendices C.3 and D.4.

Appendix C: Technical details of expectation steps

C.1 Random intercepts

To complete the derivation of the expectation step for a random intercept model, we need the conditional expectation and variance of the random effects terms \({\varvec{U}}\). These expectations and variances are conditional on observing \({\varvec{Y}}\), and their calculation is simplified because the random intercepts do not affect the monotonicity of the individuals’ polynomials. Furthermore, the random effects’ variance structure is now defined by \(\varvec{\phi }_{{\varvec{H}}} = \{ \sigma ^{2}_{{\varvec{H}}} \}\), so that \({\varvec{H}}=[ \sigma ^{2}_{{\varvec{H}}} ]\) and \({\varvec{G}}= \sigma ^{2}_{{\varvec{H}}}{\varvec{I}}_{g}\). Additionally, we assume the error terms have homogeneous variance, so that \({\varvec{R}}= \sigma ^{2}_{{\varvec{R}}}{\varvec{I}}_{n}\). Therefore, from (9) we see that the conditional mean is

while the conditional variance is given by

C.2 Random slope

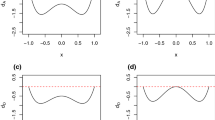

When random slopes are included in the model and we also wish to constrain the individuals’ curves, we need to impose a suitable truncation on the distribution of the random effects. The first step is to derive the form of truncation needed when two random effects are present. Define \(p(x;\varvec{\beta },u_{0,i},u_{1,i})\) as the ith individual’s orthonormal polynomial curve to be estimated in the random intercepts and slopes model. We may write this equation by extending (1) so that

where \(u_{0,i}\) and \(u_{1,i}\) are the random intercepts and slopes and the \(p_{j}\) are defined in (2). Note also that \(p_{0}(x)= \psi _{0,0}\) and \(p_{1}(x)= \psi _{1,0} + \psi _{1,1}x\), where \(\psi _{i,j}\) are elements of \(\varvec{\Psi }\). The derivative of (37) with respect to x is

whereby the curve of individual i is monotone provided that \(p^{\prime }(x;\varvec{\beta },u_{1,i})\ge 0\) for all x in the set S. When estimating the constrained random effects, we take \(\varvec{\beta }\) as fixed for a given iteration, in accordance with the E-step of the EM algorithm. With \(\varvec{\beta }\) fixed, the monotonicity of each individual’s curve is determined additively by \(u_{1,i}\) and the shape of the mean curve. To determine the necessary truncation for \(u_{1,i}\), we seek the minimum of the derivative of the individual’s curve as a function of \(u_{1,i}\). Keeping this minimum non-negative provides the correct truncation. Given (38), we see that

where the derivative of the mean curve is \(p^{\prime }(x;\varvec{\beta }) = \sum _{j=1}^{q}\beta _{j}p_{j}^{\prime }(x)\). We denote the scaled minimum of this polynomial as

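which can be calculated for a given \(\varvec{\beta }\) by applying standard root-finding routines to the derivative of \(p^{\prime }(x;\varvec{\beta })\) and comparing the values of \(p^{\prime }(x;\varvec{\beta })\) at the resulting stationary points in S with those at the boundary of S. The fixed coefficient \(\psi _{1,1}\) that appears in (40) can be expressed as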

as a result of the orthonormality of \(p_{0}(x)\) and \(p_{1}(x)\) over the set of observations \(\{x_{1},x_{2},\ldots ,x_{n}\}\). Once \(c(\varvec{\beta })\) is determined, the monotonicity of the individuals’ curves in the random intercept and slope model is guaranteed by one of the following equivalent statements

The last statement uses \(\psi _{1,1} > 0\) by its definition in (41). Hence, we have a one-sided point truncation of \(u_{1,i}\) which can be used to define the truncation region \(T(\varvec{\beta })\) as

when we have a random intercept and slope.
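Both ingredients of this truncation can be computed with standard tools. The following Python sketch assumes that S is a closed interval and that \(p^{\prime }(x;\varvec{\beta })\) is available as a polynomial in the monomial basis; the helper names are hypothetical. The minimum is found by comparing stationary points inside S with the end points, and \(\psi _{1,1}\) is obtained from a QR (Gram–Schmidt) orthonormalisation of \([1, x]\) over the observed \(x_{k}\). Assuming orthonormality with respect to \(\sum _{k} p_{j}(x_{k})p_{l}(x_{k})\), this gives \(\psi _{1,1} = \{\sum _{k}(x_{k}-{\bar{x}})^{2}\}^{-1/2} > 0\), with \({\bar{x}}\) the mean of the \(x_{k}\).

```python
import numpy as np
from numpy.polynomial import Polynomial


def min_over_interval(dp, lo, hi):
    """Minimum of the polynomial dp (e.g. p'(x; beta)) over [lo, hi].

    Candidates are the real roots of dp' inside [lo, hi] plus the two end points.
    """
    candidates = [lo, hi]
    for r in dp.deriv().roots():
        if abs(r.imag) < 1e-12 and lo <= r.real <= hi:
            candidates.append(r.real)
    values = [dp(c) for c in candidates]
    k = int(np.argmin(values))
    return values[k], candidates[k]


def psi_11(x):
    """Coefficient of x in the degree-1 orthonormal polynomial over the points x.

    Orthonormality is assumed to be with respect to sum_k p_j(x_k) p_l(x_k).
    """
    V = np.column_stack([np.ones_like(x), x])   # columns 1 and x
    _, R = np.linalg.qr(V)
    signs = np.sign(np.diag(R))                 # fix signs so that diag(R) > 0
    R = R * signs[:, None]
    # With diag(R) > 0: p_1(x) = (x - R[0, 1] / R[0, 0]) / R[1, 1].
    return 1.0 / R[1, 1]


# p'(x) = 1 - 2x + 3x^2 on S = [-1, 2]: stationary point at x = 1/3.
dp = Polynomial([1.0, -2.0, 3.0])
m, x_star = min_over_interval(dp, -1.0, 2.0)
print(m, x_star)  # about 0.6667 at x = 0.3333

x_obs = np.array([0.0, 1.0, 2.0, 3.0, 5.0, 8.0])
print(psi_11(x_obs), 1.0 / np.sqrt(np.sum((x_obs - x_obs.mean()) ** 2)))
```

If S were not an interval, the candidate set would need the boundary points of each component of S.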

Having the form of the truncation in (42), we use the result of Appendix B for \({\varvec{U}}_{T}\mid {\varvec{Y}}\), which tells us that the conditional distribution is a truncated multivariate normal distribution. Because \(T(\varvec{\beta })\) defines a point truncation, the R package tmvtnorm (Wilhelm and Manjunath 2015) can be used to find the expectation and variance of this distribution for use in the E-step.
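For \(r = 2\) the truncation acts on the slope coordinate only, so the moments are also available in closed form. As an alternative to tmvtnorm (whose interface we do not reproduce here), the following Python sketch computes the mean and covariance of a bivariate normal \((U_{0},U_{1})\) truncated to \(U_{1}\ge a\). It uses the standard one-sided truncated normal moments for \(U_{1}\) and the fact that \(U_{0}\) equals a linear function of \(U_{1}\) plus independent normal noise. The lower bound a stands in for the bound implied by (42); the function name and numbers are hypothetical, and a Monte Carlo check is included.

```python
import numpy as np
from scipy.stats import norm


def truncated_bivariate_moments(mu, Sigma, a):
    """Mean and covariance of (U0, U1) ~ N(mu, Sigma) truncated to U1 >= a."""
    mu = np.asarray(mu, dtype=float)
    Sigma = np.asarray(Sigma, dtype=float)
    s1 = np.sqrt(Sigma[1, 1])
    alpha = (a - mu[1]) / s1
    lam = norm.pdf(alpha) / norm.sf(alpha)          # inverse Mills ratio
    mean1 = mu[1] + s1 * lam
    var1 = Sigma[1, 1] * (1.0 + alpha * lam - lam ** 2)
    b = Sigma[0, 1] / Sigma[1, 1]                   # regression of U0 on U1
    mean0 = mu[0] + b * (mean1 - mu[1])
    var0 = Sigma[0, 0] - b * Sigma[0, 1] + b ** 2 * var1
    cov01 = b * var1
    return np.array([mean0, mean1]), np.array([[var0, cov01], [cov01, var1]])


mu = np.array([0.2, -0.1])
Sigma = np.array([[1.0, 0.4], [0.4, 0.5]])
a = 0.1  # e.g. a lower bound on the random slope

mean_exact, cov_exact = truncated_bivariate_moments(mu, Sigma, a)

# Monte Carlo check by simple rejection of draws with U1 < a.
rng = np.random.default_rng(1)
draws = rng.multivariate_normal(mu, Sigma, size=400_000)
kept = draws[draws[:, 1] >= a]
print(mean_exact, kept.mean(axis=0))
print(cov_exact, np.cov(kept, rowvar=False))
```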

C.3 Higher-order random effects

To evaluate the expectation and variance of the conditional random effects when \(r > 2\), we use Monte Carlo integration. We consider two simple Monte Carlo methods for this task: rejection sampling and the Metropolis–Hastings algorithm (Hastings 1970).

The rejection sampler proceeds by drawing samples from the multivariate normal distribution governing the unconstrained conditional random effects. As in the general case, the relevant unconstrained mean and variance are given by

However, we decompose these quantities into subject-specific means and variances, assuming no crossed random effects, i.e. that \({\varvec{G}}\) is a block-diagonal matrix. This way we can sample from g separate r-dimensional distributions rather than one rg-dimensional distribution, and the sampling is easily parallelised across subjects.

For each subject, a random sample is drawn from the underlying unconstrained multivariate normal distribution. Realisations are rejected when the resulting subject-specific curve is not monotone. The retained realisations are draws from the truncated multivariate normal distribution, and the approximate expectation and variance are calculated from them.
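The rejection sampler for one subject can be sketched in Python as follows. The subject-specific unconstrained mean and variance are assumed to be available from the E-step formulas, and the monotonicity check is passed in as a function. In the usage example, the basis \(1, x, x^{2}\), the mean-curve derivative and all numbers are hypothetical; the check evaluates the curve derivative on a grid, which only approximates monotonicity over S in general (here the derivative is linear in x, so the grid end points make it exact).

```python
import numpy as np


def rejection_sample(m, V, is_monotone, n_keep, rng, batch=10_000, max_batches=1_000):
    """Draw up to n_keep samples from N(m, V) restricted to {u : is_monotone(u)}.

    Returns the accepted draws and the overall acceptance ratio.
    """
    kept, n_drawn, n_accepted = [], 0, 0
    for _ in range(max_batches):
        draws = rng.multivariate_normal(m, V, size=batch)
        ok = np.array([is_monotone(u) for u in draws])
        kept.append(draws[ok])
        n_drawn += batch
        n_accepted += int(ok.sum())
        if n_accepted >= n_keep:
            break
    samples = np.concatenate(kept)[:n_keep]
    return samples, n_accepted / n_drawn


# Hypothetical subject with r = 3 random effects on the basis 1, x, x^2,
# whose derivatives are 0, 1, 2x; the mean-curve derivative is 0.5 on S = [0, 1].
x_grid = np.linspace(0.0, 1.0, 101)
dbasis = np.column_stack([np.zeros_like(x_grid), np.ones_like(x_grid), 2.0 * x_grid])
mean_deriv = 0.5 * np.ones_like(x_grid)


def is_monotone(u):
    # Non-decreasing curve: derivative non-negative at every grid point.
    return bool(np.all(mean_deriv + dbasis @ u >= 0.0))


m = np.array([0.0, -0.3, -0.2])
V = np.array([[0.5, 0.1, 0.0], [0.1, 0.3, 0.05], [0.0, 0.05, 0.2]])
rng = np.random.default_rng(2024)
samples, acc = rejection_sample(m, V, is_monotone, n_keep=5_000, rng=rng)
print(acc, samples.mean(axis=0))
print(np.cov(samples, rowvar=False))
```

The returned acceptance ratio is the quantity that the hybrid strategy described below uses to decide whether rejection sampling is efficient enough for a subject.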

Rejection sampling is adequate if a sufficient proportion of the unconstrained realisations are monotone. If not, drawing realisations from the constrained distribution may be very slow. In these cases, a random walk Metropolis algorithm (Metropolis et al. 1953) is a good alternative. To adhere to the constrained space, an additional step is added to the algorithm that rejects non-monotone proposals. This algorithm is in the class of random walk Metropolis–Hastings (RWMH) samplers.

It is sensible to define the random walk in the RWMH to have variance equal to \(\tau \) times the unconstrained conditional variance of the subject’s random effects, where \(\tau \in (0,1)\) is a constant used to scale down the step size. Using this variance incorporates the inherent correlation of the random effects, which is altered only by the truncation from the extra rejection step. The only tuning parameter is then \(\tau \), which can be chosen in the warm-up phase or be pre-determined. Implementations should consider thinning the samples to reduce autocorrelation in the Markov chain.

To reduce computation time, we use a combination of rejection sampling and RWMH. Initially, we draw a preliminary sample of each subject’s constrained random effects using rejection sampling and calculate individual acceptance ratios. Subjects with low acceptance ratios are transferred to the RWMH algorithm, which is the more efficient option for them. As with the rejection sampler, the approximate expectation and variance are then calculated from the constrained random samples of each individual.
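The RWMH sampler and the hybrid strategy can be sketched in Python as follows. Proposals have covariance \(\tau \) times the subject’s unconstrained conditional variance, a proposal outside the monotone region is rejected outright because the truncated target density is zero there, and inside the region the usual Metropolis ratio of normal densities applies. The acceptance-ratio threshold, pilot size, burn-in, thinning interval, function names and the toy constraint are illustrative assumptions, not values from the paper.

```python
import numpy as np
from scipy.stats import multivariate_normal


def rwmh_truncated(m, V, is_monotone, u0, n_keep, rng, tau=0.5, thin=5, burn_in=500):
    """Random walk Metropolis for N(m, V) restricted to {u : is_monotone(u)}.

    The starting value u0 must satisfy the constraint; proposals are
    u + N(0, tau * V), and proposals outside the region are rejected.
    """
    target = multivariate_normal(mean=m, cov=V)
    chol = np.linalg.cholesky(tau * np.asarray(V))
    u = np.asarray(u0, dtype=float)
    log_p = target.logpdf(u)
    out = []
    for it in range(burn_in + n_keep * thin):
        prop = u + chol @ rng.standard_normal(u.size)
        if is_monotone(prop):  # truncated target is zero outside the region
            log_p_prop = target.logpdf(prop)
            if np.log(rng.uniform()) < log_p_prop - log_p:
                u, log_p = prop, log_p_prop
        if it >= burn_in and (it - burn_in) % thin == 0:
            out.append(u.copy())
    return np.array(out)


def sample_subject(m, V, is_monotone, n_keep, rng, pilot=2_000, min_accept=0.05):
    """Pilot rejection sample; fall back to RWMH when acceptance is low."""
    draws = rng.multivariate_normal(m, V, size=pilot)
    ok = np.array([is_monotone(u) for u in draws])
    if ok.mean() >= min_accept:
        kept = list(draws[ok])
        while len(kept) < n_keep:
            more = rng.multivariate_normal(m, V, size=pilot)
            kept.extend(u for u in more if is_monotone(u))
        return np.array(kept[:n_keep]), "rejection"
    if not ok.any():
        raise ValueError("no monotone starting value in the pilot sample")
    return rwmh_truncated(m, V, is_monotone, draws[ok][0], n_keep, rng), "rwmh"


# Hypothetical r = 2 example: the curve is monotone when u[1] >= -0.5.
is_mono = lambda u: 0.5 + u[1] >= 0.0
V = np.array([[0.4, 0.1], [0.1, 0.25]])
rng = np.random.default_rng(7)
s_easy, how_easy = sample_subject(np.array([0.0, 0.0]), V, is_mono, 2_000, rng)
s_hard, how_hard = sample_subject(np.array([0.0, -1.5]), V, is_mono, 3_000, rng)
print(how_easy, how_hard, s_hard.mean(axis=0))
```

For the second subject only about 2% of unconstrained draws are monotone, so it is routed to RWMH; its slope mean should approach the one-sided truncated normal value of roughly −0.31.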

Appendix D: Technical details of maximisation steps

D.1 Homogeneous observational variance

In the special case where \({\varvec{R}}= \sigma ^{2}_{{\varvec{R}}}{\varvec{I}}_{n}\), so that \(\varvec{\phi }_{{\varvec{R}}}=\left\{ \sigma ^{2}_{{\varvec{R}}} \right\} \), the equation \(\partial q^{{\left[ t\right] }}_{\text {dev}}/\partial \sigma ^{2}_{{\varvec{R}}} = 0\) can be solved analytically. The derivative of \(q^{{\left[ t\right] }}_{\text {dev}}\) with respect to \(\sigma ^{2}_{{\varvec{R}}}\) can be simplified to an expression, (43), in terms of the “working” residuals.

Equating (43) to zero, we find that the optimal \(\sigma ^{2}_{{\varvec{R}}}\) for a given \(\varvec{\beta }\) is

The denominator in (44) could be replaced by the degrees of freedom in the model. If this were a fixed effects model, we would replace n by \(n-d\), where d is the number of coefficients. However, calculating the degrees of freedom in a constrained mixed effects model is complicated in two ways. Firstly, d is only an upper limit on the number of fixed effects parameters for the purpose of degrees of freedom, because it does not account for the constraint on the parameter space. Secondly, we need to account for the random effects, which are not parameters in the usual sense but are estimated and so reduce the degrees of freedom in the model (Footnote 3). We defer this choice to future work and, for the time being, conservatively use n.
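For orientation, in an unconstrained linear mixed model \({\varvec{Y}}= {\varvec{X}}\varvec{\beta } + {\varvec{Z}}{\varvec{U}} + \varvec{\epsilon }\) with \({\varvec{R}}= \sigma ^{2}_{{\varvec{R}}}{\varvec{I}}_{n}\), the standard EM update of the residual variance (cf. Laird et al. 1987) divides the expected residual sum of squares by n. The following Python sketch computes that quantity from the E-step moments; the random effects design \({\varvec{Z}}\) and the names `m` and `V` for the conditional mean and variance of \({\varvec{U}}\) are our notation, and we do not claim that it reproduces (44) term for term. A Monte Carlo check of the identity \(\mathrm {E}\Vert {\varvec{r}} - {\varvec{Z}}{\varvec{U}}\Vert ^{2} = \Vert {\varvec{r}} - {\varvec{Z}}{\varvec{m}}\Vert ^{2} + \mathrm {tr}({\varvec{Z}}{\varvec{V}}{\varvec{Z}}^{\top })\) is included.

```python
import numpy as np


def sigma2_R_update(Y, X, beta, Z, m, V):
    """Expected residual sum of squares divided by n, given U | Y ~ N(m, V)."""
    r = Y - X @ beta - Z @ m                     # residuals at the conditional mean
    return (r @ r + np.trace(Z @ V @ Z.T)) / Y.size


rng = np.random.default_rng(3)
n, d, q = 12, 2, 3
X = rng.standard_normal((n, d))
Z = rng.standard_normal((n, q))
Y = rng.standard_normal(n)
beta = np.array([0.5, -1.0])
m = np.array([0.2, 0.0, -0.3])
A = rng.standard_normal((q, q))
V = A @ A.T + 0.1 * np.eye(q)                    # a positive definite variance

exact = sigma2_R_update(Y, X, beta, Z, m, V)
U = rng.multivariate_normal(m, V, size=200_000)
resid = (Y - X @ beta)[None, :] - U @ Z.T
mc = np.mean(np.sum(resid ** 2, axis=1)) / n
print(exact, mc)
```

Replacing `Y.size` by a degrees-of-freedom count is the modification discussed above; the sketch keeps n, as the text does.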

In the optimisation of \(\varvec{\beta }\), with \({\varvec{R}}= \sigma ^{2}_{{\varvec{R}}}{\varvec{I}}_{n}\), we can simplify (20) to

and optimisation still needs to be iterative in the M-step, since the dependence on \(\sigma ^{2}_{{\varvec{R}}}\) and \(\varvec{\phi }_{{\varvec{H}}}\) is retained (the parameters in \(\varvec{\phi }_{{\varvec{H}}}\) enter (45) through \(\eta (\varvec{\beta })\)). Therefore, when \(\eta (\varvec{\beta }) \ne 0\), the parameter dependence structure is unchanged from the scenario described in Sect. 4.2. To find \(\varvec{\beta }\) in each step, pCOLS can still be used iteratively in combination with optimisation of the variance parameters.

When the individual curves are not constrained \((\eta (\varvec{\beta }) = 0)\), equating (45) to a vector of zeros gives \({\hat{\varvec{\beta }}}^{U} = {\varvec{X}}^{\top }{\varvec{Y}}_{*}^{{\left[ t\right] }}\), which replaces the standard unconstrained solution in Algorithm 2 for the COLS optimisation. The dependence on \(\sigma _{{\varvec{R}}}^{2}\) can be ignored in the coordinate descent routine, since it only rescales the derivative by a constant factor. Hence, the M-step dependence structure with homogeneous observational variance and no constraint on the random effects (\(\eta (\varvec{\beta }) = 0\)) simplifies. In any one M-step,

- the optimisation of \(\varvec{\beta }\) has no dependence on the other parameter sets,
- the optimisation of \(\varvec{\phi }_{{\varvec{R}}}\) depends on \(\varvec{\beta }\), and
- the optimisation of \(\varvec{\phi }_{{\varvec{H}}}\) has no dependence on the other parameter sets.

So, to estimate this model, we carry out the expectation step and then maximise over \(\varvec{\beta }\) followed by \(\varvec{\phi }_{{\varvec{R}}}\). The variance parameters \(\varvec{\phi }_{{\varvec{H}}}\) can be optimised at any stage. We then repeat the expectation and maximisation steps until convergence is reached.
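The closed form \({\hat{\varvec{\beta }}}^{U} = {\varvec{X}}^{\top }{\varvec{Y}}_{*}^{{\left[ t\right] }}\) relies only on the orthonormality of \({\varvec{X}}\). The following Python sketch checks this numerically: an orthonormal design is built by QR factorisation of a cubic polynomial design (an illustrative stand-in for the orthonormal polynomial basis), \({\varvec{X}}^{\top }{\varvec{Y}}_{*}\) is compared with a generic least squares solution, and dividing the gradient by \(\sigma ^{2}_{{\varvec{R}}}\) is shown not to move its root. `Y_star` is an arbitrary vector standing in for the working response.

```python
import numpy as np

rng = np.random.default_rng(0)
x = np.sort(rng.uniform(0.0, 10.0, size=40))
design = np.vander(x, 4, increasing=True)      # columns 1, x, x^2, x^3
X, _ = np.linalg.qr(design)                    # orthonormal columns spanning design
Y_star = rng.standard_normal(40)               # stand-in for the working response

beta_closed = X.T @ Y_star                     # closed form for orthonormal X
beta_lstsq, *_ = np.linalg.lstsq(X, Y_star, rcond=None)
print(np.allclose(X.T @ X, np.eye(4)), np.allclose(beta_closed, beta_lstsq))

# Scaling by 1 / sigma2_R rescales the gradient but leaves its root unchanged.
sigma2_R = 2.7
grad = lambda b: -2.0 * X.T @ (Y_star - X @ b) / sigma2_R
print(np.allclose(grad(beta_closed), 0.0))
```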

D.2 A random intercept

Having only a random intercept simplifies the optimisation step considerably. It reduces the variance vector \(\varvec{\phi }_{{\varvec{H}}}\) to \(\varvec{\phi }_{{\varvec{H}}} = \left\{ \sigma ^{2}_{{\varvec{H}}} \right\} \), so that \({\varvec{H}}=\left[ \sigma ^{2}_{{\varvec{H}}} \right] \) and \({\varvec{G}}= \sigma ^{2}_{{\varvec{H}}}{\varvec{I}}_{g}\). We continue to assume that \({\varvec{R}}\) has homogeneous variance, \({\varvec{R}}= \sigma ^{2}_{{\varvec{R}}}{\varvec{I}}_{n}\), although having only a random intercept still simplifies the problem when this is not the case. With only a random intercept, a subject’s curve is monotone whenever the mean curve is, because the intercept shifts the curve vertically without changing its derivative; hence there is no need to truncate the random effects’ normal distribution. The random intercept is normally distributed, and the normalising term, \(\eta (\varvec{\beta })\), is zero.

Updating the derivative of \(q^{{\left[ t\right] }}_{\text {dev}}\) with respect to \(\sigma ^{2}_{{\varvec{H}}}\) from (22) with \(\eta (\varvec{\beta })=0\), we have

Setting the derivative to zero, we find that

Since \(\eta (\varvec{\beta })=0\), the unconstrained solution for \(\varvec{\beta }\) can be found analytically from (45) and takes the form

at a given iteration. Consequently, the \(\sigma ^{2}_{{\varvec{R}}}\) term can be ignored in the coordinate descent optimisation when only a random intercept is present.

This gives us the final components of the updating equations for our variance components. Using the COLS methods for \(\varvec{\beta }\), (44) for \(\sigma ^{2}_{{\varvec{R}}}\), and (46) for \(\sigma ^{2}_{{\varvec{H}}}\), the EM updating equations are applied in this order. This completes the EM algorithm for models with just a random intercept.
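To make the order of the updates concrete, the following is a minimal Python/NumPy sketch of EM for a Gaussian random-intercept model. Like the text above, it assumes homogeneous residual variance. It is not the authors' code. In particular, \(\varvec{\beta }\) is updated by ordinary least squares rather than by COLS, so the monotonicity constraint is omitted. The function name `em_random_intercept` and the simulated data are illustrative only. The E-step uses the standard posterior mean and variance of each random intercept. The M-step then updates \(\varvec{\beta }\), \(\sigma ^{2}_{{\varvec{R}}}\) and \(\sigma ^{2}_{{\varvec{H}}}\) in that order.

```python
import numpy as np


def em_random_intercept(y, X, groups, n_iter=500, tol=1e-10):
    """EM for y = X beta + u[groups] + e, u_i ~ N(0, s2H), e ~ N(0, s2R I).

    Sketch only: beta is updated by unconstrained least squares, so the
    monotonicity constraint (handled by COLS in the text) is omitted.
    """
    g = groups.max() + 1
    n = len(y)
    n_i = np.bincount(groups, minlength=g)          # observations per subject
    beta = np.linalg.lstsq(X, y, rcond=None)[0]
    s2R, s2H = np.var(y - X @ beta), 1.0            # crude starting values
    for _ in range(n_iter):
        # E-step: posterior variance v_i and mean m_i of each random intercept
        resid = y - X @ beta
        v = 1.0 / (1.0 / s2H + n_i / s2R)
        m = v * np.bincount(groups, weights=resid, minlength=g) / s2R
        # M-step in the order beta, s2R, s2H; the beta update does not use s2R
        beta = np.linalg.lstsq(X, y - m[groups], rcond=None)[0]
        r = y - X @ beta - m[groups]
        s2R_new = (r @ r + np.sum(n_i * v)) / n     # E||y - X beta - Z u||^2 / n
        s2H_new = np.mean(m ** 2 + v)               # E[u_i^2] averaged over subjects
        done = abs(s2R_new - s2R) + abs(s2H_new - s2H) < tol
        s2R, s2H = s2R_new, s2H_new
        if done:
            break
    return beta, s2R, s2H


# Simulated example: 50 subjects with 20 observations each
rng = np.random.default_rng(1)
g, per = 50, 20
groups = np.repeat(np.arange(g), per)
x = rng.uniform(0.0, 1.0, g * per)
X = np.column_stack([np.ones_like(x), x])
u = rng.normal(0.0, 1.5, g)
y = X @ np.array([1.0, 2.0]) + u[groups] + rng.normal(0.0, 0.5, g * per)
beta, s2R, s2H = em_random_intercept(y, X, groups)
```

The \(\varvec{\beta }\) update uses only the expected random intercepts, so it does not depend on \(\sigma ^{2}_{{\varvec{R}}}\). This mirrors the remark above that the \(\sigma ^{2}_{{\varvec{R}}}\) term can be ignored in the coordinate descent for \(\varvec{\beta }\).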

D.3 A random slope

Upon introducing a random slope into the model, we must consider the normalising term and its derivatives, as well as the variance structure of the random effects. These affect the way we undertake the maximisation step in each iterate of the EM algorithm. The first consideration is the definition and derivative of the normalising term \(\eta (\varvec{\beta })\). As an alternative to (42), we define the support by grouping the random effects by individual as

for \(i = 1,2,\ldots ,g\), where \(c(\varvec{\beta })\) is defined in (40). In the case of random slopes, \(\eta (\varvec{\beta })\) can be simplified and evaluated analytically. Additionally, the independence of the groups of random effects and marginalisation over the random intercept \(w_{1}\) allow us to write \(\eta (\varvec{\beta })\) as

Finally, the normalising term can be written as

by noting its relation to the standard normal cumulative distribution function, denoted by \(\Phi (z)\).
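The helper below is a sketch of how a normalising term like (49) can be evaluated with the standard normal CDF. It computes \(g \log P\{c(\varvec{\beta }) + \psi _{1,1} w \ge 0\}\) for a slope effect \(w \sim N(0, \sigma ^{2}_{{\varvec{H}},1})\). This form is our assumption, not a transcription of (49). It takes \(c(\varvec{\beta })\) to be the minimum of \(p^{\prime }(x;\varvec{\beta })\) over S, and assumes the slope effect multiplies the orthonormal linear polynomial, whose derivative is the constant \(\psi _{1,1}\). The paper's \(\eta (\varvec{\beta })\) may differ by a scale factor or sign, for example if it is expressed on the deviance scale. The function names are hypothetical.

```python
import math


def std_normal_cdf(z):
    """Phi(z), the standard normal CDF, via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def log_normaliser_random_slope(c_beta, psi11, sigma_h1, g):
    """Hypothetical helper: g * log P(c_beta + psi11 * w >= 0), w ~ N(0, sigma_h1^2).

    Assumes c_beta = min over S of p'(x; beta) and that the slope random
    effect multiplies the orthonormal linear polynomial, whose derivative is
    the constant psi11. The random intercept has been marginalised out.
    """
    z = c_beta / (psi11 * sigma_h1)
    return g * math.log(std_normal_cdf(z))
```

When \(c(\varvec{\beta })\) is very negative, \(\Phi \) underflows to zero and the logarithm fails. A dedicated log-CDF routine would be more robust in that regime.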

The expression of the normalising term in (49) allows us to evaluate the derivatives of \(\eta (\varvec{\beta })\) analytically. The derivative with respect to \(\varvec{\beta }\) is somewhat involved. It requires the envelope theoremFootnote 4 (Milgrom and Segal 2002) to evaluate the derivative of \(c(\varvec{\beta })\),Footnote 5 in addition to successive applications of the chain rule. We find that

where \(\Phi ^{\prime }(z)\) is the density of the standard normal distribution. Using the results of Appendix E, the component form of (50) can be written as

where \(p_{i}^{\prime }(x)\) is the derivative of the ith polynomial in the orthonormal basis for \(0\le i \le q\), \(x^{*} = \arg \min _{x \in S} p^{\prime }(x;\varvec{\beta })\), and \(\psi _{1,1}\) is defined in (41).
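The chain rule combined with the envelope theorem can be checked numerically. The sketch below assumes the same form \(\eta (\varvec{\beta }) = g \log \Phi \{c(\varvec{\beta })/(\psi _{1,1}\sigma _{{\varvec{H}},1})\}\) as in the previous example. For simplicity, it replaces the orthonormal basis with monomials \(p_{i}(x) = x^{i}\) and discretises S as a grid. All function names are hypothetical. The analytic gradient uses \(\partial c/\partial \beta _{i} = p_{i}^{\prime }(x^{*})\), so no derivative of the minimiser \(x^{*}\) is required.

```python
import numpy as np
from math import erf, exp, pi, sqrt

S = np.linspace(0.0, 1.0, 101)  # grid standing in for the interval S


def Phi(z):
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


def phi(z):
    return exp(-0.5 * z * z) / sqrt(2.0 * pi)


def basis_derivs(x, q):
    """p_i'(x), i = 1..q, for the stand-in monomial basis p_i(x) = x**i."""
    return np.array([i * x ** (i - 1) for i in range(1, q + 1)])


def c_and_argmin(beta):
    """c(beta) = min over S of p'(x; beta), together with the minimiser x*."""
    dp = basis_derivs(S, len(beta)).T @ beta
    k = int(np.argmin(dp))
    return dp[k], S[k]


def eta(beta, psi11, sigma, g):
    c, _ = c_and_argmin(beta)
    return g * np.log(Phi(c / (psi11 * sigma)))


def grad_eta(beta, psi11, sigma, g):
    """Chain rule, with dc/dbeta_i = p_i'(x*) from the envelope theorem."""
    c, xstar = c_and_argmin(beta)
    z = c / (psi11 * sigma)
    return g * phi(z) / Phi(z) / (psi11 * sigma) * basis_derivs(xstar, len(beta))
```

On a grid, \(c(\varvec{\beta })\) is locally linear in \(\varvec{\beta }\) as long as the minimiser does not jump. Central finite differences with a small step should therefore match `grad_eta` up to rounding error.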

The second consideration in the M-step when \(r=2\) is finding the derivative of \(\eta (\varvec{\beta })\) with respect to the random effects variance components. The variance-covariance matrix \({\varvec{G}}\) becomes a block-diagonal matrix made up of g copies of the \(2 \times 2\) positive definite matrix \({\varvec{H}}\). The derivative of \(q^{{\left[ t\right] }}_{\text {dev}}\) with respect to the variance parameters of \({\varvec{H}}\) is given in general by (22), restated here as

Generally, \({\varvec{H}}\) is considered to have the form

and \({\varvec{G}}= {\varvec{I}}_{g} \otimes {\varvec{H}}\). However, to estimate these parameters we need to transform \({\varvec{H}}\), and hence \({\varvec{G}}\), so that the optimisation problem is unconstrained. Several matrix reparametrisations that preserve positive definiteness exist (Pinheiro and Bates 1996); we use the log-Cholesky decomposition. The Cholesky parametrisation writes a positive definite matrix as the product of a triangular matrix and its transpose. This ensures positive definiteness whenever the diagonal of the triangular factor is nonzero, and the factorisation is unique when that diagonal is positive, which we enforce by working with the logarithms of the diagonal entries. The log-Cholesky decomposition can be described as \({\varvec{H}}= {\varvec{L}}_{{\varvec{H}}}{\varvec{L}}_{{\varvec{H}}}^{\top }\), where \({\varvec{L}}_{{\varvec{H}}}\) is a lower triangular matrix whose diagonal entries are the exponentials of unconstrained parameters.

Because the normalising term \(\eta (\varvec{\beta })\) depends on \(\sigma _{{\varvec{H}},1}^{2}\), we change this parametrisation to use an upper triangular matrix rather than the standard lower triangular matrix. The upper triangular matrix is defined as

where the redefined \({\varvec{H}}\) becomes

which ensures that \(\sigma _{{\varvec{H}},1}^{2} = \exp \{2\omega _{2}\}\) is a function of just one of the new parameters rather than two.
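The effect of switching from a lower to an upper triangular factor can be seen directly. The sketch assumes that \({\varvec{H}}\) is ordered as (intercept, slope) and that \(\omega _{3}\) is the off-diagonal entry of each factor. These placements and the function names are our assumptions rather than the paper's exact definitions. With the lower factor, the slope variance is \(\omega _{3}^{2} + \exp \{2\omega _{2}\}\), which depends on two parameters. With the upper factor, it is \(\exp \{2\omega _{2}\}\) alone.

```python
import numpy as np


def H_lower(omega):
    """Standard log-Cholesky: H = L L^T, L lower triangular with exp diagonal."""
    w1, w2, w3 = omega
    L = np.array([[np.exp(w1), 0.0], [w3, np.exp(w2)]])
    return L @ L.T


def H_upper(omega):
    """Upper-triangular variant: H = U U^T, U upper triangular with exp diagonal."""
    w1, w2, w3 = omega
    U = np.array([[np.exp(w1), w3], [0.0, np.exp(w2)]])
    return U @ U.T


omega = (0.3, -0.4, 0.7)  # any real values give a valid positive definite H
Hl, Hu = H_lower(omega), H_upper(omega)
```

Both factorisations keep \(\omega _{1},\omega _{2},\omega _{3}\) unconstrained. Only the upper factor isolates the slope variance in a single parameter.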

Let \({\varvec{J}}_{{\varvec{G}}} = {\varvec{I}}_{g} \otimes {\varvec{J}}_{{\varvec{H}}}\). Replacing \({\varvec{G}}\) with \({\varvec{J}}_{{\varvec{G}}}\), the derivative of \(q^{{\left[ t\right] }}_{\text {dev}}\) with respect to the parameters of \({\varvec{J}}_{{\varvec{H}}}\) (and hence \({\varvec{J}}_{{\varvec{G}}}\)) becomes

where a standard derivative-based routine can be used to solve (55) set to zero. The conditional mean and variance of the random effects required by (55) can be found using tmvtnorm (Wilhelm and Manjunath 2015), as discussed in Sect. C.2. The normalising term from (49) can now be represented using \(\omega _{2}\) instead of \(\sigma _{{\varvec{H}},1}\) as

for which the derivative of \(\eta (\varvec{\beta })\) with respect to \(\omega _{2}\) is

and zero for \(\omega _{1}\) and \(\omega _{3}\), thus fully specifying Eq. (55).
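Under the same assumed form of the normalising term, writing \(\sigma _{{\varvec{H}},1} = \exp \{\omega _{2}\}\) gives \(z = c(\varvec{\beta })/(\psi _{1,1}\exp \{\omega _{2}\})\) and \(dz/d\omega _{2} = -z\). The derivative with respect to \(\omega _{2}\) is therefore \(-g z \Phi ^{\prime }(z)/\Phi (z)\). A minimal sketch of this derivative, which can be checked against finite differences, follows. The function names are hypothetical.

```python
from math import erf, exp, log, pi, sqrt


def Phi(z):
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


def phi(z):
    return exp(-0.5 * z * z) / sqrt(2.0 * pi)


def eta_omega2(omega2, c, psi11, g):
    """g * log Phi(z), z = c / (psi11 * exp(omega2)), i.e. sigma_{H,1} = exp(omega2)."""
    z = c / (psi11 * exp(omega2))
    return g * log(Phi(z))


def deta_domega2(omega2, c, psi11, g):
    """Since dz/d omega2 = -z, the derivative is -g * z * phi(z) / Phi(z)."""
    z = c / (psi11 * exp(omega2))
    return -g * z * phi(z) / Phi(z)
```

For \(c(\varvec{\beta }) > 0\), the derivative is negative. A larger slope variance lowers the probability that a subject's curve stays monotone.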

In summary, with a random intercept and slope, the M-step becomes

In a single M-step, these individual optimisations should be carried out iteratively to account for the dependence between the parameters detailed in Sect. 4.2. It is possible to undertake each optimisation only once if a conditional EM algorithm is used (Meng and Rubin 1993); however, further investigation of the merits of this method is left for future work.

D.4 Higher-order random effects

When \(r>2\), it is not clear how to derive the partial derivatives of \(\eta (\varvec{\beta })\) analytically, given that the space \(T(\varvec{\beta })\) is no longer box-constrained. Numerical integration is possible, but it is computationally intensive compared with the methods for \(r\le 2\) described previously.

Rather than working with an rg-dimensional integral, we can decompose the integral into g identical parts when the groups of random effects are independent (no crossed random effects). Let a dummy integration variable represent the random effects of any one subject. The constrained region for each (subject-specific) set of random effects can then be written as

or in other words, the subject-specific polynomial created from the mean polynomial and the subjects’ random effects must be monotone. With these, we can write \(\eta (\varvec{\beta })\) with only an r-dimensional integral as

We use Monte Carlo integration to evaluate \(\eta (\varvec{\beta })\) and its partial derivatives. The integrand of (59) is a (multivariate normal) probability density function. To approximate the integral, we can therefore generate samples from this distribution and count how many fall in the region of integration. The integral is estimated by the proportion of samples belonging to \(T_{I}(\varvec{\beta })\), and the approximate value of \(\eta (\varvec{\beta })\) follows from this estimate.
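The procedure described above can be sketched with Monte Carlo sampling. The sketch draws random-effect vectors from \(N(\varvec{0}, {\varvec{H}})\) and adds their contribution to the mean derivative on a grid over S. It then records the proportion of simulated subjects whose derivative stays non-negative. The monomial basis, the grid and all function names are illustrative assumptions. For a random intercept and slope only, the estimate can be compared with the closed form \(\Phi \{c(\varvec{\beta })/\sigma _{{\varvec{H}},1}\}\). Here \(\psi _{1,1} = 1\) because the stand-in slope polynomial is x.

```python
import numpy as np
from math import erf, sqrt

S = np.linspace(0.0, 1.0, 101)  # grid standing in for S


def mean_deriv(beta):
    """p'(x; beta) on S; beta holds coefficients of x^1..x^q (stand-in basis)."""
    return sum(i * b * S ** (i - 1) for i, b in enumerate(beta, start=1))


def re_deriv_matrix(r):
    """Row k: derivative on S of x^k, for random effects on degrees 0..r-1."""
    return np.array([k * S ** (k - 1) if k > 0 else np.zeros_like(S)
                     for k in range(r)])


def mc_monotone_prob(beta, H, n_samples=20000, seed=0):
    """Monte Carlo estimate of P(subject curve is monotone on S), w ~ N(0, H)."""
    rng = np.random.default_rng(seed)
    r = H.shape[0]
    W = rng.multivariate_normal(np.zeros(r), H, size=n_samples)
    subj = mean_deriv(beta)[None, :] + W @ re_deriv_matrix(r)
    return np.mean(subj.min(axis=1) >= 0.0)


def slope_only_prob(c, sigma):
    """Closed form for an intercept and slope only: Phi(c / sigma)."""
    return 0.5 * (1.0 + erf(c / sigma / sqrt(2.0)))
```

Under the assumption that the integral is a per-subject probability, \(\eta (\varvec{\beta })\) would then be g times the logarithm of this estimate.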

Once the value of \(\eta (\varvec{\beta })\) can be approximated, numerical differentiation techniques can be used to find its partial derivatives with respect to \(\varvec{\beta }\) and the variance parameters. However, some optimisations should be made first. The derivative of \(\eta (\varvec{\beta })\) with respect to the relevant parameter \(\alpha \) can be written as

and the numerator in (60) can then be numerically differentiated. Without any further adjustments, all realisations would need to be tested for membership in \(T_{I}(\varvec{\beta })\) every time the integral is calculated during numerical differentiation. To reduce this computational burden, we make two further approximations:

1. Fix the realisations of the multivariate normal distribution for the entire EM algorithm (but use a large number of samples);

2. Only use a sub-sample of the realisations when approximating the derivative.

More specifically, for a given \(\varvec{\beta }\), the sub-sample should consist of the realisations from the underlying distribution that are sufficiently close to the monotone boundary. These are the only samples that affect the calculation of the derivative, because they can lose or gain membership in \(T_{I}(\varvec{\beta })\) under very small changes to \(\varvec{\beta }\) and \(\omega _{1},\omega _{2},\ldots \). Realisations that are sufficiently far from the boundary will not change membership during the derivative calculation. Conveniently, we can calculate how close the samples are to the monotone boundary using \(c(\varvec{\beta })\) in (40), and take the subset with the smallest absolute values. The size of the sub-sample affects both the accuracy of the derivative and the computational effort, but in our testing we have found the trade-off worthwhile.
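The two approximations can be illustrated together. In the sketch below, the realisations are drawn once. Each realisation's distance to the monotone boundary is measured by the minimum of its subject-specific derivative over S, which plays the role of \(c(\varvec{\beta })\) for that sample. Finite differences then re-test only the samples closest to the boundary. The sketch uses a monomial basis, a grid over S and hypothetical names. A step of size h in \(\beta _{i}\) moves every margin by at most \(h \max _{x}|p_{i}^{\prime }(x)|\). Any sample farther than this from zero cannot change membership, so the sub-sample yields exactly the same finite difference of the proportion as the full sample. Under our reading, this proportion corresponds to the numerator in (60).

```python
import numpy as np

S = np.linspace(0.0, 1.0, 101)  # grid standing in for S


def mean_deriv(beta):
    """p'(x; beta) on S for the stand-in monomial basis x^1..x^q."""
    return sum(i * b * S ** (i - 1) for i, b in enumerate(beta, start=1))


def margins(beta, W, D):
    """Min over S of each sampled subject's derivative (>= 0 means monotone)."""
    return (mean_deriv(beta)[None, :] + W @ D).min(axis=1)


def fd_prob_grad(beta, W, D, h, subset=None):
    """Central differences of the proportion of monotone samples.

    Only rows in `subset` are re-tested; the remaining samples are assumed
    too far from the boundary to change membership under a step of size h.
    """
    Wt = W if subset is None else W[subset]
    grad = np.empty(len(beta))
    for i in range(len(beta)):
        e = np.zeros(len(beta))
        e[i] = h
        gained = (np.sum(margins(beta + e, Wt, D) >= 0)
                  - np.sum(margins(beta - e, Wt, D) >= 0))
        grad[i] = gained / (len(W) * 2 * h)  # divide by the full sample size
    return grad


# Realisations are drawn once and reused for every derivative evaluation
rng = np.random.default_rng(0)
W = rng.multivariate_normal(np.zeros(2), np.diag([1.0, 0.3 ** 2]), size=20000)
D = np.vstack([np.zeros_like(S), np.ones_like(S)])  # derivatives of 1 and x
beta, h = np.array([1.0, -1.5, 1.0]), 0.01
m0 = margins(beta, W, D)
subset = np.argsort(np.abs(m0))[:5000]  # samples closest to the boundary
g_full = fd_prob_grad(beta, W, D, h)
g_sub = fd_prob_grad(beta, W, D, h, subset)
```

The sub-sample size of 5000 is an arbitrary choice for this example. In practice, it trades accuracy against computation, as noted above.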

Once approximated, the derivatives and function can be used in the M-step as described in Appendix D.3.

Derivative of the normalising term

In Sect. 4.2, we are faced with finding the derivative of the normalising term of the form

In general, finding the derivative of (61) with respect to \(\varvec{\beta }\) requires the chain rule to handle the logarithm and the Fundamental Theorem of Calculus (FTC) for the integral. However, as it stands, the integral is too general to make use of the FTC; the difficulty lies in the region of integration, \(T(\varvec{\beta })\). In the general case, we suggest the use of numerical integration techniques to find this derivative. Since it is the region of integration that is complicated, Monte Carlo methods may be the most appropriate. Here, we consider the normalising term in the case of Sect. D.3, where we have a random intercept and slope. In this case, the normalising term from (49) is

and its derivative, given in (50), is

where \(\sigma _{H,1} = \exp \{\omega _{2}\}\) when using the log-Cholesky decomposition. The difficulty in (50) is finding the derivative of \(c(\varvec{\beta })\) with respect to \(\varvec{\beta }\), for which we turn to the envelope theorem.Footnote 6 The function \(c(\varvec{\beta })\) is the linear distance from the minimum value of \(p^{\prime }(x;\varvec{\beta })\) over S to the x axis. It was defined in (40) as

Standard envelope theorems generally apply to such minimum-value functions when \(S ={\mathbb {R}}\); however, the case where S is an arbitrary set is covered by Theorem 1 of Milgrom and Segal (2002). As a result, the derivative of \(c(\varvec{\beta })\) can be written as

where \(x^{*}(\varvec{\beta }) = \arg \min _{x \in S} p^{\prime }(x;\varvec{\beta })\) which can be calculated for a given \(\varvec{\beta }\). Recall that \(p^{\prime }(x;\varvec{\beta }) = \sum _{i=1}^{q}\beta _{i}p_{i}^{\prime }(x)\) where the \(p_{i}\) are the orthonormal polynomials. Therefore, the component form of (62) is

Having found the derivative of \(c(\varvec{\beta })\) in (63), we can state (50), in component form, as

where \(\psi _{1,1} = \left( \sum _{x \in D_{w}}\left( x-{\bar{x}}\right) ^{2}\right) ^{-1/2}\) and \(\sigma _{H,1}\) can be replaced by \(\exp \{\omega _{2}\}\) when using a log-Cholesky decomposition.

Berkeley growth data

The Berkeley growth dataset (BGD) (Tuddenham and Snyder 1954) is well known in the area of growth curve analysis. It contains repeated height measurements for 39 male and 54 female children between the ages of 1 and 18, with 2883 observations in all. The measurements were taken at unequal intervals, 31 times for each participant. We use the BGD to illustrate monotone mixed effects models with a higher-degree mean curve and more random effects (with constrained subject-specific curves). The fitted curves should be monotone, as children's heights should not decrease over time. We demonstrate the constrained fitting methods on the males in the dataset.

Figure 5 shows the degree-12 fits with subject-specific curves defined by 6 random effects. The mean curve is constrained to be monotone over the ages 0–18, whilst the subject-specific curves are unconstrained. The subject-specific curves are plotted along with the mean curve and raw data for 9 male subjects. The differences between the subject-specific curves and the mean curve demonstrate the flexibility that linear and parametric random effects can add.

The particular individuals in Fig. 5 were chosen to demonstrate that some of the 38 subject-specific curves are non-monotone between the ages of 16 and 18 (boy 35 is given as an example of a monotone subject-specific fit). The data for each of these individuals appear clearly monotonically increasing, yet the subject-specific curves decrease. This may be due to a marginally lower height being recorded as one of the last observations, as in boy 15's height data. It could also be due to under-fitting in that region of the data. In either case, we can correct the non-monotone fits by introducing random effects that are constrained to give monotone subject-specific curves.

Figure 6 contains the previous model structure (\(q=12\), \(r=6\)), but now the subject-specific curves are constrained to be monotonically increasing over the ages 0–18. This model is more computationally intensive than its lower-degree counterparts in Sect. 5, specifically because the evaluation of the random effects' mean and variance, as well as the penalty term \(\eta (\varvec{\beta })\), requires Monte Carlo integration.

Incorporating this subject-level constraint has induced monotone subject-specific curves, as well as flatter behaviour in the curves between the ages of 16 and 18. This is a beneficial result, as we would expect growth curves to flatten out in later years as children stop growing. It is worth noting that boy 35's subject-specific fit is almost unchanged, as we would expect.


Cite this article

Bon, J.J., Murray, K. & Turlach, B.A. Fitting monotone polynomials in mixed effects models. Stat Comput 29, 79–98 (2019). https://doi.org/10.1007/s11222-017-9797-8
