Abstract

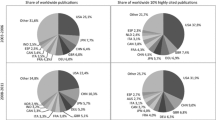

The paper exploits a newly created dataset offering detailed bibliometric data and indicators on 251 subject categories for a large sample of universities in Europe, North America and Asia. In particular, it addresses the controversial issue of the distance between Europe and the USA in research excellence (the so-called “transatlantic gap”). By building indicators of objective excellence (top 10% worldwide in publications and citations) and subjective excellence (top 10% in the distribution of the share of top journals out of total production at university level), it shows that European universities fail to achieve objective measures of global excellence, while being competitive in only a few fields. The policy implications of this state of affairs are discussed.
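The two excellence indicators described above can be illustrated with a minimal sketch. It assumes one record per university and subject category; the field names (`pubs`, `citations`, `top_journal_pubs`), the toy data and the tie handling are hypothetical and are not taken from the paper, whose exact procedure is not given in this abstract.

```python
from collections import defaultdict


def top_decile_threshold(values):
    """Return the smallest value that still belongs to the top 10% of `values`."""
    ranked = sorted(values, reverse=True)
    k = max(1, len(ranked) // 10)  # size of the top 10% group, at least one
    return ranked[k - 1]


def excellent_universities(records, indicator):
    """Map each subject category to the universities in its top 10% for `indicator`."""
    by_field = defaultdict(list)
    for rec in records:
        by_field[rec["field"]].append(rec)
    result = {}
    for field, recs in by_field.items():
        cutoff = top_decile_threshold([indicator(r) for r in recs])
        # Ties at the cutoff are kept, so a group can exceed 10% of the field.
        result[field] = {r["university"] for r in recs if indicator(r) >= cutoff}
    return result


# Hypothetical toy data: ten universities in a single subject category.
records = [
    {"university": f"U{i}", "field": "AI", "pubs": 100 * (i + 1),
     "citations": 1000 * (i + 1), "top_journal_pubs": 10 * (i + 1)}
    for i in range(10)
]
records[0]["top_journal_pubs"] = 60  # small university, high top-journal share

# "Objective" excellence: top 10% by citation volume.
by_citations = excellent_universities(records, lambda r: r["citations"])
# "Subjective" excellence: top 10% by share of output in top journals.
by_share = excellent_universities(
    records, lambda r: r["top_journal_pubs"] / r["pubs"]
)

print(by_citations["AI"], by_share["AI"])
```

In this toy example the two indicators single out different universities: the largest one leads on citations, while a small one leads on the share of top-journal output. This is why the abstract treats the two notions of excellence separately.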

Notes

The Global Research Benchmarking System (GRBS) was a collaborative effort between the United Nations University International Institute for Software Technology and the Center for Measuring University Performance, which founded and led the initiative. Contributing organizations include: Arizona State University, Institute for Scientific and Technical Information of China, Korean Academy of Science and Technology, Ministry of Higher Education of Malaysia, National Assessment and Accreditation Council of India, National Institute for Informatics (Japan), National Institution for Academic Degrees and University Evaluation of Japan, ProSPER.Net, University of Melbourne, and University of Pisa. The governance structure of the initiative included an International Advisory Board providing expertise in university performance evaluation, bibliometrics, and sustainable development, and representing diverse regional and stakeholder perspectives.

The All Science Journal Classification (ASJC) maps source titles onto a structured hierarchy of disciplines and sub-disciplines, allowing research activity to be categorized by field of research. ASJC classifies about 30,000 source titles into a two-level hierarchy. The top level contains 27 subject areas, including a Multidisciplinary category, and the second level contains 309 subject areas. Because GRBS covers only Science and Technology, only 23 of the top-level subject areas and 251 of the sub-areas are currently used in the system.

For Asia Pacific, the countries included are: Australia, China, Hong Kong SAR, India, Japan, Malaysia, New Zealand, Singapore, South Korea, Taiwan (Province of China) and Thailand. North America includes the USA and Canada. The European countries included are: Austria, Belgium, Bulgaria, Cyprus, Czech Republic, Denmark, Estonia, Finland, France, Germany, Greece, Hungary, Ireland, Latvia, Lithuania, Luxembourg, Netherlands, Norway, Poland, Portugal, Romania, Slovakia, Slovenia, Spain, Sweden, Switzerland and United Kingdom.

Additional information

The authors thank two anonymous referees for suggestions that have improved the paper. This work was partially supported through a fellowship from the Hanse-Wissenschaftskolleg Institute for Advanced Study, Delmenhorst, Germany to Peter Haddawy.

Cite this article

Bonaccorsi, A., Cicero, T., Haddawy, P. et al. Explaining the transatlantic gap in research excellence. Scientometrics 110, 217–241 (2017). https://doi.org/10.1007/s11192-016-2180-2

DOI: https://doi.org/10.1007/s11192-016-2180-2