Abstract

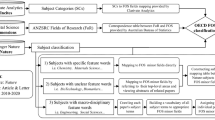

This paper presents a Sciento-text framework to characterize and assess the research performance of leading world institutions in fine-grained thematic areas. Whereas most popular university research rankings rank universities either on their overall research performance or on a particular subject, we devise a system that identifies strong research centres at the more fine-grained level of research themes within a subject. The computer science (CS) research output of more than 400 universities worldwide is taken as a case in point to demonstrate how the framework works. The Sciento-text framework comprises standard scientometric and text analytics components. First, every research paper in the data is systematically classified into thematic areas; standard scientometric methodology is then used to identify and assess the research strengths of different institutions in a particular research theme (say, Artificial Intelligence for the CS domain). The performance of the framework components is evaluated, and the complete system is deployed on the Web at www.universityselectplus.com. The framework can be extended to other subject domains with little modification.
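
The two-stage pipeline described in the abstract (classify each paper into a theme, then compute scientometric indicators per institution within that theme) can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the theme vocabulary `THEME_TERMS`, the keyword-overlap classifier, the `Paper` record, and the indicator set (paper count, total citations, citations per paper, h-index) are all hypothetical stand-ins chosen to keep the example short and self-contained.

```python
import re
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional

# HYPOTHETICAL controlled vocabulary (theme -> indicative terms).
# Illustrative only; this is not the paper's CS taxonomy.
THEME_TERMS = {
    "Artificial Intelligence": {"neural", "learning", "reasoning", "agent"},
    "Databases": {"query", "database", "transaction", "index"},
    "Networks": {"routing", "wireless", "protocol", "network"},
}


@dataclass
class Paper:
    institution: str
    text: str       # e.g. title + abstract + author keywords (assumed input)
    citations: int


def classify(text: str) -> Optional[str]:
    """Stage 1 (text analytics, assumed keyword-overlap method): pick the theme
    whose vocabulary overlaps most with the paper's words; ties go to the
    earlier theme in THEME_TERMS. Returns None when no theme term occurs."""
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    scores = {theme: len(tokens & terms) for theme, terms in THEME_TERMS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


def h_index(citations: list[int]) -> int:
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    return sum(1 for rank, c in enumerate(ranked, start=1) if c >= rank)


def theme_profile(papers: list[Paper]) -> dict:
    """Stage 2 (scientometrics): indicators per (theme, institution) pair."""
    cites = defaultdict(list)
    for p in papers:
        theme = classify(p.text)
        if theme is not None:          # unclassified papers are skipped
            cites[(theme, p.institution)].append(p.citations)
    return {
        key: {
            "papers": len(cs),
            "citations": sum(cs),
            "cpp": sum(cs) / len(cs),  # citations per paper
            "h_index": h_index(cs),
        }
        for key, cs in cites.items()
    }


def rank_institutions(profile: dict, theme: str, indicator: str = "h_index"):
    """Rank institutions within one theme by a chosen indicator (descending)."""
    rows = [(inst, m[indicator]) for (t, inst), m in profile.items() if t == theme]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    papers = [
        Paper("Univ A", "Deep neural learning for agent reasoning", 12),
        Paper("Univ A", "Reinforcement learning agents", 5),
        Paper("Univ B", "Query optimization in database systems", 30),
        Paper("Univ B", "Neural network learning", 1),
    ]
    profile = theme_profile(papers)
    print(rank_institutions(profile, "Artificial Intelligence"))
```

Running the script prints `[('Univ A', 2), ('Univ B', 1)]`: Univ B has the most-cited paper overall, but it falls under Databases, so within the Artificial Intelligence theme Univ A ranks first. The keyword-overlap classifier is used only to keep the sketch self-contained; a real system would more plausibly use a trained text classifier, and the indicator set could be swapped for other measures without changing the two-stage structure.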

Acknowledgments

This work is supported by research grants from the Department of Science and Technology, Government of India (Grant: INT/MEXICO/P-13/2012) and the University Grants Commission of India (Grant: F. No. 41-624/2012(SR)). A preliminary version of this work was presented at the 20th Science and Technology Indicators Conference in September 2015 in Lugano, Switzerland.

About this article

Cite this article

Uddin, A., Bhoosreddy, J., Tiwari, M. et al. A Sciento-text framework to characterize research strength of institutions at fine-grained thematic area level. Scientometrics 106, 1135–1150 (2016). https://doi.org/10.1007/s11192-016-1836-2

DOI: https://doi.org/10.1007/s11192-016-1836-2