Abstract

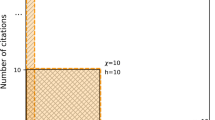

In recent years the h-index has gained popularity as a measure for comparing the impact of scientists. We investigate whether ranking according to the h-index is stable with respect to (i) different choices of citation databases, (ii) normalizing citation counts by the number of authors or by removing self-citations, (iii) small amounts of noise created by randomly removing citations or publications, and (iv) small changes in the definition of the index. In experiments for 5,283 computer scientists and 1,354 physicists we show that although the ranking induced by the h-index is stable under most of these changes, it is unstable when different databases are used. Therefore, comparisons based on the h-index should only be trusted when the rankings of multiple citation databases agree.

Notes

This is similar to the mathematical notion of “well-definedness”, which requires that the choice of a particular representative element from an equivalence class does not affect the outcome.

Appendix

This appendix serves three purposes: first, to detail how the data from ISI Web of Knowledge (ISI) and from Google Scholar (GS) were preprocessed in an attempt to ensure the highest possible data quality; second, to report the results of the manual evaluation of the data quality; and finally, to report detailed numbers for the experiments with GS. These three items correspond to the following three sections.

Data preprocessing

One of the biggest problems in the area of automated bibliometrics is author disambiguation. How can one know that the author “John Smith” of publication A is the same entity as the author “John Smith” of publication B? Although several approaches to this problem exist, it remains largely unsolved. As a consequence, studies either involve only a small set of hand-selected scientists whose publication records are then manually checked, or they trust the online database and simply assume that different names, or different combinations of initial and last name, correspond to different scientists. We tried to sidestep the disambiguation problem by identifying a set of authors whose names most likely correspond to only one scientist in the subject area under consideration. In the following, we explain in detail how we obtained our final lists of computer scientists and physicists for our experiments.

1. Obtain an initial list of candidate names. For computer science we used all the 681,000 authors listed in the DBLP database, and for physics we used all the 82,000 authors listed under the “Physics” section of the arXiv collection, as of January 2009. Only scientists for whom we could obtain the full first and last name were kept.

2. Map all names to ASCII characters. The encoding of the names was converted to ASCII wherever possible using the Unicode “Normalization Form Compatibility Decomposition” (NFKD). This included conversions such as “Schrödinger → Schrodinger” and “Suñol → Sunol”. Names containing characters for which this was not possible were removed in this process. Note that GS also returns documents containing “Schrödinger” for the query “Schrodinger” but not vice versa. In ISI the query “Schrödinger” gives an error message, so the conversion to “Schrodinger” is required.

3. Remove common US and Chinese family names. We used dictionaries of common US and Chinese names and removed corresponding cases such as “Smith” or “Chiang”. These cases are especially likely to be ambiguous.

4. Remove last names with fewer than five characters. Very short names such as “Mata” or “Tsai” were removed. This filter removes additional names of Asian origin, for which disambiguation is a serious problem, and it also removes some artifacts where a sequence of initials was contracted to give a “name”.

5. Require unique initial plus last name combination. All names were mapped to a single initial plus a last name, e.g., “John A. Smith → J. Smith”. Parts such as “van” or “de” were treated as part of the last name. For each combination of initial and last name the original list was checked for uniqueness. Only cases with a unique combination were kept. This was required because GS indexes some of its publications, and ISI all of them, using only this combination, so that “John Smith” and “Jack Smith” cannot be told apart. As only the first initial was used, this filtering step also prevents “J. A. Smith” from being accidentally conflated with “J. B. Smith”.

6. Remove authors with several aliases. For computer scientists we removed all cases where a single DBLP entity had several aliases. This was done to avoid missing some publications due to a name change of the scientist, e.g., due to marriage. For physicists we did not have a list of such aliases.

7. Require unique entry in ISI. When searching for the initial and last name in ISI’s Author Finder we required a unique matching entry, and all other names were discarded.
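Steps 2 to 5 of the list above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code used for the study: the function names, the `(first, last)` tuple layout and the `common_last_names` set are hypothetical, and the uniqueness check is run against all names that survive the ASCII conversion, which is one possible reading of “the original list”. Steps 6 and 7 need the DBLP alias data and ISI lookups and are not covered.

```python
import unicodedata
from collections import Counter


def to_ascii(name):
    """Return an ASCII version of `name` via NFKD, or None if that is impossible."""
    decomposed = unicodedata.normalize("NFKD", name)
    # Drop the combining marks (umlauts, tildes, accents) split off by NFKD.
    stripped = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return stripped if stripped.isascii() else None


def initial_key(first, last):
    """Map a full name to initial plus last name, e.g. 'John', 'Smith' -> 'J. Smith'."""
    return f"{first[0]}. {last}"


def filter_names(names, common_last_names, min_last_len=5):
    """Apply steps 2 to 5 to a list of (first, last) name pairs."""
    # Step 2: keep only names that can be converted to ASCII.
    ascii_names = []
    for first, last in names:
        first_ascii, last_ascii = to_ascii(first), to_ascii(last)
        if first_ascii and last_ascii:
            ascii_names.append((first_ascii, last_ascii))

    # Assumption: uniqueness (step 5) is counted over this converted list.
    counts = Counter(initial_key(f, l) for f, l in ascii_names)

    kept = []
    for first, last in ascii_names:
        if last in common_last_names:
            continue  # step 3: common family name
        if len(last) < min_last_len:
            continue  # step 4: last name shorter than five characters
        if counts[initial_key(first, last)] != 1:
            continue  # step 5: initial plus last name is not unique
        kept.append((first, last))
    return kept
```

With this sketch, `to_ascii("Schrödinger")` yields `"Schrodinger"`, while a name containing a letter without a decomposition, such as “Ł”, is rejected.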

The final numbers of scientists with at least one publication in either database, 5,614 computer scientists and 1,375 physicists, were obtained by sampling 10,000 and 3,000 scientists, respectively, right before the very last filtering step. Scientists who did not have a unique ISI entry, or for whom no publication matching the corresponding topic filter (see below) could be found, ended up with no publications and were ignored in all experiments. After the two lists of names had been compiled, we obtained bibliographic information by querying GS and ISI as follows.

1. Query GS with full name and subject area filter. For each scientist we sent the corresponding query with the full first and last name, but without any additional initials, to GS. Here we used Google’s topic filter with the “Engineering, Computer Science, and Mathematics” and the “Physics, Astronomy, and Planetary Science” options for computer science and physics, respectively. Topic filters were used to avoid possible name collisions with scientists in other domains. The returned publications were added to a list of candidate publications for this scientist.

2. Query ISI with initial plus last name and subject area filter. For each scientist we sent the corresponding query with the initial and last name to ISI, as ISI does not index the full first name. Only publications from a subject related to computer science (or mathematics) or physics were considered. The returned publications were added to a list of candidate publications for this scientist.

3. Re-query ISI with titles from GS. For each item in the current list of GS publications we queried ISI directly for the corresponding title if we had not yet found this publication in ISI. The returned publications were added to a list of candidate publications for this scientist.

4. Re-query GS with titles from ISI. For each publication found in ISI but not in GS we queried GS directly for the corresponding title. If this title was found, it was added to the list of candidate publications.

5. Remove invalid publications. We removed all patents from GS. Additionally, we made sure that each publication did indeed match the corresponding query, as in some cases a query “John Smith” could return a publication with the two authors “John Doe” and “Anne Smith”.

6. Remove duplicates in GS. Google Scholar sometimes returns the same publication multiple times. In cases where two or more publications with the same title and the same year of publication were found, only the entry with the highest number of citations was kept. To compare titles for equality, all non-alphabetic characters were removed before the comparison.
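The duplicate removal in the last step can be illustrated as follows. This is a minimal sketch, assuming each publication is a dictionary with hypothetical `title`, `year` and `citations` fields. The text does not say whether the title comparison was case-sensitive, so the sketch leaves the case untouched.

```python
def title_key(title):
    """Normalize a title by dropping every non-alphabetic character."""
    return "".join(ch for ch in title if ch.isalpha())


def deduplicate(publications):
    """Keep, for each (normalized title, year), the entry with the most citations."""
    best = {}
    for pub in publications:
        key = (title_key(pub["title"]), pub["year"])
        # Replace the stored entry only if this one has strictly more citations.
        if key not in best or pub["citations"] > best[key]["citations"]:
            best[key] = pub
    return list(best.values())
```

Under this normalization, “The h-index” and “The h index.” from the same year collapse into a single entry, whereas the same title with a different year is kept separately.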

Basic statistics about the database constructed in this way can be found in Table 1. After obtaining all the bibliographic information we manually checked the quality of the data as discussed in the next section.

Quality evaluation

After obtaining the data from ISI and GS as explained in the previous section, we manually evaluated the quality of our database, both for ISI and for GS. Concretely, for 15 computer scientists and 15 physicists we wanted to know the following.

- Unique identity: Are the scientists in our database “unique”, i.e., do all the corresponding publications we have correspond to the same person?

- Data quality: Are the publications we have “valid” publications, e.g., are they authored by the corresponding scientist, do they belong to the correct topic (computer science or physics) and are they not duplicates?

- Data coverage: Do we have all valid publications for a scientist, i.e., did we not miss relevant publications pertaining to the person under consideration?

Unique identity

To determine whether different publications stored under the same author name in our database actually belong to the same person, we looked at clues from the affiliation, the co-authors and the topics of the publications. We considered two publications to belong to two different authors if the affiliations differed, the sets of co-authors were disjoint and the topics of the publications appeared to be unrelated. For this test we only looked at publications with at least one citation in either ISI or GS, as only these publications are relevant for our experiments. Among the 15 computer scientists checked, all were indeed unique and all their publications belonged to a single individual, both in ISI and in GS. For the physicists, there was one case where only three out of four cited publications in GS were authored by the same person, and one publication belonged to a different individual with the same combination of first name and last name. This person was, however, unique in ISI.

Data quality and coverage

To determine both the quality or cleanness, referred to as precision, and the coverage or completeness, referred to as recall, of our database, we tried to compile for each scientist a ground truth list of all the person’s publications. In some cases, such a list could be found on a homepage. In other cases it was impossible to do better than manually combining and filtering the publications returned for a general query by initial and last name in both ISI and GS. Publications which were clearly not on the topic under consideration (either computer science or physics) were removed from the list.

Once this list was constructed, we compared the publications in our database to the entries on the list. To reduce the work required for the quality test, we only checked the validity of the publications with at least as many citations as the person’s h-index. Other, rarely cited publications do not contribute to the computation of the h-index. For the test of completeness we tried to find all list entries in our database, regardless of whether they were cited or not.
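The two checks described above can be sketched per scientist as follows. The sketch assumes the standard definition of the h-index (the largest h such that h publications have at least h citations each). The data layout, a hypothetical dictionary from title to citation count plus a set of ground truth titles, is an assumption, and the text does not specify how the per-scientist values were aggregated.

```python
def h_index(citations):
    """Largest h such that at least h publications have >= h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


def precision_recall(database, ground_truth):
    """database: dict title -> citations; ground_truth: set of valid titles."""
    h = h_index(database.values())
    # Precision is only checked on publications cited at least h times.
    checked = [title for title, count in database.items() if count >= h]
    valid = sum(title in ground_truth for title in checked)
    precision = valid / len(checked) if checked else 1.0
    # Recall is checked on every ground truth entry, cited or not.
    found = sum(title in database for title in ground_truth)
    recall = found / len(ground_truth) if ground_truth else 1.0
    return precision, recall
```

For example, a scientist with citation counts 10, 5, 4, 3 and 1 has an h-index of 3, so only the four publications with at least three citations would be checked for validity.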

The focus of the quality evaluation was to show that our preprocessing actually improves the quality of the data compared to, say, simply querying for a person’s last name and initial without any additional filters. In particular, if a valid publication cannot be found at all in ISI, even when searching for (parts of) its title directly, then no amount of data preprocessing can extract information about this publication from ISI. This is then not a shortcoming of the preprocessing but of the underlying dataset. On the other hand, if a valid publication is lost in the preprocessing, then the filtering process might be too aggressive.

Table 3 compares the precision and recall of our extracted and cleaned ISI and GS database with the following simpler ways of obtaining the data. For ISI one could (i) search only for the initial and last name of a person or (ii) also make use of ISI’s topic filter. For Google Scholar one could (i) search for the full name of a person, (ii) search for the full name in combination with a topic filter, (iii) search for the initial and last name, or (iv) search for the initial and last name with a topic filter. All of these alternatives are listed in Table 3. Comparing the results for the extracted versions of ISI and GS with the results for their “raw” counterparts shows that our data preprocessing does indeed help to improve the precision without sacrificing too much recall. For example, for Google Scholar the precision in our extracted database is 0.94, which could also have been achieved by searching for the full name with a topic filter directly. However, our recall is still over 0.80, compared to less than 0.60 when searching only for the full name with a topic filter.

Results for GS

In the main publication we only reported numbers for ISI, except for the explicit similarity comparison with GS. In this section we also give the results for GS. For easier comparison, we include the results for ISI again.

Changes to the definition

As mentioned in the main article, the h-index can be viewed as a member of a large family of possible indices, which all satisfy certain axioms. If all these variants give similar rankings, then this is an indication that the observed ranking is indeed a property of the data rather than a property of the particular index.
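One way to picture such a family is to replace the diagonal used by the h-index with another curve. The sketch below is an assumption about the general shape of these variants, not the definition from the main article: for a non-decreasing function `curve`, it returns the largest rank r such that the r-th most cited publication has at least `curve(r)` citations. The name `curve_index` is hypothetical.

```python
def curve_index(citations, curve):
    """Largest rank r whose publication has at least curve(r) citations.

    Assumes `curve` is non-decreasing, so the scan can stop at the first failure.
    """
    ranked = sorted(citations, reverse=True)
    index = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= curve(rank):
            index = rank
        else:
            break
    return index


citations = [30, 20, 8, 7, 6, 5, 1]
plain_h = curve_index(citations, lambda x: x)        # the usual h-index
steeper = curve_index(citations, lambda x: 2 * x)    # curve 2x
squared = curve_index(citations, lambda x: x ** 2)   # curve x^2
```

With the identity curve this reduces to the ordinary h-index. Steeper curves put more weight on the few most cited publications, which is one reason their rankings can differ.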

To put the percentage of agreeing pairs into perspective, it is again helpful to compare with the similarity between the ranking according to the h-index and the ranking according to the total number of citations, as the h-index aims to be a different index. For Google Scholar the corresponding percentages of agreeing pairs were 85% and 90% for all computer scientists and for computer scientists with an h-index between 8 and 16, respectively. The corresponding numbers for physicists were 91% and 86%. Similarity values below these thresholds can, arguably, be seen as indicating substantially different rankings. Table 4 shows that for Google Scholar, as for ISI (see main article), the h-index is stable under most changes, though use of the curves x² and 2x leads to noticeably different rankings.
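The percentage of agreeing pairs can be illustrated with a small sketch. The precise definition is given in the main article and is not repeated here, so the version below is an assumption: a pair of scientists counts as agreeing if both scores order the two scientists the same way, with a tie counting as agreement only if it is a tie under both scores.

```python
from itertools import combinations


def sign(x):
    """Return -1, 0 or 1 depending on the sign of x."""
    return (x > 0) - (x < 0)


def agreeing_pairs(scores_a, scores_b):
    """Fraction of pairs ordered identically by two dicts of scores."""
    people = sorted(scores_a.keys() & scores_b.keys())
    agree = total = 0
    for p, q in combinations(people, 2):
        total += 1
        if sign(scores_a[p] - scores_a[q]) == sign(scores_b[p] - scores_b[q]):
            agree += 1
    return agree / total if total else 1.0
```

Here `scores_a` could hold the h-index of each scientist and `scores_b` the total number of citations, which gives the baseline similarity discussed above. The quadratic loop over all pairs is fine for a sketch but would need a faster method for large samples.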

Normalizing for self-citations and co-author count

Table 5 shows the stability of the h-index when (i) self-citations are removed and when (ii) citations are normalized by the number of authors. As discussed in the main article, the ranking changes very little when self-citations are removed, as the percentage of “strict” self-citations is very small. This holds both for ISI and for GS. However, normalizing the number of citations by the number of authors does have a noticeable impact. Here, for the range h∈[8,16] the similarity drops below the similarity with the ranking according to the total number of citations.
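Both normalizations can be sketched on a per-publication basis. The field names `citations`, `self_citations` and `n_authors` are hypothetical, and the sketch takes the number of self-citations as given rather than implementing the “strict” criterion from the main article. Dividing each citation count by the number of authors is one plausible reading of the author normalization.

```python
def h_index(citations):
    """Largest h such that at least h publications have >= h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


def normalized_counts(publications, remove_self=False, per_author=False):
    """Return adjusted citation counts, one per publication."""
    counts = []
    for pub in publications:
        count = pub["citations"]
        if remove_self:
            count -= pub["self_citations"]  # assumed to be known per publication
        if per_author:
            count /= pub["n_authors"]  # fractional counts are allowed
        counts.append(count)
    return counts
```

The adjusted counts are passed to `h_index` unchanged, so the normalized index of a scientist is simply `h_index(normalized_counts(pubs, per_author=True))`.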

Random removal

Table 6 shows the stability of the h-index when citations or publications are removed uniformly at random. Both for ISI and for GS the h-index is very robust under such perturbations.
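A perturbation of this kind can be simulated as below. This is a sketch under assumptions: a fixed fraction of all citations (or of all publications) is removed, chosen uniformly at random without replacement, and the fraction and the random generator are passed in explicitly. The exact sampling procedure and removal rates of the experiments are not specified in this appendix.

```python
import random


def h_index(citations):
    """Largest h such that at least h publications have >= h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


def remove_citations(citations, fraction, rng):
    """Drop a fraction of all individual citations, chosen uniformly at random."""
    # One slot per citation, labelled with the index of the cited publication.
    slots = [i for i, count in enumerate(citations) for _ in range(count)]
    n_remove = round(fraction * len(slots))
    result = list(citations)
    for i in rng.sample(slots, n_remove):
        result[i] -= 1
    return result


def remove_publications(citations, fraction, rng):
    """Drop a fraction of the publications, chosen uniformly at random."""
    n_remove = round(fraction * len(citations))
    dropped = set(rng.sample(range(len(citations)), n_remove))
    return [count for i, count in enumerate(citations) if i not in dropped]
```

A call such as `h_index(remove_citations(counts, 0.1, random.Random(0)))` then gives the index after one random perturbation. Since removal can only lower citation counts, the perturbed h-index never exceeds the original one.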

Cite this article

Henzinger, M., Suñol, J. & Weber, I. The stability of the h-index. Scientometrics 84, 465–479 (2010). https://doi.org/10.1007/s11192-009-0098-7

DOI: https://doi.org/10.1007/s11192-009-0098-7