Abstract

While some stakeholders presume that studying abroad distracts students from efficient pursuit of their programs of study, others regard education abroad as a high impact practice that fosters student engagement and hence college completion. The Consortium for Analysis of Student Success through International Education (CASSIE) compiled semester-by-semester records from 221,981 students across 35 institutions. Of those students, 30,649 had studied abroad. Using nearest-neighbor matching techniques that accounted for a myriad of potentially confounding variables, along with exact matching on institution, the analysis found positive impacts of education abroad on graduation within 4 and 6 years and on cumulative GPA at graduation. A very small increase in credit hours earned emerged, counterbalanced by a small decrease in time-to-degree associated with studying abroad. Overall, the results warrant the conclusion that studying abroad does not impede timely graduation. To the contrary, encouraging students to study abroad promotes college completion. These results held similarly for students who had multiple study abroad experiences and for students who studied abroad in programs of varying length.


Introduction

The COVID-19 pandemic beginning in spring 2020 imposed an abrupt pause on education abroad for college students. It is likely that even after the pandemic abates, there will be heightened caution around education abroad and the potential risks it poses to students, faculty, and institutions. Whether education abroad resumes the growth it was experiencing prior to the pandemic (Institute of International Education, 2020) may depend on whether it contributes to broad institutional goals. Internationalization has become central to college and university missions and is encoded as a strategic initiative at between 50% (Helms et al., 2017) and 70% (Childress, 2009) of U.S. colleges. While increasing participation in education abroad has been the most common way in which those initiatives are implemented and assessed (Helms et al., 2017), the rebound of education abroad participation in the current environment may depend on its advancing other institutional goals, such as raising student success rates. Despite progress over the past two decades, about 58% of U.S. baccalaureate-seeking students still fail to graduate within 4 years, and 40% fail to graduate within 6 years (National Center for Education Statistics, 2018). Amid the national college completion agenda that has arisen to address this challenge, institutions are increasingly judged, and even funded, based on their improvement in college completion (e.g., Partnership for College Completion, 2018). Institutions are held accountable not only for increasing the number of students graduating but also for reducing time and credits to degree, thereby lowering the cost of college and associated debt. Finally, colleges and universities are called on to accomplish these fiscal goals while deepening learning experiences and ensuring that students gain skills and knowledge for post-graduation success. This challenge has led to a flurry of innovation, inquiry, and advocacy around interventions that elevate student success.

While findings are by no means unequivocal (see Johnson & Stage, 2018), several educational practices have been identified as exerting a high impact on college retention and completion (Kuh, 2008). Among those high impact practices are learning communities, interactions with culturally diverse students, interactions with faculty outside of class, and education abroad. Education abroad warrants attention in the context of the college completion agenda for several reasons. First, education abroad is no longer regarded as a mere nicety for elite students (cf. Chin, 2013). Prior to the pandemic, about 10% of all baccalaureate students, and about 16% of those who eventually graduate, studied abroad at some point in their college careers (Institute of International Education, 2019). Second, education abroad typically subsumes other high impact practices. The prevailing mode of education abroad in our sample is the faculty-led group program. Thus, students studying abroad can expect high interaction with faculty, a type of learning community experience, and increased exposure to culturally diverse others, all of which are elements of student engagement linked to high impact (Kuh, 2008). Finally, earlier empirical studies suggest that even after controlling for likely confounding variables, students who study abroad enjoy significantly higher probabilities of graduating in 4 or 6 years relative to peers who have not studied abroad (Rubin et al., 2014). Studying abroad has even been recommended as an intentional intervention to enhance retention for high-risk students (Metzger, 2006).

However, prior empirical work on the relationship between education abroad and completion has addressed single institutions or single state contexts, and much of it failed to adequately account for pre-existing differences between students who did and did not study abroad. Furthermore, it is unclear whether effects found in prior studies hold up in more recent cohorts, when more students from more regions, and thus presumably more diverse students, participate. The study reported here seeks to advance work in this area by investigating a recent national sample that represents a variety of institutional, cultural, and policy contexts, using statistical techniques that mitigate selection bias. Finally, this study addresses, within a single sample, a range of completion outcomes elevated by the college completion agenda and important to institutional stakeholders and leaders. The purpose of this study, therefore, is to confirm and further explore links between studying abroad and improved rates of college completion, one of the key challenges facing higher education. Accordingly, this paper addresses the question:

Controlling for pre-existing differences between those who do and do not participate in education abroad, what is the relationship between education abroad and the likelihood of graduating in 4 and 6 years, semesters to degree completion, credits earned, and GPA at graduation?

Conceptual Framework

This study is grounded in Tinto’s interactionalist theory of student departure and Astin’s developmental theory of involvement. While both acknowledge the role of student background and preparation in student success, they direct our attention to the criticality of what students do in college. Though Tinto’s and Astin’s theories originally focused on the outcome of student retention, they have been construed to encompass student success more broadly, including degree completion. Tinto’s theory asserts that student persistence is a product of successful academic and social integration into the institution (Tinto, 1975, 1993). That integration is partly determined by students’ entering characteristics and external commitments but is subsequently shaped by experiences at the institution, including academic success; interactions with faculty, staff, and other students; and activities outside the classroom. Interactions that support integration lead to student commitment to the institution and to completion (Tinto, 2005). Important to the implications of this study, Tinto placed responsibility on the institution, not just the student, for fostering student integration. Astin (1984) characterized this student interaction and engagement as involvement and proposed it as a precondition for student success. His analysis of the factors shaping student departure led him to postulate that activities that increase student involvement, defined as the amount of energy students devote to the academic experience, contribute to their development and likelihood of success.

The work of Kuh and colleagues provides a bridge to education abroad. Drawing on these and other theoretical underpinnings about the criticality of engagement to student success, as well as on empirical evidence based on this theoretical work, Kuh developed the National Survey of Student Engagement (NSSE). This instrument was designed to measure both student behaviors and students’ perceptions of their engagement in activities and practices linked to learning and development, as a means for colleges to assess the extent to which they are promoting engagement and therefore student success. Beginning in 2013, as part of a larger revision and reorganization of the survey’s engagement indicators, items were added about participation in high impact practices (NSSE, 2013). Kuh argues these practices are effective because they require significant time and effort, include meaningful interactions with faculty and other students about substantive matters, increase the likelihood of engagement with people different from oneself, typically involve frequent feedback on performance, provide opportunities to apply learning in varied settings, and can be life changing (Kuh, 2008). Among the ten high impact practices identified by Kuh, “diversity/global learning,” which includes study abroad, is the most relevant here. The current study draws on these theoretical perspectives to investigate study abroad as an educational practice that institutions can employ to enhance student engagement, interaction, and involvement, which in turn contribute to students’ integration, retention, development, and degree completion.

Education Abroad and Student Success

Enhanced academic engagement is the most frequently proposed mechanism by which studying abroad may lead to student success outcomes such as timely graduation (Rubin et al., 2014). A related mechanism is academic focusing (Hadis, 2005). Education abroad experiences often incorporate elements associated with high impact practices, including substantial time investment by students, out-of-class communication with faculty and peers, and exposure to cultural difference (Kuh, 2008). For example, in typical academic exchange programs, students are immersed in cross-cultural milieux for housing and socializing as well as for classwork, often for months at a time (Engle & Engle, 2003; cf. Goldoni, 2013). Even a 4-week self-contained traveling program that is not culturally immersive can provide extensive out-of-class contact with instructors, a writing-intensive curriculum, field research conducted in collaboration with fellow students, and structured reflection deliberately aimed at transformational learning (e.g., Bell et al., 2016). A number of quantitative studies have confirmed that student engagement, especially “deep learning” investments in researching, writing, and talking about course subject matter, is indeed fostered by studying abroad (Burns et al., 2018; Coker et al., 2018; Gonyea, 2008; Landon et al., 2017; Rourke & Kanuka, 2012).

Empirically establishing the link between studying abroad and student learning outcomes is complicated by at least three factors (McAllister-Grande & Whatley, 2020). First, education abroad programs are academically selective by design; they commonly require a minimum GPA for applicants (Adams & Reinig, 2017). Second, the population of students who choose to study abroad typically has stronger high school and prior college achievement than those who do not study abroad (Dai, 2020; Thomas & McMahon, 1998; Xu et al., 2013). Given findings that students who study abroad have better access to information about studying abroad than students who do not (Salisbury et al., 2009), it is quite possible that academic advisors play a substantial role in directing high-achieving undergraduates toward studying abroad while failing to cultivate such expectations among lower-achieving students. Typical are the findings of Hamir (2011), who found that students who studied abroad, those who applied but never participated, and those who neither applied nor participated averaged SAT scores of 1245, 1231, and 1214, respectively; their average sophomore-year college GPAs were 3.44, 3.35, and 3.12, respectively. A somewhat discordant finding emerges from research on the integrated model of student choice, which found that while certain high school and college academic experiences predicted intention to study abroad, college admissions test scores did not (Salisbury et al., 2011; Salisbury et al., 2009; see also Luo & Jamieson-Drake, 2015). Finally, the most common time for students to study abroad is during their junior year (Institute of International Education, 2018). This means that the population of students who study abroad contains a disproportionately large number of students who have been retained from the first year to the second and from the second year to the third. The subsequent “treatment” with an education abroad experience may be irrelevant to these low-risk students’ probability of completing their degrees, relative to the “untreated” portion of their first-year cohort. Many of those untreated students would have dropped out before reaching the point at which, by convention, they might have studied abroad. Thus, studies that simply compared graduation rates between those who studied abroad and those who did not within the same first-year cohort (e.g., Malmgren & Galvin, 2008) almost surely inflate the apparent effects of education abroad. Certainly, students who study abroad also differ from their peers along other dispositional and experiential dimensions that are rarely captured in institutional student information systems (Luo & Jamieson-Drake, 2015). Not surprisingly, for example, students who applied for study abroad programs were distinguished from their nonapplicant peers by higher pre-existing levels of curiosity about other cultures (Carlson, 1991). Similarly, even prior to studying abroad, program applicants were more open minded than nonapplicants (Niehoff et al., 2017).

To address the challenges of isolating the possible effects of education abroad on subsequent college outcomes, an earlier federally funded large-sample project, the Georgia Learning Outcomes of Students Studying Abroad Research Initiative (GLOSSARI; Sutton & Rubin, 2004, 2010), employed two strategies. First, GLOSSARI constructed a comparison group composed of students who never studied abroad but who persisted in college through the same semester after which a “matched” student left campus to study abroad. Comparison group students were also matched only within the same unit of the then 33-institution University System of Georgia. This physical matching technique resulted in a sample of 19,109 undergraduates who had studied abroad and a comparable group (in terms of retention) of 17,903 students who never studied abroad. GLOSSARI found that the 4-year graduation rate for all students who studied abroad was 7.5% points higher than for peers who did not (an 18% improvement in rate). The overall advantage for studying abroad on 6-year graduation rates was 5.3% points (a 6% improvement in rate). The advantage for African-American undergraduates who studied abroad, relative to African-Americans who did not, was about 10% points (a 13% improvement in rate). For other students of color, the 6-year graduation rate for those who studied abroad was 6% points higher than for other students of color who did not study abroad (a 6% improvement in rate).
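The GLOSSARI figures mix two kinds of comparison: percentage-point gaps and relative improvements in rate. The minimal Python sketch below computes both from two rates and backs out the comparison-group rate implied by a reported pair. The 50% versus 40% inputs are hypothetical, and because the reported GLOSSARI figures are rounded, any rate backed out from them is only approximate.

```python
def pp_and_relative(rate_treated, rate_comparison):
    """Return (percentage-point gap, relative improvement in %) for two rates given in %."""
    pp_gap = rate_treated - rate_comparison
    relative_pct = 100.0 * pp_gap / rate_comparison
    return pp_gap, relative_pct


def implied_comparison_rate(pp_gap, relative_pct):
    """Comparison-group rate (in %) implied by a point gap and a relative improvement."""
    return 100.0 * pp_gap / relative_pct


# Hypothetical rates: 50% vs 40% is a 10-point gap but a 25% relative improvement.
print(pp_and_relative(50.0, 40.0))                 # (10.0, 25.0)

# Rounded GLOSSARI 4-year figures (7.5 points, 18%) imply a comparison rate near 41.7%.
print(round(implied_comparison_rate(7.5, 18), 1))  # 41.7
```

The same point gap always corresponds to a larger relative improvement when the comparison rate is low, which is why the 4-year figures show a bigger relative gain than the 6-year figures.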

GLOSSARI also conducted logistic regressions controlling for the effects of (a) college GPA the semester prior to the target study abroad event, (b) cumulative hours enrolled prior to the target semester, (c) SAT score at admission, and (d) high school GPA. Results indicated that students who had studied abroad had 10% higher odds of graduating in 4 years, compared with those who never studied abroad. There was no significant effect on odds of graduating in 6 years. In sum, GLOSSARI demonstrated that education abroad does not impede timely graduation. To the contrary, the experience seemed to increase the likelihood of college completion.
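“10% higher odds” is an odds ratio of about 1.10, which is easy to misread as a 10-point or 10% increase in probability. The sketch below converts an odds ratio into the probability it implies for a given baseline. The 40% baseline is purely hypothetical and chosen for illustration; it is not a GLOSSARI figure.

```python
import math


def prob_after_odds_ratio(p_baseline, odds_ratio):
    """Probability implied by multiplying the baseline odds by an odds ratio."""
    baseline_odds = p_baseline / (1.0 - p_baseline)
    new_odds = baseline_odds * odds_ratio
    return new_odds / (1.0 + new_odds)


# "10% higher odds" corresponds to an odds ratio of 1.10, i.e. a logit coefficient of ln(1.10).
beta = math.log(1.10)
print(round(beta, 4))                                          # 0.0953

# With a hypothetical 40% baseline, the implied probability rises to about 42.3%.
print(round(prob_after_odds_ratio(0.40, math.exp(beta)), 4))   # 0.4231
```

The point change implied by a fixed odds ratio depends on the baseline: it is largest near 50% and shrinks as the baseline approaches 0 or 1.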

Several subsequent studies likewise examined the association between education abroad and college completion, but only for single institutions (e.g., Hamir, 2011). Several of these studies adopted research designs similar to GLOSSARI’s by using either sampling or regression techniques to reduce the effects of confounding variables (e.g., DeSalvo & Roe, 2017; Schneider & Thornes, 2018; Xu et al., 2013). Results of these additional studies confirmed GLOSSARI’s conclusions about the positive association between education abroad and college completion.

The Consortium for Analysis of Student Success through International Education

These prior studies regarding the advantage of studying abroad for accelerating college completion are enlightening; they debunk the common supposition that studying abroad interferes with timely graduation. While they make some efforts to control for confounding variables that might also account for college completion, with one exception (DeSalvo & Roe, 2017), they fail to make use of more sophisticated statistical matching techniques to better isolate the effects of education abroad (Haupt et al., 2018). Moreover, the GLOSSARI multi-institution study was limited to a single state and to public institutions. Accordingly, the Consortium for Analysis of Student Success through International Education (CASSIE) was established in 2017 to provide a fuller account of the linkages between education abroad (and other forms of international education) and student success indices including college completion. CASSIE’s broad objectives were as follows:

- Create a multi-institutional database documenting participation in international education and indices of student success that is diverse with respect to geography, institution type, and student background.
- Ascertain the effects of education abroad within groups that are under-represented in international education.
- Employ best practices in statistical matching techniques to control for variables that may be confounded with both participation in education abroad and student success.
- Provide participating campuses with information about how institution-specific outcomes associated with international education compare to benchmarks.
- Build capacity for collaboration between campus offices of institutional research and international education.

As of 2019, usable CASSIE data were contributed by 35 institutions, representing 19 states.

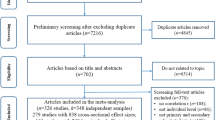

Methods

Data

The CASSIE data set consists of student-level information from the public 4-year universities in Georgia (hereafter, the University System of Georgia, or USG) as well as public and private universities across the country. Table 1 provides information on these institutions, including control, location, Carnegie Classification, and undergraduate enrollment. Additionally, Table 1 provides the sample size of students in the CASSIE data set and the percent of students who ever studied abroad (discussed further below). The participating institutions were mostly public (91%), mostly in the Doctoral and Master’s granting Carnegie categories (94%), and had enrollments between 4,000 and 50,000 students.

The data set was constructed using two approaches; data collection protocols were approved by the USG’s Institutional Review Board. For the USG institutions, academic records were retrieved through the authors’ administrative access to system-wide student-level data. However, because the administrative data lacked information on whether and when students studied abroad, the education abroad office at each USG institution provided these additional data, which were then merged with the administrative records. Eight USG institutions were dropped from the sample, either because they did not offer bachelor’s degrees or because they had zero or nearly zero study abroad students (see Footnote 1). For non-USG institutions, the CASSIE research team developed a data template and definitions document and worked with each institution to obtain the data in a consistent and secure manner. In every case, data sets from several campus offices needed to be merged; most commonly, education abroad data needed to be combined with registrar’s data, and sometimes with separate admissions and financial aid data. After extensive efforts to recruit a national sample of diverse institutions, 17 non-USG institutions ultimately supplied conforming data sets.
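The merge of education abroad office records with registrar records can be sketched as a left join on student ID. This is a minimal illustration under stated assumptions, not the CASSIE template: the field names (`student_id`, `term`, `cohort`) and the sortable `YYYYTT` term codes are hypothetical, and each abroad row is assumed to represent one experience. Abroad records with no registrar match are returned separately, since in practice those usually need manual review.

```python
from collections import defaultdict


def merge_abroad_flags(registrar_rows, abroad_rows):
    """Left-join education abroad records onto registrar records by student ID.

    Assumes each abroad row is one experience with a sortable term code
    (hypothetical format). Returns the merged rows plus IDs that appear
    only in the abroad data.
    """
    terms_by_student = defaultdict(list)
    for row in abroad_rows:
        terms_by_student[row["student_id"]].append(row["term"])

    registrar_ids = {row["student_id"] for row in registrar_rows}
    orphan_ids = set(terms_by_student) - registrar_ids  # abroad records with no registrar match

    merged = []
    for row in registrar_rows:
        terms = sorted(terms_by_student.get(row["student_id"], []))
        merged.append({
            **row,
            "ever_abroad": bool(terms),
            "n_abroad": len(terms),
            "first_abroad_term": terms[0] if terms else None,
        })
    return merged, orphan_ids


registrar = [{"student_id": "A1", "cohort": 2010}, {"student_id": "B2", "cohort": 2011}]
abroad = [{"student_id": "A1", "term": "201305"},
          {"student_id": "A1", "term": "201205"},
          {"student_id": "Z9", "term": "201401"}]
merged, orphans = merge_abroad_flags(registrar, abroad)
print(merged[0]["n_abroad"], merged[0]["first_abroad_term"], orphans)  # 2 201205 {'Z9'}
```

Keeping the first abroad term alongside the ever-abroad flag matters later, because matching on semesters enrolled depends on knowing when a student left campus.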

The sample consists of all bachelor’s degree-seeking first-time first-year students (IPEDS definition) who matriculated at their respective institutions in Fall 2010 or Fall 2011; these cohorts were selected so that graduation within 6 years could be tracked at the time the data were compiled. Transfer students and students pursuing associate degrees were excluded. The final sample consisted of 221,981 students across 35 institutions. Of these students, 30,649, or 14%, studied abroad at least once during the 6-year observation period, which is consistent with national findings (Institute of International Education, 2018). The percent of students in the Fall 2010 and 2011 cohorts who ever studied abroad ranged from a low of 1% to a high of 42% across the institutions.

Study abroad programs and experiences can differ along a variety of dimensions: length, destination, language of instruction, administrative structure, and frequency. Table 2 provides detail on two important features: the frequency and length of study abroad participation. Of the 30,649 participants, 86.6% studied abroad just once, 10.6% twice, and 2.8% three or more times. Of those students who studied abroad once, 5.4% participated in programs of less than 2 weeks, 43.9% studied abroad for 2–8 weeks, 8.2% studied abroad for 8 weeks to one semester, 38.7% participated in semester-long programs, and 3.8% participated in programs extending beyond a single semester. The unusual granularity of the CASSIE data set allowed us to examine whether the effects of participation varied with these features.
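The frequency and length categories in Table 2 amount to binning each experience. The sketch below shows one way to do that; the week cut points (2, 8, 14, 20) and the idea of classifying by weeks at all are hypothetical assumptions, since the exact boundaries between “8 weeks to one semester,” “one semester,” and “beyond a semester” are not given here.

```python
from bisect import bisect_right
from collections import Counter

# Hypothetical cut points in weeks; the study's exact category boundaries are not stated.
CUTS = [2, 8, 14, 20]
LABELS = ["<2 weeks", "2-8 weeks", "8 weeks to one semester",
          "one semester", "more than one semester"]


def length_category(weeks):
    """Map a program length in weeks to a duration category (half-open bins)."""
    return LABELS[bisect_right(CUTS, weeks)]


def dosage_group(n_experiences):
    """Collapse the number of study abroad experiences into 1, 2, or 3+."""
    return "3+" if n_experiences >= 3 else str(n_experiences)


def shares(labels):
    """Percent of observations in each category, rounded to one decimal."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: round(100.0 * n / total, 1) for label, n in counts.items()}


print(length_category(4), "|", length_category(16))      # 2-8 weeks | one semester
print(shares(dosage_group(n) for n in [1, 1, 2, 4]))     # {'1': 50.0, '2': 25.0, '3+': 25.0}
```

Using `bisect_right` makes each bin closed on the left, so a program of exactly 8 weeks falls into the next category; a different convention would simply shift the cut points.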

For each student, term-by-term records of enrollment, cumulative GPA, declared major, and degrees conferred (if any) were obtained for up to 6 years following first matriculation, allowing us to examine 6-year graduation outcomes. For instance, for the Fall 2010 cohort we observe students up to and including Summer 2016, and for the Fall 2011 cohort up to and including Summer 2017. In addition, demographics (age, race/ethnicity, sex), socio-economic information (Pell or other need-based aid receipt), and prior academic characteristics (high school GPA, admissions test scores) were collected and used in our analysis.
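The sample definition and observation window translate into a cohort filter and a deadline check: a Fall cohort entering in year Y is observed through Summer of Y + 6, so “graduated within n years” means a degree conferred by Summer of Y + n. The sketch below encodes that rule; the `(year, season)` term format and the student field names are hypothetical.

```python
# Hypothetical term encoding: (calendar_year, season), ordered Spring < Summer < Fall.
SEASON_ORDER = {"Spring": 0, "Summer": 1, "Fall": 2}


def term_key(year, season):
    """Map a (year, season) term to a sortable tuple."""
    return (year, SEASON_ORDER[season])


def in_sample(student):
    """First-time, first-year, bachelor's-seeking entrants in Fall 2010 or Fall 2011."""
    return (
        student["first_time_first_year"]
        and student["degree_sought"] == "bachelor"
        and student["cohort_fall_year"] in (2010, 2011)
    )


def graduated_within(cohort_fall_year, grad_term, years):
    """True if the degree was conferred by the Summer term `years` years after entry."""
    if grad_term is None:  # no degree conferred during the observation window
        return False
    deadline = term_key(cohort_fall_year + years, "Summer")
    return term_key(*grad_term) <= deadline


print(graduated_within(2010, (2014, "Spring"), 4))  # True
print(graduated_within(2010, (2014, "Fall"), 4))    # False
```

Anchoring both deadlines to a Summer term matches the text’s observation windows (Summer 2016 for the Fall 2010 cohort, Summer 2017 for Fall 2011).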

Table 3 provides descriptive statistics about the sample population (reflecting information reported for the first semester of matriculation), broken down by students who had at least one education abroad experience and those who did not. As can be seen, those who studied abroad differed substantially from those who did not on a variety of characteristics. Students who studied abroad had stronger academic preparation, as reflected by high school GPA and SAT scores. In addition, education abroad students included a larger proportion of females but smaller proportions of underrepresented minorities and need-based aid recipients. Student outcomes by education abroad status appear at the bottom of Table 3. Based on descriptive statistics alone, students who studied abroad had higher 6-year graduation rates: 95.1% versus 62.7%. Terms to degree, GPA at degree, and credit hours earned at degree are calculated only for students in the full sample who graduated within our observation period. Those who studied abroad and graduated within 6 years did so about half a semester earlier than those who did not study abroad but also graduated. The education abroad group also displayed a higher mean cumulative GPA upon graduation. The right side of Table 3 provides descriptive data on the 149,186 students who graduated. Among graduates, 29,146 (20%) studied abroad. We see the same dynamics as in the full sample: study abroad participants had higher levels of academic preparation and higher percentages of female students but lower proportions of underrepresented minority and low-income students.
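The headline descriptive rates can be reproduced from the counts reported in the text, which is a useful consistency check on those counts. The sketch below uses only figures stated in this section: 221,981 students, 30,649 who studied abroad, 149,186 graduates, and 29,146 graduates who studied abroad.

```python
TOTAL_STUDENTS = 221_981
ABROAD_STUDENTS = 30_649
GRADUATES = 149_186
ABROAD_GRADUATES = 29_146


def pct(numerator, denominator, digits=1):
    """Percentage rounded to `digits` decimal places."""
    return round(100.0 * numerator / denominator, digits)


abroad_grad_rate = pct(ABROAD_GRADUATES, ABROAD_STUDENTS)
non_abroad_grad_rate = pct(GRADUATES - ABROAD_GRADUATES, TOTAL_STUDENTS - ABROAD_STUDENTS)
abroad_share_of_sample = pct(ABROAD_STUDENTS, TOTAL_STUDENTS)
abroad_share_of_graduates = pct(ABROAD_GRADUATES, GRADUATES)

print(abroad_grad_rate, non_abroad_grad_rate)             # 95.1 62.7
print(abroad_share_of_sample, abroad_share_of_graduates)  # 13.8 19.5
```

These match the reported 95.1% and 62.7% 6-year rates, and round to the 14% and 20% shares given in the text.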

Analysis

Given that students who study abroad differ from those who do not in a variety of observed and unobserved ways that may affect the outcomes of interest, it is necessary to control for the effects of those potentially confounding variables on student success outcomes (Haupt et al., 2018). In the absence of a randomized controlled trial, researchers must employ methods that attempt to address this endogeneity. We took two approaches. In the first approach, we ran ordinary least squares (OLS) regressions, controlling for multiple covariates such as gender, race, and high school GPA, along with institution fixed effects. In the second approach, we estimated nearest neighbor matching models in which students from the same institution who differed in study abroad participation were matched on those covariates (Stuart, 2010). Matching analysis has become a routine tool for analyzing the impact of educational interventions in which random assignment is not possible (Lane et al., 2012), and it has been employed in previous studies of education abroad outcomes (Waibel et al., 2015; Whatley & Clayton, 2020). Particularly in quasi-experimental analyses of large data sets containing heterogeneous participants, authorities recommend propensity score matching as “one method…of selecting the most appropriate data for reliable estimation of causal effects” (Stuart & Rubin, 2008, p. 156; see also Rosenbaum & Rubin, 1985).
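Institution fixed effects can be pictured as comparing students only against others at the same institution. With a single binary treatment, the fixed-effects OLS coefficient equals the slope obtained after subtracting each institution’s mean from both the outcome and the treatment indicator (the within transformation). The sketch below illustrates this on hypothetical data in which institution B has both a higher baseline and more participants; it omits the other covariates and the clustered standard errors used in the study.

```python
from collections import defaultdict


def pooled_slope(rows):
    """OLS slope of y on d ignoring institution."""
    d_bar = sum(d for _, d, _ in rows) / len(rows)
    y_bar = sum(y for _, _, y in rows) / len(rows)
    num = sum((d - d_bar) * (y - y_bar) for _, d, y in rows)
    den = sum((d - d_bar) ** 2 for _, d, _ in rows)
    return num / den


def within_institution_slope(rows):
    """OLS slope after demeaning y and d within each institution (one-way fixed effects)."""
    by_inst = defaultdict(list)
    for inst, d, y in rows:
        by_inst[inst].append((d, y))
    num = den = 0.0
    for obs in by_inst.values():
        d_bar = sum(d for d, _ in obs) / len(obs)
        y_bar = sum(y for _, y in obs) / len(obs)
        for d, y in obs:
            num += (d - d_bar) * (y - y_bar)
            den += (d - d_bar) ** 2
    return num / den


# Hypothetical (institution, studied_abroad, outcome) rows with a true effect of 0.1;
# institution B has a higher baseline outcome and a larger share of participants.
rows = [("A", 1, 0.6), ("A", 0, 0.5), ("A", 0, 0.5),
        ("B", 1, 0.9), ("B", 1, 0.9), ("B", 0, 0.8)]
print(round(within_institution_slope(rows), 3), round(pooled_slope(rows), 3))  # 0.1 0.2
```

The pooled slope doubles the true effect because participation is concentrated at the higher-performing institution; the within-institution slope removes that institution-level confounding.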

Both ordinary least squares regression and matching require strong assumptions, exogeneity of the error term and conditional independence, which may or may not hold. Exogeneity requires that unobserved variables be uncorrelated with treatment, and conditional independence requires that, once students are matched on observed characteristics, participation in education abroad is unrelated to unobserved characteristics, such as motivation or global mindedness, that also affect outcomes. Recently, alternative procedures have been proposed to help address the impact of unobserved variables on selection into treatment conditions (e.g., Oster, 2019), but the analysis reported here relies on more intuitive matching analyses. Because these assumptions cannot be verified for either the regression or the matching results, the reader should be cautious about inferring causality from the estimates.

The model we present employed both nearest neighbor matching techniques (for student background and prior achievement variables) as well as exact matching on institution. Students who studied abroad were matched with nearest neighbors who did not study abroad, based on observed characteristics that may have influenced the decision to study abroad (or not) as well as on factors that independently contribute to the college success outcomes we examined. These matching variables included gender, race/ethnicity, and indicators of achievement prior to college such as high school GPA and SAT and are listed in the upper half of Table 3. Students were also matched on the total number of semesters enrolled in college to minimize the comparison of students who studied abroad with students who dropped out of college prior to having the opportunity to study abroad. In addition, we matched students on institution (exact match) so that study abroad students were only matched with non-study abroad students from the same institution. The exact matching on institution mitigates the influence of institution-specific factors that may drive student outcomes, e.g., student success advising initiatives (Bettinger & Baker, 2014), or study abroad culture on campus. In the OLS regressions, we also included institution fixed effects to account for unobserved factors peculiar to students at the same institution. For both the matching and OLS models, we clustered standard errors at the institution level.
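The matching design (nearest neighbors on covariates, exact match on institution, with replacement) can be sketched in a few lines. This is not Stata’s `teffects nnmatch`: it assumes an inverse-variance-weighted Euclidean distance (the study’s metric is not stated here), returns the k nearest controls without Stata’s tie handling or bias adjustment, and uses hypothetical field names and data. A treated student with no control at the same institution receives an empty match list.

```python
import math
from statistics import pvariance


def nn_match(students, covariates, k=1):
    """Return {treated_id: [control_ids]}: k nearest controls at the same institution.

    Matching is with replacement: a control may serve several treated students.
    Distance is Euclidean with each covariate weighted by 1 / (full-sample variance).
    """
    weights = {}
    for c in covariates:
        v = pvariance([s[c] for s in students])
        weights[c] = 1.0 / v if v > 0 else 0.0

    def dist(a, b):
        return math.sqrt(sum(weights[c] * (a[c] - b[c]) ** 2 for c in covariates))

    matches = {}
    for t in students:
        if not t["treated"]:
            continue
        # Exact match on institution: only controls from the treated student's campus.
        pool = [s for s in students if not s["treated"] and s["inst"] == t["inst"]]
        pool.sort(key=lambda s: dist(t, s))
        matches[t["id"]] = [s["id"] for s in pool[:k]]
    return matches


students = [
    {"id": "T1", "inst": "A", "treated": True, "hs_gpa": 3.8, "terms": 8},
    {"id": "C1", "inst": "A", "treated": False, "hs_gpa": 3.7, "terms": 8},
    {"id": "C2", "inst": "A", "treated": False, "hs_gpa": 3.0, "terms": 4},
    {"id": "C3", "inst": "B", "treated": False, "hs_gpa": 3.8, "terms": 8},
    {"id": "T2", "inst": "B", "treated": True, "hs_gpa": 3.1, "terms": 6},
    {"id": "C4", "inst": "B", "treated": False, "hs_gpa": 3.2, "terms": 6},
]
print(nn_match(students, ["hs_gpa", "terms"]))  # {'T1': ['C1'], 'T2': ['C4']}
```

Note that C3 is a perfect covariate match for T1 but is never considered, because it attends a different institution; that is the point of the exact-match restriction.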

In our primary analysis, students who studied abroad once or more than once were grouped together into a single treated category and compared to controls who never studied abroad. Also of interest is whether outcomes differ with the intensity or “dosage” of this treatment. Do student outcomes differ significantly for those who study abroad two or more times compared with those who study abroad just once? Similarly, study abroad programs of varying lengths could have heterogeneous impacts, especially on outcomes directly related to the passage of time, such as graduating in 4 or 6 years and semesters to degree. To this end, we also estimated matching models in which we disaggregated the number of study abroad experiences as well as study abroad experiences of varying durations. We used the Stata command teffects nnmatch (StataCorp, 2019) to construct our matches via nearest-neighbor matching and to calculate treatment effects. In this approach each treated student is paired with one or more control students who are “nearest” to that student, where nearness is determined by a weighted function of all the matching variables (Stuart & Rubin, 2008). Our choice of nearest-neighbor matching over other matching methods, such as subclassification or full matching, was driven by several factors. First, given differences in institutional culture, mission, and policy, it seemed paramount to compare only students who attend the same institution, and the nearest-neighbor method facilitated such intra-institutional matching. Second, as noted by Abadie and Imbens (2002), applying the bootstrap to estimate standard errors for matching estimators does not yield valid inference; teffects nnmatch, however, employs a variance estimator that is valid for these estimators. Finally, where the number of controls far exceeds the number of treated individuals, as is the case when comparing students with and without education abroad experiences, the potential loss in sample size due to a dearth of sufficiently near matches is of reduced concern.
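Once each treated student has a set of one or more matched controls, a treatment effect can be estimated by comparing each treated outcome with the mean outcome of its matched controls. The sketch below computes the average effect on the treated (ATT) from such matched sets; it is an illustration only, since the text does not state which estimand Table 7 reports, and it omits bias adjustment and the Abadie–Imbens standard errors. The IDs and 0/1 outcomes are hypothetical.

```python
def att(outcomes, matches):
    """Average effect on the treated from {treated_id: [control_ids]} matched sets.

    Each treated outcome is compared with the mean outcome of its matched controls;
    treated students with no matches (off common support) are skipped.
    """
    effects = []
    for treated_id, control_ids in matches.items():
        if not control_ids:
            continue
        counterfactual = sum(outcomes[c] for c in control_ids) / len(control_ids)
        effects.append(outcomes[treated_id] - counterfactual)
    return sum(effects) / len(effects)


# Hypothetical 6-year graduation indicators (1 = graduated).
outcomes = {"T1": 1, "T2": 1, "C1": 1, "C2": 0, "C4": 0}
matches = {"T1": ["C1", "C2"], "T2": ["C4"]}
print(att(outcomes, matches))  # 0.75
```

Averaging over several neighbors per treated student smooths the counterfactual, at the cost of using somewhat less similar controls.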

In a matching analysis, common support refers to the overlap between treated and control observations in their covariate distributions; where common support is lacking, some observations have no appropriate match (Caliendo & Kopeinig, 2008). We used nearest neighbor matching with replacement, meaning the same control could be matched with more than one treated student. In addition, we allowed each treated student to be matched with more than one control neighbor; teffects nnmatch determines the optimal number of neighbors. Table 4 provides diagnostic information on our matches: every treated student was found to have a match among the controls, but not all controls were used as matches. One concern is whether only a small number of controls were matched with the treated observations. We find that this is not the case: the ratio of unique matched controls to treated students is 77% across our entire sample and ranges from a low of 57% to a high of 105% (a value greater than 100% is possible because we allow for more than one nearest neighbor).
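The two match diagnostics described above, treated students left without a match and the ratio of unique matched controls to matched treated students, can be computed directly from the matched sets. The sketch below uses a hypothetical `{treated_id: [control_ids]}` mapping; it shows how reuse of controls pushes the ratio below 100% and how multiple neighbors can push it above.

```python
def match_diagnostics(matches):
    """Summarize a {treated_id: [control_ids]} mapping produced by matching with replacement."""
    matched = [t for t, controls in matches.items() if controls]
    unmatched = [t for t, controls in matches.items() if not controls]  # off common support
    unique_controls = {c for controls in matches.values() for c in controls}
    ratio = len(unique_controls) / len(matched) if matched else float("nan")
    return {"unmatched_treated": len(unmatched), "unique_control_ratio": ratio}


# Control C1 is reused, so 2 unique controls serve 3 matched treated students; T4 has no match.
print(match_diagnostics({"T1": ["C1"], "T2": ["C1"], "T3": ["C2"], "T4": []}))
# {'unmatched_treated': 1, 'unique_control_ratio': 0.6666666666666666}

# With several neighbors per treated student, the ratio can exceed 1.
print(match_diagnostics({"T1": ["C1", "C2", "C3"], "T2": ["C4"]})["unique_control_ratio"])  # 2.0
```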

We employed two additional diagnostic tests to assess match quality (the extent to which matched students were comparable): a comparison of distributions for continuous variables, and standardized bias. As an example of the former, we compared the distribution of high school GPA before and after our matches were constructed. Figures 1a and 1b display that comparison. Within each figure, the chart on the left shows the distribution of high school GPA across treated and control students prior to matching, and the chart on the right shows it after the matches were created. Figure 1a shows the distribution for our entire sample of students, whereas Fig. 1b shows the results for the sub-sample of those who graduated within 6 years. Prior to matching, the high school GPA of control students was substantially different from that of treated students; after matching, the GPAs were much more comparable.

Table 5 shows the results of the standardized bias calculations. Again, we show results for all students and for the subgroup of students who graduated within 6 years. Standardized bias measures the difference in a covariate between treated and control students, computed before and after matching, by taking the difference in group means and scaling it by the standard deviation. An absolute value close to 0 indicates little difference in that variable between treated and control students, whereas an absolute value close to 1 indicates a substantial difference. In the unmatched data, the values differed from zero for most covariates; in the matched sample, these differences were substantially reduced. In summary, the diagnostic tests indicate that match quality was sufficient to produce reliable matching estimates.
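A minimal sketch of the standardized bias for one covariate follows. It assumes the common pooled-standard-deviation form, (mean of treated minus mean of controls) divided by the square root of the average of the two group variances; the exact denominator used for Table 5 is not stated here, and the statistic is often reported multiplied by 100. The function name and input values are hypothetical.

```python
from statistics import mean, variance


def standardized_bias(treated, control):
    """(mean_t - mean_c) / sqrt((var_t + var_c) / 2).

    Values near 0 indicate balance on this covariate. For matching with
    replacement, pass the matched controls with repeats so that a control
    used twice counts twice.
    """
    pooled_sd = ((variance(treated) + variance(control)) / 2) ** 0.5
    return (mean(treated) - mean(control)) / pooled_sd


treated_gpa = [3.0, 3.5, 4.0]
print(standardized_bias(treated_gpa, [2.0, 2.5, 3.0]))  # 2.0, before matching
print(standardized_bias(treated_gpa, [3.0, 3.5, 4.0]))  # 0.0, after matching
```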

Results

We provide the OLS regression results in Table 6 for five outcomes: graduation within 6 years, graduation within 4 years, and, conditional on graduating, total credit hours earned, GPA at graduation, and time to degree (in semesters). In all of the regressions we control for the covariates listed in the upper half of Table 3, along with institution fixed effects, and cluster standard errors by institution. The estimates indicate that students who studied abroad were 8 percentage points more likely to graduate within 6 years than students who did not. The 4-year graduation estimate is even larger: studying abroad is associated with a 14.8 percentage point difference. Conditional on graduating within 6 years, students who studied abroad earned their degrees about 4.5 weeks faster than those who did not (a coefficient of 0.283 semesters × 16 weeks per semester), graduated with a GPA 0.12 points higher, and earned about 1.7 more credit hours over their college careers.
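The conversion of the time-to-degree coefficient into weeks can be written out as a one-line calculation. The 16-week semester is the convention stated in the text; the symbol names are ours.

```latex
\Delta_{\text{weeks}} = \hat{\beta}_{\text{semesters}} \times 16\ \tfrac{\text{weeks}}{\text{semester}}
  = 0.283 \times 16 = 4.528 \approx 4.5\ \text{weeks}
```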

Results of the matching analysis appear in Table 7 and are largely consistent with the findings of the OLS regressions. For example, the matching estimates indicate that, among our matched pairs, education abroad had a positive effect on the likelihood of timely graduation, although the magnitude of the effect was smaller than in the OLS estimates. Those who studied abroad were 3.8 percentage points more likely to graduate with a bachelor’s degree within 6 years and 6.2 percentage points more likely to graduate within 4 years, compared with those who never studied abroad. Among those who graduated within 6 years, education abroad students completed their degrees in 0.16 fewer semesters on average (about 2.6 weeks sooner) than students who never studied abroad. They finished college with only 2.19 more credit hours on average than those who did not study abroad, and their GPA at graduation was on average 0.16 points higher. Taken together, these estimates suggest that, across the full sample, study abroad contributed to higher completion outcomes without lengthening time to degree or adding substantial excess credits.
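How a matching estimate is formed from matched sets can be sketched as follows: each treated student's outcome is compared with the mean outcome of that student's matched controls, and the differences are averaged. This is an assumption-laden illustration. It computes a simple, unadjusted effect on the treated, whereas the published estimates may be average treatment effects and may include a bias adjustment (Abadie & Imbens, 2002). The function name and data are hypothetical; for a 1/0 graduation outcome, the result multiplied by 100 is in percentage points.

```python
from statistics import mean


def att_from_matches(outcome, matches):
    """Simple matching estimate of the effect on the treated.

    outcome: {student_id: outcome value}, e.g. 1/0 for graduated in 6 years.
    matches: {treated_id: [control_id, ...]} from a nearest-neighbor step.
    Each treated student is compared with the mean outcome of its matched
    controls; the estimate is the average of those differences.
    """
    diffs = [outcome[t] - mean(outcome[c] for c in c_ids)
             for t, c_ids in matches.items()]
    return mean(diffs)


grad6 = {"t1": 1, "t2": 1, "c1": 0, "c2": 1, "c3": 0}
matched = {"t1": ["c1", "c2"], "t2": ["c3"]}
print(att_from_matches(grad6, matched))  # 0.75 on this toy data
```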

Beyond the overall impact of studying abroad compared with never studying abroad, we examined possible “dosage effects” of studying abroad by disaggregating education abroad experiences in two ways: by the number of study abroad programs in which a student participated and by the duration of those programs.

Table 8 shows the matching estimates by the number of semesters during which a student was enrolled in a study abroad program. This table disaggregates the impact of study abroad for students who studied abroad once, twice, and three or more times, each relative to students who never studied abroad. The first row shows the estimates restricted to students who studied abroad once (versus not at all). These estimates are remarkably close to those in Table 7, suggesting that the aggregate findings were not driven by “higher dose” students, i.e., those with more than one study abroad experience. Comparing those with two study abroad experiences to those with none, we find effect sizes that are larger (in absolute value) than those for students who studied abroad once. For students with three or more experiences, however, the estimates rest on smaller samples, and we find statistically significant effects only for the three outcomes conditional on graduating (i.e., semesters to graduation, GPA at graduation, and credit hours at graduation). To summarize, these results suggest that the contribution of study abroad to student success accrues to all who study abroad, whether once or on multiple occasions. Students with multiple study abroad experiences do appear to enjoy further gains, though of small magnitude.
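The comparison groups behind Table 8 can be sketched as a partition of students by number of study abroad experiences, with never-participants as the shared control pool; each (treated, control) pair would then go through its own matching run. The function name and input format are hypothetical.

```python
def dosage_groups(n_programs):
    """Split students into dose groups, each paired with the never-abroad pool.

    n_programs: {student_id: number of study abroad experiences}.
    Returns {label: (treated_ids, control_ids)}.
    """
    never = [s for s, n in n_programs.items() if n == 0]
    bands = {
        "once": lambda n: n == 1,
        "twice": lambda n: n == 2,
        "3+": lambda n: n >= 3,
    }
    return {
        label: ([s for s, n in n_programs.items() if in_band(n)], never)
        for label, in_band in bands.items()
    }


groups = dosage_groups({"a": 0, "b": 1, "c": 2, "d": 4, "e": 0})
for label, (treated_ids, control_ids) in groups.items():
    print(label, treated_ids, "vs", control_ids)
```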

Finally, we examined whether there was evidence of a “dosage effect” based on study abroad program length. To do this, we first excluded students who studied abroad more than once (since their multiple programs might be of different durations). We then constructed matches between students who studied abroad and those who never did, disaggregating the former by the duration of their education abroad program. For example, we compared students who studied abroad for less than 2 weeks with otherwise similar matched students who did not study abroad at all, students who studied abroad for 2–4 weeks with matched students who never studied abroad, and so on. Table 9 shows those matching estimates. Depending on program duration, the advantage for those who studied abroad ranged from 3.1 to 5.9 percentage points in the probability of graduating within 6 years and from 3.4 to 9.8 percentage points in the probability of graduating within 4 years. The positive impact on the likelihood of graduation is broadly similar across program lengths, although for programs of one semester or longer the effect was smaller. For outcomes conditional on graduating, studying abroad was associated with shorter time-to-degree for programs up to one semester in length, whereas programs longer than one semester delayed degree completion to a small extent. Program length does not appear to differentially affect final GPA, and the difference in credit hours earned varied only between 1 and 3 credit hours, depending on program duration. Overall, these estimates suggest that the contribution of study abroad is only weakly associated with program length.
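The duration analysis can be sketched as two steps: drop students with more than one program, then bin the remaining participants by program length. Only the first two bins ("<2 weeks", "2-4 weeks") are named in the text; the later cut points below are hypothetical placeholders, and merging "one semester" with "more than one semester" into a single bin is our simplification. The 16-week semester follows the text's own convention.

```python
SEMESTER_WEEKS = 16  # weeks per semester, the convention used in the Results text

# Bin edges in weeks. Only "<2 weeks" and "2-4 weeks" are named in the text;
# the remaining cut points are HYPOTHETICAL placeholders.
DURATION_BINS = [
    ("<2 weeks", 0, 2),
    ("2-4 weeks", 2, 4),
    ("4 weeks to <1 semester", 4, SEMESTER_WEEKS),
    ("1 semester or longer", SEMESTER_WEEKS, float("inf")),
]


def duration_groups(programs):
    """Bin single-program students by program length.

    programs: {student_id: [length_in_weeks, ...]} with one entry per program.
    Returns (groups, never): groups maps bin label -> student ids, and never
    lists students with no program (the comparison pool). Students with two
    or more programs are dropped, as in the text.
    """
    groups = {label: [] for label, _, _ in DURATION_BINS}
    never = []
    for sid, lengths in programs.items():
        if not lengths:
            never.append(sid)
        elif len(lengths) == 1:
            for label, lo, hi in DURATION_BINS:
                if lo <= lengths[0] < hi:
                    groups[label].append(sid)
                    break
    return groups, never


groups, never = duration_groups(
    {"a": [], "b": [1], "c": [3], "d": [16], "e": [2, 8], "f": [10]}
)
print(groups, never)  # student "e" (two programs) appears in neither
```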

Discussion

This study provides evidence that participation in education abroad is associated with an increased likelihood of timely college completion and a higher GPA at graduation, without an increase in time to degree or the accrual of substantial extra credits. These findings linking education abroad to improved college completion are consistent with findings from single-institution analyses (DeSalvo & Roe, 2017; Hamir, 2011; Scheider & Thornes, 2018; Xu et al., 2013) as well as from a state system composed of multiple institutions (Rubin et al., 2014). It is difficult to compare effect sizes across these studies, though, because their metrics of timely graduation differed (number of semesters, 4- or 6-year rates, probabilities of 4- or 6-year graduation). The present findings are likely the most conservative estimates among these studies, because the matching techniques used here to compare those who studied abroad with those who did not were statistically constrained and controlled for the largest number of potentially confounding variables. Indeed, the OLS multiple regressions reported in Table 6 yielded larger estimated effects on graduation and time-to-degree than the matching results reported in Table 7. In addition, the findings of this study advance previous work by showing that the effects of study abroad are not unique to particular institutions or regions and are therefore more generalizable. Further, the findings illustrate that the association of study abroad with college completion persists in relatively recent cohorts, even though the pool of students who participate in education abroad is larger than it was in earlier studies.

Furthermore, this study’s examination of time-to-degree and credits at graduation allays concerns that education abroad imposes additional costs on students beyond the financial cost of participating in the program. The fact that education abroad students graduated on average with just a fraction of one standard course more than their non-study abroad peers contradicts common suppositions that studying abroad will contribute to substantial unapplied credits or excess effort. Similarly, the finding that education abroad students graduated on average 0.16 semesters earlier than those who did not study abroad dispels concerns that studying abroad slows time to degree or results in students incurring the cost of additional terms of enrollment. Additionally, our exploration of “dosage effects” reported in Tables 8 and 9 suggests that the benefits of studying abroad accrue to those who study abroad only once and to those who study abroad in short-duration programs. Some additional benefits with respect to student success outcomes do accrue from studying abroad on more than one occasion and from longer programs, up to one semester in length.
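The phrase "a fraction of one standard course" can be made concrete with the matching estimate of 2.19 extra credit hours. The 3-credit-hour course is an assumption about a typical U.S. course load, not a figure from the study.

```latex
\frac{2.19\ \text{credit hours}}{3\ \text{credit hours per course (assumed)}} = 0.73\ \text{course}
```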

The findings have important implications for both theory and practice. Returning to our conceptual framework, these findings lend support to theories postulating that student interaction, involvement, and engagement (here in the form of education abroad) contribute to student success (Astin, 1984; Tinto, 1975, 1993). These findings furthermore support the position that global learning is a high impact practice that fosters positive academic outcomes (Kuh, 2008). They may also support Hadis’ (2005) conjecture that education abroad results in academic focusing, which in turn results in efficient degree completion. Because we controlled for the number of terms enrolled, study abroad appears to be associated with a higher likelihood of actually completing degree requirements, not merely of remaining enrolled.

In practice, international education practitioners can use these findings to promote study abroad participation among students. That education abroad contributes to the likelihood of degree completion without adding time or substantial credits is a compelling argument. This confirmation of academic outcomes supplements the abundant evidence that study abroad contributes to various affective and attitudinal outcomes (Coker et al., 2018; Haas, 2018), creating a comprehensive argument for the benefits of participation. Such evidence will be especially important in a post-pandemic world, as students and parents weigh the benefits of education abroad against not only its financial costs but also heightened fears of contracting illness or being forced into quarantine.

The findings reported here are also important for campus and state higher education leaders as they decide how to invest finite resources and craft academic policy. Evidence that education abroad is associated with college completion elevates it as a practice worth supporting to further both student and institutional goals. These findings have very real implications for whether study abroad is viewed as a nice enhancement for the elite few, and thus allowed to exist on the margins, or as a focus in an institution’s portfolio of mechanisms to improve student outcomes. The latter view would give global education commensurate resources and an elevated voice in institutional planning and decision making. Furthermore, treating education abroad as a focal strategy encourages institutions to pursue equity in participation and outcomes, drawing on other research findings on barriers to participation to foster access for all students.

Limitations and Future Research Directions

First, it must be acknowledged that, while advanced matching techniques help minimize the effects of confounding variables on the outcomes of interest, we remain far from establishing a causal relationship between studying abroad and student success. Unobserved variables, on which we could not match, may have exerted strong causal effects. These include individual dispositions and traits such as open-mindedness and organizational skills. Causality might be established by true experiments in which similar students are randomly assigned to an education abroad “treatment,” but such experiments are difficult to conceive (see Petzold & Moog, 2018). One source of data that future studies might exploit is records of students who applied to study abroad but for one reason or another did not participate in a program (e.g., Barclay Hamir, 2011). Those students are plausibly more similar in motivation and disposition to study abroad participants than are comparison groups drawn from the general student body who never studied abroad.

The CASSIE data consist entirely of individual-level student records obtained from student information systems and offices of international education. While we have detailed records of course-taking patterns, we have no data on individual differences in psychological factors such as willingness to take risks, global mindedness, or task perseverance, which probably account for large portions of the variance in both study abroad access and college success (Luo & Jamieson-Drake, 2015). Future studies may append individual-level surveys measuring such factors to the kinds of institutional data collected here to create models with greater explanatory power.

Although CASSIE represents one of the largest and perhaps most diverse sets of institutions assembled in this research domain, the sample is nonetheless lacking in some respects. Despite vigorous efforts to bring more institutions on board, only three private institutions and no small liberal arts institutions contributed data. Our experience suggests that many such institutions lacked the institutional research infrastructure to conduct the complex data compilation that CASSIE required (Rubin & Mason, 2021). Although many such institutions are very active and innovative in international education, we are unable to generalize these findings to them. Furthermore, this study focuses on a “traditional” population of bachelor’s degree-seeking first-time first-year students. Future work should investigate similar dynamics for transfer students as well as those seeking associate degrees (see, for example, Raby et al., 2014).

Furthermore, having established a robust case for education abroad as a contributor to student success, it remains to be empirically established what kinds of international education experiences are most potent. Candidate variables to examine in this respect include education abroad destination, instructional leadership (home campus faculty or host nation faculty), and language of instruction (the student’s native language or target world language). Fortunately, data on program features such as these are encoded in the CASSIE data and will be the subject of future investigation. Finally, this work illuminates the associations between education abroad and success for students in the aggregate, but not how this relationship varies by different subgroups. Study abroad is less common among populations such as low-income students and those from minoritized race and ethnicity groups, groups that have historically been less well served by higher education. It is important to ascertain whether students from such traditionally underserved groups experience disparate effects and thus determine if study abroad can be an effective means for fostering completion across groups and promoting equity in student success. Nevertheless, this study moves the field forward and prepares for this future work by using a recent national sample and rigorous methods to establish a clear relationship between study abroad and positive outcomes valued by students and institutions alike.

Notes

Four institutions had zero bachelor’s seekers, three had zero study abroad students, and one institution had three study abroad students. In the process of constructing matches, it was determined that those three participants did not match with enough non-study abroad students to allow standard errors to be clustered at the institution level. This institution and the other seven were therefore dropped before the final matching analysis was conducted.

References

Abadie, A., & Imbens, G. W. (2002). Simple and bias-corrected matching estimators for average treatment effects (Technical Working Paper No. 0283). National Bureau of Economic Research. https://doi.org/10.3386/t0283

Adams, R., & Reinig, M. (2017). Student application, selection, and acceptance. In L. Chieffo & C. Spaeth (Eds.), The guide to successful short-term programs abroad (2nd ed., pp. 257–270). NAFSA.

Astin, A. W. (1984). Student involvement: A developmental theory for higher education. Journal of College Student Personnel, 25, 297–308.

Barclay Hamir, H. (2011). Go abroad and graduate on-time: Study abroad participation, degree completion, and time-to-degree (Unpublished doctoral dissertation). University of Nebraska-Lincoln.

Bettinger, E. P., & Baker, R. B. (2014). The effects of student coaching: An evaluation of a randomized experiment in student advising. Educational Evaluation and Policy Analysis, 36(1), 3–19.

Bell, H. L., Gibson, H. J., Tarrant, M. A., Perry, L. G., III, & Stoner, L. (2016). Transformational learning through study abroad: US students’ reflections on learning about sustainability in the South Pacific. Leisure Studies, 35(4), 389–405.

Burns, R., Rubin, D. L., & Tarrant, M. (2018). World language learning: The impact of study abroad on student engagement. In D. M. Vellarius (Ed.), Study abroad contexts for enhanced foreign language learning (pp. 1–22). IGI Global. https://doi.org/10.4018/978-1-5225-3814-1.ch001

Caliendo, M., & Kopeinig, S. (2008). Some practical guidance for the implementation of propensity score matching. Journal of Economic Surveys, 22(1), 31–72.

Carlson, J. S. (1991). Study abroad: The experience of American undergraduates in Western Europe and the United States. Council on International Educational Exchange.

Childress, L. K. (2009). Internationalization plans for higher education institutions. Journal of Studies in International Education, 13(3), 289–309.

Chin, C. S. (2013, October 17). Studying abroad can be an expensive waste of time. New York Times, Room for Debate. Retrieved October 19, 2019, from https://www.nytimes.com/roomfordebate/2013/10/17/should-more-americans-study-abroad/studying-abroad-can-be-an-expensive-waste-of-time

Coker, J. S., Heiser, E., & Taylor, L. (2018). Student outcomes associated with short-term and semester study abroad programs. Frontiers, 30(2), 92–105.

Dai, J. (2020). Understanding education abroad with advanced quantitative methodologies: Student profiles and academic outcomes (Theses and Dissertations--Education Science, 71). University of Kentucky. Retrieved December 15, 2020, from https://uknowledge.uky.edu/edsc_etds/71

DeSalvo, D., & Roe, R. M. (2017). Let the data make the case for study abroad. Paper presented at NAFSA Region VI Conference. Central Michigan University.

Engle, L., & Engle, J. (2003). Study abroad levels: Toward a classification of program types. Frontiers, 9(1), 1–20.

Goldoni, F. (2013). Students’ immersion experiences in study abroad. Foreign Language Annals, 46(3), 359–376.

Gonyea, R. M. (2008, November). The impact of study abroad on senior year engagement. Paper presented at the Annual Meeting of the Association for the Study of Higher Education, Jacksonville, FL.

Haas, B. W. (2018). The impact of study abroad on improved cultural awareness: A quantitative review. Intercultural Education, 29(5–6), 571–588.

Hadis, B. F. (2005). Why are they better students when they come back? Determinants of academic focusing gains in the study abroad experience. Frontiers, 11, 57–70.

Hamir, H. B. (2011). Go abroad and graduate on-time: Study abroad participation, degree completion, and time-to-degree (Unpublished doctoral dissertation). The University of Nebraska-Lincoln. Retrieved September 1, 2019, from https://digitalcommons.unl.edu/cgi/viewcontent.cgi?article=1065&context=cehsedaddiss

Haupt, J., Ogden, A. C., & Rubin, D. (2018). Toward a common research model: Leveraging education abroad participation to enhance college graduation rates. Journal of Studies in International Education, 22(2), 1–17. https://doi.org/10.1177/1028315318762519

Helms, R.A., Brajkovic, L., & Strothers, B. (2017). Mapping internationalization on U.S. campuses. American Council on Education. Retrieved August 10, 2021, from https://www.acenet.edu/Research-Insights/Pages/Internationalization/Mapping-Internationalization-on-U-S-Campuses.aspx

Institute of International Education. (2018). Data on U.S. students studying abroad outside of the United States. Open Doors Report on International Educational Exchange. Author. http://www.iie.org/opendoors. Retrieved September 2, 2019.

Institute of International Education. (2019). U.S. Study Abroad. https://www.iie.org/Research-and-Insights/Open-Doors/Data/US-Study-Abroad. Retrieved December 1, 2019.

Johnson, S. R., & Stage, F. K. (2018). Academic engagement and student success: Do high-impact practices mean higher graduation rates? The Journal of Higher Education, 89(5), 753–781.

Kuh, G. D. (2008). High-impact educational practices: What they are, who has access to them, and why they matter. Association of American Colleges and Universities.

Landon, A. C., Tarrant, M. A., Rubin, D. L., & Stoner, L. (2017). Beyond “Just Do It”: Fostering higher-order learning outcomes in short-term study abroad. AERA Open, 3(1), 233285841668604.

Lane, F., To, Y., Shelley, K., & Henson, R. (2012). An illustrative example of propensity score matching with education research. Career and Technical Education Research, 37(3), 187–212.

Luo, J., & Jamieson-Drake, D. (2015). Predictors of study abroad intent, participation, and college outcomes. Research in Higher Education, 56(1), 29–56.

Malmgren, J., & Galvin, J. (2008). Effects of study abroad participation on student graduation rates: A study of three incoming freshman cohorts at the University of Minnesota Twin Cities. NACADA Journal, 28(1), 29–42.

McAllister-Grande, B., & Whatley, M. (2020). International higher education research: The state of the field. NAFSA: Association of International Educators.

Metzger, C. A. (2006). Study abroad programming: A 21st century retention strategy? College Student Affairs Journal, 25(2), 164–175.

National Center for Education Statistics. (2018). Digest of education statistics, 2018 tables and figures. Retrieved October 19, 2019, from https://nces.ed.gov/programs/digest/d18/tables/dt18_326.10.asp

National Survey of Student Engagement. (2013). NSSE’s conceptual framework. Retrieved October 14, 2020, from https://nsse.indiana.edu/nsse/about-nsse/conceptual-framework/index.html

Niehoff, E., Petersdotter, L., & Freund, P. A. (2017). International sojourn experience and personality development: Selection and socialization effects of studying abroad and the Big Five. Personality and Individual Differences, 112, 55–61.

Oster, E. (2019). Unobservable selection and coefficient stability: Theory and evidence. Journal of Business & Economic Statistics, 37(2), 187–204.

Petzold, K., & Moog, P. (2018). What shapes the intention to study abroad? An experimental approach. Higher Education, 75(1), 35–54.

Raby, R. L., Rhodes, G. M., & Biscarra, A. (2014). Community college study abroad: Implications for student success. Community College Journal of Research and Practice, 38(2–3), 174–183.

Rosenbaum, P. R., & Rubin, D. B. (1985). Constructing a control group using multivariate matched sampling methods that incorporate the propensity score. The American Statistician, 39(1), 33–38.

Rourke, L., & Kanuka, H. (2012). Student engagement and study abroad. Canadian Journal of University Continuing Education, 38(1), 1–12.

Rubin, D. L., & Mason, L. (2021). Large-scale data collection to promote education abroad: Campus barriers and facilitators. NAFSA Issues and Trends. Retrieved August 15, 2021 from https://www.nafsa.org/professional-resources/research-and-trends/large-scale-data-collection-promote-education-abroad.

Rubin, D., Sutton, R. C., O’Rear, I., Rhodes, G., & Raby, R. L. (2014, Fall). Opening the doors of education abroad to enhance academic success for lower achieving students. IIE Networker, 38–41. http://www.nxtbook.com/naylor/IIEB/IIEB0214/index.php?startid=38

Salisbury, M. H., Paulsen, M. B., & Pascarella, E. T. (2011). Why do all the study abroad students look alike? Applying an integrated student choice model to explore differences in the factors that influence white and minority students’ intent to study abroad. Research in Higher Education, 52(2), 123–150.

Scheider, J., & Thornes, L. (2018). Education abroad participation influences graduation rates. Colorado State University Office of International Programs.

StataCorp. (2019). Stata statistical software: Release 16. StataCorp LLC.

Stuart, E. A. (2010). Matching methods for causal inference: A review and a look forward. Statistical Science, 25(1), 1–21.

Stuart, E. A., & Rubin, D. B. (2008). Best practices in quasi-experimental designs: Matching methods for causal inference. In J. W. Osborne (Ed.), Best practices in quantitative methods (pp. 155–176). Sage.

Sutton, R. C., & Rubin, D. L. (2004). The GLOSSARI project: Initial findings from a system-wide research initiative on study abroad learning outcomes. Frontiers: The Interdisciplinary Journal of Study Abroad, 10(1), 65–82.

Sutton, R. C., & Rubin, D. L. (2010, June). Documenting the academic impact of study abroad: Final report of the GLOSSARI project. In Annual Conference of NAFSA: Association of International Educators, Kansas City, MO.

Thomas, S. L., & McMahon, M. E. (1998). Americans abroad: Student characteristics, pre-departure qualifications and performance abroad. International Journal of Educational Management, 12(2), 57–64.

Tinto, V. (1975). Dropouts from higher education: A theoretical synthesis of the recent literature. Review of Educational Research, 45, 89–125.

Tinto, V. (1993). Leaving college: Rethinking the causes and cures of student attrition (2nd ed.). University of Chicago Press.

Tinto, V. (2005). Moving from theory to action. In A. Seidman (Ed.), College student retention: Formula for student success. Praeger.

Waibel, S., Petzold, K., & Rüger, H. (2018). Occupational status benefits of studying abroad and the role of occupational specificity: A propensity score matching approach. Social Science Research, 74, 45–61.

Whatley, M., & Clayton, A. B. (2020). Study abroad for low-income students: The relationship between need-based grant aid and access to education abroad. Journal of Student Financial Aid, 49(2), 1.

Xu, M., de Silva, C. R., Neufeldt, E., & Dane, J. H. (2013). The impact of study abroad on academic success: An analysis of first-time students entering Old Dominion University, Virginia, 2000–2004. Frontiers, 23, 90–103.

Acknowledgements

The project described in this report was funded by the US Department of Education, Office of Postsecondary Education, International and Foreign Language Education, International Research Studies program to Angela Bell, PI (award #P017A170020). Any opinions expressed are solely those of the authors.

Ethics declarations

Conflict of interest

Authors report no conflicts of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Bhatt, R., Bell, A., Rubin, D.L. et al. Education Abroad and College Completion. Res High Educ 63, 987–1014 (2022). https://doi.org/10.1007/s11162-022-09673-z

DOI: https://doi.org/10.1007/s11162-022-09673-z