Abstract

Purpose

Mixture item response theory (MixIRT) models can be used to uncover heterogeneity in responses to items that comprise patient-reported outcome measures (PROMs). This is accomplished by identifying relatively homogeneous latent subgroups in heterogeneous populations. Misspecification of the number of latent subgroups may affect model accuracy. This study evaluated the impact of specifying too many latent subgroups on the accuracy of MixIRT models.
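To make the idea of latent subgroups concrete, the following is a minimal Python sketch, not the authors' implementation, of how a two-class mixture two-parameter logistic (2PL) model assigns respondents to classes. Each class has its own item discriminations `a` and difficulties `b`, and a respondent's posterior class probability is proportional to the class proportion times the marginal likelihood of the response pattern in that class. It assumes dichotomous items and a standard normal latent trait within every class (PROM items are often polytomous); the function names are hypothetical.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss


def class_likelihoods(y, a, b, n_quad=41):
    """Marginal likelihood of each response pattern under each latent class.

    y    : (n_persons, n_items) array of 0/1 responses
    a, b : (n_classes, n_items) class-specific discriminations and difficulties
    Assumption: theta ~ N(0, 1) within every class.
    Returns an (n_persons, n_classes) array.
    """
    nodes, weights = hermegauss(n_quad)        # Gauss-Hermite rule for weight exp(-x^2 / 2)
    weights = weights / np.sqrt(2.0 * np.pi)   # rescale so the weights integrate against N(0, 1)
    lik = np.empty((y.shape[0], a.shape[0]))
    for c in range(a.shape[0]):
        # p[q, j]: probability of endorsing item j at quadrature node q in class c
        p = 1.0 / (1.0 + np.exp(-a[c] * (nodes[:, None] - b[c])))
        # Log-likelihood of each person's pattern at each node: (n_persons, n_quad)
        loglik = y @ np.log(p).T + (1 - y) @ np.log1p(-p).T
        # Integrate over theta by summing weighted node likelihoods
        lik[:, c] = np.exp(loglik) @ weights
    return lik


def posterior_class_probs(y, a, b, class_props, n_quad=41):
    """Posterior probability that each person belongs to each latent class (Bayes' rule)."""
    joint = class_likelihoods(y, a, b, n_quad) * np.asarray(class_props)
    return joint / joint.sum(axis=1, keepdims=True)
```

For example, if items 3 and 4 are harder in class 2 than in class 1 and all other parameters are equal, a respondent who endorses items 1 and 2 but not items 3 and 4 receives a posterior probability above 0.5 for class 2 when the classes are equally large.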

Methods

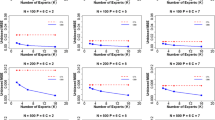

Monte Carlo methods were used to assess MixIRT accuracy. Simulation conditions included the number of items and latent classes, class size ratio, sample size, number of non-invariant items, and magnitude of between-class differences in item parameters. Bias and mean square error (MSE) in item parameters and accuracy of latent class recovery were assessed.
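A single simulation condition can be sketched as follows, under assumptions the abstract does not state: dichotomous 2PL items, two classes, N(0, 1) traits in both classes, and non-invariance introduced by shifting the difficulty of the first `n_noninvariant` items by `delta` in class 2. `bias_and_mse` then summarizes estimates across replications as bias = mean(estimate - true) and MSE = mean((estimate - true)^2). The model-fitting step between generation and summary is omitted, and all function names are hypothetical.

```python
import numpy as np


def make_difficulties(b_ref, n_noninvariant, delta):
    """Two-class difficulties (assumed design): class 2 shifts the first
    n_noninvariant items by delta; the remaining items are invariant."""
    b_ref = np.asarray(b_ref, dtype=float)
    b_other = b_ref.copy()
    b_other[:n_noninvariant] += delta
    return np.vstack([b_ref, b_other])


def simulate_mixture_2pl(n, a, b, class_props, rng):
    """Draw 0/1 responses from a mixture 2PL model.

    a, b : (n_classes, n_items) class-specific item parameters
    Assumption: theta ~ N(0, 1) within each class.
    Returns (responses, true_class_labels).
    """
    labels = rng.choice(len(class_props), size=n, p=class_props)
    theta = rng.standard_normal(n)
    # Each simulee uses the item parameters of their own class: (n, n_items)
    p = 1.0 / (1.0 + np.exp(-a[labels] * (theta[:, None] - b[labels])))
    y = (rng.random(p.shape) < p).astype(int)
    return y, labels


def bias_and_mse(estimates, true_values):
    """Bias and MSE per parameter across Monte Carlo replications.

    estimates : (n_replications, n_params); true_values : (n_params,)
    """
    err = np.asarray(estimates, dtype=float) - np.asarray(true_values, dtype=float)
    return err.mean(axis=0), (err ** 2).mean(axis=0)
```

Because class labels in a mixture model are arbitrary, estimated class-specific parameters would need to be aligned with the generating classes (label switching) before bias and MSE are computed.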

Results

When the number of latent classes was correctly specified, the average bias and MSE in model parameters decreased as the number of items and latent classes increased, but specifying too many latent classes resulted in a modest decrease (i.e., < 10%) in the accuracy of latent class recovery.
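Latent class recovery can be scored by cross-tabulating true and estimated memberships. The sketch below is one plausible scoring rule, assumed rather than taken from the study. When the number of estimated classes does not exceed the true number, it searches all one-to-one matchings to undo label switching; when too many classes are extracted, each estimated class is credited to the true class it overlaps most. Labels are assumed to be integers 0, ..., K-1, and the function names are hypothetical.

```python
import itertools

import numpy as np


def contingency(true_labels, est_labels):
    """Counts of persons by (estimated class, true class)."""
    true_labels = np.asarray(true_labels)
    est_labels = np.asarray(est_labels)
    table = np.zeros((int(est_labels.max()) + 1, int(true_labels.max()) + 1), dtype=int)
    np.add.at(table, (est_labels, true_labels), 1)
    return table


def recovery_accuracy(true_labels, est_labels):
    """Proportion of persons assigned to the correct class (assumed scoring rule)."""
    table = contingency(true_labels, est_labels)
    k_est, k_true = table.shape
    if k_est <= k_true:
        # One-to-one: try every assignment of estimated classes to distinct true classes
        best = max(
            sum(table[e, t] for e, t in enumerate(perm))
            for perm in itertools.permutations(range(k_true), k_est)
        )
    else:
        # Overspecified: map each estimated class to the true class it overlaps most
        best = table.max(axis=1).sum()
    return best / table.sum()
```

The permutation search is cheap for the small numbers of classes typical of MixIRT applications; with many classes, an assignment solver (the Hungarian algorithm) would be the usual replacement.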

Conclusion

The accuracy of MixIRT models is largely influenced by overspecification of the number of latent classes. An appropriate choice of goodness-of-fit measures, study design considerations, and an a priori contextual understanding of the degree of sample heterogeneity can guide model selection.
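Goodness-of-fit indices used to choose the number of classes trade the log-likelihood (LL) against model size p: AIC = -2LL + 2p, BIC = -2LL + p ln(n), and sample-size-adjusted BIC (SABIC) = -2LL + p ln((n + 2)/24). The sketch below computes them and picks the class count that minimizes one index. It assumes a mixture 2PL whose free parameters are one discrimination and one difficulty per item per class plus K - 1 mixing proportions; the log-likelihoods in the demo are made-up numbers, not study results.

```python
import math


def n_params_mixture_2pl(n_items, n_classes):
    """Free parameters, assuming theta ~ N(0, 1) is fixed in every class:
    a and b per item per class, plus K - 1 mixing proportions."""
    return 2 * n_items * n_classes + (n_classes - 1)


def information_criteria(loglik, n_params, n):
    """AIC, BIC, and sample-size-adjusted BIC; smaller values indicate better fit."""
    return {
        "AIC": -2 * loglik + 2 * n_params,
        "BIC": -2 * loglik + n_params * math.log(n),
        "SABIC": -2 * loglik + n_params * math.log((n + 2) / 24),
    }


def pick_n_classes(fits, n, criterion="BIC"):
    """fits maps number of classes -> (loglik, n_params); returns the minimizing K."""
    return min(fits, key=lambda k: information_criteria(*fits[k], n)[criterion])


if __name__ == "__main__":
    # Hypothetical log-likelihoods for 1-3 classes, 10 items, n = 1000
    fits = {k: (ll, n_params_mixture_2pl(10, k))
            for k, ll in [(1, -5200.0), (2, -5100.0), (3, -5070.0)]}
    print(pick_n_classes(fits, 1000, "AIC"))  # 3
    print(pick_n_classes(fits, 1000, "BIC"))  # 2
```

Because ln(n) exceeds 2 once n is 8 or more, BIC penalizes each additional parameter more heavily than AIC, which makes it the more conservative of the two against extracting extra classes.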

Acknowledgements

Funding for this study was provided by the Canadian Institutes of Health Research (grant # MOP-142404). LML is supported by a Tier 1 Canada Research Chair in Methods for Electronic Health Data Quality. RS is supported by a Tier 2 Canada Research Chair in Patient-Reported Outcomes. BDZ is supported by the Tier 1 Canada Research Chair in Psychometrics and Measurement and the UBC-Paragon Research Initiative in support of his Paragon UBC Professor of Psychometrics and Measurement. The authors gratefully acknowledge the contributions of the University of Calgary Research Computing Services towards this study.

Funding

This work was supported by the Canadian Institutes of Health Research (grant no. MOP-142404).

Author information

Contributions

All authors contributed to the study conception and design of the simulation study. DS and TS implemented the simulation study and summarized the results. All authors were involved in the critical revision of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

Dr. Sajobi has received consulting fees from Circle Neurovascular Imaging Inc. All other authors have no relevant financial or non-financial interests to disclose.

Supplementary Information

Electronic supplementary material is available with the online version of this article.

About this article

Cite this article

Sajobi, T.T., Lix, L.M., Russell, L. et al. Accuracy of mixture item response theory models for identifying sample heterogeneity in patient-reported outcomes: a simulation study. Qual Life Res 31, 3423–3432 (2022). https://doi.org/10.1007/s11136-022-03169-0

DOI: https://doi.org/10.1007/s11136-022-03169-0