Abstract

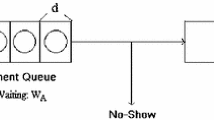

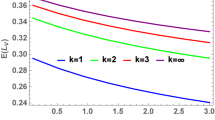

We consider a single-server queueing system where a finite number of customers arrive over time to receive service. Arrivals are driven by appointments, with a scheduled appointment time associated with each customer. However, customers are not necessarily punctual and may arrive either earlier or later than their scheduled appointment times or may not show up at all. Arrival times relative to scheduled appointments are random. Customers are not homogeneous in their punctuality and show-up behavior. The time between consecutive appointments is allowed to vary from customer to customer. Moreover, service times are assumed to be random with a \( \gamma \)-Cox distribution, a class of phase-type distributions known to be dense in the field of positive distributions. We develop both exact and approximate approaches for characterizing the distribution of the number of customers seen by each arrival. We show how this can be used to obtain the distribution of waiting time for each customer. We prove that the approximation provides an upper bound for the expected customer waiting time when non-punctuality is uniformly distributed. We also examine the impact of non-punctuality on system performance. In particular, we prove that non-punctuality deteriorates waiting time performance regardless of the distribution of non-punctuality. In addition, we illustrate how our approach can be used to support individualized appointment scheduling.

Notes

With the advent of social distancing, it is likely that appointment-driven arrivals will become even more prevalent.

We use the term ‘online’ to refer to the realistic setting where customers are assigned an appointment time at the time they request one, taking into account previous appointments and the characteristics of the associated customers.

This is an important feature in applications, such as health care, where data may be available, or can be collected, on the punctuality of different customers and where punctuality of different customers can vary significantly.

Typical formulations from the appointment scheduling literature adopt the objective of minimizing the sum of the cost of expected waiting time for customers and the cost of expected overtime for the server.

Numerical results are generated using Wolfram Mathematica 12.1.1 on macOS 10.15.4 with an 18-core Intel Xeon W processor and 64 GB of RAM.

References

Ahmadi-Javid, A., Jalali, Z., Klassen, K.: Outpatient appointment systems in healthcare: a review of optimization studies. Eur. J. Oper. Res. 258(1), 3–34 (2017)

Cayirli, T., Veral, E.: Outpatient scheduling in health care: a review of literature. Prod. Oper. Manag. 12, 519–549 (2003)

Cayirli, T., Veral, E.: Outpatient scheduling in health care: a review of literature. Prod. Oper. Manag. 12(4), 519–549 (2003)

Cayirli, T., Veral, E., Rosen, H.: Designing appointment scheduling systems for ambulatory care services. Health Care Manag. Sci. 9(1), 47–58 (2006)

Cheong, S., Bitmead, R., Fontanesi, J.: Modeling scheduled patient punctuality in an infusion center. Lect. Notes Manag. Sci. 5, 46–56 (2013)

Chen, D., Wang, R., Yan, Z., Benjaafar, S.: Appointment scheduling under a service level constraint. Working paper, The Chinese University of Hong Kong, Shenzhen (2021)

Deceuninck, M., Fiems, D., De Vuyst, S.: Outpatient scheduling with unpunctual patients and no-shows. Eur. J. Oper. Res. 265(1), 195–207 (2018)

Feldman, J., Liu, N., Topaloglu, H., Ziya, S.: Appointment scheduling under patient preference and no-show behavior. Oper. Res. 62(4), 794–811 (2014)

Green, L.V., Savin, S.: Reducing delays for medical appointments: a queueing approach. Oper. Res. 56(6), 1526–1538 (2008)

Gupta, D., Denton, B.: Appointment scheduling in health care: challenges and opportunities. IIE Trans. 40(9), 800–819 (2008)

Hassin, R., Mendel, S.: Scheduling arrivals to queues: a single-server model with no-shows. Manag. Sci. 54(3), 565–572 (2008)

Jansson, B.: Choosing a good appointment system—a study of queues of the type D/M/1. Oper. Res. 14(2), 292–312 (1966)

Jiang, B., Tang, J., Yan, C.: A stochastic programming model for outpatient appointment scheduling considering unpunctuality. Omega 82, 70–82 (2019)

Jiang, R., Shen, S., Zhang, Y.: Integer programming approaches for appointment scheduling with random no-shows and service durations. Oper. Res. 65(6), 1638–1656 (2017)

Jouini, O., Benjaafar, S.: Appointment scheduling with non-punctual arrivals. IFAC Proc. Vol. 42(4), 235–239 (2009)

Kaandorp, G., Koole, G.: Optimal outpatient appointment scheduling. Health Care Manag. Sci. 10(3), 217–229 (2007)

Kim, S.-H., Whitt, W., Cha, W.C.: A data-driven model of an appointment-generated arrival process at an outpatient clinic. INFORMS J. Comput. 30(1), 181–199 (2018)

Klassen, K., Yoogalingam, R.: Appointment system design with interruptions and physician lateness. Int. J. Oper. Prod. Manag. 33(3–4), 394–414 (2013)

Kuiper, A., Kemper, B., Mandjes, M.: A computational approach to optimized appointment scheduling. Queueing Syst. 79(1), 5–36 (2015)

Kuiper, A., Mandjes, M., de Mast, J.: Optimal stationary appointment schedules. Oper. Res. Lett. 45(6), 549–555 (2017)

LaGanga, L.R., Lawrence, S.R.: Appointment overbooking in health care clinics to improve patient service and clinic performance. Prod. Oper. Manag. 21(5), 874–888 (2012)

Lau, H.-S., Lau, A.H.-L.: A fast procedure for computing the total system cost of an appointment schedule for medical and kindred facilities. IIE Trans. 32(9), 833–839 (2000)

Legros, B., Jouini, O., Koole, G.: A uniformization approach for the dynamic control of queueing systems with abandonments. Oper. Res. 66(1), 200–209 (2018)

Luo, J., Kulkarni, V.G., Ziya, S.: Appointment scheduling under patient no-shows and service interruptions. Manuf. Serv. Oper. Manag. 14(4), 670–684 (2012)

Mak, H.-Y., Rong, Y., Zhang, J.: Appointment scheduling with limited distributional information. Manag. Sci. 61(2), 316–334 (2015)

Marshall, A.W., Olkin, I., Arnold, B.C.: Inequalities: Theory of Majorization and Its Applications, vol. 143. Springer, Berlin (1979)

Mercer, A.: Queues with scheduled arrivals: a correction, simplification and extension. J. R. Stat. Soc.: Ser. B (Methodol.) 35(1), 104–116 (1973)

Millhiser, W.P., Valenti, B.C.: Delay distributions in appointment systems with generally and non-identically distributed service times and no-shows (2012). Available on SSRN. http://ssrn.com/abstract=2045074

Millhiser, W.P., Veral, E.A.: Designing appointment system templates with operational performance targets. IIE Trans. Healthc. Syst. Eng. 5(3), 125–146 (2015)

Millhiser, W.P., Veral, E.A., Valenti, B.C.: Assessing appointment systems’ operational performance with policy targets. IIE Trans. Healthc. Syst. Eng. 2(4), 274–289 (2012)

Mitrinovic, D.S., Vasic, P.M.: Analytic Inequalities, vol. 61. Springer, Berlin (1970)

Mohammadi, I., Wu, H., Turkcan, A., Toscos, T., Doebbeling, B.N.: Data analytics and modeling for appointment no-show in community health centers. J. Primary Care Community Health 9, 2150132718811692 (2018)

Parlar, M., Sharafali, M.: Dynamic allocation of airline check-in counters: a queueing optimization approach. Manag. Sci. 54(8), 1410–1424 (2008)

Robinson, L.W., Chen, R.R.: A comparison of traditional and open-access policies for appointment scheduling. Manuf. Serv. Oper. Manag. 12(2), 330–346 (2010)

Samorani, M., Ganguly, S.: Optimal sequencing of unpunctual patients in high-service-level clinics. Prod. Oper. Manag. 25(2), 330–346 (2016)

Soriano, A.: Comparison of two scheduling systems. Oper. Res. 14(3), 388–397 (1966)

Wang, P.: Optimally scheduling n customer arrival times for a single-server system. Comput. Oper. Res. 24(8), 703–716 (1997)

Wang, P.: Sequencing and scheduling n customers for a stochastic server. Eur. J. Oper. Res. 119(3), 729–738 (1999)

Wang, R., Jouini, O., Benjaafar, S.: Service systems with finite and heterogeneous customer arrivals. Manuf. Serv. Oper. Manag. 16(3), 365–380 (2014)

Wang, S., Liu, N., Wan, G.: Managing appointment-based services in the presence of walk-in customers. Manag. Sci. 66(2), 667–686 (2020)

Yue, F., Hu, Q.: Minimizing total cost in outpatient scheduling with unpunctual arrivals. In: International Conference on Service Systems and Service Management (2016)

Zacharias, C., Armony, M.: Joint panel sizing and appointment scheduling in outpatient care. Manag. Sci. 63(11), 3978–3997 (2017)

Zacharias, C., Pinedo, M.: Managing customer arrivals in service systems with multiple identical servers. Manuf. Serv. Oper. Manag. 19(4), 639–656 (2017)

Zacharias, C., Yunes, T.: Multimodularity in the stochastic appointment scheduling problem with discrete arrival epochs. Manag. Sci. 66(2), 744–763 (2020)

Zeng, B., Turkcan, A., Lin, J., Lawley, M.: Clinic scheduling models with overbooking for patients with heterogeneous no-show probabilities. Ann. Oper. Res. 178(1), 121–144 (2010)

Zhu, H., Chen, Y., Leung, E., Liu, X.: Outpatient appointment scheduling with unpunctual patients. Int. J. Prod. Res. 56(5), 1982–2002 (2018)

Acknowledgements

The first and last authors were funded by NPRPC Grant No. NPRP11C-1229-170007 from the Qatar National Research Fund (a member of The Qatar Foundation). The statements made herein are solely the responsibility of the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Triangular distribution for punctuality

Consider the case where customer non-punctuality is homogeneous and follows a symmetric triangular distribution. For \(1 \le n \le M\), we have

Similarly to the uniform distribution case, we calculate \(p_{2,1}\) to complete the initialization step, since we have \(f_1(t)\) from the definition of \(f_n(t)\) and \(p_{2,0} = 1 - p_{2,1}\). Here, we have \(\displaystyle \text{ Pr }\{D_1<d_1\}= \text{ Pr }\{D_1\ge d_1\}= \frac{1}{2}\). When customer 1 arrives before \(d_1\), we have

To derive \(p_{2,1|D_1>d_1}\), we first compute \(h_{2,1|D_1 \ge d_1}(x)\), the pdf of the random variable \(D_2-D_1 | D_1 \ge d_1\). Using (7), we can compute \(h_{1|D_1 \ge d_1}(x)\) on \([d_2-d_1-\tau _2^l-\tau _1^u, d_2-d_1+\tau _2^u]\) as

where \(C_\mathrm{min} = \min \{\tau , d_2-d_1-x\}\) and \(D_\mathrm{min} = \min \{\tau , d_1+\tau +x - d_2\}\). By substituting \(h_{1|D_1 \ge d_1}(x)\) in Eq. (4) by (41), we obtain

With (40) and (42), we complete the initialization step by computing \(p_{2,1}\) as

Appendix B: Details for the analysis of \(\gamma \)-Cox-distributed service times

The moments of the waiting time can be obtained similarly to the exponential service time case. We have

for \(2\le n \le M\), where \(W_{n,r}\) is the random variable denoting the waiting time in the queue of customer n, given that customer n shows up and finds that r phases of service remain to be completed in the system (i.e., the system state \(R_n\) is r). Since service times follow independent \(\gamma \)-Cox distributions with m phases, the completion time of each phase is independently and exponentially distributed with rate \(\gamma \). Therefore, \(W_{n,r}\) has an Erlang distribution with r phases and rate \(\gamma \) per phase. Using Eq. (43) and knowing that \(\displaystyle {{\mathbb {E}}[W_{n,r}]= \frac{r}{\gamma }}\) and \(\displaystyle {{\mathbb {E}}[W_{n,r}^2]=\frac{r(r+1)}{\gamma ^2}}\), we obtain

for \(2\le n \le M\). Moreover, we have

for \(t\ge 0\). Consequently,

The case \(n=1\) is treated separately. The moments and distribution of the first customer’s waiting time can be obtained exactly the same as for the exponential case, using Eqs. (19)–(21).
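As a minimal sketch of the Erlang argument in this appendix, the following Python snippet evaluates the conditional moments \(r/\gamma \) and \(r(r+1)/\gamma ^2\) and the Erlang cdf, and then mixes them over a distribution of the state \(R_n\). The function names and the input `phase_probs` (a list whose r-th entry plays the role of \(\text{ Pr }\{R_n = r\}\), conditional on customer n showing up) are assumptions made for illustration; the exact weighting used in Eqs. (43) to (46) is not reproduced here.

```python
import math


def erlang_moments(r, gamma):
    """First and second moments of an Erlang waiting time with r phases of rate gamma."""
    return r / gamma, r * (r + 1) / gamma ** 2


def erlang_cdf(t, r, gamma):
    """Pr{W_{n,r} <= t}; r = 0 means the customer does not wait at all."""
    if t < 0:
        return 0.0
    if r == 0:
        return 1.0
    tail = sum(math.exp(-gamma * t) * (gamma * t) ** i / math.factorial(i)
               for i in range(r))
    return 1.0 - tail


def mixture_moments(phase_probs, gamma):
    """Unconditional moments, assuming phase_probs[r] = Pr{R_n = r} (hypothetical input)."""
    m1 = sum(p * erlang_moments(r, gamma)[0] for r, p in enumerate(phase_probs))
    m2 = sum(p * erlang_moments(r, gamma)[1] for r, p in enumerate(phase_probs))
    return m1, m2


def mixture_cdf(t, phase_probs, gamma):
    """Waiting-time cdf as a mixture of Erlang cdfs over the assumed state distribution."""
    return sum(p * erlang_cdf(t, r, gamma) for r, p in enumerate(phase_probs))


# Illustrative values only: half the time no phases remain, half the time two remain.
example_probs = [0.5, 0.0, 0.5]
example_mean, example_second = mixture_moments(example_probs, gamma=1.0)
```

The mixture form is the natural consequence of conditioning on \(R_n\); only the state probabilities themselves require the recursive machinery of the main text.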

Appendix C: Proof of Propositions

1.1 Proof of Proposition 1

We first state several definitions and lemmas that will be used in the proof. We denote by \(S_n\) the random variable of the service time of customer n, and by \(A_n\) the random variable for the arrival time of customer n. For a given schedule \(\delta = (d_1, d_2, \ldots , d_M)\), we have \(A_n = D_n\) if customer n is not punctual, and \(A_n = {\mathbb {E}}[D_n]\) if customer n is punctual.

We use \(\gamma _n \in \{0, 1\}\) to denote the type of punctuality of customer n, where \(\gamma _n = 0\) if customer n arrives with non-punctuality at time \(D_n \in [d_n - \tau _n^l, d_n + \tau _n^u]\), and \(\gamma _n = 1\) if customer n arrives with punctuality at time \({\mathbb {E}}[D_n]\). Let us denote by \(\Gamma = (\gamma _1, \ldots , \gamma _M)\) the customers’ punctuality profile and use \(A_n(\Gamma )\), \(W_n(\Gamma )\), and \(C_n(\Gamma )\) to represent the arrival, waiting, and completion times of customer n under the profile \(\Gamma \), where \(A_n(\Gamma ) = D_n\) if \(\gamma _n = 0\), and \(A_n(\Gamma ) = {\mathbb {E}}[D_n]\) if \(\gamma _n = 1\). For \(0 \le k \le M\), let \(\Gamma _k\) denote the profile where the first k customers are punctual and the last \(M-k\) customers are non-punctual.

For \(a_k, s_k \in {\mathbb {R}}\), let \(\mathbf {a}_k = (a_1, \ldots , a_k)\), and \(\mathbf {s}_k= (s_1, \ldots , s_k)\). For \(k = 0, \ldots , M\), we define the function \(h_k(\mathbf {a}_k,\mathbf {s}_k)\) \(:{\mathbb {R}}^{2k} \rightarrow {\mathbb {R}}\) as follows:

Proposition 4

For \(k \in \{1, \ldots , M\}\), \(h_n(\mathbf {a}_n,\mathbf {s}_n)\) is convex with respect to \(a_k\) for \(k \le n \le M\).

Proof

First, note that the function \(h_{k-1}(\mathbf {a}_{k-1},\mathbf {s}_{k-1})\) only relies on the first \(k-1\) elements of \(\mathbf {a}_n\) and \(\mathbf {s}_n\), and is constant with respect to \(a_l\) and \(s_l\), for \(l \ge k\). From standard results, we know that \(\max (C,f(x))\) is convex in x if f(x) is convex in x and C is constant with respect to x. It is then easy to see that \(h_k(\mathbf {a}_k,\mathbf {s}_k) = \max (h_{k-1}(\mathbf {a}_{k-1},\mathbf {s}_{k-1}), a_k) + s_k\) is convex in \(a_k\). Let us consider \(n > k\) and assume that \(h_{n-1}(\mathbf {a}_{n-1},\mathbf {s}_{n-1})\) is convex in \(a_k\). Since \(a_n\) is constant with respect to \(a_k\), we can again see that \(h_{n}(\mathbf {a}_{n},\mathbf {s}_{n}) = \max (h_{n-1}(\mathbf {a}_{n-1},\mathbf {s}_{n-1}), a_{n}) + s_{n}\) is convex in \(a_k\). Therefore, by induction, we have shown that \(h_n(\mathbf {a}_{n},\mathbf {s}_{n})\) is convex in \(a_k\) for all \(n \ge k\), which finishes the proof of the proposition. \(\square \)
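The recursion \(h_k = \max (h_{k-1}, a_k) + s_k\) used in this proof can be sketched numerically as follows. The starting value `h0` is an assumption (the definition of \(h_0\) is not reproduced in this text), and the midpoint test is only a spot check of convexity in a single arrival time, not a proof.

```python
import random


def completion_time(arrivals, services, h0=0.0):
    """Apply h_k = max(h_{k-1}, a_k) + s_k, starting from an assumed constant h0."""
    h = h0
    for a, s in zip(arrivals, services):
        h = max(h, a) + s
    return h


def is_midpoint_convex_in(k, arrivals, services, lo, hi, tol=1e-9):
    """Check h(mid) <= (h(lo) + h(hi)) / 2 when only a_k (1-indexed) is varied."""
    def h_at(x):
        a = list(arrivals)
        a[k - 1] = x
        return completion_time(a, services)
    return h_at((lo + hi) / 2) <= (h_at(lo) + h_at(hi)) / 2 + tol


# Illustrative spot check on randomly drawn (hypothetical) arrival and service times.
rng = random.Random(1)
checks = []
for _ in range(200):
    n = 5
    arrivals = sorted(rng.uniform(0, 10) for _ in range(n))
    services = [rng.expovariate(1.0) for _ in range(n)]
    k = rng.randint(1, n)
    checks.append(is_midpoint_convex_in(k, arrivals, services, 0.0, 10.0))
all_convex = all(checks)
```

Because each step composes a convex, non-decreasing operation (a maximum followed by adding a constant), the check is expected to succeed for every k, which is what Proposition 4 asserts.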

For \(1 \le k \le n \le M\), we define the functions \(g_{n,k}\) and \({\hat{g}}_{n,k}: {\mathbb {R}}^{2n-2} \rightarrow {\mathbb {R}}\) as follows: If \(n=k\),

Otherwise, for \(n>k\),

By applying Jensen’s inequality and Proposition 4, we may write

uniformly on \({\mathbb {R}}^{2n-2}\), for \(1 \le k \le n \le M\).

Next, we show that for a fixed schedule \(\delta = (d_1, d_2, \ldots , d_M)\), the expected waiting time of all customers decreases as we have more punctual customers at the beginning of the schedule. In particular, we want to show

for every \(k = 1,2, \ldots , M\). Chaining these inequalities over k shows that each customer’s expected waiting time when all customers are non-punctual (i.e., \({\mathbb {E}}[W_n(\Gamma _0)]\)) is at least as large as when all customers are punctual (i.e., \({\mathbb {E}}[W_n(\Gamma _M)]\)).

Let \(C_0 = d_1\). Therefore, the waiting time and completion time of each customer can be characterized by the following equations:

Let \(\mathbf {A}_n (\Gamma ) = (A_1 (\Gamma ), A_2 (\Gamma ), \ldots , A_n (\Gamma ))\) and \(\mathbf {S}_n = (S_1, S_2, \ldots , S_n)\) denote, respectively, the random vectors of the arrival times and service times of the first n customers with the punctuality profile \(\Gamma \). It follows that

Consider a fixed \(k \in \{1, \ldots , M\}\). The first \(k-1\) customers are punctual under both profiles \(\Gamma _{k-1}\) and \(\Gamma _{k}\). Hence, the expected waiting times of customers \(n < k\) are the same under both profiles (i.e., \({\mathbb {E}}[W_n(\Gamma _{k-1})] = {\mathbb {E}}[W_n(\Gamma _k)]\) for \(n < k\)).

For customer \(n = k\), there are two possible cases. If \(n=k=1\), we have \( {\mathbb {E}}[W_1(\Gamma _{0})] = {\mathbb {E}}[(C_{0}(\Gamma _{0}) - A_1(\Gamma _{0}))^+] = {\mathbb {E}}[(d_1 - D_1)^+]\ge (d_1 - {\mathbb {E}}[D_1])^+= {\mathbb {E}}[(d_1 - {\mathbb {E}}[D_1])^+] = {\mathbb {E}}[(C_{0}(\Gamma _{1}) - A_1(\Gamma _{1}))^+] = {\mathbb {E}}[W_1(\Gamma _{1})]\), where the inequality is obtained by applying Jensen’s inequality. Otherwise, for \(n=k>1\), we have

The inequality is due to (47) and also the fact that the functions \(g_{n,k}\) and \({\hat{g}}_{n,k}\) are integrable given the finite range of customer’s non-punctuality. Similarly, for customer \(n>k\), we obtain

In conclusion, we have proved that (47) holds and that \({\mathbb {E}}[W_n(\Gamma _0)] \ge {\mathbb {E}}[W_n(\Gamma _M)]\), which finishes the proof of the proposition. \(\square \)
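As a worked illustration of the Jensen step for the first customer, consider the special case (assumed here only for the example) where \(D_1\) is uniformly distributed on \([d_1-\tau _1^l, d_1+\tau _1^u]\). Both sides of \({\mathbb {E}}[(d_1 - D_1)^+]\ge (d_1 - {\mathbb {E}}[D_1])^+\) can then be computed in closed form:

```latex
\begin{align*}
{\mathbb{E}}\big[(d_1 - D_1)^+\big]
  &= \int_{d_1-\tau_1^l}^{d_1} \frac{d_1 - x}{\tau_1^l+\tau_1^u}\,dx
   = \frac{(\tau_1^l)^2}{2(\tau_1^l+\tau_1^u)}, \\
\big(d_1 - {\mathbb{E}}[D_1]\big)^+
  &= \Big(\frac{\tau_1^l-\tau_1^u}{2}\Big)^+ .
\end{align*}
% If \tau_1^l \le \tau_1^u the right-hand side is 0 and the inequality is immediate.
% If \tau_1^l > \tau_1^u, it reduces to
%   (\tau_1^l)^2 \ge (\tau_1^l-\tau_1^u)(\tau_1^l+\tau_1^u) = (\tau_1^l)^2-(\tau_1^u)^2,
% which always holds. For \tau_1^l=\tau_1^u=\tau the two sides are \tau/4 and 0.
```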

1.2 Proof of Proposition 2

Proof

Consider customer n with the two possible appointment times \(\hat{d}_n\) and \(d_n\), such that \(\hat{d}_n > d_n\). For these two appointment times, the random variables \({\widehat{D}}_n\) and \(D_n\) correspond to the arrival times, \({\widehat{V}}_n=C_{n-1}-{\widehat{D}}_n\) and \(V_n=C_{n-1}-D_n\) correspond to the difference between the completion time of customer \(n-1\) and the arrival time of customer n, and \({\widehat{W}}_n=\max (0,{\widehat{V}}_n)\) and \(W_n=\max (0,V_n)\) correspond to the waiting times, respectively. In what follows, we prove that \(W_n\) FOS dominates \({\widehat{W}}_n\).

For \(t \in [\hat{d}_n-\tau _n^l,\hat{d}_n+\tau _n^u]\), we have \(f_{{\widehat{D}}_n}(t)=f_{D_n}(t-(\hat{d}_n-d_n))\). Since \(\hat{d}_n - d_n > 0\), \({\widehat{D}}_n\) is stochastically larger than \(D_n\). In other words, \({\widehat{D}}_n\) FOS dominates \(D_n\). Thus, \(-D_n\) FOS dominates \(-{\widehat{D}}_n\), which implies, given that \(C_{n-1}\) is independent of both \({\widehat{D}}_n\) and \(D_n\), that \(C_{n-1}-D_n\) FOS dominates \(C_{n-1}-{\widehat{D}}_n\), i.e., \(V_n\) FOS dominates \({\widehat{V}}_n\). Since \(\max (\cdot ,0)\) is a non-decreasing function, we have that \(W_n\) FOS dominates \({\widehat{W}}_n\), which completes the proof of the proposition. \(\square \)
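The dominance of \(W_n\) over \({\widehat{W}}_n\) can be illustrated with a small coupling experiment: the same draws of \(C_{n-1}\) and of the deviation from the appointment time are reused for both appointment times, so the later appointment can only shorten each sampled wait. The distributions and numerical values below are hypothetical choices for the illustration, not taken from the paper.

```python
import random


def waiting_times(prev_completions, appointment, deviations):
    """W = max(0, C_{n-1} - D_n), with D_n = appointment + deviation."""
    return [max(0.0, c - (appointment + e))
            for c, e in zip(prev_completions, deviations)]


def empirical_cdf(sample, t):
    """Fraction of sampled waiting times that are at most t."""
    return sum(1 for w in sample if w <= t) / len(sample)


rng = random.Random(0)
size = 2000
completions = [1.0 + rng.expovariate(1.0) for _ in range(size)]  # hypothetical C_{n-1}
deviations = [rng.uniform(-0.5, 0.5) for _ in range(size)]       # hypothetical non-punctuality

w = waiting_times(completions, 1.0, deviations)      # appointment d_n = 1.0
w_hat = waiting_times(completions, 1.5, deviations)  # later appointment, 1.5 > 1.0

# Under the coupling, the later appointment never yields a longer wait.
pointwise_smaller = all(a <= b for a, b in zip(w_hat, w))
```

Pointwise ordering under a coupling implies that the cdf of \({\widehat{W}}_n\) lies on or above the cdf of \(W_n\) at every t, which is the first-order dominance statement of the proposition.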

1.3 Proof of Proposition 3

When arrival times are uniformly distributed, we would like to prove that the expected waiting time of customer n computed using the exact method, \({\mathbb {E}}[W_n]\), is bounded above by the one computed using the approximate method, \({\mathbb {E}}[{{\widetilde{W}}}_n]\). In other words, we want to show that

with \(\displaystyle f_n (x) = \frac{1}{ \tau _n^l +\tau _n^u}\) on \([d_n-\tau _n^l, d_n+\tau _n^u]\) and \(f_n (x) =0\) otherwise.

Moreover, we want to prove

for \(1 \le n \le M\), where \({\tilde{p}}_{n,i\ge l} = \sum \nolimits _{i=l}^{n-1} {\tilde{p}}_{n,i}\) and \(p_{n,i\ge l} = \sum \nolimits _{i=l}^{n-1} p_{n,i}\).

First, we state several definitions and results that will be used throughout the proof. For any \(x=(x_1,\ldots ,x_n) \in {\mathbb {R}}^n\), let \(x_{[1]} \ge \cdots \ge x_{[n]}\) denote the components of x in decreasing order, and let \(x_{\downarrow } = (x_{[1]}, \ldots , x_{[n]})\) denote the decreasing rearrangement of x. Similarly, let \(x_{(1)} \le \cdots \le x_{(n)}\) denote the components of x in increasing order, and let \(x_{\uparrow } = (x_{(1)}, \ldots , x_{(n)})\) denote the increasing rearrangement of x. Let \({\mathbb {D}}\) denote the set of descending vectors in \({\mathbb {R}}^n\), that is, \({\mathbb {D}} = \{(x_1,\ldots ,x_n): x_1\ge \cdots \ge x_n\}\). Similarly, we have \(\mathbb {D^+} = \{(x_1,\ldots ,x_n): x_1\ge \cdots \ge x_n \ge 0\}\).

Definition 3

For \(x,y \in {\mathbb {R}}^n\),

and

x is said to be weakly submajorized by y, if \(x \prec _w y\), and x is said to be weakly supermajorized by y, if \(x \prec ^w y\). In either case, x is said to be weakly majorized by y (y weakly majorizes x). Moreover, x is said to be majorized by y (y majorizes x), denoted by \(x \prec y\) if both cases hold.
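For readers who want to experiment with these relations, the sketch below implements them under the standard definitions in [26], which are assumed here to match the displays of Definition 3: \(x \prec _w y\) when the sum of the k largest components of x never exceeds that of y, and \(x \prec ^w y\) when the sum of the k smallest components of x is never below that of y, for every \(k = 1, \ldots , n\). The function names are illustrative.

```python
def _check_lengths(x, y):
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")


def weakly_submajorized(x, y, tol=1e-12):
    """x ≺_w y: partial sums of the decreasing rearrangement of x are <= those of y."""
    _check_lengths(x, y)
    sx = sy = 0.0
    for a, b in zip(sorted(x, reverse=True), sorted(y, reverse=True)):
        sx += a
        sy += b
        if sx > sy + tol:
            return False
    return True


def weakly_supermajorized(x, y, tol=1e-12):
    """x ≺^w y: partial sums of the increasing rearrangement of x are >= those of y."""
    _check_lengths(x, y)
    sx = sy = 0.0
    for a, b in zip(sorted(x), sorted(y)):
        sx += a
        sy += b
        if sx < sy - tol:
            return False
    return True


def majorized(x, y, tol=1e-12):
    """x ≺ y: both weak relations hold (which forces equal totals)."""
    return weakly_submajorized(x, y, tol) and weakly_supermajorized(x, y, tol)


def negate(x):
    return [-v for v in x]
```

Under these definitions, `weakly_supermajorized(x, y)` and `weakly_submajorized(negate(x), negate(y))` always agree, because negation turns the increasing rearrangement into the decreasing one and flips the direction of each partial-sum inequality.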

It is easy to see that

Theorem 1

(Theorem A.7, p.86 in [26])

Let \(\phi \) be a real-valued function, defined and continuous on \({\mathbb {D}}\), and continuously differentiable on the interior of \({\mathbb {D}}\). Denote the partial derivative of \(\phi \) with respect to its kth argument by \(\phi _{(k)}\): \(\phi _{(k)}(z) = \partial \phi (z)/\partial z_k\). Then,

if and only if,

i.e., the gradient \(\nabla \phi (z) \in {\mathbb {D}}\), for all z in the interior of \({\mathbb {D}}\).

Lemma 1

(Theorem H.3.b, p.136 in [26])

If \(x,y \in {\mathbb {D}}\) and \(x \prec _w y\), then

Proposition 5

If \(x,y \in {\mathbb {D}}\) and \(y \prec ^w x\), then for each \(k \in \{ 1,\ldots , n\}\), we have

where \(\mathbb {I^+} = \{(x_1,\ldots ,x_n): 0\le x_1\le \cdots \le x_n\}\).

Proof

Take \(x,y\in {\mathbb {D}}\) with \(y \prec ^w x\). Let \({\hat{x}}\) be the reverse arrangement of x, in particular

and by definition we have \({\hat{y}} \prec ^w {\hat{x}}\). Using Eq. (51), we have \(-{\hat{y}} \prec _w -{\hat{x}}\) with \(-{\hat{x}} \in {\mathbb {D}}\) and \(-{\hat{y}} \in {\mathbb {D}}\). Take \(u \in \mathbb {I^+}\) and let \({\hat{u}}\) be the reverse arrangement of u. We have \({\hat{u}} \in \mathbb {D^+}\). Moreover, for any \(k \in \{1,\ldots ,n\}\), we have

It follows from Lemma 1 that

and therefore we obtain

Note that this proposition could be also proven by applying Theorem 1 with \(\phi (z) = \sum \nolimits _{i=1}^k -z_{n+1-i} u_{k+1-i}\), for \(k \in \{1,\ldots ,n\}\). \(\square \)

Theorem 2

(Chebyshev Integral Inequality) (Theorem 9, p.39 in [31])

Let f and g be real and integrable functions on [a, b] and let them both be either increasing or decreasing. Then,

If one function is increasing and the other is decreasing, the reverse inequality holds.
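For orientation, the Chebyshev integral inequality is usually stated in the following form, which is assumed here to agree with the display of Theorem 2; the small example with \(f(x)=x\) and \(g(x)=x^2\) on \([0,1]\) is added purely as an illustration.

```latex
\begin{align*}
\int_a^b f(x)\,g(x)\,dx \;\ge\; \frac{1}{b-a}\int_a^b f(x)\,dx \int_a^b g(x)\,dx .
\end{align*}
% Example: f(x) = x and g(x) = x^2 are both increasing on [0, 1], and
%   \int_0^1 x^3\,dx = 1/4 \;\ge\; (1/2)(1/3) = 1/6 .
% With g(x) = -x^2 (decreasing) the inequality reverses: -1/4 \le -1/6 .
```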

We use the tilde sign to denote variables computed using the approximation method, such as \({\widetilde{W}}_n\) for the customer’s waiting time. Let us define the following notation:

and

for \(0\le l \le n-1\), and similarly for \(\tilde{p}_{n,i\ge l}\) and \(\tilde{p}_{n,i\le l}\). We use \(g (n, \lambda )\) and \(G (n, \lambda )\) to denote the probability mass function (pmf) and cdf of a Poisson distribution with rate \(\lambda \). We have \(g(n, \lambda ) = \frac{ \lambda ^{n}}{n!} e^{-\lambda }\) and \(G(n, \lambda ) = \sum _{i=0}^n\frac{ \lambda ^{i}}{i!} e^{-\lambda }\) if \(n\ge 0\), and \(g(n, \lambda )=G(n, \lambda )=0\) otherwise.
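The two Poisson functions defined above translate directly into code. The following is a minimal sketch that keeps the names g and G from the text and implements the convention that both vanish for negative n.

```python
import math


def g(n, lam):
    """Poisson probability of exactly n events with rate lam; zero for n < 0."""
    if n < 0:
        return 0.0
    return lam ** n / math.factorial(n) * math.exp(-lam)


def G(n, lam):
    """Poisson probability of at most n events with rate lam; zero for n < 0."""
    if n < 0:
        return 0.0
    return sum(g(i, lam) for i in range(n + 1))
```

For a fixed rate, G is non-decreasing in its first argument and approaches 1 as that argument grows, which is the monotonicity property invoked later in the proof when the rate is \((u- D_{n-1})\mu \).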

Let us recall the differences between the exact and approximate methods we developed in the main paper. Instead of the conditional distribution of inter-arrival time \(h_{n,j}(\cdot )\) used in the exact method (as shown in Eq. (9)), we use the unconditional inter-arrival time distribution \(h_{n}(\cdot )\) as the approximation for \(3 \le n \le M\) (as shown in Eq. (35)). Since no approximation is involved in the computation of \({\tilde{p}}_{n,i}\) for \(n = 1,2\), the expected waiting times of customers 1 and 2 are the same under both methods. Therefore, to prove Eq. (49), we only need to show

which is equivalent to

for \(3 \le n \le M\).

In the following, we use induction to prove that

for \(3 \le n \le M\). By definition, Eq. (53) leads to

which is equivalent to Eq. (52), since \(p_{n,i\ge 0} = {\tilde{p}}_{n,i\ge 0} = 1\).

Initialization: For \(n=2\), we have \(p_{2,i\ge l} = {\tilde{p}}_{2,i\ge l}\) for \(l=0,1\), as no approximation is involved when computing \({\tilde{p}}_{n,i}\). By definition, we have

Induction: Assume Eq. (53) holds for \(n-1\), which gives

Let us prove that

This reduces to proving that

since \(p_{n,i\ge 0} = {\tilde{p}}_{n,i\ge 0} = 1\).

We start by providing an equivalent formulation of \(\text{ Pr }\{R_n =i \mid R_{n-1}=j\}\) to the one used in Eqs. (8) and (9). Instead of conditioning on the inter-arrival time between customers \(n-1\) and n, we can compute \(\text{ Pr }\{R_n =i \mid R_{n-1}=j\}\) by conditioning on the arrival time of customer \(n-1\), where we have

for \(0 \le j \le n-2\) and \(0 \le i \le j+1\).

Thus,

Since \(f_{n-1}(v)\) is constant on \([d_{n-1}-\tau _{n-1}^l, d_{n-1}+\tau _{n-1}^u]\), together with Theorem 2, we obtain

where \(f_{n-1,i \ge l}(v)\) is the pdf of the conditional arrival time of customer \(n-1\), given that she finds l or more customers in the system upon her arrival. The first equality in Eq. (60) is derived by applying Bayes’ theorem. In particular,

Similarly, we can write

Substituting Eq. (59) with Eqs. (60) and (61) gives

Next, we compute \(\sum _{l=k}^{n-1} {\tilde{p}}_{n,i\ge l}\) with a characterization of \({\tilde{p}}_{n,i}\) that is equivalent to what we used in our approximation. In the approximation method, we approximate \(h_{n-1,j}\) in Eq. (9) by \(h_{n-1}\), which leads to

with

We can substitute \(h_{n-1}(x)\) and reformulate \({\tilde{p}}_{n,i}\) by changing the integration variables and ranges, which gives

Then, we can compute \(\sum \nolimits _{l=k}^{n-1} {\tilde{p}}_{n,i\ge l} \) by using Eq. (65). This implies

Finally, we are ready to show that Eq. (57) holds. By assumption, it follows from Eq. (55) that

for all \(k = 1, \ldots , n-2\). For fixed \(u \in [ d_{n}-\tau _{n}^l, d_{n}+\tau _{n}^u]\), it is obvious that \(\Big ( {\mathbb {E}}_{{D_{n-1}}}\big [ G(l, (u- D_{n-1})\mu ) \big ] \Big )_{l=1}^{n-2}\) and \(\Big ( {\mathbb {E}}_{{D_{n-1}}}\big [ G(l-1, (u- D_{n-1})\mu ) \big ] \Big )_{l=1}^{n-2}\) are positive and increasing in l. Therefore, according to Proposition 5, we have

which leads to

since \( p_{n-1,i \ge 0 } = {\tilde{p}}_{n-1,i \ge 0 } = 1\). Also, according to Proposition 5, we have

Since Eqs. (67) and (68) hold for all \(u \in [ d_{n}-\tau _{n}^l, d_{n}+\tau _{n}^u]\), together with Eqs. (62) and (66), we deduce that

which completes the proof of the proposition. \(\square \)

Appendix D: Experiments related to Sect. 6

See Fig. 5 and Tables 5, 6, 7, and 8.

Cite this article

Jouini, O., Benjaafar, S., Lu, B. et al. Appointment-driven queueing systems with non-punctual customers. Queueing Syst 101, 1–56 (2022). https://doi.org/10.1007/s11134-021-09724-9

DOI: https://doi.org/10.1007/s11134-021-09724-9