Abstract

This paper examines the political economy of epidemic disease. First, it outlines the incentive and information problems facing policymakers in responding to a new epidemic. Second, it considers the existence of a tradeoff between public health and freedom. Informed by a survey of the history of public health and an analysis of the response to Covid-19, it presents evidence that such a tradeoff can obtain in the short run but that, in the long run, the negative relationship is reversed and the tradeoff disappears.

1 Introduction

In this paper, I examine the relationship between epidemic disease and the state. Specifically, I consider the existence of a tradeoff between public health and freedom in both the short run and the long run.

Although many scholars in recent decades have emphasized the significance of epidemics in history (e.g., McNeil, 1974; Brooke, 2014; Campbell, 2016), and historians have documented the rise of the public health movement in the 19th century, disease rarely has featured in prominent political economy accounts of the rise of the modern state. With a few exceptions, it has been confined to histories of disease and medicine, and either popular science (e.g., Crawford, 2000) or specialist studies, rather than foregrounded in the major treatments in historical sociology (for instance, Mann, 1986; Tilly, 1990; Ertman, 1997; Scott, 1999; Fukuyama, 2011), political economy (Brennan & Buchanan, 1980; Glaeser & Shleifer, 2003; Besley & Persson, 2011), or economic history (Higgs, 1987; North, 1981; Lindert, 2004).Footnote 1 One exception to this generalization is the important work of Werner Troesken. Troesken (2004, 2015) studied the tradeoff between freedom and control of disease in the United States. But, as documented by Leeson and Thompson (2021), until recently little work has been done by public choice scholars on the intersection of epidemic disease and the state. Following the Covid-19 pandemic, however, that neglect already has begun to be remedied (see, for example, March, 2021).

Disease, and attempts to control disease, have played major roles in the evolution of states throughout history. In particular, the rise of modern states in the 19th century saw massive investments in sanitation, the beginning of publicly funded medical research, and the rise of public health infrastructure. Considerable research has explored the extent to which those developments, in conjunction with the scientific breakthroughs of the same period, notably the germ theory of disease, were responsible for the remarkable increase in life expectancy that occurred between 1850 and 1950 (see McKeown et al., 1975; Harris, 2004; Harris & Hinde, 2019). At the same time, there was a dark side to the public health movement, as the authority granted to public health officials was associated with numerous abuses, the most notable of which included forced sterilizations and the denial of medical treatment to specific populations.

In this paper, I focus on epidemic disease. Contagious diseases pose major challenges to any society. But such diseases pose specific challenges to a liberal social order.Footnote 2 A liberal social order is one that is organized around the principle that, with a few well-defined exceptions, individuals generally can make decisions that are best for themselves. Indeed, the case for a liberal society can be seen as resting on two pillars. The first pillar is the moral intuition that granting individuals this freedom is a good, in and of itself. The second pillar is the empirical claim that a society based on classical liberalism will do better in terms of allowing individuals to flourish as human beings. An important component of that view is that liberalism will make one rich (as argued by McCloskey, 2006). But the benefits of liberalism are broader still: they include good health and longer life expectancy.

The presence of novel, dangerous epidemic diseases threatens both pillars. As such, epidemic disease itself is a threat to the liberal order. What principles should guide our response to such threats? Adam Smith observed that “these exertions of natural liberty of a few individuals which might endanger the security of the whole society are, and ought to be, restrained by laws of all governments, of the most free as well as of the most despotic” (Smith, 1776, ii.ii, emphasis added). Moreover, Smith, in discussing how military institutions may be justified for maintaining a martial spirit in the population, took it for granted that a state will devote “its most serious attention to prevent a leprosy or any other loathsome and offensive disease ... from spreading itself among them, though perhaps no other public good might result from such attention besides the prevention of so great a public evil” (Smith, 1776, v. iii. 345).Footnote 3 John Stuart Mill’s (1989) harm principle also offers the basis for numerous public health interventions, at least for harms judged to be sufficiently large and morally serious. Indeed, as Epstein (2003, 2004) documents, constitutional scholars and the US Supreme Court in the Lochner era recognized the legitimacy of public health interventions that were limited to containing the spread of contagious disease.Footnote 4 The economic theory of externalities pioneered by Alfred Marshall, Pigou (1952), and Ronald Coase (1960) also can justify widespread government action if the externalities in question are large, non-pecuniary, and difficult to observe and measure.

On the other side of the ledger, and as Mill himself recognized, classical liberal thought warns that the power of government to regulate the spread of epidemic disease, known in American law as the quarantine power, can easily be misused (see Parmet, 2008). Perhaps the most notorious example of medical malpractice by public health authorities is the Tuskegee Syphilis Study, which began in the 1930s and continued as late as the early 1970s. The aim was to study the long-run effects of syphilis in African American men. To that end, the study’s participants were examined, had their blood drawn, and had their corpses subjected to autopsies, but they were not given proper medical treatment. That protocol became all the more egregious once penicillin was developed to treat syphilis.

Tuskegee’s medical intervention had a lasting negative impact on public health. Alsan and Wanamaker (2018) document the adverse long-term consequences of that episode of medical malpractice. Specifically, they find that older African Americans living close to Macon County, Alabama, the site of the Tuskegee experiments, were less likely to visit a doctor or utilize the healthcare system more generally. That response translates into a mortality penalty of 4 percentage points for older African American males and accounts for some 35% of the life expectancy gap between black and white men in 1980. A similar experience occurred in West Africa as a result of medical interventions undertaken during French colonial rule (Lowes & Montero, 2021). Other examples include the forcible sterilization of African Americans in the 1950s and 1960s studied by Price and Darity (2010).

Two lessons can be drawn here and both are important. Major threats such as pandemics can justify widespread government intervention for the duration of the pandemic. But many other historical examples can be identified of governments using epidemic diseases to justify violating medical ethics, including withholding treatment or violating patients’ autonomy by performing surgeries without consent, and hence abusing their power. Nonetheless, it does not follow from the simple fact that the extensive governmental powers granted to combat diseases can be or have been abused, that no such powers ever should be granted. In liberal societies, alternative means are available to check such abuses, such as electoral constraints, independent judicial review, and an independent media.

Setting aside well-known caveats concerning the young or mentally incapacitated, a liberal society handles most individual-level risks without resorting to coercive measures. Individuals, who vary in their risk preferences, can calculate the possibility of dying from skydiving, smoking, or driving a car and can make informed decisions. They need not calculate those probabilities perfectly; because the underlying probability distributions for such events are stationary, reliance on simple heuristics will enable them to make informed decisions about the tradeoffs involved.

This framework likewise can handle most externalities and instances of informational asymmetries. Many individual-level externalities can be internalized through markets and social norms. For instance, the external risk my reckless driving poses to others is, to an extent, internalized by insurance premiums. The same goes for skydiving. Many informational asymmetries can be ameliorated by warranties or by firms that aggregate information (review sites, for example). Other externalities may require government intervention. Here, one simple principle that accords with classical liberalism is stated by Buchanan and Tullock (1962): externalities should be resolved at the lowest level at which the externalities in question can be internalized. Thus, local ordinances or city- or state-level laws often are more appropriate for handling many externalities.

A new and highly contagious epidemic disease provides a different set of challenges, however. Unlike the examples considered above, with a novel epidemic disease such as Covid-19, at least initially, the probability of death or serious harm was not a stable number over which individuals could form well-defined beliefs. In the initial phase of the epidemic, the magnitudes of the externalities were unknown.Footnote 5 Early on in the pandemic many commentators were led astray. The probability of catching Covid-19 depends on the share of the population currently exposed to the virus, which is unknown, but might be rising rapidly over time. Moreover, the probability of dying from Covid-19 does not depend only on the virulence of the virus and how it interacts with underlying conditions, but more pertinently on the crowdedness and capacity of the healthcare system ten days or two weeks from now. Thus, comments like the one made in March 2020 by Roger Kimball, the editor of the New Criterion, that “you have a 0.000000155963303 chance of contracting and dying from this dread disease?” were nonsensical, because at the time no stable estimate existed on which individuals could base accurate decisions.
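To see why a single snapshot probability was uninformative, consider a minimal sketch in which the infected share of the population grows exponentially. The starting share and the doubling time below are hypothetical values chosen purely for illustration; they are not estimates for Covid-19.

```python
def infected_share(initial_share: float, doubling_days: float, day: float) -> float:
    """Infected share after `day` days of unchecked exponential growth, capped at 1."""
    return min(1.0, initial_share * 2 ** (day / doubling_days))


# Hypothetical inputs, chosen only for illustration.
INITIAL_SHARE = 1e-6  # assumed share of the population infected on day 0
DOUBLING_DAYS = 3.0   # assumed doubling time in days

shares = {
    day: infected_share(INITIAL_SHARE, DOUBLING_DAYS, day)
    for day in (0, 15, 30, 45)
}
for day, share in shares.items():
    print(f"day {day:2d}: infected share = {share:.6f}")
```

Under these assumptions the infected share rises roughly a thousandfold within thirty days, so a risk figure computed from the day-0 snapshot is stale within weeks. That is the sense in which the underlying distribution is not stationary.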

Furthermore, in contrast to the case with localized and stable externalities, little benefit is found in allowing local city or state-level authorities to adopt pandemic policies autonomously, as argued by Congleton (2021), because the size of the externality itself depends on the local policies that are being chosen. If the externalities extend across hundreds or thousands of miles, then pandemic policies should be coordinated at a national or even global level.Footnote 6 The likely constraint here is that governance problems, including both coordination problems and principal-agent problems, often worsen with the size and scope of government.

From a classical liberal perspective, individual-level or private sector responses, when and where feasible, are preferable to government mandates. Dangerous drivers are penalized partially by higher insurance premiums, a market response that counterbalances, to a degree, the negative externality imposed by bad drivers. Of course, that market-driven response does not negate the need for criminal sanctions for reckless driving. But, at the margin, insurance premiums scaled to accident risk reduce the need for traffic patrols and police stops. That market-based response, however, took time to emerge. The profit opportunities available to entrepreneurs from selling insurance became apparent over a period of decades in the early 20th century. In the initial 2020 Covid-19 outbreak, no market-based mechanisms were in place capable of penalizing individuals who failed to practice social distancing, who did not self-isolate after becoming symptomatic, or who did not wear masks. That is not to say that the private sector did not contribute (or could not have contributed further had it been permitted) to mitigating the spread of the virus: private manufacturers like 3M played critical roles in producing personal protective equipment (PPE), and many instances could be cited of private companies such as alcohol distillers reorganizing so as to produce hand sanitizers. But the brunt of the response to the epidemic was, and had to be, organized politically.

Another possible mechanism for the internalization of externalities, one that would lessen the need for government mandates and restrictions, is social norms. It is probably no coincidence that Japan experienced both a relatively small number of fatalities from Covid-19 during 2020 and promulgated relatively loose constraints on its population. It is at least plausible that pre-existing Japanese social norms concerning mask use, social distancing, handwashing, and a high level of compliance with governmental advice helped to minimize the early spread of Covid-19.Footnote 7 Perhaps paradoxically, attempts to downplay the severity of the virus by libertarian advocates criticizing mask use or advocating unnecessary travel, might have led to, and indeed justify, more onerous government intervention.Footnote 8

As our understanding of the factors responsible for various failures during the Covid-19 pandemic is evolving, it is important to take a historical perspective. In the remainder of the present paper, I first consider the role played by epidemic disease in the rise of modern states. I seek to evaluate both the benefits and the potential dangers of granting a state the power to combat epidemic disease. Specifically, I address the supposed tradeoff between public health and liberty in both the short run and the long run and I consider how the tradeoff can be moderated or even eliminated.

2 The political economy of public health

The control of epidemic disease has long been seen as a core function of a state. Historically, and confining our analysis to Europe and the Americas, the origins of governments’ role in containing epidemic disease go back to the late Middle Ages, and specifically to the period after the Black Death (1347–1352). Following the first, uncontrolled outbreak of bubonic plague, in which at least 40% of Europe’s population died, quarantines and other restrictions regularly were imposed to limit exposure to infectious diseases. For centuries, the effectiveness of such responses was limited by the extent of scientific knowledge. Beginning in the 19th century, a new relationship between the state and control of disease emerged. Governments invested in major sanitation projects in the name of quelling the spread of disease. Vaccination programs were instituted and, in some cases, made mandatory. During the 20th century, government institutions like the US Centers for Disease Control (CDC) were established directing public funds to disease control and medical research. That was a period in which life expectancies rose dramatically and, for the first time, human intervention was responsible for the eradication of several major diseases. But the same period also was characterized by governmental abuses and medical malpractice, most notably but not limited to state-enforced sterilization and euthanasia. The mixed historical record should guide us going forward.

2.1 Incentives and information

To understand how states respond to public health crises, we need to pay attention to two fundamental issues: (1) incentives and (2) information.

By incentives, I principally mean those facing policymakers (though the analysis could be extended to consider the incentives motivating private citizens to comply or not with official policies). Rather than assuming that the state seeks to maximize social welfare, any realistic account of the relationship between the state and epidemic disease should be based on the principle of behavioral symmetry between individuals in the public and private spheres. The public choice of public health assumes that policymakers respond to incentives. We should expect them to implement policies that benefit themselves personally, at least in expectation. The benefits are not necessarily monetary. The utility functions of policymakers may include numerous different components. For instance, policymakers often will place greatest weight on those policies that will help them gain or stay in power. Desierto and Koyama (2020) draw on selectorate theory to identify which pandemic policies are politically optimal.Footnote 9 Specifically, Desierto and Koyama (2020) predict that an incumbent leader chooses the combination of transfers and pandemic policies that will ensure remaining in power. The politically optimal pandemic policy in the model is the policy that would be chosen by the pivotal members of the ruling coalition. Pivotalness here refers to the smallest group whose defection could lead to the incumbent losing power. When applied to democracies, it suggests that the policy chosen will not reflect the preferences of the electoral base of the incumbent but rather those of the newer or more transient members of her electoral coalition.

The theory predicts that a perceived or real tradeoff between controlling a pandemic and mitigating damage to the economy will prompt politicians to respond according to their assessment of the preferences of the pivotal members of the ruling coalition. That is, they will seek to assuage coalition members whose loyalty can least be taken for granted. The responses may include imposing very strict policies if the group of uncertain supporters comprises individuals who are both vulnerable to the disease because of age and unlikely to bear the economic costs of strict policies because they are retired or can work easily from home. Lax policies are possible if that group comprises younger, less vulnerable individuals who could lose their jobs or livelihoods easily from a strict response. Over the course of a pandemic, the politicians’ preferences will adapt. But the model can explain why certain governments reacted more slowly than others in the initial outbreak of Covid-19 (weeks that turned out to be critical in seeding the disease locally in Europe and the United States).

Emphasizing the incentives facing politicians also helps to explain why political leaders were so slow in responding to the HIV/AIDS crisis of the 1980s. Few electoral rewards were available from focusing resources or attention on a disease that initially seemed to be confined to homosexual men.

It is of course impossible to know how the Covid-19 pandemic might have played out had different choices been made by policymakers in early 2020. It may have been the case that, given the virus’s combination of virulence and contagiousness, a large number of deaths would have resulted whatever policies were taken. Nonetheless, in 2020 the reactions of political leaders often lagged rather than led public opinion. Political leaders in Western democracies were reluctant to take too radical measures until the number of confirmed Covid-19 cases was sufficiently high. But that meant that, given the pace at which the disease was incubated and spread, policies like travel restrictions and lockdowns were imposed too late to prevent Covid-19 from seeding in local populations.Footnote 10

Incentives thus help to explain some of the variation in the behavior of policymakers. But information also was critical. Policymakers never have complete information. The information they have access to always is incomplete and provisional. Of course, that is to an extent true in normal times, but the problem of “unknown unknowns” is much greater when a novel virus is working its way through the population. That problem is best viewed as a Hayekian knowledge problem (Hayek, 1945).Footnote 11

More specifically, policymakers face several distinctive and severe knowledge problems. First, policymakers necessarily rely on the scientific advice that they receive. Numerous policy missteps during the response to Covid-19 can be traced to faulty scientific advice.Footnote 12 And the problem especially is severe when the information about a novel disease is becoming available in real time. Second, at any point in time, the number of Covid infections is unknown. Policymakers react to the number of confirmed cases or the percentage of positive Covid tests. But that is a lagging indicator and in the early stages of the pandemic, such measures were very unreliable given the patchy, nonrandom testing regime. The information policymakers used as a basis for decision-making thus was both distorted by selection bias and subject to long and variable lags.
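The selection-bias point can be made concrete with a small arithmetic sketch. It assumes, purely for illustration, that tests are perfectly accurate and are given only to people with symptoms; every number below is hypothetical and is not drawn from any actual testing data.

```python
def targeted_testing(prevalence, p_symptoms_infected, p_symptoms_healthy, tested_share):
    """Return (test positivity, confirmed cases as a share of the population).

    Assumes perfectly accurate tests that are given only to symptomatic people,
    with `tested_share` of the whole population tested.
    """
    symptomatic_infected = prevalence * p_symptoms_infected
    symptomatic_healthy = (1 - prevalence) * p_symptoms_healthy
    positivity = symptomatic_infected / (symptomatic_infected + symptomatic_healthy)
    confirmed_share = tested_share * positivity
    return positivity, confirmed_share


# Hypothetical inputs, chosen only for illustration.
TRUE_PREVALENCE = 0.02  # assumed true share of the population infected
positivity, confirmed_share = targeted_testing(
    prevalence=TRUE_PREVALENCE,
    p_symptoms_infected=0.5,   # assumed share of infected people showing symptoms
    p_symptoms_healthy=0.05,   # assumed share of uninfected people with similar symptoms
    tested_share=0.01,         # assumed share of the population that gets tested
)
print(f"true prevalence:  {TRUE_PREVALENCE:.4f}")
print(f"test positivity:  {positivity:.4f}")
print(f"confirmed share:  {confirmed_share:.4f}")
```

Under these assumptions the positivity rate overstates true prevalence while the confirmed case count understates it, so neither observed indicator identifies the quantity policymakers actually need.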

Furthermore, it is possible for the incentive problem to interact with the knowledge problem. That is the case, for example, when scientific advisors feed politicians only the information they wish to hear or the information that the scientific advisors believe to be politically palatable, something that marred the UK’s early response to Covid-19, for instance. The policy of the UK government was shaped by experts influenced by behavioral psychology rather than epidemiology (Hahn et al., 2020). Experts employed the concept of “behavioral fatigue” to explain why weaker measures were preferable to more stringent ones. The assumption was that individuals would tire of and disobey stricter rules, so the optimal policy was to choose laxer rules. The major problem with that choice, beyond the fact that the existence of such fatigue was entirely hypothetical, is that it did not consider the effects that laxer rules would have on individuals’ assessments of how transmittable and serious the new disease was.Footnote 13 In choosing not to implement stricter policies early on, policymakers inadvertently conveyed information that the disease likely was not a serious threat. Policymakers appear to have been operating on the basis of the wrong, “behavioralist” model of the world (for a detailed discussion of such misapplications more generally, see Rizzo & Whitman, 2020).

Recognition of the severity of both the incentive problem and the information problem is not a reason for counseling inaction. Not responding to a novel epidemic disease also is a choice (one subject to both the incentive problem and the information problem) and one with potentially devastating consequences. It also is inevitable that mistakes will be made. The important political economy questions surround what rules governments should follow to minimize such missteps and mitigate the overall harm from the epidemic.

2.2 Is there a tradeoff between health and freedom?

Policymakers face tradeoffs and have to make decisions based on their assessment of these tradeoffs. Mandatory lockdowns or travel restrictions impose costs on individuals (often specific individuals) while bringing about collective benefits (in terms of reducing the spread of the epidemic disease in question).

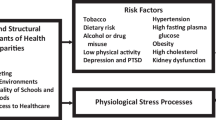

Troesken (2015) examines whether there is a tradeoff between freedom and health in the context of epidemic disease in 18th and 19th century America. Following Troesken’s lead, here freedom will be defined in the negative sense, as in freedom from government coercion. In this case, at a single point in time and in a given society, in the presence of a major outbreak of epidemic disease, a clear tradeoff must be made between public health and freedom. In particular, as Geloso et al. (2021) argue, liberal societies may be particularly bad in controlling so-called diseases of commerce such as smallpox (as opposed to “diseases of poverty” such as typhoid fever). Owing to the presence of widespread externalities that are difficult to observe or measure, restrictions on individual freedom will curb the spread of disease whereas the absence of such restrictions will hasten its spread.

Over a longer span of time or looking across different societies, however, the tradeoff disappears. Societies that enjoy more negative freedom are not afflicted more by epidemic diseases. Indeed, the relationship may even reverse. The most important explanation is simply that greater economic freedom spurs medical innovation. A second reason is that over time, private individuals and households will adjust their behavior unilaterally (given access to information and sufficient resources).

Therefore, both over time and at a given point in time, we have a situation that resembles Simpson’s paradox in probability.Footnote 14 Viewed dynamically, the negative relationship at a given point in time between health and liberty (holding innovation and adaptation constant) does not hold when we consider the relationship in the longer run. The paradox also can be observed when examining comparative statics. That is, at a given point in time, the extent of the tradeoff between health and liberty is moderated by other factors such as culture or politics.
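The reversal can be illustrated with a minimal numerical sketch. The (freedom, health) index values below are invented for illustration and are not data from this paper or any other source; the two periods stand in for low and high levels of innovation and adaptation.

```python
def ols_slope(points):
    """Ordinary least squares slope of y on x for a list of (x, y) pairs."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    return sxy / sxx


# Hypothetical (freedom index, health index) pairs; not real data.
early_period = [(1, 50), (2, 48), (3, 46)]  # innovation held at a low level
late_period = [(4, 70), (5, 68), (6, 66)]   # innovation held at a higher level

slope_early = ols_slope(early_period)
slope_late = ols_slope(late_period)
slope_pooled = ols_slope(early_period + late_period)

print(f"within early period: {slope_early:+.2f}")
print(f"within late period:  {slope_late:+.2f}")
print(f"pooled over periods: {slope_pooled:+.2f}")
```

Within each hypothetical period the slope is negative (more freedom, worse health), yet the pooled slope is positive because the later period combines more freedom with better health. This is the Simpson's paradox pattern: the sign of the relationship depends on whether innovation and adaptation are held constant.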

That insight cautions against both those commentators who laud the apparent success of authoritarian regimes in combating epidemics or improving public health and against alarmist and conspiratorial opposition to public health restrictions adopted by liberal democratic states in the face of an epidemic disease.

Finally, another relevant variable is the effectiveness of governmental responses, which depends on prevailing scientific knowledge, income levels, and state capacity. Recognizing that point indicates that the tradeoff between health and freedom can in fact be bent. In the long run, prosperity and freedom are inputs into scientific research. Open-ended scientific research flourishes in liberal societies. The tradeoff also can be moderated by a more effective, though not necessarily in the long run more intrusive, government response. That conclusion is evident in the Covid-19 pandemic, though it is important to note that, on average, measures of preexisting state capacity are uncorrelated with pandemic outcomes (Bourne, 2021, 234–235).Footnote 15

In part because of recent experiences in handling outbreaks of epidemic disease, such as MERS in 2015, the South Korean government reacted very rapidly in scaling up testing and implementing a rigorous contact-tracing policy. Infected individuals were confined under government quarantine. Those policies were intrusive, but they meant that South Korea escaped both the worst of the pandemic and the more intrusive, long-lasting lockdowns imposed in most of the rest of the world during 2020. In contrast, governmental responses in the United Kingdom and the United States were characterized by numerous mistakes; the early months of the pandemic in January and February 2020 were marked by denial and a failure adequately to prepare for the pandemic, and those mistakes led in the long run to more intrusive interventions. The ineffective responses landed the United States, the United Kingdom and much of Western Europe in the worst of both worlds.

The questions considered here are critical to understanding the political economy of pandemic policy from both a positive and a normative perspective. If a tradeoff between public health and freedom exists, then some individuals will always prioritize the former over the latter. Authoritarian regimes will attract adherents by touting their public health achievements—as indeed regimes like China, the Soviet Union and Cuba have done in the past.Footnote 16 At the same time, if the tradeoff is indeed a genuine one, liberals and libertarians will have to accept the inevitability of high death tolls from periodic outbreaks of epidemic disease as the price of freedom.

3 A brief history of epidemic disease and the state from the middle ages

3.1 Leprosy and the black death

Prior to the Black Death (1347–1352), European polities took little or no action against epidemic disease. This was for several reasons. First, such polities were not recognizable states in the modern sense (see the discussion in Johnson & Koyama, 2017, 2). Political authority was decentralized, fragmented, and often overlapping. Tasks such as provision for the poor or economic regulation were left to local authorities, guilds, or the Church. Second, disease was interpreted through a moral and religious lens rather than as a matter of “public health”. In this environment the political economy of disease was quite different: given the ineffectiveness of most responses, there was no tradeoff between public health and freedom.

This is clear with respect to the most prominent disease in pre-Black Death medieval sources: leprosy. The bacterium responsible for leprosy (Hansen’s disease) was identified in the late 19th century. The modern disease arrived in Europe around the 6th century CE (the “leprosy” of antiquity was a different disease). As discussed by Moore (1987), leprosy became a major social issue in the 11th and particularly the 12th and 13th centuries as hospitals and colonies for lepers were established across Europe. Gradually, lepers were separated from society.Footnote 17

In general, leprosy was viewed as a religious issue. Medieval attitudes to leprosy were marked by paradox. Moore (1987, 57) notes that, on the one hand, “the living death of leprosy was an object of admiration and even envy, as well as terror. The leper had been granted the special grace of entering upon payment for his sins in this life, and could therefore look forward to earlier redemption in the next”. On the other hand, the leper could not help but be the object of social opprobrium and over time leprosy came to be associated with heresy. Moore associates growing fear of leprosy in the 12th and 13th centuries with the contemporaneous intensification of a campaign against heresy. Lepers became “Symbolic representations of evil. Leprosy was a warning to all living that their sinful lives might result in God’s punishment. They reaffirmed one’s commitment to and fear of God” (Covey, 2001, 320). Lepers were not simply confined in order to reduce contagion. Rather, once confined, their lives were policed: “men and women were separated, fornication, drinking, gambling and chess prohibited, attendance at mass, food, clothing, and movement inside as well as outside the house minutely regulated, and the whole enforced by a stern code of punishment” (Moore, 1987, 74–75). At the same time, generosity and compassion for lepers could signify piety, and religious relics were viewed as effective treatments for the disease.

The political economy of epidemic disease in the Middle Ages was shaped by the need to find scapegoats for negative economic or epidemiological shocks. During the Great Famine of 1316–1322, lepers were persecuted across France (Barber, 1981). Local authorities were convinced that lepers together with Muslims and Jews were poisoning healthy people. The King of France, Philip V, apparently was convinced of the veracity of those claims, and lepers were burned alive and their goods confiscated. Subsequently, it generally was acknowledged that the lepers were guiltless; they were not accused of poisoning people during subsequent epidemics. But the pattern of blaming outsiders for outbreaks of epidemic disease had become established.

By the 14th century, leprosy had receded. It is likely that some immunity to leprosy had arisen in the overall population. The next major epidemic disease was the Black Death or bubonic plague. It was a new disease: bubonic plague had been absent from Europe for many centuries. We know from recent scientific advances that the first outbreak of bubonic plague—known as the Plague of Justinian and dated to the mid-6th century—disappeared in the middle of the 8th century. No historical memory of plague existed prior to the Black Death. The arrival of plague in the late summer of 1347 in the port of Messina and its rapid spread thereafter across the continent was a huge shock to European society.Footnote 18

The initial framing for understanding the Black Death was religious. The disease was seen as punishment for sins or as heralding the end of the world (see Horrox, 1994). This framing prompted a variety of responses. Perennial outsiders, and already subject to periodic persecution, Jews across Europe became scapegoats. Specifically, they were accused of causing the disease by poisoning wells. Despite this charge being refuted by the Papacy, the result was one of the worst episodes of anti-Jewish violence prior to the Holocaust (see Cohn, 2007; Finley & Koyama, 2018; Jedwab et al., 2019a).

Another response came in the form of unsanctioned displays of popular piety. Processions of flagellants marched to atone for mankind’s guilt, claiming that the penitents could not die from the plague (Aberth, 2000).

The Church itself organized processions and penitential marches. A jubilee of debt forgiveness was held in Rome in 1350. Nonetheless, the Church suffered in the years following the plague from a lack of trained clergy. Moreover, the plague has been implicated in the general institutional decline of the Papacy that occurred in the late Middle Ages (for more discussion, see Johnson & Koyama, 2019).

Apart from this, no organized political response to the Black Death is evident. The rich tried to flee, but in 1347–1352 at least, escape availed them little as the plague reached almost all corners of Europe. Numerous members of Europe’s political elite died, including Joan, daughter to King Edward III of England. In total, at least 40% of Europe’s population died and, while the extent to which the disease was indiscriminate is debated, it is clear that all aspects of society were affected (Jedwab et al., 2022).

It is in the wake of the Black Death that the origins of modern notions of public health can be seen. And it is in the post-Black Death period that we see the emergence of a tradeoff between public health and liberty. The scale of the shock and the periodic reoccurrence of bubonic plague thereafter called into being some of the first public health measures. In 1377, Ragusa (modern Dubrovnik) imposed a quarantine on travelers from plague-infested areas. Such travelers were confined for a month to a nearby uninhabited island.

It is impossible to say how effective the policies were. Ragusa was hit by several plague outbreaks later in the 14th century. We now know that the vector for bubonic plague was not infected persons per se but rats and other flea-bearing rodents. Infected fleas also could be carried in bundles of clothes, straw, or other goods. The fact that Ragusa prohibited the importation of goods from infected areas suggests some tacit understanding of how to reduce exposure to the plague. Alfani and Murphy (2017) attribute the comparative absence of the plague in the 16th century to the effectiveness of such measures. Ironically, however, this relative success story may have made Italy more vulnerable to the plague of 1629–30, brought into the peninsula by an invading army.

Throughout Italy, the 15th century saw the establishment of lazarets, dedicated quarantine stations to deal with the plague. Health boards were set up to regulate schools and to police individuals whose lifestyles made them prone to spreading disease. These policies were imitated elsewhere in Europe, although not to the same degree, in the 16th and 17th centuries.Footnote 19

The terminology for thinking about infectious disease changed alongside the development of state-level bureaucracies (Kinzelbach, 2006). Italian cities, like Milan, were at the forefront of these developments. From 1452 onwards, the Milanese health board registered plague deaths and recorded the symptoms of victims. Cohn and Alfani (2007, 178) note that “[t]hough not the first attempts to register a city’s deaths (as opposed to burials), they are almost unique in that regard, at least until the civil registers of the nineteenth century, given that university-trained physicians—not parish priests, other clerics, or gravediggers—evaluated the corpses, gathered the reports of symptoms, and pronounced the cause of death”. In the German-speaking lands, major investments in public health also were made at the city level. Informed by the prevailing miasma theory, city councils aired bedding and clothing and clamped down on the vagrant poor. Kinzelbach (2006, 386) notes that “[t]he requisite period of stay in ‘healthy’ air was two weeks when persons had not been in touch with victims of an epidemic disease, and four or more weeks when cases of ‘pestilence’ had occurred in their surroundings or when reports had described the disease as very virulent. This variation in the required quarantine periods as well as restrictions for peddlers trading with secondhand articles may be attributed to the idea that sick persons played an important role in the transmission of diseases, and that something like ‘contagious objects’ and ‘contagious persons’ existed”. Public health ordinances were accompanied by education spending, and they have been studied by economic historians, who view them as important early instances of non-military investments in state capacity (Dittmar & Meisenzahl, 2020).

Hospices were established to deal with disease, as were the now familiar quarantines and cordons sanitaires. Such measures often were enforced brutally. Families were boarded up with suspected plague victims. The expense of dealing with epidemic disease could be large. Then, as now, a tradeoff arose between halting the spread of the disease and short-term damage to the economy. “The business community soon found that a quarantine could cripple a city’s trade and industry. Isolating a single victim’s house, especially in those times of essentially home-centered crafts and industry, could cause losses that rippled through the city as raw or finished materials were destroyed. Urban artisans, who might live close to the margin of survival anyway, could face ruin if health officers proclaimed plague in the town: trade would stop, workers might be confined to their homes, stocks of material might be seized and burnt” (Hays, 2009, 55).

During the late Middle Ages and early modern period, the public health response to epidemic disease was at the city level. As we have seen, the earliest policies for dealing with plague were pioneered in politically fragmented Italy. This was hardly surprising; it was small city states like Florence and Venice that also pioneered innovations in tax collection and public debt. Policy responses also reflected the fragmented nature of political authority in general. Larger polities like France remained loosely governed in comparison. But their relatively small size limited the ability of the Italian city states to coordinate responses to the spread of disease.

The knowledge problem facing early modern doctors and administrators was severe. As Cipolla (1981, 5) puts it, “[t]he Health Magistrates did their best to fight the recurrent epidemics of plague, but what constituted the plague they did not know: they were provided with totally absurd notions concerning the etiology of the disease and the mechanism of its propagation”. Sometimes their policies were “successful” despite the theoretical justification for the interventions being mistaken. One example of this can be found in the outfits designed to protect doctors from plague worn in the 17th century. In addition to the famed beaked noses, these costumes entailed wearing oiled and scented overcoats. While the notion that perfumes would protect the wearer was mistaken, these costumes likely did confer protection because the oiled surfaces reduced exposure to infected fleas.

England lagged behind Italy in developing policy responses to epidemic disease and, as elsewhere in Europe, the response initially was local (Slack, 1989). But in 1578, local policies came under central control. Justices of the Peace,

...were to receive reports on the progress of infection from ‘viewers’ or ‘searchers of the dead’ in each parish, to supervise the activities of constables and overseers of the poor, and to ‘devise and make general taxation’ for the relief of the sick. The clothes and bedding of plague-victims should be burned, and funerals take place after sunset to reduce the number of participants. Above all, infected houses in towns should be completely shut up for at least six weeks, with all members of the family, whether sick or healthy, still inside them. Watchmen were to be appointed to enforce this order, and other officers should provide the inmates with food (Slack, 1989, 169).

Public health became the responsibility of the national government, which issued edicts closing market fairs and limiting contact between towns during epidemics, “despite the damage this might do to an urban economy” (Slack, 1989, 170). Assessing the effectiveness of these policies is difficult. Nonetheless, historians such as Harper (2021, 367) suggest that “human interventions to control plague made the difference” and that while “[t]he end of plague in western Europe resists tidy explanation ...perhaps the most decisive change was in the nature and power of the state”.

The American colonies had broad quarantine powers. John Locke’s constitution of the colony of Carolina “established a broad power to take care of all ‘corruption or infection of the common air or water, and all things’ necessary to protect ‘the public commerce and health’” (Witt, 2020, 15). The colony of Connecticut “authorized the town officials to isolate and care for any person ‘visited with the Small Pox’ or ‘suspected to be infected’—and to charge the person or their parents or master for the costs” (Witt, 2020, 15–16). American towns, cities, and states frequently resorted to these powers during the colonial period.

In summary, the period between 1500 and 1800 saw the formation of more cohesive, powerful, and interventionist states than had existed previously. Centralization was associated with the idea that the state had a responsibility for limiting the spread of epidemic disease. As discussed above, Adam Smith, writing in the late 18th century, took it for granted that the control of epidemic disease was a legitimate responsibility of the state.

Nonetheless, the effectiveness of such policies was limited by the narrow epistemic base of medicine prior to the late 19th century. Attempts to limit the spread of contagious diseases also were limited by the constraints early modern states faced. The Tudor state was mercantilist, and the attempt to control the spread of disease reflected wider concerns with policing borders and international trade. But preventative measures were of limited success: “once plague had reached north-western Europe from the Mediterranean, and particularly when it had reached Amsterdam, whose trade with English ports was continuous, it was difficult to keep it out of Britain” (Slack, 1989, 185).

3.2 Disease, the state, and liberty in the 19th century

The nature of the relationship between epidemic disease and the state changed in the 19th century as governments became more ambitious in addressing public health and as the epistemic basis for disease control and prevention advanced. Several developments deserve attention.

First, intellectual innovations originating in the scientific revolution of the 17th century became sources of new knowledge that could be used to improve health. It is important to recognize that, as Mokyr (2002) documents, the epistemic basis of many medical inventions prior to the acceptance of the germ theory of disease was very narrow. The dominant explanation for the spread of disease was the miasma theory, which held that odors conveyed noxious disease spores. A disease like tuberculosis was recognized as a single disease only in the early 19th century. The doctor responsible, René Laennec, however, mistakenly concluded that it was not contagious but hereditary. Nonetheless, the urge to systemize, to “improve”, and the belief that the natural world was legible, characteristic of the Enlightenment, and specifically of what Mokyr (2002) calls the Industrial Enlightenment, were responsible for important medical breakthroughs, such as Edward Jenner’s establishment of the efficacy of the smallpox vaccine.

Second, as argued by McKeown et al. (1975), economic growth enabled societies to achieve better health outcomes. Alongside the control of epidemic disease, improved nutrition was responsible for the decline in mortality from tuberculosis, diarrhea, and dysentery (for more recent evidence supporting the role of better nutrition, see Schneider, 2021).

A third development was the establishment of government-funded research centers. Such funding began in France in the aftermath of the French Revolution. Historians of medicine place considerable importance on this Paris School of medicine, even though these developments did not necessarily trickle down to improved medical care for many decades (Snowden, 2020).

Fourth, the sanitary movement arose in Industrial Revolution England, associated most prominently with the social reformer Edwin Chadwick. Chadwick, a utilitarian follower of Jeremy Bentham, known as England’s Prussian minister, was prominent in both the reform of the police and the passage of the New Poor Law, as well as in pioneering public sanitation (Brundage, 1986).Footnote 20 Chadwick’s Sanitary Report of 1842 provided an exhaustive survey of the conditions in Britain’s industrializing cities. It documented the widespread pollution and unsanitary conditions in cities like Birmingham, Liverpool, Sheffield, and Glasgow. The intention of the Sanitary Report was to justify a massive centrally organized program of state investment in infrastructure.

Public authorities needed to establish regulations for the construction of building, city planning to control the width of streets so that air and sunlight would penetrate, maintenance of public spaces and oversight to ensure routine maintenance. Interventions were also important to introduce sanitation into a wide range of institutions—army camps and barracks, naval and merchant marine vessels, asylums, hospitals, cemeteries and schools. Boarding houses also needed to be brought into conformity with the system. Chadwick’s reforms were thus resolutely top-down and centralizing. They marked a step in the “Victorian government revolution” that significantly augmented the power of the state (Snowden, 2020, 203).

It is easy to make exaggerated claims on behalf of the sanitary movement. It took many decades for cholera and typhus to be brought under control. Chadwick’s initial report was influenced by the miasma theory of disease. It took many years for public health officials to be convinced by John Snow’s demonstration that cholera was water-borne. Nonetheless, by the 1870s, scientific breakthroughs were working in conjunction with major investments in sanitation and in improving the water supply. And it is important to note that these improvements often were undertaken by private companies before being municipalized by local authorities (see Beach et al., 2016). Indeed, in the United States, Troesken (1999) found that, contrary to the claims of progressive-era reformers, private companies often invested more in water filters than did public ones.

Another reason why it is too simple to attribute all of the dramatic fall in mortality and the rise in life expectancy to government interventions in public health is that private individuals also were important in improving public health outcomes. As emphasized by Mokyr (2002), new knowledge about the causes of disease generated market demand for better sanitation and product information that previously had not existed.

The rise in income ...increased the consumption of goods that improved health: fresh fruits and vegetables, high-protein foods, home heating, hot water, cleaning materials and so on. At the same time, growing government intervention and improving public health reduced the relative price of clean and safe water, as well as the cost of waste disposal, protection against insects, and verifying the safety of food and drink. Not all changes in relative prices were the result of public health measures: technological progress contributed as well: filtration and chlorination of drinking water, refrigerated ships, pasteurization techniques, and electrical stoves and home heating, all reduced the price of health-enhancing foods (Mokyr, 2002, 167–168).

Even taking these important caveats into account, however, the contributions of the sanitation movement were by any measure impressive. The latest estimates by Chapman (2019) suggest that around 30% of the decline in mortality between 1861 and 1900 in England and Wales was caused by infrastructure investments in water supply and sewerage. Studying the fall in infant mortality in Massachusetts between 1880 and 1915, Alsan and Goldin (2019) find comparably large effects.

While brief, the history surveyed here is consistent with two claims advanced above: (1) in the short run there is often a trade-off between public health and freedom, but (2) in the long run the correlation is often reversed; that is, greater freedom is associated with more innovation that can combat the spread of epidemic disease. Whereas Preston (1975) argued that the relationship between income and longevity (and hence indirectly public health) was subject to diminishing returns, greater innovation, driven by freer societies, can potentially mitigate this by shifting the relationship upwards.

The nature of the short-run trade-off is evident in the anti-vaccination movement that arose in the second part of the 19th century in Britain and America, and to which I now turn.

3.3 Opposition to vaccination in late 19th century America

One example of the purported tradeoff between public health and liberty is that of vaccinations. The creation of the smallpox vaccine by Edward Jenner in 1796 provided the possibility of limiting and then eradicating many infectious diseases. However, the progress of vaccination was painfully slow and an anti-vaccination movement arose in both Britain and America. This discussion draws heavily on Troesken (2015).

In the United States, the early efforts to introduce the smallpox vaccine occurred at the local level. The decision to introduce the vaccine was made by towns or cities. Newspapers and also the clergy encouraged people to get vaccinated. Troesken (2015) argues that the small-scale nature of American society in the early 19th century meant that it was possible for a vaccination campaign to succeed because private cooperation complemented the state provision of the vaccine itself. Moral suasion was sufficient to get large numbers of people vaccinated. As a consequence, there was little opposition to vaccination in the early 19th century.

However, by the mid-19th century, with rapid population growth, urbanization, and internal migration, social pressures were insufficient to ensure widespread compliance. “The emergence of large, ethnically diverse cities gradually undermined the ideology of the township, the spirit of volunteerism, and the individual cooperation that it embodied. With the demise of the township and the overwhelming public health demands of the large industrial cities, state coercion in the provision of public health, particularly vaccination, grew increasingly necessary” (Troesken, 2015, 22). The local “township approach” to public health was replaced with a more intrusive, state-based approach. This approach could be heavy-handed, and it prompted a backlash from a growing anti-vaccination movement.Footnote 21 In the United States, anti-vaccinationists successfully used the 14th Amendment to oppose mandatory vaccination.

An important legal case occurred in Cambridge, Massachusetts in 1897 when Albert Pear and Henning Jacobson refused the mandatory and free smallpox vaccinations introduced by the local public health department, claiming that they ran contrary to the Equal Protection Clause of the 14th Amendment. The Massachusetts Supreme Court rejected their case, as did the Supreme Court, which upheld the rights of the states to require mandatory vaccinations so long as they did not employ force in implementing them. Nonetheless, in most states there were no such attempts to enforce mandatory vaccination programs, in part because they anticipated widespread opposition. This anti-vaccination campaign had significant consequences. As Troesken reports:

By 1900, the United States was the richest country in the world, yet its death rate from smallpox was thirty-one times greater than Germany’s, nearly seventy times greater than Denmark’s and Canada’s, and six times greater than Switzerland’s. Even the British colony of Ceylon (Sri Lanka) had a smallpox rate one-third that of the United States (Troesken, 2015, 22).

Looking across American states, Troesken found that states with weak laws enforcing vaccination experienced ten times the rate of smallpox of those with strong laws (that is, 1.03 cases per thousand compared to 0.1 cases per thousand individuals) (p. 90). In contrast, authoritarian regimes like the Soviet Union were able to rapidly implement vaccination programs. As Troesken documents, in the early 20th century there was a U-shaped relationship between the prevalence of smallpox and GDP per capita because “the same institutional features that promoted economic growth simultaneously hindered efforts to implement mandatory vaccination programs” (p. 99). Nonetheless, this trade-off between liberty and public health was not an absolute one. It was particularly evident in the case of smallpox. But in the case of other diseases, Troesken found that a more decentralized approach could be effective. And in other areas, such as the control of animal diseases, the United States was a leader (see Olmstead & Rhode, 2015).

These case studies suggest some preliminary conclusions. In the absence of an epistemic basis for public health interventions, no relationship should be expected between such interventions and actual public health. Hence there should be no tradeoff between public health and other desirable goals, such as liberty. As the epistemic base widens, however, the potential for such a trade-off emerges. Of course, the nature of this trade-off can vary. Autocratic regimes often excel at certain types of public health interventions, such as mandatory vaccination programs or the prohibition of harmful substances, but are less successful in implementing other public health programs. More liberal regimes may stumble in mandating vaccines or in interventions that rely on coercion but can be more effective in mobilizing civil society and in promoting scientific knowledge of the factors that undermine health. Nonetheless, the tradeoff between liberty and public health has particular saliency in the case of a novel pandemic such as Covid-19. Given a fast-moving and highly contagious epidemic, we should expect movement along this tradeoff. Crucially, this tradeoff is a conditional relationship: we should only expect it to hold when other variables (like innovation) are held constant. In the long run, it may in fact reverse because liberal societies are more likely to generate innovations that improve public health.

4 Implications for understanding Covid-19

The Covid-19 pandemic illustrates several of the key points in this essay, as well as the inherent difficulties involved in making policies and predictions in real time. First, a major and novel epidemic is, by itself, a threat to liberal society. It introduces new, unstable, and uncertain externalities into everyday human interactions. These externalities cannot be easily mitigated by private individuals acting alone nor by local authorities. Government lockdowns and other policy responses have been characterized as major threats to liberty or steps along the road to serfdom. But this risks missing the point that the virus itself poses the greater threat to a liberal order.

Viewed historically, that is, in the context of past pandemics or other emergency situations, the lockdown policies implemented in Western democracies in 2020 were not a gross departure from liberal norms. In some cases there was overreach by governors or local police authorities, but in other cases politicians were too slow rather than too eager to impose lockdowns, as they were concerned with the economic costs of such policies and feared being punished by voters.

Second, the ability of public authorities to respond effectively to epidemic diseases depends on the knowledge available to the public and to policy makers. Opposition to the initial lockdowns and demands for rapid reopening were typically characterized by a misunderstanding of how the disease spread. Opposition to lockdowns was correlated with opposition to wearing masks, ironically a low-cost practice that could to a degree moderate reliance on more coercive policies.

Experts and governments also misunderstood the nature of the disease. The WHO acted as a mouthpiece for the Chinese government, refusing to send an independent team to Wuhan, downplaying the risks of air-borne transmission, and delaying the announcement that it was facing a pandemic. While an early travel ban on China might have restricted spread of the disease, the WHO counseled that travel bans were ineffective. Both in the UK and the USA, public health authorities initially cautioned against widespread mask use by the general public before rapidly reversing their advice (see Scientific Advisory Group for Emergencies, 2020). Policymakers outside of East Asia emphasized the importance of hand washing and surface transmission, and did not adapt quickly to the evidence that the disease was predominantly airborne and that ventilation was critical (e.g. Buonanno et al., 2020; Liu et al., 2020; Ma et al., 2020). Similarly, many policymakers downplayed the possibility of asymptomatic transmission and failed to adequately convey this danger to the public at large.Footnote 22 Even late into the crisis, policymakers failed to convey the importance of adequate ventilation, or the benefits of N95 or KN95 masks and respirators over cloth masks. Similarly, those most opposed to policies encouraging or mandating vaccines were also often opposed to stricter lockdown measures.

Third, public choice concerns played a critical role in explaining policy failures. In both the United States and the United Kingdom there was widespread government failure in early testing. The CDC’s original Covid test was faulty during the early stages of the epidemic. The FDA’s decision to restrict private companies from developing tests also proved highly costly. During February and early March 2020, tests were restricted to the small number of individuals who could be linked to travel from China even though the disease was already spreading domestically, and had seeded in Europe, specifically Northern Italy. In February 2020, the United States and several European countries sent personal protective equipment (PPE) and medical equipment to China. In the subsequent months, they then faced severe shortages of the same equipment. During all of this the Federal government abdicated responsibility to the states, whilst playing favorites between governors. In the UK, the government refused to shut borders or to even require travelers into the country to take a Covid-19 test (until January 2021). The UK government then spent the quiet summer months of 2020 subsidizing people to eat in restaurants and requiring workers to return to work rather than planning for the widely predicted second wave.Footnote 23 The FDA acted faster than many expected in approving vaccines for Covid-19 for emergency use, but then delayed full approval for non-emergency use until the second half of 2021. The decision by the CDC and the FDA to pause the Johnson & Johnson vaccine rollout in April 2021 appears to have contributed to vaccine hesitancy. Australia and New Zealand initially won plaudits for implementing strict lockdowns and minimizing the spread of the disease. But in botching their vaccine programs and erring in the direction of greater severity, they have imposed unnecessary costs on their economies and societies.

Fourth, despite these mistakes and the flawed response of policymakers across the world, but especially in the Western democracies, the Covid-19 crisis has also revealed the vitality and ingenuity of modern science. The development of novel mRNA vaccines in record time is a triumph. And it was pharmaceutical companies, one based in the United States (Moderna), the other a multinational (Pfizer), that developed these vaccines, followed by a partnership between a British-Swedish multinational (AstraZeneca) and Oxford University.

Fifth, the Covid-19 pandemic sheds light on the precise nature of the purported tradeoff between health and liberty. In the short run there is a tradeoff: strict restrictions on individual behavior can restrict the spread of the disease. Specifically, studies such as Haug et al. (2020) find that the most effective interventions are curfews, lockdowns and closing and restricting places where people gather in smaller or large numbers for an extended period. But they also note that these interventions have a large cost, especially for children or the vulnerable. McCannon and Hall (2021) find that less economically free states were quicker to impose stay-at-home orders. However, there is no evidence that autocracies have performed better overall than democracies (Frey et al., 2020). Societal factors, including the effectiveness of the political response, are also important and can indeed moderate the tradeoff between freedom and public health. And in the long run this relationship can be reversed. It is societies that have the most economic and intellectual freedom that will produce the innovation that will allow us to overcome this disease. It is important to recall that the scale of the Covid-19 crisis is, in part, explained by the secrecy and repressive nature of the Chinese Communist Party (Ang, 2020). In a more open society, news about the new virus would likely have been available earlier. Moreover, few wish to adopt the Chinese-produced vaccine, whereas it has been medical innovation in liberal democracies like the United States, United Kingdom, and Germany that has pioneered both therapeutic treatments and vaccines.

5 Concluding comments

This paper has considered the political economy of epidemic disease informed both by the Covid-19 crisis and the history of public health interventions more broadly. Specifically, I focused on the challenges epidemic diseases pose to a liberal order and the possibility that in the short run, following the emergence of a new infectious disease, a negative tradeoff exists between public health and freedom. This tradeoff suggests that in the face of a particularly contagious epidemic disease, the only way we can safeguard public health is by accepting restrictions on individual freedom that would be unconscionable in normal times. The important lesson from history, I suggest, is that this trade-off only holds in the short run. In the longer run the relationship between freedom and public health is positive, both because greater freedom will lead to developments that will improve public health and because societies that are not at the mercy of uncontrolled epidemic disease will be more hospitable for liberal regimes.

A second issue considered in this paper is the political economy of public health more generally. Pandemic policies are made by individuals who respond to incentives and face severe knowledge problems. Future research should consider what factors allow some societies to moderate or mitigate the trade-off between public health and freedom, that is, to have both controlled Covid-19 more effectively and to have made fewer policy missteps along the way. Candidate factors include recent past experience with an epidemic like SARS, higher levels of social cohesion, or a more effective state.

Notes

One important exception is Olmstead and Rhode (2015), which links the introduction of measures to control animal disease in the United States with the rise of the Federal government.

Indeed, historical evidence suggests that the prevalence of epidemic disease contributes to more collectivist and less individualist cultural values (Fincher et al., 2008; Cashdan & Steele, 2013). Individualist values have then been linked to modern economic growth channeled through better institutions (see Gorodnichenko & Roland, 2017).

See the discussion in Reisman (1998, 371).

Specifically, Epstein (2003, p. S145) notes: “Within the framework of American constitutionalism, quarantine necessarily interfered with the ordinary liberty to travel, but the gains to public health (i.e. the safety of countless others) so outweighed the losses that it was impossible to mount a principled categorical attack against this form of regulation. Behind a veil of ignorance, everyone would opt for quarantine when no lesser remedy could do the job. The basic laissez-faire account of the police power holds: everyone is a net gainer from behind the veil of ignorance of the uniform application of quarantine rules. So understood, this view of the police power was seamlessly incorporated, as Novak has noted, into the American law dealing with the subject.”

Note that it was not merely a matter of the externalities’ magnitudes. The externalities also were nonlinear and subject to random shocks: past a certain threshold, the healthcare system could become overloaded, generating a cascading series of negative effects, not only on individuals suffering from Covid-19 but also on those suffering from all kinds of other ailments.

This does not mean that optimal policies will be the same in New York City as in Logan, Utah; but it means that they cannot be made based solely on local conditions or in isolation from each other.

Indeed, some evidence is available that cultural norms affected the early spread of Covid-19 (see Min, 2020; Goldstein & Wiedemann, 2020; Woelfert & Kunst, 2020). Here, I am taking levels of trust and other social norms as given. In the long run, intrusive or predatory government can erode trust (see Berggren & Jordahl, 2006).

The British website Lockdown Sceptics published numerous articles criticizing mask use: https://lockdownsceptics.org/2020/07/11/latest-news-71/?fbclid=IwAR0HILqITsl7a0mXz1If5JWlgLjOn0qA25ivaTcg5b3qSa7s_yGqRs0eAXg as did the AIER https://www.aier.org/article/unmasking-masks/ and https://www.aier.org/article/the-year-of-disguises/. The case for low-cost interventions such as masking was initially based on credible theoretical arguments (see Leech et al., 2021; Liao et al., 2021; Sankhyan et al., 2021). Given the situation, more rigorous empirical evidence has taken longer to emerge, though an RCT on encouraging mask use has found positive (but small) effects (Abaluck et al., 2021).

Selectorate theory encompasses both democratic and autocratic regime types. Specifically, an incumbent leader’s political survival depends on the continued support of a coalition of size W that is drawn from a ‘selectorate’ of size S (see de Mesquita et al., 2003).

The restrictions proved effective only in geographically isolated Western polities like New Zealand.

See Pennington (2010) for a discussion of how knowledge problems beset policymakers.

Clear mistakes made early in the pandemic by the UK or US governments include, but are not limited to: (1) the UK’s Scientific Advisory Group for Emergencies (SAGE) advising that large public events such as the Cheltenham Festival and the Liverpool–Atletico Madrid Champions League game go ahead in March 2020; (2) public health authorities advising against mask use, to the extent of banning masks from being advertised; (3) failure to acknowledge that Covid-19 largely is airborne (see Wilson et al., 2020) and consequent overemphasis on fomite transmission; (4) restrictions on outdoor activities, such as visiting parks and beaches, during the lockdowns of March–May 2020 (and in some cases longer). More general problems with the structure of SAGE are discussed by Koppl (2021).

Another trope was the idea that “fear” of the pandemic was somehow worse than the pandemic itself. One example is Sunstein (2020), a notable advocate of using behavioral economics to shape policy. Accordingly, governments received advice against policies that might spread “panic”.

The term Simpson’s paradox refers to a situation in which patterns that are apparent in two separate groups of data disappear or reverse when those groups are combined. More generally, it can be explained by the presence of a lurking confounder that reverses the sign of a correlation.
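To make the mechanism concrete, the following is a minimal sketch in Python. The counts are hypothetical and chosen purely for illustration; they are not drawn from any study cited in this paper, and the names `counts`, `within_group_gaps`, and `pooled_gap` are my own. In each group the "treated" arm has the higher success rate, yet the pooled comparison reverses sign because the treated observations are concentrated in the group with the lower baseline rate. Group membership plays the role of the lurking confounder.

```python
from fractions import Fraction

# Hypothetical (successes, trials) counts; illustrative only, not real data.
counts = {
    "group_1": {"treated": (90, 100), "control": (800, 1000)},
    "group_2": {"treated": (300, 1000), "control": (20, 100)},
}


def rate(successes, trials):
    """Exact success rate as a fraction, avoiding floating-point rounding."""
    return Fraction(successes, trials)


def within_group_gaps(data):
    """Treated-minus-control success rate, computed separately for each group."""
    return {
        group: rate(*arms["treated"]) - rate(*arms["control"])
        for group, arms in data.items()
    }


def pooled_gap(data):
    """Treated-minus-control success rate after pooling all groups together."""
    pooled = {}
    for arm in ("treated", "control"):
        successes = sum(arms[arm][0] for arms in data.values())
        trials = sum(arms[arm][1] for arms in data.values())
        pooled[arm] = rate(successes, trials)
    return pooled["treated"] - pooled["control"]


if __name__ == "__main__":
    for group, gap in within_group_gaps(counts).items():
        print(group, "gap:", float(gap))
    print("pooled gap:", float(pooled_gap(counts)))
```

With these illustrative counts the within-group gaps are both positive while the pooled gap is negative, which is the sign reversal the note describes.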

Indeed, the model of Desierto and Koyama (2020) provides one explanation why policymakers in states with high capacity might choose not to use that capacity to moderate the spread of an epidemic.

The Soviet Union had twice as many physicians per capita as the United States in 1986 (Geloso et al., 2020, 1). Cuba also is hailed by many observers for its public health achievements. Recent research suggests that those achievements largely are a mirage: infant mortality increased relative to a counterfactual “synthetic” Cuba before returning to trend (Geloso & Pavlik, 2020).

Little is known about why leprosy became so prominent in these centuries. For instance, more than 2000 leprosariums existed in France in the mid-12th century. Even today the transmission of the disease remains puzzling. Greater urbanization and the concentration of poverty may have exacerbated the spread of the disease (Covey, 2001).

Cohn and Alfani (2007, 197) note that “surprisingly, the Lazzaretto does not appear from this evidence to have been the ineluctable death trap that it is often portrayed to be (most vividly in Alessandro Manzoni’s I Promessi sposi [1842])”. Examining records from Milan, they find that “[t]he survival rate (two-thirds, or 517 of 779) was remarkably high”.

The Home Secretary Lord John Russell warned Chadwick: “We are busy in introducing system, method, science, economy, regularity, & discipline. But we must beware not lose the co-operation of the country—they will not bear a Prussian Minister, to regulate their domestic affairs—so that some faults must be indulged for the sake of carrying improvement in the mass” (quoted in Philips, 2004, 876).

The anti-vaccination movement was not based on a single ideology. Individuals came to oppose vaccinations for a range of religious, political or ideological reasons. Some opposed vaccines as “unnatural” meddling in God’s order. Others believed that mandatory vaccines were an unjust extension of state power. Still others disbelieved in their scientific efficacy or held that they were dangerous.

One example of this was Georgia governor Brian Kemp, who admitted as late as April 2020 that he did not know that asymptomatic people could spread Covid-19, although evidence for asymptomatic transmission had been available since January (Edelman, 2020).

Fetzer et al. (2021) estimate that the subsidy given to individuals eating inside restaurants was responsible for 8–17% of new infections in August 2020.

References

Abaluck, J., Kwong, L. H., Styczynski, A., Haque, A., Kabir, M. A., Bates-Jeffries, E., et al. (2021). The impact of community masking on COVID-19: A cluster-randomized trial in Bangladesh.

Aberth, J. (2000). From the brink of the apocalypse: Confronting famine, war, plague, and death in the later middle ages. Routledge.

Alfani, G., & Murphy, T. E. (2017). Plague and lethal epidemics in the pre-industrial world. The Journal of Economic History, 77(1), 314–343.

Alsan, M., & Goldin, C. (2019). Watersheds in child mortality: The role of effective water and sewerage infrastructure, 1880–1920. Journal of Political Economy, 127(2), 586–638.

Alsan, M., & Wanamaker, M. (2018). Tuskegee and the health of black men. The Quarterly Journal of Economics, 133(1), 407–455.

Ang, Y. Y. (2020). When COVID-19 meets centralized, personalized power. Nature Human Behaviour, 4(5), 445–447.

Barber, M. (1981). Lepers, Jews and Moslems: The plot to overthrow Christendom in 1321. History, 66(216), 1–17.

Beach, B., Troesken, W., & Tynan, N. (2016). Disease and the municipalization of private waterworks in nineteenth century England and Wales. Working Paper.

Berggren, N., & Jordahl, H. (2006). Free to trust: Economic freedom and social capital. Kyklos, 59(2), 141–169.

Besley, T., & Persson, T. (2011). Pillars of prosperity. Princeton University Press.

Bourne, R. A. (2021). Economics in one virus. Cato Institute.

Brennan, G., & Buchanan, J. M. (1980). The power to tax. Liberty Fund.

Brooke, J. L. (2014). Climate change and the course of global history: A rough journey. Cambridge University Press.

Brundage, A. (1986). Ministers, magistrates and reformers: The genesis of the Rural Constabulary Act of 1839. Parliamentary History, 5(1), 55–64.

Buchanan, J. M., & Tullock, G. (1962). The calculus of consent. University of Michigan Press.

Buonanno, G., Morawska, L., & Stabile, L. (2020). Quantitative assessment of the risk of airborne transmission of SARS-CoV-2 infection: prospective and retrospective applications. medRxiv.

Campbell, B. M. (2016). The great transition: Climate, disease and society in the late-medieval world. Cambridge University Press.

Cashdan, E., & Steele, M. (2013). Pathogen prevalence, group bias, and collectivism in the standard cross-cultural sample. Human Nature, 24(1), 59–75.

Chapman, J. (2019). The contribution of infrastructure investment to Britain’s urban mortality decline, 1861–1900. The Economic History Review, 72(1), 233–259.

Cipolla, C. M. (1981). Fighting plague in seventeenth-century Italy. The University of Wisconsin Press.

Coase, R. H. (1960). The problem of social cost. The Journal of Law and Economics, 3(1), 1.

Cohn, S. K. (2007). The black death and the burning of Jews. Past and Present, 196(1), 3–36.

Cohn, S. K., & Alfani, G. (2007). Households and plague in early modern Italy. The Journal of Interdisciplinary History, 38(2), 177–205.

Congleton, R. D. (2021). Federalism and pandemic policies: variety as the spice of life. Public Choice (Forthcoming).

Covey, H. C. (2001). People with leprosy (Hansen’s disease) during the middle ages. The Social Science Journal, 38(2), 315–321.

Crawford, D. H. (2000). The invisible enemy: A natural history of viruses. Oxford University Press.

de Mesquita, B. B., Morrow, J. D., Siverson, R. M., & Smith, A. (2003). The logic of political survival. MIT Press.

Desierto, D., & Koyama, M. (2020). Health vs. economy: Politically optimal pandemic policy. Journal of Political Institutions and Political Economy, 1(4), 645–669.

Dittmar, J. E., & Meisenzahl, R. R. (2020). Public goods institutions, human capital, and growth: Evidence from German history. Review of Economic Studies, 87(2), 959–996.

Edelman, A. (2020). Brian Kemp admits he just learned asymptomatic people can spread coronavirus: Georgia Gov.

Epstein, R. A. (2003). Let the shoemaker stick to his last: A defense of the “old” public health. Perspectives in Biology and Medicine, 46(3), S138–S159.

Epstein, R. A. (2004). In defense of the old public health–the legal framework for the regulation of public health. Brooklyn Law Review, 69, 1421.

Ertman, T. (1997). Birth of Leviathan. Cambridge University Press.

Fetzer, T., et al. (2021). Subsidizing the spread of COVID19: Evidence from the UK’s Eat-Out-to-Help-Out scheme. Economic Journal, (forthcoming).

Fincher, C. L., Thornhill, R., Murray, D. R., & Schaller, M. (2008). Pathogen prevalence predicts human cross-cultural variability in individualism/collectivism. Proceedings of the Royal Society B: Biological Sciences, 275(1640), 1279–1285.

Finley, T., & Koyama, M. (2018). Plague, politics, and pogroms: The Black Death, the rule of law, and the persecution of Jews in the Holy Roman Empire. Journal of Law and Economics, 61(2), 253–277.

Frey, C. B., Chen, C., & Presidente, G. (2020). Democracy, culture, and contagion: Political regimes and countries responsiveness to Covid-19. Covid Economics, 18, 222–238.

Fukuyama, F. (2011). The origins of political order. Profile Books Ltd.

Geloso, V., Berdine, G., & Powell, B. (2020). Making sense of dictatorships and health outcomes. BMJ Global Health, 5, 5.

Geloso, V., Hyde, K., & Murtazashvili, I. (2021). Pandemics, economic freedom, and institutional trade-offs. European Journal of Law and Economics (Forthcoming).

Geloso, V., & Pavlik, J. B. (2020). The Cuban revolution and infant mortality: A synthetic control approach. Explorations in Economic History (Forthcoming).

Glaeser, E. L., & Shleifer, A. (2003). The rise of the regulatory state. Journal of Economic Literature, 41(2), 401–425.

Goldstein, D., & Wiedemann, J. (2020). Who do you trust? The consequences of political and social trust for public responsiveness to Covid-19 orders.

Gorodnichenko, Y., & Roland, G. (2017). Culture, institutions, and the wealth of nations. The Review of Economics and Statistics, 99(3), 402–416.

Hahn, U., Chater, N., Lagnado, D., Osman, M., & Raihani, N. (2020). Why a group of behavioural scientists penned an open letter to the U.K. government questioning its coronavirus response. Behavioural Scientist, 16 March 2020. https://behavioralscientist.org/why-a-group-of-behavioural-scientists-penned-an-open-letter-to-the-uk-government-questioning-its-coronavirus-response-covid-19-social-distancing

Harper, K. (2021). Plagues upon the earth. Princeton University Press.

Harris, B. (2004). Public health, nutrition, and the decline of mortality: The McKeown Thesis revisited. Social History of Medicine, 17(3), 379–407.

Harris, B., & Hinde, A. (2019). Sanitary investment and the decline of urban mortality in England and Wales, 1817–1914. The History of the Family, 24(2), 339–376.

Haug, N., Geyrhofer, L., Londei, A., Dervic, E., Desvars-Larrive, A., Loreto, V., et al. (2020). Ranking the effectiveness of worldwide Covid-19 government interventions. Nature Human Behaviour, 4(12), 1303–1312.

Hayek, F. (1945). The use of knowledge in society. The American Economic Review, 35, 519–530.

Hays, J. N. (2009). The burden of disease: Epidemics and human response in western history. Rutgers University Press.

Higgs, R. (1987). Crisis and Leviathan. Oxford University Press.

Horrox, R. (1994). The Black Death. Manchester University Press.