Abstract

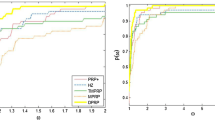

Although the study of global convergence of the Polak–Ribière–Polyak (PRP), Hestenes–Stiefel (HS) and Liu–Storey (LS) conjugate gradient methods has made great progress, the convergence of these algorithms for general nonlinear functions remains uncertain, especially under weak conditions on the objective function and weak line search rules. It is also of interest to investigate whether there exists a general method that converges under the standard Armijo line search for general nonconvex functions, since very few relevant results have been obtained. In this paper, we present a new general form of conjugate gradient methods that is of theoretical interest. For any formula $\beta_k \ge 0$ and under weak conditions, the proposed method satisfies the sufficient descent condition independently of the line search used and of the convexity of the function, and its global convergence can be established under the standard Wolfe line search or even under the standard Armijo line search. Based on this new method, convergence results for the PRP, HS, LS, Dai–Yuan-type (DY) and Conjugate-Descent-type (CD) methods are established. Preliminary numerical results show the efficiency of the proposed methods.
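To make the terms in the abstract concrete, the following is a minimal sketch of a textbook nonlinear conjugate gradient iteration, not the general form proposed in the paper (the abstract does not state that formula). It assumes the usual definitions: the sufficient descent condition $g_k^T d_k \le -c\,\|g_k\|^2$ for some constant $c > 0$, the Armijo rule $f(x_k + \alpha d_k) \le f(x_k) + \delta \alpha\, g_k^T d_k$ enforced by backtracking, and the nonnegative choice $\beta_k = \max(\beta_k^{PRP}, 0)$. The function name `cg_armijo_sketch`, the parameter defaults, and the restart safeguard are illustrative assumptions.

```python
from typing import Callable, List, Tuple

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def axpy(a: float, x: Vector, y: Vector) -> Vector:
    """Return a * x + y, componentwise."""
    return [a * xi + yi for xi, yi in zip(x, y)]


def cg_armijo_sketch(
    f: Callable[[Vector], float],
    grad: Callable[[Vector], Vector],
    x0: Vector,
    c: float = 1e-4,      # sufficient descent constant (assumed value)
    delta: float = 1e-4,  # Armijo constant (assumed value)
    rho: float = 0.5,     # backtracking factor (assumed value)
    tol: float = 1e-6,
    max_iter: int = 5000,
) -> Tuple[Vector, int]:
    x = list(x0)
    g = grad(x)
    d = [-gi for gi in g]  # first direction: steepest descent
    iterations = 0
    while dot(g, g) ** 0.5 > tol and iterations < max_iter:
        # Armijo backtracking; after 60 halvings the last trial step is used.
        fx, slope, alpha = f(x), dot(g, d), 1.0
        for _ in range(60):
            if f(axpy(alpha, d, x)) <= fx + delta * alpha * slope:
                break
            alpha *= rho
        x_new = axpy(alpha, d, x)
        g_new = grad(x_new)
        # Nonnegative PRP coefficient: beta = max(g_new^T (g_new - g) / ||g||^2, 0).
        y = [gn - go for gn, go in zip(g_new, g)]
        beta = max(dot(g_new, y) / dot(g, g), 0.0)
        d_new = axpy(beta, d, [-gi for gi in g_new])
        # Safeguard (an assumption of this sketch): if sufficient descent
        # fails, restart with the steepest descent direction.
        if dot(g_new, d_new) > -c * dot(g_new, g_new):
            d_new = [-gi for gi in g_new]
        x, g, d = x_new, g_new, d_new
        iterations += 1
    return x, iterations


# Usage on a small convex quadratic with minimizer (1, 0.1).
def quad(x: Vector) -> float:
    return 0.5 * (x[0] ** 2 + 10.0 * x[1] ** 2) - x[0] - x[1]


def quad_grad(x: Vector) -> Vector:
    return [x[0] - 1.0, 10.0 * x[1] - 1.0]


x_min, n_iter = cg_armijo_sketch(quad, quad_grad, [0.0, 0.0])
```

The sketch enforces sufficient descent with an explicit restart test. According to the abstract, the proposed method instead satisfies the condition by construction, for any $\beta_k \ge 0$ and independently of the line search.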

Cite this article

Zhang, Y., Wang, K. A new general form of conjugate gradient methods with guaranteed descent and strong global convergence properties. Numer Algor 60, 135–152 (2012). https://doi.org/10.1007/s11075-011-9515-0

DOI: https://doi.org/10.1007/s11075-011-9515-0