Abstract

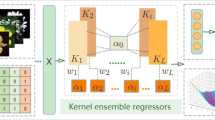

This paper proposes a multi-view representation kernel ensemble Support Vector Machine. Conventional multiple kernel learning (MKL) techniques apply a common similarity measure over the entire input space and learn their models solely as a linear combination of basis kernels in a single Reproducing Kernel Hilbert Space (RKHS). In contrast, our proposed model seeks to find multiple solutions concurrently, each in its corresponding RKHS. To achieve this objective, we first derive the proposed model directly from the classical SVM model. Then, drawing on the concept of multi-view data processing, we treat the original data as multi-view data, with the views controlled by different sub-models of the proposed model. The multi-view representations of the original data are subsequently transformed into ensemble kernel models in which the linear classifiers are parameterized in multiple kernel spaces. This enables each sub-model to co-optimize the learning of its optimal parameters by minimizing a cumulative ensemble loss over multiple RKHSs, which improves both the classification accuracy and the robustness of the proposed ensemble model. We evaluated the model on several publicly available benchmark classification datasets from the UCI Machine Learning Repository and on image datasets. The results were compared with those of other state-of-the-art MKL methods, such as SimpleMKL, EasyMKL, MRMKL, RMKL and PWMK. Our proposed method outperformed these MKL methods in the experiments conducted.
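To make the idea of a cumulative ensemble loss over several RKHSs more concrete, the following minimal sketch may help. It is not the authors' algorithm: the loss form (a hinge loss on the summed sub-model scores plus one RKHS-norm penalty per kernel), the plain subgradient solver, the omission of a bias term, and all names (`fit`, `ensemble_score`, `cumulative_loss`, `lam`, `lr`) are assumptions chosen for illustration. Each sub-model keeps its own coefficient vector in its own kernel space, and all coefficient vectors are updated against the same shared loss.

```python
import math

def linear_kernel(u, v):
    return sum(a * b for a, b in zip(u, v))

def rbf_kernel(u, v, gamma=1.0):
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(u, v)))

def gram(kernel, X):
    # Kernel (Gram) matrix of one sub-model on the training set.
    return [[kernel(u, v) for v in X] for u in X]

def ensemble_score(kernels, alphas, X_train, x):
    # Sum of the sub-model outputs, one sub-model per kernel space.
    # The bias term is omitted for brevity (an assumption of this sketch).
    return sum(a_j * k(x_j, x)
               for k, alpha in zip(kernels, alphas)
               for a_j, x_j in zip(alpha, X_train))

def cumulative_loss(kernels, alphas, X, y, lam):
    # Assumed form: hinge loss on the ensemble score plus a squared
    # RKHS-norm penalty (alpha^T K alpha) for every sub-model.
    n = len(X)
    hinge = sum(max(0.0, 1.0 - y_i * ensemble_score(kernels, alphas, X, x_i))
                for x_i, y_i in zip(X, y))
    reg = 0.0
    for k, alpha in zip(kernels, alphas):
        K = gram(k, X)
        reg += sum(alpha[i] * K[i][j] * alpha[j]
                   for i in range(n) for j in range(n))
    return hinge + lam * reg

def fit(kernels, X, y, lam=0.01, lr=0.05, epochs=300):
    # Joint subgradient descent: every sub-model is updated against the
    # same cumulative loss instead of being trained in isolation.
    n = len(X)
    grams = [gram(k, X) for k in kernels]
    alphas = [[0.0] * n for _ in kernels]
    for _ in range(epochs):
        scores = [sum(K[i][j] * a[j]
                      for K, a in zip(grams, alphas) for j in range(n))
                  for i in range(n)]
        # Points that currently violate the margin of the ensemble.
        active = [i for i in range(n) if y[i] * scores[i] < 1.0]
        updated = []
        for K, a in zip(grams, alphas):
            grad = [2.0 * lam * sum(K[j][i] * a[i] for i in range(n))
                    - sum(y[i] * K[j][i] for i in active)
                    for j in range(n)]
            updated.append([a_j - lr * g for a_j, g in zip(a, grad)])
        alphas = updated
    return alphas

# Toy XOR-style data: not linearly separable in the input space.
X = [(0.0, 0.0), (1.0, 1.0), (0.0, 1.0), (1.0, 0.0)]
y = [1, 1, -1, -1]
kernels = [linear_kernel, rbf_kernel]

initial_loss = cumulative_loss(kernels, [[0.0] * len(X) for _ in kernels], X, y, 0.01)
alphas = fit(kernels, X, y)
final_loss = cumulative_loss(kernels, alphas, X, y, 0.01)
preds = [1 if ensemble_score(kernels, alphas, X, x) >= 0 else -1 for x in X]
```

On this toy problem the linear sub-model alone cannot separate the classes, so the shared loss is driven down mainly through the RBF sub-model. The point of the sketch is only that the sub-models interact through one objective; the paper's actual derivation from the classical SVM and its multi-view construction are not reproduced here.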

Acknowledgements

This work was funded in part by the Science and Technology Planning Social Development Project of Zhenjiang City (SH2021006).

About this article

Cite this article

Quayson, E., Ganaa, E.D., Zhu, Q. et al. Multi-view Representation Induced Kernel Ensemble Support Vector Machine. Neural Process Lett 55, 7035–7056 (2023). https://doi.org/10.1007/s11063-023-11250-z

DOI: https://doi.org/10.1007/s11063-023-11250-z