Abstract

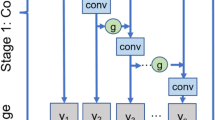

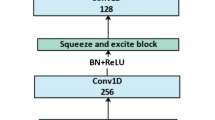

Time series classification (TSC) is a significant problem in the data mining community because time series data arise in a wide range of domains. The TSC problem has typically been studied separately for univariate and multivariate series, using different datasets and methods. Deep learning methods are more robust than other techniques and have revolutionized many areas, including TSC. In this study, we therefore exploit the attention mechanism, the deep Gated Recurrent Unit (dGRU), the Squeeze-and-Excitation (SE) block, and the Fully Convolutional Network (FCN) in two end-to-end hybrid deep learning architectures, Att-dGRU-FCN and Att-dGRU-SE-FCN. The performance of the proposed models is evaluated in terms of classification testing error and F1-score. Extensive experiments and an ablation study are carried out on multiple univariate and multivariate datasets from different domains to obtain the best performance of the proposed models. The proposed models perform effectively compared with other published methods, do not require heavy data pre-processing, and are small enough to be deployed on real-time systems.

Acknowledgements

This paper was partially supported by NSFC Grants U1509216, U1866602, and 61602129, and by Microsoft Research Asia.

Cite this article

Khan, M., Wang, H. & Ngueilbaye, A. Attention-Based Deep Gated Fully Convolutional End-to-End Architectures for Time Series Classification. Neural Process Lett 53, 1995–2028 (2021). https://doi.org/10.1007/s11063-021-10484-z

DOI: https://doi.org/10.1007/s11063-021-10484-z