Abstract

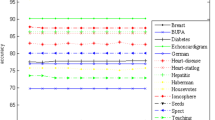

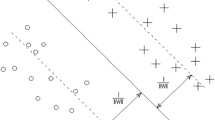

The least squares support vector machine (LSSVM), like the standard support vector machine (SVM), is based on structural risk minimization, but it can be obtained by solving a simpler optimization problem than that of the SVM. However, LSSVM does not fully take into account the local structure information of the data samples, especially their intrinsic manifold structure. To address this problem, and inspired by manifold learning techniques, we propose a novel iterative least squares classifier, coined optimal locality preserving least squares support vector machine (OLP-LSSVM). The idea is to combine structural risk minimization and a locality preserving criterion in a unified framework, so that the manifold structure of the data samples can be exploited to enhance LSSVM. Furthermore, inspired by recent developments in simultaneous optimization techniques, the adjacency graph of the locality preserving criterion is optimized simultaneously, which improves discriminative performance. The resulting model can be solved by an alternating optimization method. Experimental results on several publicly available benchmark data sets show the feasibility and effectiveness of the proposed method.
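The abstract does not state the OLP-LSSVM objective, so the following NumPy sketch only illustrates the two ingredients it names, under stated assumptions. First, it solves the standard LSSVM (linear kernel) through its KKT linear system, which is the "simpler optimization problem" compared with the SVM quadratic program. Second, it adds a locality preserving term of the form (lam/2) wᵀXᵀLXw, in the style of locality preserving projections and manifold regularization, where L is the Laplacian of a fixed heat-kernel k-nearest-neighbour graph. That penalty form, the function names, and the parameters `k`, `t`, `gamma` and `lam` are hypothetical choices for illustration, not the authors' formulation; in particular, the graph is kept fixed, so the simultaneous graph optimization and the alternating loop described above are not reproduced.

```python
import numpy as np


def heat_kernel_laplacian(X, k=5, t=1.0):
    """Laplacian L = D - W of a symmetric k-nearest-neighbour graph whose
    edge weights are heat-kernel values W_ij = exp(-||x_i - x_j||^2 / t)."""
    n = X.shape[0]
    sq = np.sum(X ** 2, axis=1)
    dist2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    masked = dist2.copy()
    np.fill_diagonal(masked, np.inf)           # a point is not its own neighbour
    nbrs = np.argsort(masked, axis=1)[:, :k]   # indices of the k nearest points
    W = np.zeros((n, n))
    rows = np.repeat(np.arange(n), k)
    cols = nbrs.ravel()
    W[rows, cols] = np.exp(-dist2[rows, cols] / t)
    W = np.maximum(W, W.T)                     # i ~ j if either is a kNN of the other
    return np.diag(W.sum(axis=1)) - W


def lssvm_dual_linear(X, y, gamma=1.0):
    """Standard LSSVM classifier with a linear kernel, solved through its KKT
    system [[0, y^T], [y, Omega + I/gamma]] [b; alpha] = [0; 1],
    where Omega_ij = y_i y_j x_i^T x_j."""
    n = X.shape[0]
    M = np.zeros((n + 1, n + 1))
    M[0, 1:] = y
    M[1:, 0] = y
    M[1:, 1:] = np.outer(y, y) * (X @ X.T) + np.eye(n) / gamma
    rhs = np.concatenate(([0.0], np.ones(n)))
    sol = np.linalg.solve(M, rhs)
    b, alpha = sol[0], sol[1:]
    return X.T @ (alpha * y), b                # w = sum_i alpha_i y_i x_i


def locality_lssvm_primal(X, y, L, gamma=1.0, lam=0.0):
    """Hypothetical linear LSSVM with an added locality term
    (lam/2) w^T X^T L X w. Since y_i = +/-1, the LSSVM error satisfies
    e_i^2 = (y_i - w^T x_i - b)^2, so the primal becomes the ridge-type problem
    min_{w,b} 1/2 w^T (I + lam X^T L X) w + gamma/2 ||y - X w - b 1||^2."""
    n, d = X.shape
    A = np.hstack([X, np.ones((n, 1))])        # augmented design matrix [X, 1]
    R = np.zeros((d + 1, d + 1))
    R[:d, :d] = np.eye(d) + lam * (X.T @ L @ X)  # the bias b is not regularised
    # Setting the gradient to zero gives (R + gamma A^T A) theta = gamma A^T y.
    theta = np.linalg.solve(R + gamma * (A.T @ A), gamma * (A.T @ y))
    return theta[:d], theta[d]


# Demo on two Gaussian blobs (synthetic data, not from the paper).
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-2.0, 1.0, (50, 2)), rng.normal(2.0, 1.0, (50, 2))])
y = np.concatenate([-np.ones(50), np.ones(50)])
L = heat_kernel_laplacian(X, k=5, t=1.0)

w0, b0 = lssvm_dual_linear(X, y, gamma=1.0)
w1, b1 = locality_lssvm_primal(X, y, L, gamma=1.0, lam=0.0)  # lam = 0: plain LSSVM
w2, b2 = locality_lssvm_primal(X, y, L, gamma=1.0, lam=0.1)  # with locality term
train_acc = np.mean(np.sign(X @ w2 + b2) == y)
```

With `lam = 0` the closed-form primal solution should coincide with the dual KKT solution, which is a useful sanity check before turning the locality term on. Because wᵀXᵀLXw equals one half of the sum of W_ij (wᵀx_i - wᵀx_j)², the added penalty discourages the decision function from varying between graph neighbours, which is the intuition behind combining a locality preserving criterion with LSSVM.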




Cite this article

Chen, X., Yang, J. & Liang, J. Optimal Locality Regularized Least Squares Support Vector Machine via Alternating Optimization. Neural Process Lett 33, 301–315 (2011). https://doi.org/10.1007/s11063-011-9179-8

