Abstract

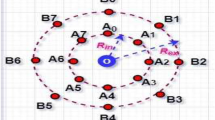

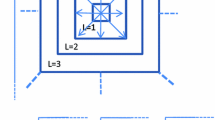

Traditional video compression methods based on visual saliency encode salient regions at higher quality. However, saliency features vary with the viewer, viewpoint, and distance. In this paper, we propose applying human-centered perceptual computation to improve video coding in regions of human-centered perception. To detect regions of interest (ROIs) covering the human body, upper body, frontal face, and profile face, we construct a combination of detectors based on Haar and histogram of oriented gradients (HOG) features and apply it to the first frame (intra-frame) of a video. From the second frame (inter-frame) onward, an optical flow image is computed within the ROI detected in the first frame. The optical flow in the human-centered ROI is then used to adjust macroblock (MB) quantization in H.264/AVC. For each MB, the quantization parameter (QP) is optimized using the density value of the optical flow image. The QP optimization is based on an MB mapping model, which can be calculated by an inverse of the inverse tangent function. The Lagrange multiplier in the rate-distortion optimization is also adapted so that MB distortion in the human-centered region is minimized. We apply our technique to an H.264 video encoder to improve visual coding quality. Evaluated with the H.264 reference software, the proposed algorithm improves the visual quality of the ROI by about 1.01 dB while preserving coding efficiency.
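The per-macroblock QP adjustment described above can be illustrated with a minimal sketch. The abstract does not give the mapping model itself, so the arctangent-shaped curve below, the function names `qp_offset` and `adjust_qp`, and the parameters `max_delta` and `gain` are all assumptions made for illustration, not the authors' formulation. The sketch only shows the general idea: macroblocks inside the human-centered ROI with denser optical flow receive a lower QP, clamped to the valid H.264/AVC range of 0 to 51. The Lagrange multiplier shown is the widely used relation for H.264 mode decision, not the adapted version proposed in the paper.

```python
import math

QP_MIN, QP_MAX = 0, 51  # valid quantization parameter range in H.264/AVC


def qp_offset(density, max_delta=6, gain=4.0):
    """Map a normalized optical-flow density in [0, 1] to a non-positive QP offset.

    Hypothetical mapping (an assumption, not the paper's model): an
    arctangent curve that saturates at -max_delta for density 1.
    """
    density = min(max(density, 0.0), 1.0)
    # 0 when there is no motion, 1 when density reaches 1
    scale = math.atan(gain * density) / math.atan(gain)
    return -int(round(max_delta * scale))


def adjust_qp(base_qp, density, in_roi):
    """Lower the QP of ROI macroblocks according to motion; keep others unchanged."""
    if not in_roi:
        return base_qp
    return min(max(base_qp + qp_offset(density), QP_MIN), QP_MAX)


def lagrange_multiplier(qp):
    """Commonly used H.264 mode-decision multiplier: 0.85 * 2^((QP - 12) / 3)."""
    return 0.85 * 2.0 ** ((qp - 12) / 3.0)
```

Because the multiplier is derived from the QP, lowering the QP of an ROI macroblock also lowers its Lagrange multiplier in this sketch, which shifts the rate-distortion trade-off toward lower distortion in that region.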

Additional information

This research is supported by the National Natural Science Foundation of China (Nos. 61105016, 61202369) and the Innovation Program of Shanghai Municipal Education Commission (Grant No. 12YZ139).

About this article

Cite this article

Tong, M., Gu, Z., Ling, N. et al. Human centered perceptual adaptation for video coding. Multidim Syst Sign Process 27, 785–799 (2016). https://doi.org/10.1007/s11045-015-0347-2

DOI: https://doi.org/10.1007/s11045-015-0347-2