Abstract

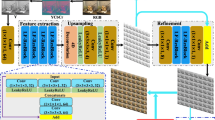

Current light field angular super resolution algorithms generate coarse viewpoint images because of their limited learning capacity and because they up-sample all macropixels of the light field image uniformly. In this paper, we propose a novel angular super resolution network that precisely enhances angular resolution by stacking multiple residual channel attention groups and accurately up-sampling macropixels with a classified prediction module. First, the network extracts low-frequency angular information from the input light field image and then refines high-frequency angular information by stacking residual channel attention groups. Second, the classified prediction module combines the high-frequency and low-frequency angular information to predict three groups of feature maps, each with three channels. The first two channels of each group predict two different kinds of angular information from the macropixels. The third channel learns classification results and ensures that up-sampling is based on the most accurate information. According to the classification results, we can accurately infer occlusions and obtain three high-quality images with the same angular resolution as the input image. Finally, to enhance the angular resolution, we integrate the three high-quality images with the input image into one light field image. Experimental results verify the effectiveness of the proposed method, which achieves an average PSNR gain of 2.81 dB over the state-of-the-art method.
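The last two steps described above, choosing between two candidate predictions according to a classification channel and then merging the three resulting images with the input, can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `select_by_class` and `interleave`, the 0.5 threshold, and the placement of the input sample at the top-left of each 2 × 2 block are all assumptions made for illustration, and plain nested lists stand in for learned feature maps.

```python
# Illustrative sketch only; names, threshold and layout are assumptions.

def select_by_class(cand_a, cand_b, score, threshold=0.5):
    """Per pixel, keep cand_a where score >= threshold, otherwise cand_b.

    cand_a, cand_b: two candidate predictions (channels 1 and 2 of a group).
    score: classification map (channel 3 of the same group), values in [0, 1].
    """
    return [
        [a if s >= threshold else b for a, b, s in zip(row_a, row_b, row_s)]
        for row_a, row_b, row_s in zip(cand_a, cand_b, score)
    ]


def interleave(base, right, down, diag):
    """Merge four H x W images into one 2H x 2W image.

    Each output 2 x 2 block holds, in reading order, the samples from
    base, right, down and diag at the same (row, column) position.
    """
    out = []
    for r in range(len(base)):
        top, bottom = [], []
        for c in range(len(base[0])):
            top += [base[r][c], right[r][c]]
            bottom += [down[r][c], diag[r][c]]
        out.append(top)
        out.append(bottom)
    return out


# Toy data: one 1 x 2 selection and four 2 x 2 images.
selected = select_by_class([[1, 2]], [[9, 8]], [[0.9, 0.1]])
print(selected)  # [[1, 8]]

base = [[1, 2], [3, 4]]            # stands in for the input image
right = [[10, 20], [30, 40]]       # stands in for predicted image 1
down = [[100, 200], [300, 400]]    # stands in for predicted image 2
diag = [[1000, 2000], [3000, 4000]]  # stands in for predicted image 3
merged = interleave(base, right, down, diag)
for row in merged:
    print(row)
```

In this toy layout the merged image has twice as many samples along each axis as any of its four sources, which mirrors the doubling of angular resolution described above; the actual sample ordering used by the network may differ.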

Data Availability

The datasets generated and analyzed during the current study are not publicly available due to the excessive size but are available from the corresponding author on reasonable request.

Acknowledgements

This work was supported by the Shenzhen Fundamental Research Fund under Grants 20200810150441003 and JCYJ20190808143415801, the Guangdong Basic and Applied Basic Research Foundation under Grants 2020A1515011559 and 2021A1515012287, and the Natural Science Foundation of Top Talent of SZTU (Grant No. 20211061010009).

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, X., Wang, Z. & You, S. Light field angular super resolution based on residual channel attention and classification up-sampling. Multimed Tools Appl (2024). https://doi.org/10.1007/s11042-024-19359-6

DOI: https://doi.org/10.1007/s11042-024-19359-6