Abstract

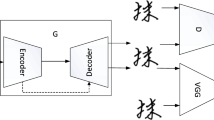

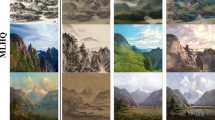

Artistic fonts have important practical value in advertising media and graphic design. Rendering a font with a unique text effect makes it more ornamental and attractive. To explore a more efficient font design method, this paper proposes an artistic font generation network that combines font style and glyph structure discriminators (ArtFontNet). We adopt the generative adversarial network framework to generate artistic fonts. The generator is built from residual dense modules, and a font style discriminator and a glyph structure discriminator jointly guide it. The font style discriminator supervises the color and texture information of the entire font image. The glyph structure discriminator uses the Canny edge detection operator to extract the glyph structure and texture distribution of the generated image. On the artistic font generation task, our approach performs markedly better than existing methods. Compared with the font generation methods evaluated in the experiments, the generated images improve on average by 2.95 dB in PSNR, 0.03 in SSIM, and 0.025 in VIF. The UV quantization results remain at 85%-90%. In both visual and objective evaluations, ArtFontNet improves the detail fidelity and style accuracy of the generated artistic fonts.
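To give a feel for the PSNR figure reported above, the following is a minimal sketch of the standard PSNR definition, PSNR = 10 log10(MAX^2 / MSE), written in plain Python. It is not the authors' evaluation code. The function names (`mse`, `psnr`, `mse_ratio_for_gain`), the 8-bit peak value of 255, and the tiny 2x2 test images are all assumptions made for illustration.

```python
import math


def mse(reference, generated):
    """Mean squared error between two equally sized grayscale images,
    each given as a list of rows of pixel values."""
    flat_ref = [p for row in reference for p in row]
    flat_gen = [p for row in generated for p in row]
    if not flat_ref or len(flat_ref) != len(flat_gen):
        raise ValueError("images must be non-empty and the same size")
    return sum((r - g) ** 2 for r, g in zip(flat_ref, flat_gen)) / len(flat_ref)


def psnr(reference, generated, max_value=255.0):
    """Peak signal-to-noise ratio in dB; max_value=255 assumes 8-bit images."""
    err = mse(reference, generated)
    if err == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / err)


def mse_ratio_for_gain(gain_db):
    """Factor by which the MSE shrinks when PSNR rises by gain_db,
    with the peak value held fixed."""
    return 10.0 ** (gain_db / 10.0)


# Hypothetical 2x2 images, used only to exercise the functions.
reference = [[0, 255], [255, 0]]
generated = [[10, 245], [245, 10]]

print(round(psnr(reference, generated), 2))
print(round(mse_ratio_for_gain(2.95), 2))
```

Under this definition, a 2.95 dB gain for a single image pair corresponds to reducing the mean squared error by a factor of about 1.97. The abstract's figure is an average over several compared methods, so this reading is only a rough per-image interpretation.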

Data availability

The dataset used in this article is available at http://www.icst.pku.edu.cn/struct/Projects/TETGAN.html.

Acknowledgements

This work was supported by two grants: the National Natural Science Foundation of China project "Research on the Inheritance Technology of Ancient Inscription Calligraphy Culture Based on Artificial Intelligence" (No. 62076200), and the Shaanxi Province Key Research and Development Project "Research and Application of Key Technologies for Vectorization of Traditional Chinese Calligraphy Based on Artificial Intelligence" (No. 2023-YBGY-149).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Miao, Y., Jia, H. & Tang, K. Artistic font generation network combining font style and glyph structure discriminators. Multimed Tools Appl 83, 21883–21903 (2024). https://doi.org/10.1007/s11042-023-16396-5

DOI: https://doi.org/10.1007/s11042-023-16396-5