Abstract

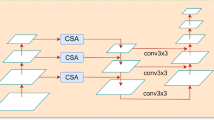

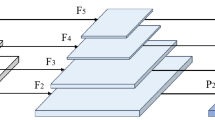

Deep learning-based object detection methods have recently achieved remarkable success. Among them, YOLOv4 has attracted increasing attention for its high accuracy and real-time performance. However, its grasp of contextual semantic information is often unsatisfactory, mainly because of internal details of the network design. To address this issue, we propose YOLO-AA (YOLO Model Based on Attention and Atrous Spatial Pyramid Pooling), an efficient model that enhances the fusion of contextual information. First, we apply attention to the object regions at different nodes of network propagation, which helps the model focus on the most useful information. Second, to limit the parameter count and computational complexity, we optimize the neck so that the improved model achieves similar or even better results than the original algorithm with fewer parameters. Third, inspired by the semantic segmentation model DeepLabv3+, we replace the pooling operations in the Spatial Pyramid Pooling (SPP) module with depth-wise separable convolutions of different dilation rates, with the aim of capturing multi-scale contextual semantic relationships. Experimental results show that, compared with the original YOLOv4, our model has fewer parameters (a 22.83% reduction) while achieving higher accuracy (9.02% and 16.89% improvement on two distinct datasets), and it is also competitive with other representative algorithms.
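The appeal of depth-wise separable convolutions with different dilation rates can be illustrated with some simple arithmetic. The sketch below is not the authors' implementation: the channel count (512), kernel size (3) and dilation rates (1, 2, 4) are hypothetical values chosen for illustration, and the function names are our own. It compares the weight count of a standard convolution with that of a depth-wise separable one, and shows how dilation enlarges the area a kernel covers without adding weights.

```python
# Illustrative arithmetic only. The channel count, kernel size and dilation
# rates below are hypothetical and are not taken from the paper.


def standard_conv_params(c_in, c_out, k):
    """Number of weights in a standard k x k convolution (bias omitted)."""
    return c_in * c_out * k * k


def depthwise_separable_params(c_in, c_out, k):
    """Weights in a depth-wise k x k conv followed by a 1 x 1 point-wise conv."""
    depthwise = c_in * k * k   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 conv that mixes channels
    return depthwise + pointwise


def effective_kernel_size(k, dilation):
    """Side length of the region spanned by a k x k kernel with this dilation."""
    return k + (k - 1) * (dilation - 1)


if __name__ == "__main__":
    c_in, c_out, k = 512, 512, 3  # hypothetical layer shape
    std = standard_conv_params(c_in, c_out, k)
    sep = depthwise_separable_params(c_in, c_out, k)
    print(f"standard: {std} weights, separable: {sep} weights")
    print(f"separable / standard = {sep / std:.3f}")

    # Dilation changes the spatial extent of the kernel, not its weight count.
    for d in (1, 2, 4):  # hypothetical dilation rates
        size = effective_kernel_size(k, d)
        print(f"dilation {d}: kernel spans {size} x {size}")
```

Under these assumptions, parallel branches with different dilation rates see context at several scales, as the pooling branches of an SPP module do with different window sizes, while each branch stays much cheaper than a standard convolution of the same shape.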

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

Acknowledgements

This work was supported by the National Natural Science Foundation of China (NSFC) (61976123, 61601427), the Taishan Young Scholars Program of Shandong Province, and the Key Development Program for Basic Research of Shandong Province (ZR2020ZD44).

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

About this article

Cite this article

Zhang, M., Jian, M. & Wang, G. YOLO-AA: an efficient object detection model via strengthening fusion context information. Multimed Tools Appl 83, 10661–10676 (2024). https://doi.org/10.1007/s11042-023-16063-9

DOI: https://doi.org/10.1007/s11042-023-16063-9