Abstract

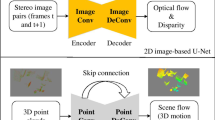

Scene flow estimation is a fundamental task in autonomous driving. Unlike optical flow, which describes only 2D image motion, scene flow provides the 3D motion of a dynamic scene. With the increasing popularity of 3D LiDAR sensors and deep learning, LiDAR-based scene flow estimation methods have achieved outstanding results on public benchmarks. Current methods usually adopt a multilayer perceptron (MLP) or traditional convolution-like operations for feature extraction. However, these methods do not adequately exploit the characteristics of point clouds, so some key semantic and geometric structures are not well captured. To address this issue, we introduce graph convolution to exploit structural features adaptively. In particular, multiple graph-based feature generators and a graph-based flow refinement module are deployed to encode geometric relations among points. Furthermore, residual connections are used in the graph-based feature generator to enhance the feature representation and the deep supervision of the graph-based network. In addition, to focus on short-term dependencies, we introduce a single gate-based recurrent unit that refines scene flow predictions iteratively. The proposed network is trained on the FlyingThings3D dataset and evaluated on the FlyingThings3D, KITTI, and Argoverse datasets. Comprehensive experiments show that every proposed component contributes to scene flow estimation performance, and that our method achieves competitive performance compared with recent approaches.
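To make the idea of a graph-based feature generator with a residual connection more concrete, the following is a minimal NumPy sketch. It is not the network described in the article: it assumes a k-nearest-neighbour graph and an EdgeConv-style layer in the spirit of dynamic graph CNNs, and the names `knn_indices`, `edge_conv_residual`, `weight`, and `k` are hypothetical choices made for illustration only.

```python
import numpy as np


def knn_indices(points, k):
    """Return, for each point, the indices of its k nearest neighbours (self excluded)."""
    diff = points[:, None, :] - points[None, :, :]   # (N, N, 3) pairwise offsets
    dist2 = np.sum(diff * diff, axis=-1)             # (N, N) squared distances
    np.fill_diagonal(dist2, np.inf)                  # a point is not its own neighbour
    return np.argsort(dist2, axis=1)[:, :k]          # (N, k)


def edge_conv_residual(points, feats, weight, k=4):
    """One EdgeConv-style layer followed by a residual connection (illustrative only).

    points: (N, 3) coordinates, feats: (N, C) features, weight: (2C, C) linear map.
    """
    idx = knn_indices(points, k)                               # (N, k) graph edges
    neigh = feats[idx]                                         # (N, k, C) neighbour features
    center = np.broadcast_to(feats[:, None, :], neigh.shape)   # (N, k, C) centre features
    # Edge feature: centre feature concatenated with the neighbour offset.
    edge = np.concatenate([center, neigh - center], axis=-1)   # (N, k, 2C)
    msg = np.maximum(edge @ weight, 0.0)                       # shared linear map + ReLU
    agg = msg.max(axis=1)                                      # order-invariant max pooling
    return feats + agg                                         # residual connection


rng = np.random.default_rng(0)
points = rng.normal(size=(16, 3))
feats = rng.normal(size=(16, 8))
weight = rng.normal(size=(16, 8))
out = edge_conv_residual(points, feats, weight, k=4)
print(out.shape)
```

Max pooling over neighbours keeps the layer independent of neighbour ordering, and the residual sum keeps the input features available to later layers, which is the general motivation for residual connections in deep graph networks.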

Data Availability

The datasets generated during and/or analysed during the current study are not publicly available due to an ongoing study but are available from the corresponding author on reasonable request.

Acknowledgements

This work is sponsored in part by the Natural Science Foundation of Jiangsu Province of China (Grant No. BK20210594 and BK20210588), in part by the Natural Science Foundation for Colleges and Universities in Jiangsu Province (Grant No. 21KJB520016 and 21KJB520015), in part by the Natural Science Foundation of Nanjing University of Posts and Telecommunications (Grant No. NY221019 and NY221074), and in part by the National Natural Science Foundation of China (Grant No. 61931012, 61802204, and 62101280).

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by Mingliang Zhai, Hao Gao, Ye Liu, Jianhui Nie and Kang Ni. The first draft of the manuscript was written by Mingliang Zhai and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Disclosure of potential conflicts of interest

The authors declare that there are no competing interests regarding the publication of this paper.

Research involving Human Participants and/or Animals

The authors declare that this paper does not involve research on human participants and/or animals.

Informed consent

All authors contributed significantly to the research reported and have read and approved the submitted manuscript.

About this article

Cite this article

Zhai, M., Gao, H., Liu, Y. et al. Learning graph-based representations for scene flow estimation. Multimed Tools Appl 83, 7317–7334 (2024). https://doi.org/10.1007/s11042-023-15541-4

DOI: https://doi.org/10.1007/s11042-023-15541-4