Abstract

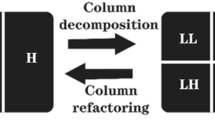

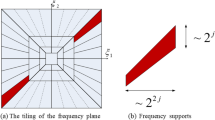

Traditional infrared and visible image fusion methods ignore the correlation among multi-scale coefficients and identify the complementarity and redundancy of the source images inaccurately. To address these problems, this paper proposes an infrared and visible image fusion method based on the quaternion wavelet transform (QWT) and a feature-level copula model. The method first extracts luminance, contrast, and structure features from the QWT magnitude and phase subbands. A feature-level copula model that captures the inter-scale and phase-magnitude correlations is then constructed to describe the intrinsic structure of the images. Each QWT coefficient is classified as redundant or complementary according to the similarity of the proposed models. Based on this feature type, different fusion rules are designed for the high-frequency subbands so that the salient features of the source images are transferred accurately into the fused image. For the low-frequency subbands, a fusion rule based on multiple features is proposed to avoid the loss of visual quality caused by false information. Finally, the inverse QWT is applied to the fused low-frequency and high-frequency subbands to obtain the fused image. Experimental results show that the proposed method effectively retains the rich details and structural information of the infrared and visible images.
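The abstract does not give formulas, so the following is only an illustrative sketch of two ingredients it names, not the authors' implementation. It assumes SSIM-style local statistics for the luminance, contrast, and structure features, and a Gaussian copula (fitted through rank-based normal scores) as the dependency model. The function names `local_features`, `normal_scores`, and `gaussian_copula_corr`, the 3x3 window, and the synthetic arrays standing in for QWT magnitude and phase subbands are all assumptions made for illustration.

```python
from statistics import NormalDist

import numpy as np


def local_features(band, win=3):
    """SSIM-style local luminance (mean), contrast (std) and structure
    (mean-removed, std-normalised value) of a 2-D subband. Assumed form."""
    band = np.asarray(band, dtype=float)
    pad = win // 2
    padded = np.pad(band, pad, mode="reflect")
    # Every win x win neighbourhood, shape (H, W, win, win).
    windows = np.lib.stride_tricks.sliding_window_view(padded, (win, win))
    luminance = windows.mean(axis=(2, 3))
    contrast = windows.std(axis=(2, 3))
    structure = (band - luminance) / (contrast + 1e-12)
    return luminance, contrast, structure


def normal_scores(x):
    """Map samples to standard-normal scores through their empirical CDF."""
    flat = np.asarray(x, dtype=float).ravel()
    ranks = flat.argsort().argsort() + 1      # ranks 1..n, ties broken by order
    u = ranks / (flat.size + 1)               # pseudo-observations in (0, 1)
    return np.vectorize(NormalDist().inv_cdf)(u)


def gaussian_copula_corr(*features):
    """Correlation matrix of a Gaussian copula fitted to the given features."""
    z = np.stack([normal_scores(f) for f in features])
    return np.corrcoef(z)


# Synthetic stand-ins for one magnitude subband and one phase subband.
rng = np.random.default_rng(0)
magnitude = rng.random((16, 16))
phase = magnitude + 0.1 * rng.standard_normal((16, 16))

lum_m, con_m, str_m = local_features(magnitude)
lum_p, con_p, str_p = local_features(phase)

# Dependency between the luminance features of the two subbands.
R = gaussian_copula_corr(lum_m, lum_p)
print(R)
```

Because the copula is fitted on ranks, the resulting correlation is unaffected by monotone rescaling of either feature, which is one reason copulas are convenient for comparing magnitude and phase statistics that live on different scales. Comparing such matrices between two source images would be one possible way to judge model similarity, but the paper's actual similarity measure is not stated in the abstract.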

Data availability

The datasets generated during and/or analyzed during the current study are available in the TNO repository, http://figshare.com/articles/TN_Image_Fusion_Dataset/1008029.

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant 61772237 and in part by the Six Talent Climax Foundation of Jiangsu under Grant XYDXX-030.

Ethics declarations

Competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

About this article

Cite this article

Luo, X., Li, K., Wang, A. et al. Infrared and visible image fusion based on quaternion wavelets transform and feature-level Copula model. Multimed Tools Appl 83, 28549–28577 (2024). https://doi.org/10.1007/s11042-023-15536-1

DOI: https://doi.org/10.1007/s11042-023-15536-1