Abstract

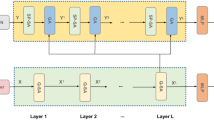

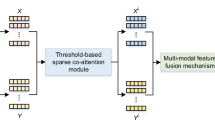

Most VQA (visual question answering) models cannot understand the scene text in images, and this poor text-reading ability is a significant reason for the weak performance of current VQA models. To address this problem, we design a co-attention model that incorporates the scene text features of images. We use an OCR model to detect the OCR tokens in each image, which aids further understanding of the image. The model is built on a co-attention mechanism comprising a question self-attention unit, a question-guided image visual attention unit, and a question-guided image OCR token attention unit. The question self-attention unit filters out redundant question information, and the question-guided attention units produce the final visual features and OCR token features of the image. The question text features, image visual features, and OCR token features are then fused. We design a classifier that either selects an answer from a fixed answer set or directly copies text detected by the OCR model as the final answer, so that the model can answer questions about the text in the image. The experimental results show that the proposed model achieves improved performance.
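The pipeline described above (question self-attention, question-guided attention over image regions and OCR tokens, fusion, and an answer-or-copy classifier) can be sketched as follows. This is a minimal, untrained illustration under stated assumptions, not the OECA-Net implementation: each attention unit is single-head scaled dot-product attention without the learned projections, feed-forward layers, and layer normalization a real model would use; all three modalities share one feature dimension and are fused by element-wise summation; and the classifier scores each fixed answer and each OCR token with a plain dot product, copying the OCR string whenever an OCR token wins. All function and parameter names (`attend`, `answer_question`, `vocab_embs`, and so on) are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[Vector]


def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def attend(queries: Matrix, keys: Matrix) -> Matrix:
    """Scaled dot-product attention; keys double as values (assumption: no learned projections)."""
    dim = len(keys[0])
    scale = math.sqrt(dim)
    out = []
    for q in queries:
        weights = softmax([dot(q, k) / scale for k in keys])
        out.append([sum(w * k[j] for w, k in zip(weights, keys)) for j in range(dim)])
    return out


def mean_pool(rows: Matrix) -> Vector:
    return [sum(col) / len(rows) for col in zip(*rows)]


def answer_question(
    question: Matrix,      # one feature vector per question word
    regions: Matrix,       # one feature vector per detected image region
    ocr_feats: Matrix,     # one feature vector per OCR token
    ocr_words: List[str],  # OCR token strings, aligned with ocr_feats
    vocab: List[str],      # fixed answer set
    vocab_embs: Matrix,    # one embedding per answer in the fixed set
) -> Tuple[str, bool]:
    # 1) Question self-attention: every word attends to every word, then pool.
    q_vec = mean_pool(attend(question, question))
    # 2) Question-guided attention over image regions and over OCR tokens.
    v_vec = attend([q_vec], regions)[0]
    o_vec = attend([q_vec], ocr_feats)[0]
    # 3) Fuse the three modalities (element-wise sum is an assumption).
    fused = [a + b + c for a, b, c in zip(q_vec, v_vec, o_vec)]
    # 4) Score the fixed answers and, as copy candidates, each OCR token.
    scores = [dot(fused, e) for e in vocab_embs] + [dot(fused, f) for f in ocr_feats]
    best = max(range(len(scores)), key=scores.__getitem__)
    if best < len(vocab):
        return vocab[best], False  # answer taken from the fixed answer set
    return ocr_words[best - len(vocab)], True  # answer copied from the OCR output
```

For example, `answer_question([[1.0, 1.0]], [[1.0, 1.0]], [[5.0, 5.0], [-5.0, -5.0]], ["STOP", "EXIT"], ["yes", "no"], [[1.0, 0.0], [0.0, 1.0]])` returns `("STOP", True)`: the first OCR token aligns strongly with the fused feature, so its string is copied rather than taken from the fixed answer set. Scoring both candidate pools on one list lets a single argmax decide between classifying and copying.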

Data Availability

The public VQA 2.0 dataset used in this paper is available at https://visualqa.org/download.html (accessed on 13 February 2023). We use the VQA challenge website (https://eval.ai/challenge/830/overview) to evaluate scores on the test-dev or test-std split. The experimental results are available at: https://evalai.s3.amazonaws.com/media/submission_files/submission_202957/2aa0cb55-7cb9-4505-8cd8-37ca0382ff45.json

Code Availability

The current version of the code is available at https://github.com/yanfeng918/openvqa-ocr-softcopy

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant U1911401 and by the Key Project of Science and Technology Innovation 2030 of the Ministry of Science and Technology of China under Grant ZDI135-96.

Author information

Contributions

Y.F. and W.S. conceived the research; Y.F. and C.Y. implemented the experimental design; Y.F. conducted the data analysis; Y.F. wrote the original draft; W.S. and Y.L. reviewed and edited the whole paper.

Ethics declarations

Ethics approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Consent to Participate

Not applicable.

Consent for Publication

All authors have read and agreed to the published version of the manuscript.

Conflict of Interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yan, F., Silamu, W., Chai, Y. et al. OECA-Net: A co-attention network for visual question answering based on OCR scene text feature enhancement. Multimed Tools Appl 83, 7085–7096 (2024). https://doi.org/10.1007/s11042-023-15418-6

DOI: https://doi.org/10.1007/s11042-023-15418-6