Abstract

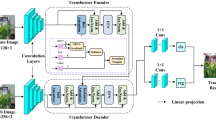

Recently, Siamese-based trackers have become particularly popular. The correlation module in these trackers fuses feature information from the template and the search region to produce the response. However, video sequences contain rich contextual information and feature dependencies, and a simple correlation module struggles to integrate this information efficiently. As a result, such trackers suffer from information loss and locally optimal solutions. In this work, we propose a novel attention-enhanced network with a Transformer variant for robust visual tracking. The proposed method introduces two attention-based modules, the local feature information association module (LFIA) and the global feature information fusion module (GFIF), which exploit contextual information and feature dependencies to enhance the features. Our approach casts visual tracking as a bounding box prediction problem and uses only a simple prediction network for object localization, without any prior knowledge. We call the resulting tracker RANformer. Experiments show that the proposed tracker achieves state-of-the-art performance on 7 popular tracking benchmarks while running in real time at more than 40 FPS.
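To make the contrast between correlation and attention-based fusion concrete, the following is a minimal sketch in plain Python. It is not the LFIA or GFIF module, whose internals the abstract does not specify. It assumes features are already flattened into lists of equal-length token vectors, and it omits the learned query, key and value projections of a real Transformer layer. The function names `correlation_response` and `cross_attention` are hypothetical and chosen only for illustration.

```python
import math


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def correlation_response(search_tokens, template_tokens):
    """1-D cross-correlation: slide the template over the search tokens.

    Each output is a single similarity score, so the channel
    information of both inputs is collapsed into one number.
    """
    n, m = len(search_tokens), len(template_tokens)
    return [
        sum(dot(search_tokens[i + j], template_tokens[j]) for j in range(m))
        for i in range(n - m + 1)
    ]


def cross_attention(search_tokens, template_tokens):
    """Scaled dot-product cross-attention (no learned projections).

    Queries are search tokens; keys and values are template tokens.
    Each output is a full feature vector: a weighted mix of template
    tokens, weighted by their similarity to the query.
    """
    d = len(template_tokens[0])
    fused = []
    for q in search_tokens:
        weights = softmax([dot(q, k) / math.sqrt(d) for k in template_tokens])
        fused.append(
            [sum(w * v[c] for w, v in zip(weights, template_tokens)) for c in range(d)]
        )
    return fused


# Toy inputs (assumed values, two channels per token).
search = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
template = [[1.0, 0.0], [0.0, 1.0]]

response = correlation_response(search, template)
fused = cross_attention(search, template)
```

In this toy setting the correlation output is one scalar per sliding position, whereas the attention output keeps one feature vector per search token. That is one way to read the abstract's point about information loss in a simple correlation module.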

Acknowledgments

This work is supported in part by the Natural Science Foundation of Heilongjiang Province of China under Grant No. F201123 and in part by the National Natural Science Foundation of China under Grant No. 52171332.

Data availability statement

Our manuscript has no available data.

Ethics declarations

Conflicts of interest

We declare that we have no financial or non-financial conflicts of interest.

About this article

Cite this article

Gu, F., Lu, J. & Cai, C. A robust attention-enhanced network with transformer for visual tracking. Multimed Tools Appl 82, 40761–40782 (2023). https://doi.org/10.1007/s11042-023-15168-5

DOI: https://doi.org/10.1007/s11042-023-15168-5