Abstract

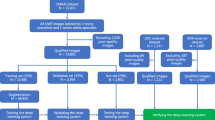

Retinal image quality assessment is an essential prerequisite for the diagnosis of retinal diseases. Its goal is to identify high-quality retinal images, in which the anatomic structures and lesions that attract ophthalmologists’ attention most are exhibited clearly and definitely, while rejecting poor-quality images. Motivated by this, we mimic the way that ophthalmologists assess the quality of retinal images and propose a method termed SalStructIQA. First, two salient structures are detected for retinal quality assessment. The first comprises large-size salient structures, including the optic disc and large exudates; the second comprises tiny-size salient structures, mainly vessels. Then the two proposed salient structure priors are incorporated into a deep convolutional neural network (CNN) to force the CNN to pay attention to these salient structures. Accordingly, two CNN architectures, named Dual-branch SalStructIQA and Single-branch SalStructIQA, are designed for this incorporation. Experimental results show that the Fscores of the proposed Dual-branch SalStructIQA and Single-branch SalStructIQA are 0.8723 and 0.8662, respectively, on the public Eye-Quality dataset, which demonstrates the effectiveness of our methods.
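The abstract does not spell out how the two salient structures are detected or how they reach the CNN, so the following is only a minimal NumPy sketch under stated assumptions, not the authors' implementation. It assumes (1) a contrast map \(\mathbf{R}_{contrast}\) taken as the positive difference between a locally averaged grey image and its global mean, loosely inspired by the frequency-tuned salient region detection of Achanta et al. (2009), with min-max rescaling standing in for \(\mathbf{P}_{LS}\); (2) a simplified multi-scale line detector on the inverted green channel in the spirit of the multi-scale line detection of Nguyen et al. (2013), standardized and thresholded to give \(\mathbf{M}_{TS}\); and (3) early fusion of both masks as extra input channels. The helpers `box_mean`, `line_kernel`, `correlate`, `large_size_mask`, `tiny_size_mask` and `stack_priors`, and the parameters `blur`, `window`, `lengths` and `tau`, are all hypothetical.

```python
# Hypothetical sketch, not the authors' SalStructIQA code: every name, window
# size, angle set and threshold below is an illustrative assumption.
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def box_mean(img, size):
    """Mean over an odd size x size window; edge padding keeps the input shape."""
    pad = size // 2
    windows = sliding_window_view(np.pad(img, pad, mode="edge"), (size, size))
    return windows.mean(axis=(-2, -1))


def line_kernel(window, length, angle_deg):
    """Averaging kernel for a `length`-pixel line through the window centre."""
    kernel = np.zeros((window, window))
    c, t = window // 2, np.deg2rad(angle_deg)
    for s in np.linspace(-(length - 1) / 2, (length - 1) / 2, length):
        kernel[int(round(c - s * np.sin(t))), int(round(c + s * np.cos(t)))] = 1.0
    return kernel / kernel.sum()


def correlate(img, kernel):
    """2-D correlation of img with an odd, square kernel (edge padding)."""
    pad = kernel.shape[0] // 2
    windows = sliding_window_view(np.pad(img, pad, mode="edge"), kernel.shape)
    return np.einsum("ijkl,kl->ij", windows, kernel)


def large_size_mask(rgb, blur=5, tau=0.5):
    """R_contrast -> P_LS -> M_LS for bright, large structures (assumed)."""
    gray = rgb.mean(axis=-1)
    # Keep only positive contrast: the optic disc and large exudates are
    # assumed to be brighter than the global mean intensity.
    r_contrast = np.clip(box_mean(gray, blur) - gray.mean(), 0.0, None)
    span = r_contrast.max() - r_contrast.min()
    p_ls = (r_contrast - r_contrast.min()) / (span + 1e-12)  # rescaled to [0, 1]
    return p_ls > tau  # M_LS


def tiny_size_mask(rgb, window=15, lengths=(5, 9, 13, 15), tau=2.0):
    """R'_line -> R''_line -> M_TS from the inverted green channel."""
    inv_green = 1.0 - rgb[..., 1]  # dark vessels become bright ridges
    win_avg = box_mean(inv_green, window)
    responses = []
    for length in lengths:
        # Strongest mean intensity along 12 line orientations (0..165 degrees).
        line_avg = np.max(
            [correlate(inv_green, line_kernel(window, length, a))
             for a in range(0, 180, 15)],
            axis=0,
        )
        responses.append(line_avg - win_avg)
    r_line_1 = np.mean(responses, axis=0)  # multi-scale response, R'_line
    r_line_2 = (r_line_1 - r_line_1.mean()) / (r_line_1.std() + 1e-12)  # R''_line
    return r_line_2 > tau  # M_TS


def stack_priors(rgb, m_ls, m_ts):
    """Early fusion: append both masks as channels, giving an H x W x 5 input."""
    return np.concatenate(
        [rgb, m_ls[..., None], m_ts[..., None]], axis=-1
    ).astype(np.float32)
```

For an H x W x 3 float image in [0, 1], `stack_priors(rgb, large_size_mask(rgb), tiny_size_mask(rgb))` yields a five-channel array that a single-branch network could take as input. Channel stacking is just one assumed fusion choice; a dual-branch design could instead keep the image and the priors in separate streams and merge their features later.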

Abbreviations

- CNN: convolutional neural network
- IQA: image quality assessment
- Natural-IQA: natural image quality assessment
- Retinal-IQA: retinal image quality assessment
- SalStructIQA: our salient structure IQA method
- FR: full-reference
- RR: reduced-reference
- NR: no-reference
- LS: large-scale
- TS: tiny-scale
- \(\mathbf {I}\): retinal images
- \(\mathbf {R}_{contrast}\): contrast map
- \(\mathbf {P}_{LS}\): probability map of large-size salient structures
- \(\mathbf {M}_{LS}\): the mask of detected large-size salient structures
- \(\mathbf {R}_{line}^{\prime }\): the multi-scale line response
- \(\mathbf {R}_{line}^{\prime \prime }\): the standardized line response
- \(\mathbf {M}_{TS}\): the mask of detected tiny-size salient structures
- Acc: accuracy
- P: precision
- R: recall
- Fscore: a comprehensive evaluation index combining precision and recall
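The evaluation measures listed above can be illustrated with a short pure-Python sketch for a multi-class quality label. It assumes macro-averaging over classes and takes Fscore as the harmonic mean of macro precision and macro recall; averaging per-class F-scores is an equally common convention, so the paper's exact definition may differ. `macro_metrics` is a hypothetical helper.

```python
def macro_metrics(y_true, y_pred):
    """Return accuracy, macro precision, macro recall and Fscore (assumed definitions)."""
    pairs = list(zip(y_true, y_pred))
    labels = sorted(set(y_true) | set(y_pred))
    acc = sum(t == p for t, p in pairs) / len(pairs)
    precisions, recalls = [], []
    for c in labels:
        tp = sum(t == c and p == c for t, p in pairs)  # correctly predicted as c
        fp = sum(t != c and p == c for t, p in pairs)  # wrongly predicted as c
        fn = sum(t == c and p != c for t, p in pairs)  # c predicted as something else
        precisions.append(tp / (tp + fp) if tp + fp else 0.0)
        recalls.append(tp / (tp + fn) if tp + fn else 0.0)
    p = sum(precisions) / len(labels)
    r = sum(recalls) / len(labels)
    fscore = 2 * p * r / (p + r) if p + r else 0.0  # harmonic mean of P and R
    return acc, p, r, fscore


acc, p, r, f = macro_metrics([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0])
print(round(acc, 4), round(p, 4), round(r, 4), round(f, 4))  # 0.6667 0.7222 0.6667 0.6933
```

Here the three integer labels stand for hypothetical quality grades; with scikit-learn available, `precision_recall_fscore_support(..., average="macro")` offers the per-class-averaged variant for comparison.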

Acknowledgements

The work of Prof. Beiji Zou is partially supported by the National Key R&D Program of China (No. 2018AAA0102100); Dr. Qing Liu is partially supported by the National Natural Science Foundation of China (No. 62006249) and the Changsha Municipal Natural Science Foundation (kq2014135). This work was also supported in part by the High Performance Computing Center of Central South University.

Ethics declarations

None declared.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Xu, Z., Zou, B. & Liu, Q. A deep retinal image quality assessment network with salient structure priors. Multimed Tools Appl 82, 34005–34028 (2023). https://doi.org/10.1007/s11042-023-14805-3

DOI: https://doi.org/10.1007/s11042-023-14805-3