Abstract

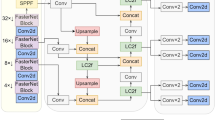

Evidence suggests that vision is among the most critical factors in marine information exploration. However, underwater images are generally of poor quality because of color casts, missing texture detail, and blurred edges. We therefore propose the Multiscale Gated Fusion conditional GAN (MGF-cGAN) for underwater image enhancement. The generator of MGF-cGAN consists of a Multiscale Feature Extract Module (Ms-FEM) and a Gated Fusion Module (GFM). In Ms-FEM, three parallel subnets extract feature information, which yields richer features than a single branch. The GFM adaptively fuses the three outputs of Ms-FEM and produces better chromaticity and contrast than other fusion methods. Additionally, we train the network with a Multiscale Structural Similarity Index Measure (MS-SSIM) loss, because MS-SSIM agrees closely with human perception. Extensive experiments on three benchmark underwater image datasets show that MGF-cGAN generates images with better visual quality than classical and state-of-the-art (SOTA) methods. It achieves a PSNR of 27.1078 dB and an RMSE of 11.9437 on the EUVP dataset. More significantly, the enhanced results of MGF-cGAN also perform well in downstream tests such as underwater saliency detection and SURF key matching. Based on this study, MGF-cGAN is found to be suitable for data preprocessing in an underwater multimedia system.
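To make the idea of adaptive fusion concrete, the following is a minimal sketch of per-pixel gated fusion over three branch outputs. It is not the authors' GFM: the abstract does not specify how the gates are computed, so the softmax normalization, the function names `softmax` and `gated_fusion`, and the toy gate scores are all assumptions made for illustration. In a trained network the gate scores would come from learned layers rather than hand-written constants.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def gated_fusion(branches, gate_logits):
    """Fuse K same-sized 2D feature maps with per-pixel gates.

    branches:    list of K maps, each a list of rows of floats
    gate_logits: list of K maps of the same shape (hypothetical gate scores)
    Assumption: gates are softmax-normalized across branches at each pixel.
    """
    height, width = len(branches[0]), len(branches[0][0])
    fused = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            weights = softmax([g[y][x] for g in gate_logits])
            fused[y][x] = sum(w * b[y][x] for w, b in zip(weights, branches))
    return fused


# Toy 1x2 maps standing in for the three Ms-FEM branch outputs.
branches = [[[0.0, 3.0]], [[6.0, 3.0]], [[12.0, 3.0]]]

# Equal gate scores give equal weights, so fusion reduces to a plain average.
equal_gates = [[[0.0, 0.0]], [[0.0, 0.0]], [[0.0, 0.0]]]
fused_equal = gated_fusion(branches, equal_gates)

# A dominant gate score on the third branch pulls the result toward it.
biased_gates = [[[0.0, 0.0]], [[0.0, 0.0]], [[50.0, 0.0]]]
fused_biased = gated_fusion(branches, biased_gates)
```

With equal gates the fused value at each pixel is the mean of the three branches, while a strongly biased gate makes the output follow a single branch at that pixel. This is the sense in which a gated scheme can adapt its mixing weights locally, unlike fixed averaging or concatenation.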

Data Availability

The authors confirm that the data generated or analyzed and supporting the findings of this study are available within the article.

Acknowledgements

This work is supported by the National Key Research and Development Program of China under Grant No. 2018YFB1403303 and the Key Research and Development Program of Liaoning Province under Grant No. 2019JH2/10100014.

Author information

Contributions

Xu Liu implemented the algorithm, performed the experiments, and wrote the manuscript. Sen Lin revised the paper and provided funding support. Zhiyong Tao provided funding support.

Ethics declarations

Conflict of Interests

The authors declare no conflict of interest.

About this article

Cite this article

Liu, X., Lin, S. & Tao, Z. Learning multiscale pipeline gated fusion for underwater image enhancement. Multimed Tools Appl 82, 32281–32304 (2023). https://doi.org/10.1007/s11042-023-14687-5

DOI: https://doi.org/10.1007/s11042-023-14687-5