Abstract

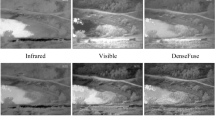

Multispectral image fusion plays a crucial role in environmental safety for smart cities. In visible (VIS) and infrared image fusion, the disappearance of objects after fusion is a key problem that limits fusion performance. To address this problem, a novel Salient Regional Generative Adversarial Network (SaReGAN) is presented for infrared and VIS image fusion. SaReGAN consists of three parts. In the first part, the salient regions of the infrared image are extracted using a visual saliency map, and the information in these regions is preserved. In the second part, the VIS image, the infrared image, and the salient information are merged in the generator to produce a pre-fused image. In the third part, the discriminator attempts to distinguish the pre-fused image from the VIS image, so that details of the VIS image are learned through the adversarial mechanism. Experimental results show that SaReGAN outperforms other state-of-the-art methods in both quantitative and qualitative evaluations.
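The first of the three parts (extracting salient infrared regions and preserving their information) can be illustrated with a small sketch. The abstract does not say which saliency detector is used, so the histogram-based global-contrast measure below, the function names `saliency_map` and `salient_region`, and the 0.5 threshold are all assumptions chosen for illustration rather than the authors' implementation.

```python
import numpy as np


def saliency_map(ir: np.ndarray) -> np.ndarray:
    """Global-contrast saliency for an 8-bit image (an assumed stand-in method).

    S(p) = sum over all pixels q of |I(p) - I(q)|, rescaled to [0, 1].
    """
    ir = np.asarray(ir, dtype=np.uint8)
    # Count how many pixels take each of the 256 grey levels.
    hist = np.bincount(ir.ravel(), minlength=256).astype(np.float64)
    levels = np.arange(256, dtype=np.float64)
    # contrast[v] = sum_u hist[u] * |v - u|, computed once per grey level.
    contrast = np.abs(levels[:, None] - levels[None, :]) @ hist
    sal = contrast[ir]  # look up the contrast of each pixel's grey level
    span = sal.max() - sal.min()
    if span == 0:
        return np.zeros_like(sal)  # flat image: nothing is salient
    return (sal - sal.min()) / span


def salient_region(ir: np.ndarray, threshold: float = 0.5):
    """Return a boolean mask of salient pixels and the infrared values kept there.

    The threshold is a hypothetical parameter, not taken from the paper.
    """
    ir = np.asarray(ir, dtype=np.uint8)
    mask = saliency_map(ir) >= threshold
    preserved = np.where(mask, ir, 0)  # keep infrared intensity only inside the mask
    return mask, preserved


if __name__ == "__main__":
    # Toy infrared frame: a cool background with one small hot target.
    frame = np.full((8, 8), 20, dtype=np.uint8)
    frame[2:4, 2:4] = 220
    mask, preserved = salient_region(frame)
    print(mask.sum(), preserved.max())
```

In a full pipeline the preserved salient information would be passed to the generator together with the VIS and infrared images; that stage and the discriminator are not sketched here because the abstract gives no architectural details.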

Data Availability

Data sharing is not applicable to this article, as no datasets were generated or analyzed during the current study.

Funding

This work is supported in part by the National Natural Science Foundation of Shandong Province (Nos. ZR2021QD041 and ZR2020MF127).

Ethics declarations

Ethics approval

We declare that there is no ethics issue.

Conflict of Interests

We declare that we have no conflict of interest.

About this article

Cite this article

Gao, M., Zhou, Y., Zhai, W. et al. SaReGAN: a salient regional generative adversarial network for visible and infrared image fusion. Multimed Tools Appl (2023). https://doi.org/10.1007/s11042-023-14393-2

DOI: https://doi.org/10.1007/s11042-023-14393-2