Abstract

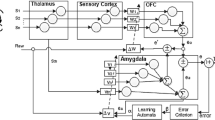

Listening to music can evoke different emotions in humans. Music emotion recognition (MER) can predict the emotions a song will evoke in a person before they listen to it. However, MER studies face three problems. First, although the brain is the seat of music perception, MER based on a simulation of the brain’s limbic system has not been examined so far. Second, although the literature recognizes that individual differences affect the perception and induction of musical emotion, less attention has been paid to personalizing the model. Finally, most previous studies have focused on classifying music pieces into emotional groups, whereas a piece of music often evokes several emotions to different degrees. The purpose of the present study is to introduce an optimized brain emotional learning (BEL) model, combined with Thayer’s psychological model, that predicts the quantitative value of every emotion a specific person would experience when listening to a new piece of music. The proposed model consists of 12 emotional parts that work in parallel, each responsible for evaluating one specific emotion of Thayer’s model. Four neural areas of the emotional brain are simulated for each part. The input signal is adjusted using Thayer’s dimensions and a fuzzy system. The average results obtained with the proposed model were R² = 0.69 for arousal, R² = 0.36 for valence, and MSE = 0.051; the model was better and faster than the multilayer network models and even the original BEL model for all emotions.
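The abstract builds on the brain emotional learning (BEL) model. As a rough illustration only, the Python sketch below implements one commonly cited formulation of the original BEL unit of Moren and Balkenius (2000), with four simulated areas (thalamus, sensory cortex, amygdala and orbitofrontal cortex, OFC), and trains a bank of 12 such units in parallel, one per emotion. It is not the modified model proposed in this article: the fuzzy, Thayer-based input adjustment is omitted, and the learning rates, the identity sensory cortex, training the OFC on the full output E (some formulations use an output E' that excludes the thalamic term), and the feature vector and target intensities are all assumptions. The `r2` helper computes the coefficient of determination 1 − SS_res/SS_tot, which may differ from how R² was computed in the article.

```python
from typing import List, Sequence, Tuple


class BELUnit:
    """One emotional part: thalamus, sensory cortex, amygdala and OFC.

    Sketch of the original BEL formulation, not the article's modified model.
    """

    def __init__(self, n_inputs: int, alpha: float = 0.1, beta: float = 0.05) -> None:
        self.alpha = alpha               # amygdala learning rate (assumed value)
        self.beta = beta                 # OFC learning rate (assumed value)
        self.v = [0.0] * (n_inputs + 1)  # amygdala weights; the last one weights the thalamic input
        self.w = [0.0] * n_inputs        # OFC (inhibitory) weights

    def _areas(self, stimulus: Sequence[float]) -> Tuple[List[float], List[float], List[float]]:
        cortex = list(stimulus)                          # sensory cortex: identity mapping (assumption)
        s = cortex + [max(stimulus)]                     # thalamus adds max(S) as a coarse extra input
        a = [si * vi for si, vi in zip(s, self.v)]       # amygdala nodes: A_i = S_i * V_i
        o = [ci * wi for ci, wi in zip(cortex, self.w)]  # OFC nodes: O_i = S_i * W_i
        return s, a, o

    def predict(self, stimulus: Sequence[float]) -> float:
        _, a, o = self._areas(stimulus)
        return sum(a) - sum(o)                           # E = sum(A) - sum(O)

    def learn(self, stimulus: Sequence[float], reward: float) -> None:
        s, a, o = self._areas(stimulus)
        e = sum(a) - sum(o)
        # Amygdala: weights only grow, driven by the unexplained part of the reward.
        gap = max(0.0, reward - sum(a))
        self.v = [vi + self.alpha * si * gap for vi, si in zip(self.v, s)]
        # OFC: inhibition grows when E over-predicts the reward and shrinks otherwise.
        self.w = [wi + self.beta * ci * (e - reward) for wi, ci in zip(self.w, stimulus)]


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    features = [0.7, 0.2, 0.9]                        # hypothetical audio features of one song
    targets = [k / 11 for k in range(12)]             # hypothetical intensities of 12 emotions
    bank = [BELUnit(len(features)) for _ in targets]  # 12 parts working in parallel
    for _ in range(500):
        for unit, target in zip(bank, targets):
            unit.learn(features, target)
    preds = [unit.predict(features) for unit in bank]
    print(f"MSE = {mse(targets, preds):.6f}, R2 = {r2(targets, preds):.4f}")
```

Because the amygdala weights can only grow, any over-prediction has to be removed by the inhibitory OFC path; this split between a monotonic learner and a corrective one is the main idea that BEL borrows from the limbic system.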


Data availability

All data generated or analysed during this study are included in this published article, in the files Songs-Database-TheRadifofMirzaAbdollah and Brief_data_Experiment.

References

Abbasi Layegh M, Haghipour S, Najafi Sarem Y (2014) Classification of the Radif of Mirza Abdollah a canonic repertoire of Persian music using SVM method. Gazi Uni J Sci Part A: Engin Innova 1(4):57–66. https://dergipark.org.tr/tr/pub/gujsa/issue/7441/98108

Adolphs R (2017) How should neuroscience study emotions? By distinguishing emotion states, concepts, and experiences. Soc Cogn Affect Neurosci 12(1):24–31. https://doi.org/10.1093/scan/nsw153

Aljanaki A, Wiering F, Veltkamp RC (2016) Studying emotion induced by music through a crowdsourcing game. Inf Process Manag 52(1):115–128. https://doi.org/10.1016/j.ipm.2015.03.004

Aljanaki A, Yang YH, Soleymani M (2017) Developing a benchmark for emotional analysis of music. PLoS One 12(3):e0173392. https://doi.org/10.1371/journal.pone.0173392

Balasubramanian G, Kanagasabai A, Mohan J, Seshadri NG (2018) Music induced emotion using wavelet packet decomposition-an EEG study. Biomed Signal Proc Contr 42:115–128. https://doi.org/10.1016/j.bspc.2018.01.015

Basak H, Kundu R, Singh PK, Ijaz MF, Woźniak M, Sarkar R (2022) A union of deep learning and swarm-based optimization for 3D human action recognition. Sci Rep 12(1):1–17. https://doi.org/10.1038/s41598-022-09293-8

Bhatti UA, Huang M, Wu D, Zhang Y, Mehmood A, Han H (2019) Recommendation system using feature extraction and pattern recognition in clinical care systems. Enterprise Inform Syst 13(3):1–24. https://doi.org/10.1080/17517575.2018.1557256

Bogdanov D, Wack N, Gómez E, Gulati S, Herrera P, Mayor O, Roma G, Salamon J, Zapata J, Serra X (2013) ESSENTIA: an audio analysis library for music information retrieval. In: Proceedings of the 14th International Society for Music Information Retrieval Conference, pp 493–498. https://doi.org/10.1145/2502081.2502229

Campobello G, Dell’Aquila D, Russo M, Segreto A (2020) Neuro-genetic programming for multigenre classification of music content. Appl Soft Comput 94:106488. https://doi.org/10.1016/j.asoc.2020.106488

Chen YA, Wang JC, Yang YH, Chen HH (2017) Component tying for mixture model adaptation in personalization of music emotion recognition. IEEE/ACM Transac Audio, Speech, Lang Proc 25:1409–1420. https://doi.org/10.1109/TASLP.2017.269356

Cortes DS, Tornberg C, Bänziger T, Elfenbein HA, Fischer H, Laukka P (2021) Effects of aging on emotion recognition from dynamic multimodal expressions and vocalizations. Sci Rep 11(1):2647. https://doi.org/10.1038/s41598-021-82135-1

Davies S (1994) Musical meaning and expression. Cornell University Press. https://doi.org/10.2307/2956368

de Benito-Gorrón D, Lozano-Diez A, Toledano DT, Gonzalez-Rodriguez J (2019) Exploring convolutional, recurrent, and hybrid deep neural networks for speech and music detection in a large audio dataset. EURASIP J Audio, Speech, Music Proc 152:1–18. https://doi.org/10.1186/s13636-019-0152-1

Er MB, Cig H, Aydilek IB (2020) A new approach to recognition of human emotions using brain signals and music stimuli. Appl Acoust 175:107840. https://doi.org/10.1016/j.apacoust.2020.107840

Farhoudi Z, Setayeshi S (2021) Fusion of deep learning features with mixture of brain emotional learning for audio-visual emotion recognition. Speech Comm 127:92–103. https://doi.org/10.1016/j.specom.2020.12.001

Ferreira L, Whitehead J (2019) Learning to generate music with sentiment. In: Proceedings of the 20th International Society for Music Information Retrieval Conference, Delft, the Netherlands, pp 384–390. https://doi.org/10.5281/zenodo.3527824

Garg A, Chaturvedi V, Kaur AB, Varshney V, Parashar A (2022) Machine learning model for mapping of music mood and human emotion based on physiological signals. Multimed Tools Appl 81:5137–5177. https://doi.org/10.1007/s11042-021-11650-0

Gaut B, Lopes DM (2013) The Routledge companion to aesthetics, 3rd edition. Routledge. https://doi.org/10.4324/9780203813034

Gemmeke JF, Ellis DPW, Freedman D, Jansen A, Lawrence W, Moore RC, Plakal M, Ritter M (2017) Audio set: an ontology and human-labeled dataset for audio events. In: IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp 776–780. https://doi.org/10.1109/ICASSP.2017.7952261

Graben PB, Blutner R (2019) Quantum approaches to music cognition. J Math Psychol 91:38–50. https://doi.org/10.1016/j.jmp.2019.03.002

Grekow J (2018) Audio features dedicated to the detection and tracking of arousal and valence in musical compositions. J Inform Telecomm 2(3):322–333. https://doi.org/10.1080/24751839.2018.1463749

Grekow J (2021) Music emotion recognition using recurrent neural networks and pretrained models. J Intell Inf Syst 57:531–546. https://doi.org/10.1007/s10844-021-00658-5

Hasanzadeh F, Annabestani M, Moghimi S (2021) Continuous emotion recognition during music listening using EEG signals: a fuzzy parallel cascades model. Appl Soft Comput 101:107028. https://doi.org/10.1016/j.asoc.2020.107028

Hausmann M, Hodgetts S, Eerola T (2016) Music-induced changes in functional cerebral asymmetries. Brain Cogn 104:58–71. https://doi.org/10.1016/j.bandc.2016.03.001

Hizlisoy S, Yildirim S, Tufekci Z (2020) Music emotion recognition using convolutional long short term memory deep neural networks. Engin Sci Technol, Int J 24(3):760–767. https://doi.org/10.1016/j.jestch.2020.10.009

Hyung Z, Park JS, Lee K (2017) Utilizing context-relevant keywords extracted from a large collection of user-generated documents for music discovery. Inf Process Manag 53(5):1185–1200. https://doi.org/10.1016/j.ipm.2017.04.006

Jafari NZ, Arvand P (2016) The function of education in codification of Radif in Iranian Dastgahi music. J Lit Art Stud 6(1):74–81. https://doi.org/10.17265/2159-5836/2016.01.010

Koelsch S (2018) Investigating the neural encoding of emotion with music. Neuron 98(6):1075–1079. https://doi.org/10.1016/j.neuron.2018.04.029

Krumhansl CL (1997) An exploratory study of musical emotions and psychophysiology. Can J Exp Psychol 51(4):336–352. https://doi.org/10.1037/1196-1961.51.4.336

LaBerge D, Samuels SJ (1974) Toward a theory of automatic information processing in reading. Cogn Psychol 6(2):293–323. https://doi.org/10.1016/0010-0285(74)90015-2

Lindquist KA, Wager TD, Kober H, Bliss-Moreau E, Barrett LF (2012) The brain basis of emotion: a meta-analytic review. Behav Brain Sci 35:121–143. https://doi.org/10.1017/S0140525X11000446

Liu T, Han L, Ma L, Guo D (2018) Audio-based deep music emotion recognition. AIP Conf Proc 1967:040021. https://doi.org/10.1063/1.5039095

Malheiro R, Panda R, Gomes P, Paiva RP (2018) Emotionally-relevant features for classification and regression of music lyrics. IEEE Trans Affect Comput 9(2):240–254. https://doi.org/10.1109/TAFFC.2016.2598569

Meyer LB (1956) Emotion and meaning in music. University of Chicago Press, Chicago. https://doi.org/10.1093/acprof:oso/9780199751396.003.0003

Mo S, Niu J (2017) A novel method based on OMPGW method for feature extraction in automatic music mood classification. IEEE Trans Affect Comput 10(3):313–324. https://doi.org/10.1109/TAFFC.2017.2724515

Moren J, Balkenius C (2000) A computational model of emotional learning in the amygdala. In: Meyer JA, Berthoz A, Floreano D, Roitblat HL, Wilson SW (eds) From animals to animats 6. MIT Press, Cambridge, pp 383–391. https://doi.org/10.7551/mitpress/3120.003.0041

Motamed S, Setayeshi S, Rabiee A (2017) Speech emotion recognition based on brain and mind emotional learning model. Biologic Insp Cog Architect 19:32–38. https://doi.org/10.1016/j.bica.2016.12.002

Nguyen VL, Kim D, Ho VP, Lim Y (2017) A new recognition method for visualizing music emotion. Int J Electr Comput Engin 7(3):1246–1254. https://doi.org/10.11591/ijece.v7i3.pp1246-1254

Orjesek R, Jarina R, Chmulik M (2022) End-to-end music emotion variation detection using iteratively reconstructed deep features. Multimed Tools Appl 81:5017–5031. https://doi.org/10.1007/s11042-021-11584-7

Panda R, Malheiro RM, Paiva RP (2020) Audio features for music emotion recognition: a survey. IEEE Trans Affect Comput. https://doi.org/10.1109/TAFFC.2020.3032373

Rachman FH, Sarno R, Fatichah C (2018) Music emotion classification based on lyrics-audio using corpus based emotion. Int J Electr Comput Engin 8(3):1720–1730. https://doi.org/10.11591/ijece.v8i3.pp1720-1730

Rajesh S, Nalini NJ (2020) Musical instrument emotion recognition using deep recurrent neural network. Procedia Comput Sci 167:6–25. https://doi.org/10.1016/j.procs.2020.03.178

Rumelhart DE, McClelland JL (1986) Parallel distributed processing: explorations in the microstructure of cognition: foundations. MIT Press, Cambridge, Massachusetts. https://doi.org/10.7551/mitpress/5236.001.0001

Russell JA (1980) A circumplex model of affect. J Pers Soc Psychol 39(6):1161–1178. https://doi.org/10.1037/h0077714

Russo M, Kraljević L, Stella M, Sikora M (2020) Cochleogram-based approach for detecting perceived emotions in music. Inf Process Manag 57(5):102270. https://doi.org/10.1016/j.ipm.2020.102270

Sahabi S (2022) Study of changing notes root and functions in Dastgah Homayun from Radif of Mirza-Abdollah (transcribed by Dariush Talai). J Dram Arts Music 12(27):35–47. http://dam.journal.art.ac.ir/article_1065.html?lang=en

Sahoo KK, Dutta I, Ijaz MF, Wozniak M, Singh PK (2021) TLEFuzzyNet: fuzzy rank-based ensemble of transfer learning models for emotion recognition from human speeches. IEEE Access 9:166518–166530. https://doi.org/10.1109/ACCESS.2021.3135658

Sarkar R, Choudhury S, Dutta S, Roy A, Saha SK (2019) Recognition of emotion in music based on deep convolutional neural network. Multimed Tools Appl 79:765–783. https://doi.org/10.1007/s11042-019-08192-x

Sen A, Srivastava M (1990) Regression analysis: theory, methods, and applications. Springer, New York. https://doi.org/10.1007/978-1-4612-4470-7

Sharafbayani H (2017) Sources of Foroutan Radif. J Dram Arts Music 7(13):131–145. https://doi.org/10.30480/dam.2017.336

Singer N, Jacoby N, Lin T, Raz G, Shpigelman L, Gilam G, Granot RY, Hendler T (2016) Common modulation of limbic network activation underlies musical emotions as they unfold. Neuroimage 141:517–529. https://doi.org/10.1016/j.neuroimage.2016.07.002

Soleymani M, Caro MN, Schmidt EM, Sha CY, Yang YH (2013) 1000 songs for emotional analysis of music. In: Proceedings of the 2nd ACM International Workshop on Crowdsourcing for Multimedia, Barcelona, Spain, 22 October 2013. ACM, New York, pp 1–6. https://doi.org/10.1145/2506364.2506365

Tala'i D (2000) Traditional Persian art music: the Radif of Mirza Abdollah. Mazda Publishers, Costa Mesa, CA. https://doi.org/10.4000/abstractairanica.35712

Thayer RE (1989) The biopsychology of mood and arousal. Oxford University Press, 23, 352 https://doi.org/10.1016/0003-6870(92)90319-Q

Turchet L, Pauwels J (2022) Music emotion recognition: intention of composers-performers versus perception of musicians, non-musicians, and listening machines. IEEE/ACM Transac Audio, Speech Lang Proc 30:305–316. https://doi.org/10.1109/TASLP.2021.3138709

Tzanetakis G, Cook P (2000) MARSYAS: a framework for audio analysis. Organised Sound 4(3):169–175. https://doi.org/10.1017/S1355771800003071

Vempala NN, Russo FA (2018) Modeling music emotion judgments using machine learning methods. Front Psychol 8:2239. https://doi.org/10.3389/fpsyg.2017.02239

Xiaobin T (2018) Fuzzy clustering based self-organizing neural network for real time evaluation of wind music. Cogn Syst Res 52:359–364. https://doi.org/10.1016/j.cogsys.2018.07.016

Yang YH, Chen HH (2012) Machine recognition of music emotion: a review. ACM Trans Intell Syst Technol 3(3):1–30. https://doi.org/10.1145/2168752.2168754

Zonis E (1973) Classical Persian music: an introduction. Harvard University Press, Cambridge, MA. https://doi.org/10.1017/S0020743800024399


Ethics declarations

Conflict of interests

All authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Jandaghian, M., Setayeshi, S., Razzazi, F. et al. Music emotion recognition based on a modified brain emotional learning model. Multimed Tools Appl 82, 26037–26061 (2023). https://doi.org/10.1007/s11042-023-14345-w


DOI: https://doi.org/10.1007/s11042-023-14345-w