Abstract

Multi-focus image fusion merges several source images of the same scene, each captured with a different focus setting, into a single, more informative image. This paper proposes a novel approach for creating that image. The method has three primary stages: creating initial decision maps, applying morphological operations, and obtaining the fused image with the designed fusion rule. The initial decision maps consist of label values that mark image patches as focused or non-focused. To determine these values, the image patches obtained from each source image are first fed to a modified CNN architecture. If the modified CNN architecture is unstable in determining the label values, a new improvement mechanism based on focus measurements is applied to the unstable regions, in which every image patch is labelled as non-focused. The initial decision maps obtained for each source image are then refined by morphological operations. Finally, the dynamic decision mechanism (DDM) fusion rule, designed around the label values in the decision maps, is applied to minimize the misleading information that classification errors introduce into the fused image, and the final fused image is obtained. In addition, a rich dataset containing two or more source images for each scene is created from the COCO dataset. The method's performance is measured with objective and subjective metrics. The visual and quantitative results indicate that the proposed method produces high-quality fused images.
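The three stages can be illustrated with a minimal sketch under loudly stated assumptions. The abstract does not specify the modified CNN, the morphological operations, or the DDM rule, so the sketch substitutes simple stand-ins: a Laplacian-energy comparison in place of the CNN classifier, a 3x3 binary opening in place of the morphological refinement, and a copy-or-average rule in place of DDM. All function names and the patch size are hypothetical, and this is not the authors' implementation.

```python
import numpy as np

def laplacian_energy(img):
    """Per-pixel focus measure: absolute 4-neighbour Laplacian response."""
    p = np.pad(img.astype(float), 1, mode="edge")
    lap = (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]
           - 4.0 * p[1:-1, 1:-1])
    return np.abs(lap)

def patch_decision_maps(sources, patch=8):
    """Label each patch of each source as focused (1) or non-focused (0).

    ASSUMPTION: stand-in for the CNN classifier. In every patch, the source
    with the highest mean Laplacian energy is labelled focused.
    """
    energies = np.stack([laplacian_energy(s) for s in sources])  # (n, H, W)
    n, h, w = energies.shape
    maps = np.zeros((n, h, w), dtype=np.uint8)
    for y in range(0, h, patch):
        for x in range(0, w, patch):
            scores = energies[:, y:y + patch, x:x + patch].mean(axis=(1, 2))
            maps[np.argmax(scores), y:y + patch, x:x + patch] = 1
    return maps

def _neighbourhood(mask):
    """Stack the nine 3x3-shifted copies of a 2-D mask."""
    p = np.pad(mask, 1, mode="edge")
    h, w = mask.shape
    return np.stack([p[dy:dy + h, dx:dx + w]
                     for dy in range(3) for dx in range(3)])

def opening(mask):
    """3x3 binary opening (erosion, then dilation): removes isolated labels.

    ASSUMPTION: stand-in for the unspecified morphological operations.
    """
    eroded = _neighbourhood(mask).min(axis=0)
    return _neighbourhood(eroded).max(axis=0)

def fuse(sources, maps):
    """Fuse sources from their decision maps.

    ASSUMPTION: stand-in for the DDM rule. Where exactly one source is
    labelled focused, copy its pixel; elsewhere, average all sources.
    """
    stack = np.stack([s.astype(float) for s in sources])   # (n, H, W)
    votes = maps.sum(axis=0)                                # (H, W)
    weights = np.where(votes == 1, maps, 1.0 / len(sources))
    return (weights * stack).sum(axis=0)

def fuse_pipeline(sources, patch=8):
    """Run the three stages: decision maps, refinement, fusion."""
    maps = patch_decision_maps(sources, patch)
    maps = np.stack([opening(m) for m in maps])
    return fuse(sources, maps)
```

The averaging fallback is the part that matters for robustness: pixels whose labels are missing or contradictory after refinement are never copied from a single source, which limits the damage a misclassified patch can do. A real system would replace each stand-in with the component described in the paper.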

References

Amin-Naji M, Aghagolzadeh A, Ezoji M (2019) Ensemble of CNN for multi-focus image fusion. Inf Fusion 51:201–214

Amin-Naji M, Aghagolzadeh A, Ezoji M (2020) CNN’s hard voting for multi-focus image fusion. J Ambient Intell Humaniz Comput 11:1749–1769

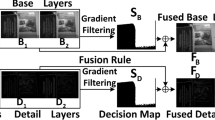

Aymaz S, Köse C, Aymaz Ş (2020) Multi-focus image fusion for different datasets with super-resolution using gradient-based new fusion rule. Multimed Tools Appl 79:13311–13350

Bai X, Zhang Y, Zhou F (2015) Quadtree-based multi-focus image fusion using a weighted focus-measure[J]. Inf Fusion 22:105–118

Bogoni L, Hansen M (2001) Pattern-selective colour image fusion. Pattern Recognit 34:1515–1526

Bouzos O, Andreadis I, Mitianoudis N (2019) Conditional random field model for robust multi-focus image fusion. IEEE Trans Image Process 28(11):5636–5648

Burt P, Adelson E (1985) Merging images through pattern decomposition, in Applications of Digital Image Processing VIII. Int Soc Opt Photonics 575:173–181

Chen Y, Blum RS (2009) A new automated quality assessment algorithm for image fusion[J]. Image Vis Comput 27(10):1421–1432

Chen L, Li J, Chen C (2013) Regional multi-focus image fusion using sparse representation. Opt Express 21(4):5182–5197

Chen C, Gend P, Lu K (2015) Multi-focus image fusion based on multiwavelet and DFB. Chem Eng Trans 46:277–283

D.Gai X, Shen H, Chen P, Su (2020) Multi-focus image fusion method based on two-stage of convolutional neural network. Sig Process 176:107681

Du C, Gao S (2018) Multi-focus image fusion algorithm based on pulse coupled neural network modified decision map. Optik 157:1003–1015

Du C, Gao S, Liu Y, Gao B (2019) Multi-focus image fusion using deep support value convolutional neural network. Optik 176:567–578

Fu W, Huang S, Li Z, Shen H, Li J, Wang P (2016) The optimal algorithm for Multi-source RS image fusion. MethodsX 3:87–101

Guo X, Nie R, Cao J, Zhou D, Qian W (2018) Fully convolutional network-based multi-focus image fusion. Neural Comput 30(7):1775–1800

Hao X, Zhao H, Liu J (2015) Multi-focus colour image sequence fusion based on mean shift segmentation. Appl Opt 54(30):8982–8989

He K, Zhou D, Zhang X, Nie R (2018) Multi-focus: focused region finding and multi-scale transform for image fusion. Neurocomputing 320:157–170

Hua KL, Wang HC, Rusdi AH, Jiang SY (2014) A novel multi-focus image fusion based on random walks. J Vis Commun İmage R 25:951–962

Huang W, Jing Z (2007) Evaluation of focus measures in multi-focus image fusion. Pattern Recognit Lett 28(4):493–500

Jiang Q, Jin X, Lee S, Yao S (2016) A Novel Multi-Focus Image Fusion Method Based on Stationary Wavelet Transform and Local Features of Fuzzy Sets. Neurocomputing 174:733–748

Jung H, Kim Y, Jang H, Ha N, Sohn K (2020) Unsupervised deep image fusion with structure tensor representations. IEEE Trans Image Process 29:3845–3858

Krizhevsky A, Nair V, Hinton G. https://www.cs.toronto.edu/~kriz/cifar.html. Accessed 11 Oct 2020

Li H, Manjunath B, Mitra S (1995) Multisensor image fusion using the wavelet transform. Graph Models Image Process 57(3):235–245

Li S, Kwok J, Wang Y (2001) Combination of images with diverse focuses using the spatial frequency. Inform Fusion 2(3):169–176

Li M, Cai W, Tan Z (2006) A region-based multi-sensor image fusion scheme using pulse–coupled neural network. Pattern Recognit Lett 27(16):1948–1956

Li H, Chai Y, Yin H, Liu G (2012) Multi-focus image fusion and denoising scheme based on homogeneity similarity. Opt Commun 285(2012):91–100

Li S, Kang X, Fang L, Hu J, Yin H (2017) Pixel-level Image Fusion: A survey of the state of the art. Inform Fusion 33:100–112

Li H, Qiu H, Yu Z, Li B (2017) Multi-focus image fusion via fixed window technique of multiscale images and non-local means filtering. Sig Process 138:71–85

Li H, Nie R, Cao J, Guo X, Zhou D, He K (2019) Multi-focus image fusion using u-shaped networks with a hybrid objective. IEEE Sens J 19(21):9755–9765

Li L, Ma H, Jia Z, Si Y (2021) A novel multiscale transform decomposition-based multi-focus image fusion framework. Multimed Tools Appl 80:12389–12409

Liu Y, Wang Z (2014) Simultaneous image fusion and denoising with adaptive sparse representation[J]. IET Image Process 9(5):347–357

Liu Y, Jin J, Wang Q, Shen Y, Dong X (2013) Novel focus region detection method for multi-focus image fusion using quaternion wavelet. J Electron Imaging 22(2):23017

Liu Y, Jin J, Wang Q, Shen Y, Dong X (2014) Region level based multi-focus image fusion using quaternion wavelet and normalized cut. Sig Process 97:9–30

Liu Y, Liu S, Wang Z (2015) A general framework for image fusion based on multi-scale transform and sparse representation[J]. Inf Fusion 24:147–164

Liu C, Long Y, Mao J (2016) Energy efficient multi-focus image fusion based on neighbour distance and morphology. Optik 127:11354–11363

Liu Y, Chen X, Peng H, Wang Z (2017) Multi-focus image fusion with a deep convolutional neural network. Inform Fusion 36:191–207

Liu Y, Zheng C, Zheng Q, Yuan H (2018) Removing Monte Carlo noise using a Sobel operator and a guided image filter. Vis Comput 34:589–601

Liu Y, Wang L, Cheng J, Li C, Chen X (2020) Multi-focus image fusion: A survey of the state of the art. Inform Fusion 64:71–91

Liu S, Lu Y, Wang J, Hu S, Zhao J, Zhu Z (2020) A new focus evaluation operator based on max-min filter and its application in high-quality multi-focus image fusion. Multidimens Syst Signal Process 31:569–590

Ma J, Yu W, Liang P (2019) FusionGAN: a generative adversarial network for infrared and visible image fusion[J]. Inf Fusion 48:11–26

Moushmi S, Sowmya V, Soman KP (2016) Empirical wavelet transform for multi-focus image fusion. Proceedings of the international conference on soft computing systems. Advances in Intelligent Systems and Computing, India

Nejati M, Samavi S, Karimi N, Soroushmehr SMR, Shirani S, Roosta I, Najarian K (2017) Surface-area based focus criterion for multi-focus image fusion. Inform Fusion 36:284–295

Pa J, Hegde AV (2015) A review of quality metrics for fused image. International conference on water resources, coastal and ocean engineering (ICWRCOE (2015)) 4, pp 133–142

Panigrahy C, Seal A, Mahato NK (2020) Fractal dimension based parameter adaptive dual-channel PCNN for multi-focus image fusion. Opt Lasers Eng 133:106141

Petrovic V, Xydeas C (2004) Gradient-based multiresolution image fusion. IEEE Trans Image Process 13(2):228–237

Tang H, Xiao B, Li W, Wang G (2018) Pixel convolutional neural network for multi-focus image fusion. Inf Sci (Ny) 433:125–141

Wang H (2018) Multi-focus image fusion algorithm based on focus detection in spatial and nsct domain. PLoS ONE 13(9):e0204225

Wen Y, Yang X, Celik T, Sushkova O, Albertini MK (2020) Multi-focus image fusion using convolutional neural network. Multimed Tools Appl 76(4):34531–34543

Yang B, Li S (2012) Pixel-level image fusion with simultaneous orthogonal matching pursuit. Inform Fusion 13(1):10–19

Yang Y, Que Y, Huang S, Lin P (2017) Technique for multi-focus image fusion based on fuzzy-adaptive pulse-coupled neural network. SIVip 11:439–446

Yang Y, Zhang Y, Huang S, Wu J (2020) Multi-focus image fusion via NSST with non-fixed base dictionary learning. Int J Syst Assur Eng Manag 11:849–855

Yin H, Li Y, Chai Y, Liu Z, Zhu Z (2016) A novel sparse-representation-based multi-focus image fusion approach. Neurocomputing 216:216–229

Zhang X, Li X, Feng Y (2016) A new multi-focus image fusion based on spectrum comparison. Sig Process 123:127–142

Zhang Y, Bai X, Wang T (2017) Boundary finding based multi-focus image fusion through multi-scale morphological focus-measure[J]. Inf fusion 35:81–101

Zhang B, Lu X, Pei H, Liu H, Zhao Y, Zhou W (2016) Multi-focus Image fusion algorithm based on focused region extraction. Neurocomputing 174:733–748

Zhang Y, Liu Y, Sun P (2020) IFCNN: a general image fusion framework based on convolutional neural network[J]. Inf Fusion 54:99–118

Zheng Y, Essock EA, Hansen BC, Haun AM (2007) A new metric based on extended spatial frequency and its application to DWT based fusion algorithms. Inform Fusion 8:177–192

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

About this article

Cite this article

Aymaz, S., Köse, C. & Aymaz, Ş. A novel approach with the dynamic decision mechanism (DDM) in multi-focus image fusion. Multimed Tools Appl 82, 1821–1871 (2023). https://doi.org/10.1007/s11042-022-13323-y

DOI: https://doi.org/10.1007/s11042-022-13323-y