Abstract

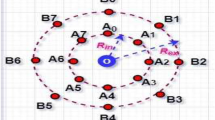

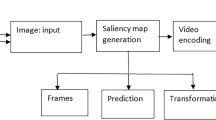

This paper presents a robust spatio-temporal saliency estimation method based on modeling motion vectors and transform residuals extracted from the H.264/AVC compressed bitstream. Spatial saliency is estimated by analyzing the detailed sub-band coefficients obtained by the wavelet decomposition of the luminance component of the macro-blocks, while temporal saliency is estimated by modeling the block motion vector orientation information using local derivative patterns. Dempster Shafer fusion rule is used to fuse the spatial saliency map and the motion saliency map to obtain the final saliency for a video frame. Extensive experimental validation along with comparative analysis with state-of-the-art methods is carried out to establish the proposed saliency method.

Similar content being viewed by others

References

Agarwal G, Anbu A, Sinha A (2003) A fast algorithm to find the region-of-interest in the compressed mpeg domain. In: International conference on multimedia and expo, vol 2, pp II–133

Bellitto G, Salanitri FP, Palazzo S, Rundo F, Giordano D, Spampinato C (2020) Video saliency detection with domain adaptation using hierarchical gradient reversal layers. arXiv:2010.01220

Borji A (2021) Saliency prediction in the deep learning era: Successes and limitations. IEEE Trans Pattern Anal Mach Intell 43(2):679–700

Borji A, Itti L (2013) State-of-the-art in visual attention modeling. IEEE Trans Pattern Anal Mach Intell 35(1):185–207

Fontani M, Bianchi T, De Rosa A, Piva A, Barni M (2013) A framework for decision fusion in image forensics based on dempster–shafer theory of evidence. IEEE Trans Inform Forens Secur 8(4):593–607

Goferman S, Manor LZ, Tal A (2012) Context-aware saliency detection. IEEE Trans Pattern Anal Mach Intell 34(10):1915–1926

Hadizadeh H, Bajic IV (2014) Saliency-aware video compression. IEEE Trans Image Process 23(1):19–33

Hadizadeh H, Enriquez MJ, Bajic IV (2012) Eye-tracking database for a set of standard video sequences. IEEE Trans Image Process 21(2):898–903

Itti L, Koch C (2001) Computational modelling of visual attention. Nat Rev Neurosci 2(3):194

Khatoonabadi SH, Bajić IV, Shan Y (2015) Compressed-domain correlates of human fixations in dynamic scenes. Multimed Tools Appl 74 (22):10057–10075

Khatoonabadi SH, Bajić IV, Shan Y (2017) Compressed-domain visual saliency models: a comparative study. Multimed Tools Appl 76(24):26297–26328

Khatoonabadi SH, Vasconcelos N, Bajic IV, Shan Y (2015) How many bits does it take for a stimulus to be salient?. In: IEEE conference on computer vision and pattern recognition, pp 5501–5510

Le Meur O, Le Callet P, Barba D, Thoreau D (2006) A coherent computational approach to model bottom-up visual attention. IEEE Trans Pattern Anal Mach Intell 28(5):802–817

Li Y, Lei X, Liang Y, Chen J (2018) Human fixations detection model in video-compressed-domain based on mve and obdl. In: Advanced optical imaging technologies, vol 10816

Li Y, Li S, Chen C, Hao A, Qin H (2020) A plug-and-play scheme to adapt image saliency deep model for video data. arXiv:2008.09103

Li Y, Li Y (2017) A fast and efficient saliency detection model in video compressed-domain for human fixations prediction. Multimed Tools Appl 76(24):26273–26295

Liu T, Yuan Z, Sun J, Wang J, Zheng N, Tang X, Shum H (2011) Learning to detect a salient object. IEEE Trans Pattern Anal Mach Intell 33(2):353–367

Liu W, Li Z, Sun S, Gupta MK, Du H, Malekian R, Sotelo MA, Li W (2021) Design a novel target to improve positioning accuracy of autonomous vehicular navigation system in gps denied environments. IEEE Trans Industr Inform

Liu Y, Han J, Zhang Q, Shan C (2020) Deep salient object detection with contextual information guidance. IEEE Trans Image Process 29:360–374

Ma Y, Li Z, Malekian R, Zheng S, Sotelo MA (2021) A novel multimode hybrid control method for cooperative driving of an automated vehicle platoon. IEEE Internet Things J 8(7):5822–5838

Ma YF, Zhang HJ (2001) A new perceived motion based shot content representation. In: International conference on image processing, vol 3, pp 426–429

Ouerhani N, Hugli H (2005) Robot self-localization using visual attention. In: International symposium on computational intelligence in robotics and automation, pp 309–314

Peters RJ, Iyer A, Itti L, Koch C (2005) Components of bottom-up gaze allocation in natural images. Vis Res 45(18):2397–2416

Rodriguez MD, Ahmed J, Shah M (2008) Action mach a spatio-temporal maximum average correlation height filter for action recognition. In: IEEE Conference on computer vision and pattern recognition, pp 1–8

Shafer G (1976) A mathematical theory of evidence. Princeton University Press, Princeton

Siagian C, Itti L (2007) Rapid biologically-inspired scene classification using features shared with visual attention. IEEE Trans Pattern Anal Mach Intell 29(2):300–312

Sinha A, Agarwal G, Anbu A (2004) Region-of-interest based compressed domain video transcoding scheme. In: IEEE International conference on acoustics, speech, and signal processing, vol 3, pp iii–161

Sun M, Zhou Z, Hu Q, Wang Z, Jiang J (2019) SG-FCN: A motion and memory-based deep learning model for video saliency detection. IEEE Trans Cybern 49(8):2900–2911

Wiegand T, Sullivan GJ, Bjontegaard G, Luthra A (2003) Overview of the h.264/AVC video coding standard. IEEE Trans Circuits Syst Video Technol 13(7):560–576

Zhang B, Gao Y, Zhao S, Liu J (2010) Local derivative pattern versus local binary pattern: Face recognition with high-order local pattern descriptor. IEEE Trans Image Process 19(2):533–544

Zhang D, Han J, Han J, Shao L (2016) Cosaliency detection based on intrasaliency prior transfer and deep intersaliency mining. IEEE Trans Neural Netw Learn Syst 27(6):1163–1176

Zhang Q, Huang N, Yao L, Zhang D, Shan C, Han J (2020) RGB-T salient object detection via fusing multi-level CNN features. IEEE Trans Image Process 29:3321–3335

Acknowledgements

The authors would like to thank the anonymous reviewers for their valuable feedback which helped us to improve the paper.

Funding

This research work is supported by SERB, Government of India under grant No ECR/2016/000112.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of Interests

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Sandula, P., Okade, M. Robust spatio-temporal saliency estimation method for H.264 compressed videos. Multimed Tools Appl 81, 39021–39039 (2022). https://doi.org/10.1007/s11042-022-13148-9

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-022-13148-9