Abstract

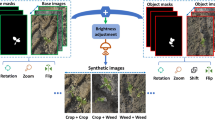

Recognizing beet seedlings and weeds is difficult against the complex backgrounds of natural environments and under insufficient light at night. In the current study, a novel depth fusion algorithm based on visible and near-infrared imagery was proposed. Visible (RGB) and near-infrared images were superimposed at the pixel level via the depth fusion algorithm and subsequently fused into three-channel multi-modality images in order to characterize the edge details of beets and weeds. Moreover, an improved region-based fully convolutional network (R-FCN) model was applied to overcome the geometric modeling restriction of traditional convolutional kernels. Specifically, in the convolutional feature extraction layers, deformable convolution replaced the traditional convolutional kernel, allowing the entire network to extract more precise features. In addition, online hard example mining was introduced to mine hard negative samples during detection so that misidentified samples could be retrained. A total of four models were established using these improvements. The results demonstrate that the average precision of the optimal improved model was 84.8% for beets and 93.2% for weeds, while the mean average precision improved to 89.0%. Compared with the classical R-FCN model, the optimal model performed substantially better without a significant increase in the number of parameters. Our study can provide a theoretical basis for the subsequent development of intelligent weed control robots under weak light conditions.
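The selection step at the core of online hard example mining, and the relation between the per-class average precision values and the mean average precision reported above, can be illustrated with a minimal sketch. It assumes that hard examples are simply the candidate regions with the highest loss in a forward pass and that the mean average precision is the unweighted mean over the two classes. The function names, the batch size, and the loss values are hypothetical and are not taken from the study.

```python
from typing import List, Sequence


def select_hard_examples(losses: Sequence[float], batch_size: int) -> List[int]:
    """Return indices of the `batch_size` candidates with the highest loss.

    Ties are broken by the lower index so the result is deterministic.
    """
    if batch_size < 0:
        raise ValueError("batch_size must be non-negative")
    # Rank candidate indices by descending loss, then by ascending index.
    ranked = sorted(range(len(losses)), key=lambda i: (-losses[i], i))
    return ranked[:batch_size]


def mean_average_precision(per_class_ap: Sequence[float]) -> float:
    """Unweighted mean of the per-class average precision values."""
    if not per_class_ap:
        raise ValueError("need at least one class")
    return sum(per_class_ap) / len(per_class_ap)


# Hypothetical per-region losses from one forward pass (made-up values).
roi_losses = [0.05, 1.20, 0.30, 0.90, 0.02, 0.75]

# Only the three highest-loss regions would be kept for the backward pass.
hard = select_hard_examples(roi_losses, batch_size=3)
print(hard)  # [1, 3, 5]

# Average precision for beets and weeds as reported in the abstract (percent).
print(round(mean_average_precision([84.8, 93.2]), 1))  # 89.0
```

In a full detector the losses would come from the classification and box-regression heads, and the selected regions would typically be filtered for overlap before retraining; the sketch omits both steps.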

Acknowledgements

This work was partially supported by the Priority Academic Program Development of Jiangsu Higher Education Institutions (PAPD-2018-87), the Synergistic Innovation Center of Jiangsu Modern Agricultural Equipment and Technology (4091600002), and the Project of Faculty of Agricultural Equipment of Jiangsu University (4121680001).

Cite this article

Sun, J., Yang, K., He, X. et al. Beet seedling and weed recognition based on convolutional neural network and multi-modality images. Multimed Tools Appl 81, 5239–5258 (2022). https://doi.org/10.1007/s11042-021-11764-5

DOI: https://doi.org/10.1007/s11042-021-11764-5