Abstract

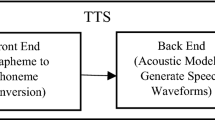

An end-to-end text-to-speech (TTS) system generates acoustic features directly from the input text and uses them to synthesize speech. Applying such models to Persian poses two challenges: the lack of suitable training data, and the need to detect pronunciation exceptions and the Ezafe between words implicitly, without a grapheme-to-phoneme module. In this paper, we use a specific end-to-end TTS system, Tacotron2, and propose solutions to both problems. To address the lack of data, we collect a dataset suitable for end-to-end TTS, comprising 21 hours of Persian speech with the corresponding text. To handle exception and Ezafe detection, we add multi-resolution convolution and part-of-speech embedding layers to the encoder of Tacotron2. In addition, Mel-spectrogram generation in Tacotron2 is unstable because of the high dropout rate applied at inference time; to address this, we propose using a convex optimization method named Net-Trim. Experimental results show that the proposed method increases the mean opinion score (MOS) of Tacotron2 from 3.01 to 3.97. Furthermore, it reduces the Mel cepstral distortion (MCD) compared with Tacotron2.
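The abstract states that multi-resolution convolution and part-of-speech (POS) embedding layers are added to the Tacotron2 encoder, but it does not give their configuration. The following is a minimal, dependency-free Python sketch of the general idea under our own assumptions: each character embedding is extended with an embedding of the POS tag of the word it belongs to, and the result is passed through parallel 1-D convolution branches with different kernel sizes whose outputs are concatenated. The kernel sizes (3, 5, 7), channel counts, tag set, the placement of the POS embedding before the convolutions, and all helper names (`conv1d_same`, `multi_resolution_conv`, `add_pos_embedding`, `random_kernel`) are hypothetical. The intuition is that the Ezafe, a linking vowel usually not written in Persian script, depends on word-level context (for example, a noun followed by a modifier); wider kernels see more of that context, and POS tags expose it directly.

```python
import random

def conv1d_same(x, kernel):
    """1-D convolution (cross-correlation, as in most DL libraries) with zero
    'same' padding followed by ReLU; output has as many frames as the input.

    x:      T frames, each a list of C_in floats
    kernel: k taps (k odd); tap j is a C_out x C_in weight matrix
    """
    k, pad = len(kernel), len(kernel) // 2
    c_out, c_in = len(kernel[0]), len(x[0])
    out = []
    for t in range(len(x)):
        y = [0.0] * c_out
        for j in range(k):
            s = t + j - pad  # input frame seen by tap j
            if 0 <= s < len(x):  # frames outside the sequence count as zeros
                for o in range(c_out):
                    y[o] += sum(kernel[j][o][i] * x[s][i] for i in range(c_in))
        out.append([max(0.0, v) for v in y])  # ReLU
    return out

def multi_resolution_conv(x, kernels):
    """Hypothetical multi-resolution layer: run one branch per kernel size and
    concatenate the branch outputs frame by frame along the channel axis."""
    branches = [conv1d_same(x, kern) for kern in kernels]
    return [[v for b in branches for v in b[t]] for t in range(len(x))]

def add_pos_embedding(char_emb, char_pos, pos_table):
    """Append the embedding of each character's word-level POS tag."""
    return [e + pos_table[tag] for e, tag in zip(char_emb, char_pos)]

def random_kernel(k, c_in, c_out, rng):
    """Random k x C_out x C_in weights standing in for trained parameters."""
    return [[[rng.uniform(-0.5, 0.5) for _ in range(c_in)]
             for _ in range(c_out)] for _ in range(k)]

rng = random.Random(0)
# Hypothetical toy input: 6 characters with 4-dim character embeddings.
chars = [[rng.uniform(-1.0, 1.0) for _ in range(4)] for _ in range(6)]
# Hypothetical tags: each character inherits the POS tag of its word.
tags = ["N", "N", "N", "ADJ", "ADJ", "ADJ"]
pos_table = {"N": [1.0, 0.0], "ADJ": [0.0, 1.0]}
x = add_pos_embedding(chars, tags, pos_table)               # 6 frames x 6 channels
kernels = [random_kernel(k, 6, 8, rng) for k in (3, 5, 7)]  # hypothetical sizes
h = multi_resolution_conv(x, kernels)                       # 6 frames x 24 channels
```

Concatenating the branches rather than summing them keeps each receptive field in its own channels, so later encoder layers can weigh short-range and long-range evidence separately. A real implementation would use a deep learning framework and trained weights.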
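Net-Trim (Aghasi et al., NIPS 2017) retrains each layer of a trained ReLU network as a convex program that seeks the sparsest weights still reproducing that layer's outputs on the training data. The abstract does not say which Tacotron2 layers it is applied to or how it interacts with the inference-time dropout (in Tacotron2, Shen et al. (2018) keep dropout with probability 0.5 active in the decoder pre-net at inference to introduce output variation). As we understand the Net-Trim paper, the basic per-layer program is the following, with layer input X, trained weights W, and output Y = max(W^T X, 0) collected over the training samples, and with the l1 norm taken entrywise:

```latex
\Omega = \{(m,p) : Y_{m,p} > 0\}, \qquad
\hat{U} = \operatorname*{arg\,min}_{U} \; \|U\|_1
\quad \text{subject to} \quad
\big\| (U^\top X - Y)_{\Omega} \big\|_F \le \epsilon, \qquad
(U^\top X)_{\Omega^c} \le 0
```

The first constraint keeps the active pre-activations within epsilon of the original outputs; the second keeps inactive units non-positive, so the ReLU still outputs zero there. The l1 objective, the norm bound on an affine function of U, and the linear inequalities are all convex, which is what allows the problem to be solved with standard convex solvers.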
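The MOS values reported above (3.01 for Tacotron2, 3.97 for the proposed method) are averages of listener ratings, typically collected on a 1 to 5 scale. The abstract does not describe the number of listeners or how uncertainty was reported, so the following sketch only shows a common way to compute an MOS with a normal-approximation 95% confidence interval. The function name `mos_with_ci` and the ratings in the example are hypothetical, not data from the paper.

```python
import math
from statistics import mean, stdev

def mos_with_ci(ratings, z=1.96):
    """Return (MOS, (low, high)) using a normal approximation.

    ratings: individual listener scores on the usual 1-5 scale
    z:       critical value; 1.96 gives an approximate 95% interval
    """
    if len(ratings) < 2:
        raise ValueError("need at least two ratings for a confidence interval")
    if any(not 1 <= r <= 5 for r in ratings):
        raise ValueError("ratings must lie in [1, 5]")
    m = mean(ratings)
    half_width = z * stdev(ratings) / math.sqrt(len(ratings))  # z * standard error
    return m, (m - half_width, m + half_width)

# Hypothetical ratings, for illustration only.
score, (low, high) = mos_with_ci([4, 4, 5, 3, 4, 4])
print(f"MOS = {score:.2f} (95% CI {low:.2f} to {high:.2f})")
```

With only a handful of listeners the normal approximation is rough; a t critical value or a bootstrap interval is a common alternative.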
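Mel cepstral distortion (Kubichek, 1993) measures, in dB, the distance between the mel-cepstral coefficient vectors of reference and synthesized speech; lower is better. The sketch below uses a commonly cited per-frame form, MCD = (10 / ln 10) * sqrt(2 * sum_d (c_d - c'_d)^2), averaged over frames and excluding the energy coefficient c_0. It assumes the two sequences are already time-aligned (in practice usually by dynamic time warping) and that the cepstra were extracted elsewhere. The paper's extraction settings and alignment method are not given in the abstract, and the function name and toy values are hypothetical.

```python
import math

def mel_cepstral_distortion(ref, syn, skip_c0=True):
    """Frame-averaged MCD in dB between two time-aligned mel-cepstral sequences.

    ref, syn: lists of frames, each a list of cepstral coefficients c_0..c_D
    skip_c0:  drop the energy term c_0, as is common practice
    """
    if not ref or len(ref) != len(syn):
        raise ValueError("sequences must be non-empty and have the same number of frames")
    start = 1 if skip_c0 else 0
    k = 10.0 / math.log(10.0)  # converts the natural-log distance to dB
    total = 0.0
    for r, s in zip(ref, syn):
        sq = sum((a - b) ** 2 for a, b in zip(r[start:], s[start:]))
        total += k * math.sqrt(2.0 * sq)
    return total / len(ref)

# Hypothetical aligned frames (c_0, c_1, c_2), for illustration only.
ref = [[1.2, 0.5, -0.3], [1.0, 0.4, -0.1]]
syn = [[1.1, 0.6, -0.2], [0.9, 0.2, -0.1]]
print(f"MCD = {mel_cepstral_distortion(ref, syn):.2f} dB")
```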

Acknowledgements

The authors are grateful to the AmerAndish company for supporting this project and for its helpful feedback. The raw data, the tools used to collect and clean the data, the computing resources, and the listeners who provided the ratings were all provided by AmerAndish.

Cite this article

Naderi, N., Nasersharif, B. & Nikoofard, A. Persian speech synthesis using enhanced tacotron based on multi-resolution convolution layers and a convex optimization method. Multimed Tools Appl 81, 3629–3645 (2022). https://doi.org/10.1007/s11042-021-11719-w
