Abstract

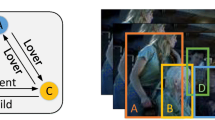

Automatically interpreting social relations (e.g., friendship and kinship) from visual scenes has great potential value in applications such as knowledge graph construction, analysis of personal behavior and emotion, and the entertainment ecosystem. Great progress has been made in social analysis based on structured data. However, existing video-based methods treat social relationship extraction as a general classification task and assign each video to one of a set of predefined types. Such methods cannot recognize multiple relations in multi-person videos, which is inconsistent with real application scenarios. Moreover, videos are inherently multimodal: subtitles also provide abundant cues for relationship recognition, yet these cues are often ignored by researchers. In this paper, we introduce and define a new task named “Multiple-Relation Extraction in Videos (MREV)”. To solve the MREV task, we propose the Visual-Textual Fusion (VTF) framework, which jointly models visual and textual information. For the spatial representation, we not only adopt a SlowFast network to learn global action and scene information, but also exploit the distinctive cues of face, body, and dialogue between characters. For the temporal domain, we propose a Temporal Feature Aggregation module that performs temporal reasoning by adaptively assessing the quality of different frames. We then use a Multi-Conv Attention module to capture inter-modal correlations and map the features of the different modalities into a coordinated feature space. In this way, our VTF framework comprehensively exploits multimodal cues for the MREV task and achieves 49.2% and 50.4% average accuracy on a self-constructed Video Multiple-Relation (VMR) dataset and the ViSR dataset, respectively. Extensive experiments on the VMR and ViSR datasets demonstrate the effectiveness of the proposed framework.
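To make the idea of adaptively weighting frames concrete, the following is a minimal sketch of quality-weighted temporal pooling. It is not the Temporal Feature Aggregation module itself, whose details are not given in the abstract. It assumes that each frame feature receives a scalar quality score from a scoring vector (here the hypothetical `quality_weights`, which would be learned in practice) and that a softmax over those scores yields the pooling weights. The function names and the toy features are illustrative assumptions.

```python
import math
from typing import List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scalar scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate_frames(frames: Sequence[Sequence[float]],
                     quality_weights: Sequence[float]) -> List[float]:
    """Pool per-frame feature vectors into one clip-level vector.

    Each frame gets a scalar quality score (dot product with a scoring
    vector, assumed to be learned elsewhere). Softmax turns the scores
    into weights, and the output is the weighted sum of the frames.
    """
    scores = [sum(w * x for w, x in zip(quality_weights, frame))
              for frame in frames]
    weights = softmax(scores)
    dim = len(frames[0])
    return [sum(wt * frame[d] for wt, frame in zip(weights, frames))
            for d in range(dim)]


# Three hypothetical 2-d frame features; the scoring vector is made up.
frames = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
pooled = aggregate_frames(frames, quality_weights=[2.0, 0.0])
uniform = aggregate_frames(frames, quality_weights=[0.0, 0.0])
```

With an all-zero scoring vector every frame receives the same weight, so `uniform` reduces to plain average pooling. A non-zero scoring vector shifts the pooled feature toward the frames it scores highly.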

Acknowledgements

This study was supported by the National Natural Science Foundation of China (grant no. 61972047), the National Key Research and Development Program of China (2018YFC0831500), and the NSFC-General Technology Basic Research Joint Funds (grant no. U1936220).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Liu, Z., Hou, W., Zhang, J. et al. A Multimodal Approach for Multiple-Relation Extraction in Videos. Multimed Tools Appl 81, 4909–4934 (2022). https://doi.org/10.1007/s11042-021-11466-y

DOI: https://doi.org/10.1007/s11042-021-11466-y