Abstract

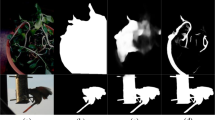

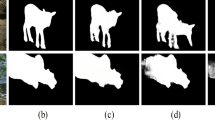

We combine the gating-based SNet with PoolNet to address salient object detection. Our network is built on FPN, a classic U-Net-style backbone. Inspired by PoolNet, we introduce a global feature guidance module: by aggregating high-level semantic information into the transposed-convolution stages at different scales, the network uses high-level semantic features more effectively. Although introducing global information effectively improves saliency detection, how best to aggregate global and local features across scales still needs further exploration. Inspired by SNet, we also integrate an SNet module into our network. During feature fusion, the feature values of different channels are weighted, with each channel assigned its own weight, so that the more important semantic information is extracted from the multiple channels and the key semantic information in the feature map is retained. Compared with current typical methods, we find that the SNet module reduces erroneous regions in the saliency map and further improves its completeness. In previous methods, differences in color contrast and texture between regions of the same object produce inconsistent saliency within that object. Our method effectively addresses this problem and generates consistent saliency probabilities across the same object. We evaluate our network quantitatively against 15 existing methods, including state-of-the-art ones. Our network processes 300 × 267 images at more than 11 FPS, a mid-range speed compared with the most advanced networks, such as DGRL, PiCANet and PoolNet. The precision-recall curves show that our network performs well on the DUT-O, DUT-S and ECSSD datasets, with minimum precision above 0.47 on all three. False-positive predictions of salient objects are few, and the overall performance of the model is good.
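The channel weighting described above can be illustrated with a minimal, dependency-free sketch. The abstract does not give the exact form of the gate, so everything here is an assumption for illustration: the squeeze-then-gate structure (global average pooling, a small bottleneck, a sigmoid), the element-wise sum used to merge local and global features, and the names `channel_gates`, `gated_fusion`, `w_reduce` and `w_expand`. Feature maps are plain nested lists of shape (channels, height, width).

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def channel_gates(features, w_reduce, w_expand):
    """Return one weight in (0, 1) per channel (assumed gate design)."""
    # Squeeze: global average pooling gives one scalar per channel.
    squeezed = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in features]
    # Gate: bottleneck layer with ReLU, then a sigmoid per channel.
    hidden = [max(0.0, h) for h in matvec(w_reduce, squeezed)]
    return [sigmoid(g) for g in matvec(w_expand, hidden)]


def gated_fusion(local_feat, global_feat, w_reduce, w_expand):
    """Merge two same-shaped feature maps, then reweight each channel."""
    # Assumption: local and global features are merged by element-wise sum.
    fused = [[[a + b for a, b in zip(row_a, row_b)]
              for row_a, row_b in zip(ch_a, ch_b)]
             for ch_a, ch_b in zip(local_feat, global_feat)]
    gates = channel_gates(fused, w_reduce, w_expand)
    # Scale every value in a channel by that channel's gate.
    gated = [[[g * v for v in row] for row in ch]
             for g, ch in zip(gates, fused)]
    return gated, gates


# Toy input: 2 channels of size 2 x 2, with hand-picked (not learned) weights.
local_feat = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 0.0]]]
global_feat = [[[1.0, 0.0], [1.0, 0.0]], [[0.5, 0.5], [0.5, 0.5]]]
w_reduce = [[0.5, -0.5]]    # 2 channels -> 1 hidden unit
w_expand = [[1.0], [-1.0]]  # 1 hidden unit -> 2 channels

gated, gates = gated_fusion(local_feat, global_feat, w_reduce, w_expand)
print(gates)
```

In this toy case the first channel has the larger average response and receives the larger gate, so its values are preserved more strongly than those of the second channel. In a trained network the two weight matrices would be learned rather than fixed by hand.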

Acknowledgements

We thank the National Natural Science Foundation of China (No. 71471174) and the National Defence Pre-research Foundation (No. 41412040304) for their technical support.

About this article

Cite this article

Ning, L., Jincai, H. & Yanghe, F. Construction of multi-channel fusion salient object detection network based on gating mechanism and pooling network. Multimed Tools Appl 81, 12111–12126 (2022). https://doi.org/10.1007/s11042-021-11031-7

DOI: https://doi.org/10.1007/s11042-021-11031-7