Abstract

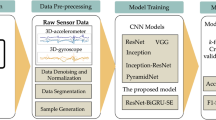

Activity recognition is the task of classifying data from different types of sensors into one of a set of predefined activity classes. The most widely used sensors in this area are inertial sensors such as accelerometers and gyroscopes. The convolutional neural network (CNN), one of the most successful deep learning methods, has recently attracted much attention for activity recognition. In a CNN, 1D kernels capture local dependency over time in a series of observations measured by inertial sensors (3-axis accelerometers and gyroscopes), while 2D kernels additionally capture the dependency between signals from different axes of the same sensor and across different sensors. Most convolutional neural networks used for recognition tasks are built from convolution and pooling layers followed by a few fully connected layers, but large and deep neural networks have high computational costs. In this paper, we propose a new architecture that consists solely of convolutional layers and find that removing the pooling layers and instead adding strides to the convolution layers decreases the computation time notably, while the model performance does not change or, in some cases, even improves. We also investigate both 1D and 2D convolutional neural networks with and without pooling layers and compare their performance with each other and with some methods based on hand-crafted features. The third point discussed in this paper is the impact of applying the fast Fourier transform (FFT) to the inputs before training the learning algorithm; we show that this preprocessing enhances model performance. Experiments on benchmark datasets demonstrate the high performance of the proposed 2D CNN model with no pooling layers.
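The two ideas in the abstract, replacing pooling with strided convolution and applying the FFT to the inputs, can be illustrated with a small NumPy sketch. This is not the authors' architecture or code. The window length of 128 samples, the kernel width of 5, the random data, and the helper names `conv1d`, `max_pool1d`, and `fft_magnitude` are all assumptions made for illustration.

```python
import numpy as np


def conv1d(x, kernel, stride=1):
    """Valid 1D cross-correlation of x with kernel, evaluated every `stride` samples."""
    k = len(kernel)
    n_out = (len(x) - k) // stride + 1
    return np.array(
        [np.dot(x[i * stride : i * stride + k], kernel) for i in range(n_out)]
    )


def max_pool1d(x, size=2):
    """Non-overlapping max pooling; a trailing remainder shorter than `size` is dropped."""
    n_out = len(x) // size
    return x[: n_out * size].reshape(n_out, size).max(axis=1)


def fft_magnitude(window):
    """One-sided magnitude spectrum of a real-valued window."""
    return np.abs(np.fft.rfft(window))


rng = np.random.default_rng(0)
signal = rng.standard_normal(128)  # hypothetical: one accelerometer axis, 128 samples
kernel = rng.standard_normal(5)    # hypothetical: a 1D kernel of width 5

# Conventional block: stride-1 convolution (124 kernel evaluations), then pooling by 2.
pooled = max_pool1d(conv1d(signal, kernel, stride=1), size=2)

# Pooling-free block: stride-2 convolution (62 kernel evaluations), no pooling step.
strided = conv1d(signal, kernel, stride=2)

# FFT preprocessing: the network would see this spectrum instead of the raw window.
spectrum = fft_magnitude(signal)

print(pooled.shape, strided.shape, spectrum.shape)  # (62,) (62,) (65,)
```

Both blocks reduce the 128-sample window to 62 values, but the strided version evaluates the kernel at half as many positions and skips the pooling pass. That is the kind of saving the abstract refers to, although the sketch says nothing about accuracy.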

Cite this article

Gholamrezaii, M., AlModarresi, S. A time-efficient convolutional neural network model in human activity recognition. Multimed Tools Appl 80, 19361–19376 (2021). https://doi.org/10.1007/s11042-020-10435-1

DOI: https://doi.org/10.1007/s11042-020-10435-1