Abstract

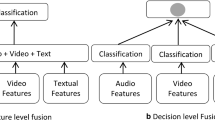

Due to the enormous amount of multimodal content available on the social web and its applications, automatic sentiment analysis and emotion detection have become important and widely researched topics. Improving the quality of multimodal fusion is a key issue in this field of research. In this paper, we present a novel attention-based multimodal contextual fusion strategy that extracts the contextual information among utterances before fusion. We first fuse the modalities two at a time and then fuse all three modalities. A bidirectional LSTM with an attention model is used to extract important contextual information among the utterances. The proposed model was evaluated on the IEMOCAP dataset for emotion classification and on the CMU-MOSI dataset for sentiment classification. By incorporating the contextual information among utterances in the same video, the proposed method outperforms existing methods by over 3% in emotion classification and by over 2% in sentiment classification.
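The pipeline described in the abstract (contextual attention over the utterances of a video, fusion of modalities two at a time, then fusion of all three) can be illustrated with a minimal, dependency-free Python sketch. This is not the authors' implementation: the bidirectional LSTM is omitted and the raw per-utterance feature vectors stand in for its hidden states, the dot-product attention scoring and concatenation-based fusion are assumptions chosen for brevity, and the function names (`attend`, `fuse`, `hierarchical_fusion`) are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max score for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def attend(states: List[Vector]) -> List[Vector]:
    """For each utterance, build an attention-weighted summary of all
    utterances in the same video and append it to that utterance's vector.

    Assumption: `states` stands in for BiLSTM outputs (one vector per
    utterance); dot-product scoring is an illustrative choice."""
    out = []
    for query in states:
        weights = softmax([dot(query, key) for key in states])
        context = [sum(w * s[d] for w, s in zip(weights, states))
                   for d in range(len(query))]
        out.append(query + context)
    return out


def fuse(*modalities: List[Vector]) -> List[Vector]:
    # Hypothetical fusion: concatenate the modalities utterance by utterance,
    # then extract context again over the fused sequence.
    fused = [sum(parts, []) for parts in zip(*modalities)]
    return attend(fused)


def hierarchical_fusion(text: List[Vector], audio: List[Vector],
                        video: List[Vector]) -> List[Vector]:
    # Stage 1: contextual features per modality (raw features stand in for
    # BiLSTM hidden states in this sketch).
    t, a, v = attend(text), attend(audio), attend(video)
    # Stage 2: fuse the modalities two at a time.
    ta, av, tv = fuse(t, a), fuse(a, v), fuse(t, v)
    # Stage 3: fuse the three bimodal representations.
    return fuse(ta, av, tv)


if __name__ == "__main__":
    # Toy video with three utterances and 2-dimensional features per modality.
    text = [[0.1, 0.2], [0.3, 0.1], [0.0, 0.5]]
    audio = [[0.4, 0.0], [0.2, 0.2], [0.1, 0.1]]
    video = [[0.0, 0.3], [0.5, 0.5], [0.2, 0.0]]
    fused = hierarchical_fusion(text, audio, video)
    print(len(fused), len(fused[0]))  # 3 96
```

Each `attend` call doubles the vector width (utterance vector plus its attention context), so 2-dimensional inputs grow to 96 dimensions per utterance after the three stages; a trained model would instead use learned projections to keep the fused representation compact before classification.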

Change history

20 February 2021

A Correction to this paper has been published: https://doi.org/10.1007/s11042-021-10591-y

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this article was revised: Equation 18 contains an error.

About this article

Cite this article

Huddar, M.G., Sannakki, S.S. & Rajpurohit, V.S. Attention-based multimodal contextual fusion for sentiment and emotion classification using bidirectional LSTM. Multimed Tools Appl 80, 13059–13076 (2021). https://doi.org/10.1007/s11042-020-10285-x

DOI: https://doi.org/10.1007/s11042-020-10285-x