Abstract

The use of computer simulation to understand how human faces age has been a growing area of research for decades. It has been applied to the search for missing children as well as to entertainment, cosmetics and dermatology research. Our objective is to build a model of the age-related changes in the visual cues that affect the perception of age, so that we may better predict them. Traditional approaches based on the Active Appearance Model (AAM) tend to blur appearance and wipe out texture details such as wrinkles. We introduce the Wrinkle Oriented Active Appearance Model (WOAAM), in which a new channel dedicated to analyzing wrinkles is added to the AAM. First, we propose to represent both the shape and texture of each wrinkle on a face by a compact and interpretable vector. Then, to model the distribution of wrinkles on a face, we introduce a new way to approximate an empirical joint probability density by an ensemble of joint probability densities estimated by Kernel Density Estimation. Finally, we show how to draw new samples from such an ensemble of densities, and thus synthesize new plausible wrinkles. In contrast to other methods, which add wrinkles at the post-processing level, our method fully integrates them in the AAM. As a result, the generated wrinkles are statistically representative of a specific age in terms of number, length, shape and intensity. Using an age estimation Convolutional Neural Network, we found that age-progressed faces produced by the WOAAM reduce the gap between the expected age and the estimated age more than those produced by a classic AAM.

1 Introduction

Age progression has been an ever-growing field for several decades. It has been applied to the search for missing children [28, 30], entertainment [32], cosmetics [1, 3] and dermatology research [1, 24]. In such applications, artificial facial aging must consider age-related morphological changes as well as modifications of skin appearance in order to provide realistic results. The most dramatic change of the face with age is morphological and results from facial growth; it occurs from birth to early adulthood [8]. Another age-related morphological modification concerns facial volumes, which change with variations in fat distribution throughout life, from birth to late adulthood [7]. During adulthood, facial skin also undergoes dramatic changes with age, including wrinkling, sagging and an increase in pigmented irregularities [39]. All these age-related skin features are key to the perception of facial age in adults [5, 9, 22, 27]. Our objective is to build a model of the age-related changes in the visual cues that affect age perception on older women's faces, in order to better predict them. As we will see in the next section, many age progression methods change shape and appearance without incorporating specific aging signs such as wrinkles.

For this reason, we propose WOAAM (Wrinkle Oriented Active Appearance Model): we base our work on the Active Appearance Model to simulate facial aging (Section 2.1), to which we add a specific channel to analyze and synthesize wrinkles (Sections 2.2 and 2.3), before explaining the computation of an aging trajectory (Section 2.4). Afterwards, we show images resulting from the aging and rejuvenating of faces (Section 3.1) and, finally, show that this approach increases/decreases perceived age more precisely than the unmodified Active Appearance Model, as measured with an age estimation Convolutional Neural Network (Section 3.2).

1.1 Related works

Given the diversity of potential applications of facial aging and the growing variety of computer vision techniques, many methods have been developed in recent decades [10, 21, 36].

Ramanathan and Chellappa [23] propose a craniofacial growth model to analyze shape variations due to age for children under 18 years of age. Shapes are defined by a set of facial landmarks, and a model of facial deformation for aging during childhood is introduced. Faces are then warped according to the deformation model to rejuvenate or age them. This model allows them to estimate an age from a face and to simulate the face aging process for children. It only accounts for shape variations, because shape is considered the principal source of variation from birth to adolescence.

When elaborating a model for facial aging during adulthood, in addition to shape, texture changes also need to be considered. The work of Lanitis et al. [16, 17] is the first to use Active Appearance Model on age progression. They use AAM to create a subspace modeling both texture and shape variations of faces. Regression of coordinates from this newly created space on age indicates the direction of facial aging. Finally, they can project a new face in this subspace, translate it in the face aging direction and reconstruct a shape and texture to obtain an aged appearance. Nevertheless, AAM-based age progression is known to produce a blurry texture because wrinkles and spots are never perfectly aligned between people.

Facing this problem, more recent approaches [4, 35] use AAM to produce appearance and shape, and add a post-processing step on appearance to superimpose patches of high-frequency details. While faces produced are plausible, details added are not statistically learned for age progression, as texture patches that contain details are chosen with a similarity measure, and not with respect to a precise age.

Jinli Suo et al. [13] divide faces into several patches to create an And-Or graph containing every patch at five age intervals, spaced over ten years. And nodes represent different parts of the face, whereas Or nodes represent the different realizations of these parts for the population in every age group. They use a first-order Markov chain to model the aging of parts of the face. Wrinkles are annotated and each of their properties (number, length, position...) is modeled by a Poisson distribution. Artificial aging is obtained by decomposing a face from age group t into an And-Or graph Gt and sampling the probability p(Gt+1 | Gt) with the Gibbs sampling algorithm; the graph Gt+1 can then be collapsed to generate a new face.

Another approach creates a prototype [5, 26], an average face computed from faces within a constrained age group, meant to represent the typical features of this group. A younger face can then be warped into the mean shape, and the prototype blended with the texture of the younger face to make it look older. Prototype-based methods suffer from the same problem as AAM-based methods: averaging faces blurs out every non-aligned high frequency detail. Tiddeman et al. [33, 34] propose to add a post-processing step to enhance high frequency information on the average face. They extract fine details with a wavelet decomposition [33] of every face and add them to the final average face, with a parameter σ controlling the level of detail to transfer. In [34], they combine wavelet decomposition with a Markov Random Field to regenerate fine details on the average face, which produces more realistic results. Although they show more wrinkles, the final results are neither completely realistic nor completely oriented towards facial aging, as the generated details are not chosen with respect to age.

Shu et al. [29] propose to encode aging pattern of faces in age-group specific dictionaries. Every two neighboring dictionaries are learned jointly taking into consideration extra personalized facial characteristics, e.g. mole, which are invariant in the aging process. However, faces produced are still blurry and no wrinkles appear, even for long-term aging (+ 40 years).

Promising approaches [2, 20, 37, 38, 40] propose to use Deep Neural Networks to produce aged faces.

Antipov et al. [2] propose age conditional Generative Adversarial Network (acGAN). Generative Adversarial Networks (GAN) are known to produce images with sharper textures because the reconstruction metric is not defined in the pixels space, but in the latent variables space. They combine a GAN with a face recognition neural network to preserve identity during reconstruction and aging.

Wang et al. [37, 38] introduce a Recurrent Face Aging (RFA) framework using a Recurrent Neural Network which takes as input a single image and automatically outputs a series of aged faces.

Zhang et al. [40] presented Conditional Adversarial Autoencoder (CAAE). They use an Autoencoder combined with 2 discriminators working on latent variables and output images to impose photo-realistic results. The first discriminator Dz imposes latent variables z to be uniformly distributed to avoid “holes” in the latent space, and thus to produce a smooth age progression. The second discriminator Dimg, inspired by the GAN architecture, discriminates between real images and generated images, and its loss is used to improve the photo-realism of pictures. Age progression is achieved by regressing the latent variables with respect to age.

However, age progression algorithms based on neural networks can, in some cases, produce unrealistic faces (e.g., the two eyes of a reconstructed face can have different shapes). In addition, many of these algorithms work on low resolution faces, at most 128 × 128 [2, 20, 40]. As such faces are too small to show fine details, these face aging systems cannot generate faces with fine wrinkles.

In addition to facial aging, many applications aim to estimate age from faces.

Early work was carried out by Kwon and Lobo [14, 15]; they computed several distance ratios between landmarks at specific locations on faces to distinguish between 3 age classes: babies, young adults, and seniors.

Lanitis et al. [17, 18] proposed to obtain a compact parametric description of face images using Active Appearance Model and to use this description to estimate ages. Shapes are normalized with Procrustes Analysis and parametrized with Principal Component Analysis. Thereafter, faces are warped in the mean shape before being also parametrized with Principal Component Analysis. Shape and appearance parameters are then concatenated and a third Principal Component Analysis is performed. Finally, the authors tested a range of classifiers and regressions like linear regression, quadratic regression, cubic regression, and artificial neural network.

Guo et al. [11] proposed the Biologically Inspired Features. Face images are first convolved with several Gabor kernels, extracting specific details in terms of scale and orientation. Secondly, the result undergoes a max pooling that compensates for small translations and small rotations. Finally, the pooled feature is used with Support Vector Machines to estimate age with a low Mean Absolute Error.

Recent uses of deep convolutional neural networks have demonstrated great performance and robustness on big datasets with large variations in pose and illumination. Rothe et al. [25] proposed to use the ConvNet VGG-16 [31] pretrained on the ImageNet database for image classification. Thereafter, they finetuned it with a database of 500k celebrity faces to estimate biological age. Finally, they finetuned it again on the database of the ChaLearn LAP 2015 challenge which they won.

In view of the current state of the art and our constraints, we base our work on the Active Appearance Model to simulate facial aging (Section 2.1), to which we add a specific channel to fully integrate wrinkles (Section 2.2); in this subspace, the computed aging trajectories take into account shape, appearance and wrinkles, unlike other methods, which use a classic AAM and add a post-processing step to include wrinkles.

Afterwards, we detail how to synthesize aged faces from our new wrinkle oriented AAM (Section 2.3) before explaining the computation of an aging trajectory (Section 2.4).

Finally, we study the quality of our aging system by presenting images resulting from the aging and rejuvenating of faces (Section 3.1). Then, using an age estimation convolutional neural network, we show that this approach increases/decreases perceived age more precisely than the unmodified Active Appearance Model (Section 3.2).

To analyze faces in the light of facial aging, we propose 3 contributions.

The first contribution is the parametrization of each wrinkle where shape and texture are represented altogether by a very understandable 7-length vector. Conversely, such a vector can be used to produce a wrinkle in shape and texture just from parameters.

To represent a group of wrinkles in one facial zone, we propose an approximation of an arbitrary joint probability of n random variables, as the set of every joint probability for every random variable taken two at a time; that is our second contribution.

Our third and last contribution is a new method of sampling for our approximated density mentioned above.

2 Proposed method

We propose a parametric model based on an Active Appearance Model (AAM) able to project a face into a latent space integrating high frequency facial details such as wrinkles. The face is then translated in this latent space along a direction identified as an aging direction, and reconstructed to synthesize an aged face.

We will firstly describe AAM (Section 2.1), before explaining how to integrate high-frequency details like wrinkles (Section 2.2) and synthesize them (Section 2.3). Finally, we will present how to identify an aging trajectory in the latent space and use it to make a face look younger/older (Section 2.4).

2.1 Active appearance model

Active Appearance Model [6] is a statistical model which creates a subspace modelling appearance and shape variations in an annotated dataset of faces.

For shape, we put landmarks on key points, and afterwards, a Procrustean analysis is performed to align shapes on the mean shape using translation, rotation and homothety. Appearance information is then computed by warping every image into the mean shape, using each individual annotation.
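As an illustration of the alignment step, the following minimal sketch aligns one landmark set onto a reference shape with a translation, a rotation and a uniform scale (homothety). It is not the authors' implementation: the function name `align_to_reference` is hypothetical, the rotation is obtained with the standard SVD solution of the orthogonal Procrustes problem, and a full Procrustes analysis would repeat this step for every shape while re-estimating the mean shape.

```python
import numpy as np

def align_to_reference(shape, reference):
    """Similarity-align `shape` (k x 2 landmarks) onto `reference` (k x 2 landmarks)."""
    mu_s, mu_r = shape.mean(axis=0), reference.mean(axis=0)
    s0, r0 = shape - mu_s, reference - mu_r            # remove the translation
    # Optimal rotation from the SVD of the cross-covariance matrix.
    u, sing, vt = np.linalg.svd(s0.T @ r0)
    d = np.sign(np.linalg.det(u @ vt))                 # -1 would mean a reflection
    rot = u @ np.diag([1.0, d]) @ vt                   # proper rotation only
    # Least-squares homothety factor for the rotated shape.
    scale = (sing * np.array([1.0, d])).sum() / (s0 ** 2).sum()
    return scale * s0 @ rot + mu_r
```

Applied to a rotated, scaled and shifted copy of the reference, the function is expected to return the reference itself, which is a convenient sanity check before running it on real annotations.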

After that, according to the AAM algorithm, Principal Component Analysis (PCA) is carried out separately for shape and appearance, and a final PCA is made on the concatenation of shape weights and appearance weights. This creates a subspace, which models variations present in the dataset of shape and appearance (see Fig. 1). Because the PCA has the advantage of being perfectly invertible, we can reconstruct a shape and an appearance from any point in the newly created subspace.
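The chain of PCAs and its invertibility can be sketched as follows. This is only an illustration on random data: the array sizes and the helper names (`fit_pca`, `project`, `reconstruct`) are assumptions, all components are kept so that reconstruction is exact, and the usual AAM weighting between shape and appearance weights is omitted.

```python
import numpy as np

def fit_pca(data):
    """Return (mean, components), with principal axes stored as rows."""
    mean = data.mean(axis=0)
    # The right singular vectors of the centered data are the principal axes.
    _, _, vt = np.linalg.svd(data - mean, full_matrices=False)
    return mean, vt

def project(x, mean, components):
    return (x - mean) @ components.T

def reconstruct(weights, mean, components):
    return weights @ components + mean

rng = np.random.default_rng(0)
shapes = rng.normal(size=(20, 6))      # hypothetical: 20 faces, 3 landmarks (x, y)
textures = rng.normal(size=(20, 12))   # hypothetical: 20 shape-normalized textures

shape_mean, shape_pcs = fit_pca(shapes)
tex_mean, tex_pcs = fit_pca(textures)
# Final PCA on the concatenation of shape weights and appearance weights.
combined = np.hstack([project(shapes, shape_mean, shape_pcs),
                      project(textures, tex_mean, tex_pcs)])
comb_mean, comb_pcs = fit_pca(combined)
```

Going back from the final subspace is then a matter of calling `reconstruct` with the final PCA, splitting the result into shape and appearance weights, and calling `reconstruct` again for each channel.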

2.2 Analyzing wrinkles

As mentioned in [4], aged faces produced by AAM will always seem blurry. This is because high frequency details, like wrinkles, must be perfectly aligned between faces for the PCA to capture their variations and thus to reconstruct them.

We propose a new framework (Fig. 2), first to represent each wrinkle by a compact vector (Section 2.2.1), and after that to represent all wrinkles on a face by a feature vector which is robust enough for PCA and thus able to still retain wrinkle information after PCA reconstruction (Section 2.2.2).

2.2.1 Wrinkle model

We propose a separate model to analyze the shape and texture variations of wrinkles.

First, wrinkles are annotated with 5 points for each wrinkle. Afterwards, these 5 points are transformed into more explainable pose parameters containing:

- center (cx, cy) of the wrinkle
- length ℓ, equal to the geodesic distance between the first and last annotation points
- angle a in degrees
- curvature \(\mathcal{C}\), computed as the least squares minimization of

$$ \min_{\mathcal{C}} \parallel Y - \mathcal{C}X^{2} \parallel_{2}^{2} \qquad (1) $$

with Y (resp. X) the ordinates (resp. abscissas) of the wrinkle centered on the origin, and with the first and last points horizontally aligned.

Here we have transformed the shape of a wrinkle into a 5-length vector \((c_{x}, c_{y}, \ell , a, \mathcal {C})\).
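The conversion from annotation points to shape parameters can be sketched as below. Several choices are assumptions made for the example and are not stated in the text: the center is taken as the middle annotation point, the angle is that of the chord joining the first and last points, and the length is measured along the annotated polyline. The curvature uses the closed-form least squares solution of Eq. 1, \(\mathcal{C} = \sum x^{2} y / \sum x^{4}\).

```python
import math

def wrinkle_pose(points):
    """points: list of (x, y) annotation points ordered along the wrinkle."""
    (x0, y0), (x1, y1) = points[0], points[-1]
    # Length: distance travelled along the annotated polyline.
    length = sum(math.dist(p, q) for p, q in zip(points, points[1:]))
    # Angle of the chord joining the first and last points, in degrees.
    angle = math.degrees(math.atan2(y1 - y0, x1 - x0))
    # Center: assumed here to be the middle annotation point.
    cx, cy = points[len(points) // 2]
    # Rotate by -angle around the center so that the end points are horizontally aligned.
    c, s = math.cos(math.radians(-angle)), math.sin(math.radians(-angle))
    local = [((x - cx) * c - (y - cy) * s, (x - cx) * s + (y - cy) * c)
             for x, y in points]
    # Closed-form least squares for Y ~ C * X^2.
    num = sum(x * x * y for x, y in local)
    den = sum(x ** 4 for x, _ in local)
    curvature = num / den if den else 0.0
    return cx, cy, length, angle, curvature
```

For five points sampled on the parabola y = 0.1 x² and shifted to (10, 20), the function returns a center of (10, 20), an angle of 0 degrees and a curvature of 0.1.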

In addition, the texture of each wrinkle is extracted by drawing a bounding box around the annotation and keeping only the high frequency information with a Difference of Gaussians (see Fig. 3). Here we blur the texture with parameter σb = 6 and subtract the blurred result from the untouched texture, which acts as a high-pass filter and extracts the wrinkles. This filter has the advantage that the original image can be reconstructed perfectly by simply summing its low and high frequency versions. As the wrinkle is high frequency information, we keep only the high frequency image and drop the low frequency version, which contains skin color.
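The frequency split described above can be sketched with a separable Gaussian blur. The helper names, the kernel radius of 3σ and the edge-replicating border handling are assumptions made for this example; only the value σb = 6 comes from the text.

```python
import numpy as np

def gaussian_kernel(sigma):
    radius = int(3 * sigma)                      # assumed truncation at 3 sigma
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def blur(image, sigma):
    """Separable Gaussian blur of a 2-D grayscale array, with edge replication."""
    k = gaussian_kernel(sigma)
    padded = np.pad(image, len(k) // 2, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")

def split_frequencies(image, sigma_b=6.0):
    """Return (low, high) such that low + high equals the input image."""
    low = blur(image, sigma_b)
    high = image - low                           # high-pass residual holding the wrinkles
    return low, high
```

Because the high frequency image is defined as the residual of the blur, summing both parts restores the input exactly, whatever the blur parameters are.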

After that, the wrinkle appearance is warped into the mean shape and then transformed into pose parameters. A second derivative Lorentzian function (Eq. 2) is fitted to each column, and the average of every parameter found by the fitting is kept (Fig. 4).

where μ and σ are respectively the location and scale of the second derivative Lorentzian function, and A and o are tweaking parameters used to adjust the curve. Only A and σ are kept, to characterize respectively the depth and width of wrinkles.

Thus, we have constructed a model able to transform a wrinkle into a set of 7 understandable parameters \((c_{x}, c_{y}, \ell , a, \mathcal {C}, A, \sigma )\), 5 for shape and 2 for appearance. As a side note, other pose parameters could have been computed. Taking the curvature parameter \(\mathcal {C}\) as the minimizer of (Eq. 1) implicitly models wrinkle shapes as second order polynomials. For a more accurate but more complex model, third or fourth order polynomials, or any parametric curve, could be used. Also, concerning the appearance pose parameters, our model implicitly defines wrinkles as having uniform intensity and width. Instead of taking the average parameters (A, σ), several parameters (Ai, σi) could have been taken at different locations along each wrinkle.

2.2.2 Robust feature

The objective remains to obtain a representation of wrinkles for each face and to analyze them by applying PCA. As people have different numbers of wrinkles, we cannot just compute parameters for each wrinkle in a face and concatenate them to create a fixed-length representation usable with PCA. We have to find a fixed-length representation vector of wrinkles for each face.

We propose to estimate the probability density modeling the structure of wrinkles for each face and each zone.

Using the system introduced in Section 2.2.1, each wrinkle is represented by a 7-length vector. We divide faces into 15 zones (forehead, nasolabial folds, chin, cheeks…), aiming to compute a joint probability P(d1,…, d7) of wrinkles for each zone of each face. Unfortunately, such joint probabilities can have a very large memory footprint, as the memory size of a density grows exponentially with its dimensionality. To circumvent this problem, we propose to approximate an arbitrary joint probability of n random variables by the set of joint probabilities of the random variables taken two at a time (Fig. 5). More precisely, we propose to approximate P(d1,…, dn) by the set {P(d1, d2), P(d1, d3),…, P(dn−1, dn)}. As a result, when the number of dimensions n grows, working memory no longer grows exponentially but quadratically, as \({\Theta }(\frac {n(n-1)}{2})\).
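To give an order of magnitude for this saving, the short sketch below counts the cells needed for n = 7 wrinkle parameters, assuming that every axis is discretized into 60 bins (the grid size used for the 60x60 densities). The function name `pairwise_layout` is hypothetical.

```python
from itertools import combinations

def pairwise_layout(n_vars, bins):
    """Enumerate variable pairs and compare storage of the full joint vs. the pairwise set."""
    pairs = list(combinations(range(n_vars), 2))   # (d1, d2), (d1, d3), ..., (dn-1, dn)
    full_cells = bins ** n_vars                    # one n-dimensional density
    pairwise_cells = len(pairs) * bins ** 2        # n(n-1)/2 two-dimensional densities
    return pairs, full_cells, pairwise_cells

pairs, full_cells, pairwise_cells = pairwise_layout(n_vars=7, bins=60)
print(len(pairs), full_cells, pairwise_cells)      # 21 2799360000000 75600
```

Under these assumptions, the 21 pairwise densities hold 75,600 cells in total, against roughly 2.8 × 10¹² cells for the full 7-dimensional density.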

Joint probabilities are computed by Kernel Density Estimation (KDE) with a Gaussian kernel of standard deviation σkde = 1.5 for 60x60 densities. The parameter σkde controls the tradeoff between an accurate representation of wrinkles, with a low σkde, and generalization, with a higher σkde.
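A minimal sketch of one such 2-D density is given below. It assumes that the two parameters have already been rescaled to grid units in [0, 60), and it normalizes the density so that it sums to one; both points are assumptions, as the text does not specify the rescaling or the normalization. The name `kde_2d` is hypothetical.

```python
import numpy as np

def kde_2d(xs, ys, bins=60, sigma=1.5):
    """Gaussian KDE on a bins x bins grid for samples given in grid units."""
    xs, ys = np.asarray(xs, dtype=float), np.asarray(ys, dtype=float)
    if xs.size == 0:
        return np.zeros((bins, bins))
    grid = np.arange(bins)
    # One separable Gaussian bump per sample, evaluated on each axis.
    gx = np.exp(-0.5 * ((grid[None, :] - xs[:, None]) / sigma) ** 2)
    gy = np.exp(-0.5 * ((grid[None, :] - ys[:, None]) / sigma) ** 2)
    density = gx.T @ gy          # density[i, j] = sum_k gx[k, i] * gy[k, j]
    return density / density.sum()
```

With a single sample at (39, 41), the density peaks at cell (39, 41); increasing `sigma` spreads each bump over more cells, which is the generalization effect mentioned above.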

Thus, for one face, we propose to extract a vector containing, for each of the 15 zones:

- the number of wrinkles nw in the current zone,
- the average wrinkle,
- the densities computed with KDE on the wrinkles from which the average wrinkle was subtracted,

and to concatenate all 15 vectors to create the representation of wrinkles in one face.
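The assembly of the fixed-length vector for one zone can be sketched as follows. The density estimator is passed in as a callable `density_fn(x, y, bins)`, a hypothetical interface chosen for the example so that any 2-D estimator (such as a KDE) can be plugged in; the handling of a zone without wrinkles is also an assumption.

```python
import numpy as np
from itertools import combinations

def zone_feature(wrinkles, density_fn, bins=60):
    """wrinkles: (n_w, 7) array of wrinkle parameters for one zone of one face."""
    wrinkles = np.asarray(wrinkles, dtype=float).reshape(-1, 7)
    n_w = len(wrinkles)
    mean = wrinkles.mean(axis=0) if n_w else np.zeros(7)
    centered = wrinkles - mean                       # subtract the average wrinkle
    # One flattened 2-D density per pair of parameters (21 pairs for 7 parameters).
    densities = [density_fn(centered[:, i], centered[:, j], bins).ravel()
                 for i, j in combinations(range(7), 2)]
    return np.concatenate([[n_w], mean, *densities])
```

Whatever the number of wrinkles in the zone, the result has 1 + 7 + 21 · bins² entries, so the vectors of the 15 zones can be concatenated into a representation of constant length across faces.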

2.3 Synthesizing wrinkles

We now have a representation of wrinkles that we are able to incorporate in the classic AAM, as seen in Fig. 2. PCA being perfectly invertible, we can reconstruct a shape, an appearance and a wrinkle representation vector from any point in the final PCA space. However, we must define how to generate wrinkles from our wrinkle representation vector.

We propose a new sampling method able to extract plausible wrinkles from our wrinkle representation vector, which is composed of joint probabilities. The core of the algorithm is to build a point iteratively, dimension after dimension, whose projection in each density is above a probability threshold; the threshold is progressively decreased from 0.9 to 0.1 to find the best candidate. The precise algorithm is given in the Appendix.

First of all, peaks are found in P(cx, cy), and the function Sample is called for each peak, with the peak coordinates as parameters px and py, from the peak with the highest probability to the lowest.

We now present a step-by-step run of the function Sample for a given peak (39,41). A vector p = (39,41,0,0,0,0,0) is created, which will contain the coordinates of the point built by the function (Fig. 6).

After that, the function Get_argmax_min extracts two 1-D densities, P(cx = 39, ℓ) and P(cy = 41, ℓ), applies the minimum operator element-wise to them, and finally finds the coordinate with the highest probability ii such that ii = argmax (min (P (cx = 39, ℓ), P(cy = 41, ℓ))) (Figs. 7 and 8). With p3 = ii, if P(p3) is below the reference Pref = 0.9, Pref is decreased to 0.8; otherwise the search for p4 begins with Pref still equal to 0.9 and p = (39,41, p3,0,0,0,0).

The two extracted red lines in Fig. 7 are the first two curves at the top; the third curve is the result of the element-wise minimum operator. The maximum is obtained for ℓ = 1.

Here, p3 = 1 and P(p3) = 0.52, so Pref is sequentially decreased from 0.9 to 0.8, then 0.7, then 0.6, and finally 0.5, where the value of P(p3) is accepted and the search for p4 begins with Pref equal to 0.5 and p = (39,41,1,0,0,0,0).
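The two elementary operations used in this walk-through can be sketched as below: the element-wise minimum followed by an argmax, and the stepwise lowering of the threshold. The function names and the density values in the usage lines are hypothetical (only the accepted value 0.52 and the thresholds come from the example above), and the backtracking part of the algorithm is deliberately left out.

```python
def argmax_min(slices):
    """Element-wise minimum of several 1-D densities, then the best index and its value."""
    floor = [min(values) for values in zip(*slices)]
    best = max(range(len(floor)), key=floor.__getitem__)
    return best, floor[best]

def accept_or_lower(value, p_ref_tenths):
    """Lower the threshold in steps of 0.1 until `value` passes it or 0.1 is reached.

    The threshold is stored as an integer number of tenths to avoid float drift.
    """
    while p_ref_tenths > 1 and value < p_ref_tenths / 10:
        p_ref_tenths -= 1
    return p_ref_tenths

# Hypothetical 1-D slices standing in for P(cx = 39, l) and P(cy = 41, l).
best, value = argmax_min([[0.2, 0.6, 0.3], [0.1, 0.52, 0.9]])
print(best, value, accept_or_lower(value, 9))   # 1 0.52 5
```

The printed threshold of 5 tenths corresponds to Pref = 0.5, the value at which P(p3) = 0.52 is accepted in the example.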

For p4, the same processing is applied to the three 1-D densities P(cx = 39, a), P(cy = 41, a) and P(ℓ = 1, a). With p4 found (Figs. 9 and 10), if P(p4) is below the reference Pref = 0.5, then backtracking starts: P(cx = 39, ℓ = 1) and P(cy = 41, ℓ = 1) are set to 0 and a new p3 has to be found; otherwise the search for p5 begins with p = (39,41,1, p4,0,0,0).

The three extracted red lines in Fig. 9 are the first three curves at the top; the fourth curve is the result of the element-wise minimum operator. The maximum is obtained for a = 25.

As the algorithm keeps running, more and more cases are explored to finally get a point p which maximizes probabilities in densities given the starting peak (px, py), and thus corresponds to a plausible wrinkle.

The wrinkle representation vector contains the number of wrinkles nw to generate, the average wrinkle and the densities. We can create the nw wrinkles parameters by running this algorithm nw times and adding them to the average wrinkle.

Afterwards, we simply have to produce the shape and texture of the wrinkles from their parameters (see Section 2.2.1 for the definition of these parameters).

Shape is created from \((c_{x}, c_{y}, \ell , a, \mathcal {C})\) by sampling the polynomial defined by the curvature \(\mathcal {C}\) until the specified geodesic length ℓ is reached. After that, the points composing the shape are rotated according to angle a, and finally the center (cx, cy) is added to the shape.
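A minimal sketch of this shape synthesis is given below. It assumes that the parabola y = C x² is sampled symmetrically around its apex, which is placed at the center (cx, cy), and that the arc length is accumulated with a fixed step in x; the function name and the `step` parameter are hypothetical.

```python
import math

def wrinkle_shape(cx, cy, length, angle_deg, curvature, step=0.01):
    """Sample y = curvature * x^2 around x = 0 until the requested arc length is reached."""
    half, x, travelled = [(0.0, 0.0)], 0.0, 0.0
    while travelled < length / 2:
        nx = x + step
        # Length of the segment between two consecutive samples of the parabola.
        travelled += math.hypot(step, curvature * (nx * nx - x * x))
        x = nx
        half.append((x, curvature * x * x))
    # Mirror the right half to obtain the left half, keeping the points ordered.
    local = [(-px, py) for px, py in reversed(half[1:])] + half
    # Rotate by the angle a, then add the center.
    c, s = math.cos(math.radians(angle_deg)), math.sin(math.radians(angle_deg))
    return [(cx + px * c - py * s, cy + px * s + py * c) for px, py in local]
```

With a zero curvature, a length of 4 and an angle of 90 degrees, the result is a vertical segment running approximately from (cx, cy - 2) to (cx, cy + 2), up to the sampling step.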

Texture is produced by creating an empty image and assigning to each column the values of a second derivative Lorentzian function (see Eq. 2) with parameters (A, σ).
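The sketch below illustrates this step with one possible form of the profile. The exact expression of Eq. 2 is not reproduced here, so the function used is an assumption: it is the second derivative of the Lorentzian 1 / (1 + u²), with u = (x − μ) / σ, rescaled so that its value at the center is −A. The sign convention (a dark valley at the center of the wrinkle) and all names are likewise hypothetical.

```python
def lorentzian_second_derivative(x, mu, sigma, amplitude, offset=0.0):
    """Assumed profile: amplitude * (3u^2 - 1) / (1 + u^2)^3 + offset, u = (x - mu) / sigma."""
    u = (x - mu) / sigma
    return amplitude * (3 * u * u - 1) / (1 + u * u) ** 3 + offset

def wrinkle_texture(height, width, amplitude, sigma):
    """Return a height x width image in which every column holds the same profile."""
    mu = (height - 1) / 2                       # valley centered vertically
    column = [lorentzian_second_derivative(r, mu, sigma, amplitude)
              for r in range(height)]
    return [[column[r]] * width for r in range(height)]
```

In this form, A sets the depth of the central valley and σ its width, which matches the role given to the two appearance parameters; a different normalization of Eq. 2 would only rescale these values.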

Finally, texture is warped in the newly created shape, for every wrinkle, and wrinkles are subsequently blended by merging the gradient of wrinkles with gradient of the underlying face (Fig. 11).

2.4 Aging trajectory

The final PCA subspace of the system (Fig. 2) models variations of faces in shape, appearance and wrinkles. Original pictures are projected into it, and can be back-projected and perfectly reconstructed as we keep all components. As we can see in Fig. 2, we drop the final PCA of the classic AAM (Fig. 1). As PCA is unsupervised, it could combine, on the same component, variations correlated with age and others uncorrelated with age, which would perturb the subsequent computation of trajectories. For this reason, we keep the first 3 PCAs, which reduce the dimensionality of our data and thus make the computation of trajectories possible, and drop the last PCA. As a consequence, the PCA weights W in the final subspace correspond to the concatenation of the PCA weights from the 3 channels: (Wshape, Wappearance, Wwrinkles). As our objective is to identify variations correlated with perceived age, we have to compute a regression f of the PCA weights W on perceived ages \(\mathcal {A}\). We chose a cubic polynomial regression to model facial aging, as this choice gave us the best results:

To make a face with a perceived age a look older/younger by y years, we have to project it on the final subspace to obtain weights Wcurrent, apply this formula:

with f−1(a) = Wmean,a, and reconstruct a new face from Wnew. As multiple different faces can match the same age, f−1(a) returns the average PCA weights Wmean,a of this specific age.

As in [17], we use a Monte Carlo simulation to invert f: we generate a large number of plausible weights W; the corresponding age for each weight w ∈ W is found by applying f(w), and f−1 is a lookup table where, for a given age a, f−1(a) is the average of all weights Wa ⊂ W such that f(Wa) = a.
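The Monte Carlo inversion and the resulting age shift can be sketched as follows. Three points are assumptions made for the example: predicted ages are rounded to the nearest year to build the lookup table, the age shift is applied as Wnew = Wcurrent + f−1(a + y) − f−1(a), which is the usual translation between mean weights, and the regression used in the usage note is a toy linear function rather than the cubic polynomial of the paper.

```python
import numpy as np

def build_inverse(f, sample_weights):
    """Lookup table: age -> mean of the sampled weights whose predicted age rounds to it."""
    ages = np.rint(f(sample_weights)).astype(int)
    return {int(a): sample_weights[ages == a].mean(axis=0) for a in np.unique(ages)}

def age_face(w_current, age, years, f_inv):
    """Assumed update: shift the weights by the difference between two mean weight vectors."""
    return w_current + f_inv[age + years] - f_inv[age]
```

As a usage example, with the hypothetical regression `toy_f = lambda w: 60 + 5 * w[:, 0]` and weights drawn from a standard normal distribution, `age_face(w, 60, 10, build_inverse(toy_f, samples))` moves the first weight by about 2, which raises the predicted age by about 10 years.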

3 Analyzing results

In this section, we first present examples of aged and rejuvenated faces resulting from our model (Section 3.1), and after that we quantify the correlation between age progressed faces and the perception of these faces by an independent age estimation algorithm (Section 3.2). We show that our system is better correlated with the perception of age than the classic AAM (Section 3.2.2).

Our database consists of photographs of 400 Caucasian women taken in 2014, in frontal pose, with a neutral expression and the same lighting (Fig. 12). All faces are resized to a 667 × 1000 resolution and annotated with 270 landmarks to locate eyebrows, eyes, mouth, nose, and facial contour. In addition, 5 landmarks are placed on each wrinkle. Each face has been rated by 30 untrained raters to obtain a precise perceived age; perceived ages in the dataset range from 43 to 85 years with an average of 69 years.

3.1 Qualitative results

As seen on Fig. 13, aging changes several known cues on a face [7, 8, 39].

Concerning shape, the size of the mouth is reduced, especially the height of the lower mouth; eyebrows and eyes are both reduced as well, and we can see facial sagging at the lower ends of the jaw.

Concerning appearance, the face globally becomes whiter and yellowish, eyebrows and eyelashes are less present, and the mouth loses its red color as aging progresses.

With aging, more wrinkles appear and existing wrinkles are deeper, wider and longer. As we can see, new wrinkles created by our system are plausibly located with realistic texture.

3.2 Quantitative results

3.2.1 Age estimation

As in [25], we employ a pre-trained VGG-16 CNN [31] to create a face representation less sensitive to pose and illumination: we feed in a picture in which the face has been cropped, and the representation is the output of block5_pool, the last pooling layer.

Afterwards, a Ridge regression is fitted with 40-fold cross-validation. As seen in Fig. 14, we obtain an R2 score of 0.92, with an average absolute error of 2.8 years and a maximum absolute error of 13.7 years. On the very same database, the average human rater estimates perceived age with an average absolute error of 5.5 years and a maximum absolute error of 17.1 years.
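The evaluation protocol can be sketched as below, using the closed-form ridge solution and out-of-fold predictions. This is not the authors' code: the regularization strength `alpha`, the random fold assignment and the function names are assumptions, and the features are left abstract (in the paper they are the CNN representations).

```python
import numpy as np

def ridge_fit(x, y, alpha):
    """Closed-form ridge regression with an unpenalized intercept."""
    x_mean, y_mean = x.mean(axis=0), y.mean()
    xc = x - x_mean
    w = np.linalg.solve(xc.T @ xc + alpha * np.eye(x.shape[1]), xc.T @ (y - y_mean))
    return w, y_mean - x_mean @ w

def cross_validated_predictions(x, y, folds=40, alpha=1.0, seed=0):
    """Predict every sample with a model trained on the other folds."""
    order = np.random.default_rng(seed).permutation(len(y))
    pred = np.empty(len(y))
    for test_idx in np.array_split(order, folds):
        train_idx = np.setdiff1d(order, test_idx)
        w, b = ridge_fit(x[train_idx], y[train_idx], alpha)
        pred[test_idx] = x[test_idx] @ w + b
    return pred

def scores(y, pred):
    """Return (R2, mean absolute error, maximum absolute error)."""
    err = np.abs(y - pred)
    r2 = 1 - ((y - pred) ** 2).sum() / ((y - y.mean()) ** 2).sum()
    return r2, err.mean(), err.max()
```

Computing the three scores on out-of-fold predictions only, as done here, keeps the reported errors from being optimistic, since no face is scored by a model that saw it during training.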

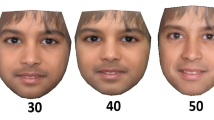

3.2.2 Comparison with prior works

For this experiment, we compare the perception of aged faces and the perception of rejuvenated faces for the Active Appearance Model (AAM) [17], the Conditional Adversarial Autoencoder (CAAE) [40] and our method, the Wrinkle Oriented Active Appearance Model (WOAAM). To test facial aging, we use faces with a perceived age of less than 60 years and, for rejuvenation, faces with a perceived age of 70 years or more. For AAM and WOAAM, each face is aged/rejuvenated 2 years at a time, and we compare, on average, the difference between the estimated and expected ages. For CAAE, each face is aged/rejuvenated 10 years at a time because this method uses 10 discrete labels, and each label accounts for a 10-year interval.

As we can see on Figs. 15 and 16, our method produces faces that are perceived as older than classic AAM and CAAE for aging, and younger for rejuvenating. In other words, a facial aging with WOAAM of y years better reduces the gap between the expected age and the age estimated by the age estimation system than a classic AAM or CAAE. For a 10-year aging period, the estimation of age has increased by 4.9 years for WOAAM, by 3.4 years for AAM, and by 2.9 years for CAAE. Also, for a 10-year rejuvenating period, the estimation of age has decreased by 4 years for WOAAM, by 2.3 years for AAM, and by 1.5 years for CAAE. On average, we improved performance by a factor of 1.5 over AAM, and by a factor of 2.5 over CAAE.

However, we can note that for a 10-year period of aging and rejuvenating, the estimation of age has been altered too slightly: respectively, by only 4 years and -3.4 years, which is low. This can be explained by the fact that we used only one aging trajectory, and because our model does not consider age spots.

Age spots could be incorporated in our model by creating a dedicated channel in our system, as we did for wrinkles. The pose parameters of each age spot's shape could be computed by fitting an ellipse to the shape and taking the parameters of the fitted ellipse. Likewise, the pose parameters of each age spot's appearance could be computed by taking its mean RGB color. After that, we could carry out the same processing as for wrinkles. First, we would estimate the probability density modeling the structure of age spots for each face and each zone. Secondly, we would compute a PCA on our age spot representation vectors and connect the output to the final PCA. Aging trajectories would thus take into account age spots, in addition to shape, appearance and wrinkles.

4 Conclusion

We presented a new framework to analyze facial aging taking into account shape, appearance and wrinkles. We showed that the system can generate realistic faces for aging and rejuvenating, and such age-progressed faces better influence age perception than with Active Appearance Model or Conditional Adversarial Autoencoder. On average, we demonstrated an improvement factor of 2.0 over prior works.

Nevertheless, the model can be improved in several ways. Firstly, the realism of the faces produced by the model has not been rated in this study. Moreover, we know that facial aging is influenced by environmental factors like sun exposure, alcohol consumption or eating practices [12, 19]. A potential improvement could be to compute multiple trajectories as a function of those factors. In addition, dark spots must be included in the model to increase the accuracy of facial aging. We are confident that dark spots can be integrated in the same way as wrinkles. This is the objective of future research.

References

Aarabi P (2013) How brands are using facial recognition to transform marketing, https://venturebeat.com/2013/04/13/marketing-facial-recognition/

Antipov G, Baccouche M, Dugelay JL (2017) Face aging with conditional generative adversarial networks, arXiv:1702.01983, bibtex: antipov_face_2017

Boissieux L, Kiss G, Thalmann NM, Kalra P (2000) Simulation of skin aging and wrinkles with cosmetics insight. In: Computer animation and simulation 2000, bibtex: boissieux_simulation_2000. Springer, Berlin, pp 15–27. https://doi.org/10.1007/978-3-7091-6344-3_2

Bukar AM, Ugail H, Hussain N (2017) On facial age progression based on modified active appearance models with face texture. In: Angelov P, Gegov A, Jayne C, Shen Q (eds) Advances in computational intelligence systems, vol 513. Springer International Publishing, Cham, pp 465–479. https://doi.org/10.1007/978-3-319-46562-3_30

Burt DM, Perrett DI (1995) Perception of Age in Adult Caucasian Male Faces: Computer Graphic Manipulation of Shape and Colour Information. Proc R Soc B Biol Sci 259(1355):137–143. https://doi.org/10.1098/rspb.1995.0021

Cootes TF, Edwards GJ, Taylor CJ (1998) Active appearance models. In: Burkhardt H, Neumann B (eds) Computer Vision — ECCV’98. Lecture Notes in Computer Science. Springer, Berlin, pp 484–498. https://doi.org/10.1007/BFb0054760

Donofrio LM (2000) Fat distribution: a morphologic study of the aging face. Dermatologic Surgery: Official Publication for American Society for Dermatologic Surgery [et Al] 26(12):1107–1112

Farkas LG, Eiben OG, Sivkov S, Tompson B, Katic MJ, Forrest CR (2004) Anthropometric measurements of the facial framework in adulthood: age-related changes in eight age categories in 600 healthy white North Americans of European ancestry from 16 to 90 years of age. The Journal of Craniofacial Surgery 15(2):288–298

Fink B, Grammer K, Matts P (2006) Visible skin color distribution plays a role in the perception of age, attractiveness, and health in female faces. Evolution and Human Behavior 27(6). https://doi.org/10.1016/j.evolhumbehav.2006.08.007

Fu Y, Guo G, Huang TS (2010) Age Synthesis and Estimation via Faces: A Survey. IEEE Transactions on Pattern Analysis and Machine Intelligence 32(11):1955–1976. https://doi.org/10.1109/TPAMI.2010.36

Guo G, Mu G, Fu Y, Huang TS (2009) Human age estimation using bio-inspired features. In: IEEE conference on computer vision and pattern recognition, 2009. CVPR 2009, IEEE, pp 112–119

Guyuron B, Rowe DJ, Weinfeld AB, Eshraghi Y, Fathi A, Iamphongsai S (2009) Factors contributing to the facial aging of identical twins. Plast Reconstr Surg 123(4):1321–1331. https://doi.org/10.1097/PRS.0b013e31819c4d42

Suo J, Zhu S-C, Shan S, Chen X (2010) A compositional and dynamic model for face aging. IEEE Transactions on Pattern Analysis and Machine Intelligence 32(3):385–401. https://doi.org/10.1109/TPAMI.2009.39, http://ieeexplore.ieee.org/document/4782970/

Kwon YH, Lobo NdV (1994) Age classification from facial images. In: 1994 Proceedings of IEEE conference on computer vision and pattern recognition, pp 762–767. https://doi.org/10.1109/CVPR.1994.323894

Kwon YH, Lobo NdV (1999) Age Classification from Facial Images. Comput Vis Image Underst 74(1):1–21. https://doi.org/10.1006/cviu.1997.0549, http://www.sciencedirect.com/science/article/pii/S107731429790549X

Lanitis A, Taylor C, Cootes T (1999) Modeling the process of ageing in face images. IEEE 1:131–136. https://doi.org/10.1109/ICCV.1999.791208, http://ieeexplore.ieee.org/document/791208/

Lanitis A, Taylor CJ, Cootes TF (2002) Toward automatic simulation of aging effects on face images. IEEE Transactions on Pattern Analysis and Machine Intelligence 24(4):442–455. http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=993553

Lanitis A, Draganova C, Christodoulou C (2004) Comparing Different Classifiers for Automatic Age Estimation. IEEE Transactions on Systems, Man, and Cybernetics Part B, Cybernetics: a Publication of the IEEE Systems, Man, and Cybernetics Society 34:621–628. https://doi.org/10.1109/TSMCB.2003.817091

Latreille J, Kesse-Guyot E, Malvy D, Andreeva V, Galan P, Tschachler E, Hercberg S, Guinot C, Ezzedine K (2012) Dietary monounsaturated fatty acids intake and risk of skin photoaging. PLoS ONE 7(9). https://doi.org/10.1371/journal.pone.0044490, http://www.ncbi.nlm.nih.gov/pmc/articles/PMC3435270/

Liu S, Sun Y, Zhu D, Bao R, Wang W, Shu X, Yan S (2017) Face aging with contextual generative adversarial nets. In: Proceedings of the 2017 ACM on multimedia conference. ACM, New York. https://doi.org/10.1145/3123266.3123431

Marcolin F, Vezzetti E (2017) Novel Descriptors for Geometrical 3d Face Analysis. Multimedia Tools Appl 76(12):13,805–13,834. https://doi.org/10.1007/s11042-016-3741-3

Mark LS, Pittenger JB, Hines H, Carello C, Shaw RE, Todd JT (1980) Wrinkling and head shape as coordinated sources of age-level information. Percept Psychophys 27(2):117–124. https://doi.org/10.3758/BF03204298

Ramanathan N, Chellappa R (2008) Modeling shape and textural variations in aging faces. IEEE, 1–8. https://doi.org/10.1109/AFGR.2008.4813337, http://ieeexplore.ieee.org/document/4813337/

Restylane (2012) The restylane imagine tool. http://www.restylane.ca/rest-imagine-tool/

Rothe R, Timofte R, Van Gool L (2015) Dex: deep expectation of apparent age from a single image. In: Proceedings of the IEEE international conference on computer vision workshops, pp 10–15. http://www.cv-foundation.org/openaccess/content_iccv_2015_workshops/w11/html/Rothe_DEX_Deep_EXpectation_ICCV_2015_paper.html

Rowland D, Perrett D (1995) Manipulating facial appearance through shape and color. IEEE Comput Graph Appl 15(5):70–76. https://doi.org/10.1109/38.403830, http://ieeexplore.ieee.org/document/403830/

Samson N, Fink B, Matts PJ, Dawes NC, Weitz S (2010) Visible changes of female facial skin surface topography in relation to age and attractiveness perception. J Cosmet Dermatol 9(2):79–88. https://doi.org/10.1111/j.1473-2165.2010.00489.x

Scherbaum K, Sunkel M, Seidel HP, Blanz V (2007) Prediction of individual non-linear aging trajectories of faces. In: Computer graphics forum, Wiley Online Library, vol 26, pp 285–294. https://doi.org/10.1111/j.1467-8659.2007.01050.x/full

Shu X, Tang J, Lai H, Liu L, Yan S (2015) Personalized age progression with aging dictionary. In: Proceedings of the IEEE international conference on computer vision, pp 3970–3978

Simonite T (2006) Virtual face-ageing may help find missing persons. https://www.newscientist.com/article/dn10164-virtual-face-ageing-may-help-find-missing-persons/

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. arXiv:1409.1556

Sydell L (2009) Building the curious faces of ’Benjamin Button’, https://www.npr.org/templates/story/story.php?storyId=100668766

Tiddeman B, Burt DM, Perrett D (2001) Prototyping and transforming facial textures for perception research. IEEE Computer Graphics and Applications 21. https://doi.org/10.1109/38.946630

Tiddeman BP, Stirrat MR, Perrett DI (2005) Towards realism in facial image transformation: results of a wavelet mrf method. In: Computer graphics forum, Wiley Online Library, vol 24, pp 449–456. https://doi.org/10.1111/j.1467-8659.2005.00870.x/full

Tsai MH, Liao YK, Lin IC (2014) Human face aging with guided prediction and detail synthesis. Multimedia Tools and Applications 72(1):801–824. https://doi.org/10.1007/s11042-013-1399-7

Vezzetti E, Marcolin F, Tornincasa S, Ulrich L, Dagnes N (2017) 3d geometry-based automatic landmark localization in presence of facial occlusions. Multimedia Tools and Applications pp 1–29. https://doi.org/10.1007/s11042-017-5025-y

Wang W, Cui Z, Yan Y, Feng J, Yan S, Shu X, Sebe N (2016) Recurrent face aging. In: 2016 IEEE conference on computer vision and pattern recognition (CVPR), pp 2378–2386. https://doi.org/10.1109/CVPR.2016.261

Wang W, Yan Y, Cui Z, Feng J, Yan S, Sebe N (2018) Recurrent face aging with hierarchical autoregressive memory. IEEE Transactions on Pattern Analysis and Machine Intelligence PP(99):1–1. https://doi.org/10.1109/TPAMI.2018.2803166

Yaar M, Gilchrest BA (2007) Photoageing: mechanism, prevention and therapy. Br J Dermatol 157(5):874–887. https://doi.org/10.1111/j.1365-2133.2007.08108.x

Zhang Z, Song Y, Qi H (2017) Age Progression/Regression by conditional adversarial autoencoder. arXiv:1702.08423

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Martin, V., Séguier, R., Porcheron, A. et al. Face aging simulation with a new wrinkle oriented active appearance model. Multimed Tools Appl 78, 6309–6327 (2019). https://doi.org/10.1007/s11042-018-6311-z

DOI: https://doi.org/10.1007/s11042-018-6311-z