Abstract

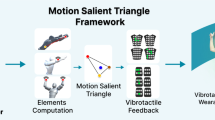

In digital health, with the development of communication technology, medical information in all modalities is growing exponentially. Effective communication of multi-modal data, including tactile and visual information, is therefore paramount. In this paper, we propose a novel method to compress tactile video data from GelSight sensors for digital health applications. First, our method combines the visual and tactile modalities to extract saliency information from the tactile videos. A target recognition network serves as the visual assistance: by recognizing whether objects are in contact, it helps select the tactile frames that carry effective information. Second, we design a dedicated coding scheme with inter- and intra-frame prediction to further extract saliency information and compress the tactile signal. Inter-frame prediction uses a dynamic group of pictures (GOP) strategy to reduce temporal redundancy, and intra-frame prediction based on low-rank sparse decomposition (LRSD) then achieves further compression. Finally, in an extensive evaluation using metrics such as peak signal-to-noise ratio (PSNR), structural similarity index (SSIM), and learned perceptual image patch similarity (LPIPS), our method obtains better results than advanced video coding (AVC) and high efficiency video coding (HEVC), with average bitrate savings of 23.6% compared to HEVC and 61.4% compared to AVC. The results show that the proposed method can greatly reduce the amount of haptic data while maintaining high reconstruction quality.
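To make two of the terms above concrete, the following is a minimal sketch of a low-rank plus sparse split of a single frame and of the PSNR metric. It is not the codec described in the abstract: the alternating scheme (truncated SVD for the low-rank part, soft-thresholding for the sparse part) is a generic heuristic chosen for illustration, and the function names and the parameters `rank`, `tau`, and `iters` are assumptions rather than values taken from the paper.

```python
import numpy as np


def psnr(reference, reconstructed, peak=255.0):
    """Peak signal-to-noise ratio in dB between two same-shaped arrays."""
    ref = np.asarray(reference, dtype=np.float64)
    rec = np.asarray(reconstructed, dtype=np.float64)
    mse = np.mean((ref - rec) ** 2)
    if mse == 0.0:
        return float("inf")
    return 10.0 * np.log10(peak ** 2 / mse)


def soft_threshold(x, tau):
    """Shrink every entry toward zero by tau (entries below tau become 0)."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)


def low_rank_sparse(frame, rank=2, tau=10.0, iters=20):
    """Split a 2-D frame into a low-rank part L and a sparse part S.

    Illustrative heuristic (an assumption, not the paper's LRSD):
      L <- best rank-`rank` approximation of (frame - S)
      S <- soft-thresholded residual (frame - L)
    """
    m = np.asarray(frame, dtype=np.float64)
    s_part = np.zeros_like(m)
    l_part = np.zeros_like(m)
    for _ in range(iters):
        u, sing, vt = np.linalg.svd(m - s_part, full_matrices=False)
        l_part = (u[:, :rank] * sing[:rank]) @ vt[:rank]
        s_part = soft_threshold(m - l_part, tau)
    return l_part, s_part
```

With this scheme the reconstruction `L + S` differs from the input by at most `tau` per pixel, so `tau` acts as a crude quality knob. A real codec would additionally quantize and entropy-code both parts, which this sketch does not attempt.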

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China (62001246, 62231017, 62071255); the Key R&D Program of Jiangsu Province, Key Project and Topics, under Grants BE2021095 and BE2023035; the Foundation of Shanxi Key Laboratory of Machine Vision and Virtual Reality (No. 447-110103); the Open Research Fund of Jiangsu Engineering Research Center of Communication and Network Technology; the Key Project of the Natural Science Foundation of Jiangsu Province (BE2023087); and the Major Projects of the Natural Science Foundation of the Jiangsu Higher Education Institutions (20KJA510009).

Ethics declarations

Financial interests

The authors have no relevant financial interests to disclose.

Non-financial interests

The authors have no relevant non-financial interests to disclose.

Conflict of interest

The authors declare that they have no conflicts of interest to report regarding the present study.

Additional information

Mingkai Chen, Xinmeng Tan, and Huiyan Han contributed equally to this work.

About this article

Cite this article

Chen, M., Tan, X., Han, H. et al. Tactile Codec with Visual Assistance in Multi-modal Communication for Digital Health. Mobile Netw Appl (2024). https://doi.org/10.1007/s11036-024-02294-z
