Abstract

Many important real-world applications of machine learning, statistical physics, constraint programming and information theory can be formulated using graphical models that involve determinism and cycles. Accurate and efficient inference and training of such graphical models remains a key challenge. Markov logic networks (MLNs) have recently emerged as a popular framework for expressing a number of problems which exhibit these properties. While loopy belief propagation (LBP) can be an effective solution in some cases; unfortunately, when both determinism and cycles are present, LBP frequently fails to converge or converges to inaccurate results. As such, sampling based algorithms have been found to be more effective and are more popular for general inference tasks in MLNs. In this paper, we introduce Generalized arc-consistency Expectation Maximization Message-Passing (GEM-MP), a novel message-passing approach to inference in an extended factor graph that combines constraint programming techniques with variational methods. We focus our experiments on Markov logic and Ising models but the method is applicable to graphical models in general. In contrast to LBP, GEM-MP formulates the message-passing structure as steps of variational expectation maximization. Moreover, in the algorithm we leverage the local structures in the factor graph by using generalized arc consistency when performing a variational mean-field approximation. Thus each such update increases a lower bound on the model evidence. Our experiments on Ising grids, entity resolution and link prediction problems demonstrate the accuracy and convergence of GEM-MP over existing state-of-the-art inference algorithms such as MC-SAT, LBP, and Gibbs sampling, as well as convergent message passing algorithms such as the concave–convex procedure, residual BP, and the L2-convex method.

Similar content being viewed by others

1 Introduction

Graphical models that involve cycles and determinism are applicable to a growing number of applications in different research communities, including machine learning, statistical physics, constraint programming, information theory, bioinformatics, and other sub-disciplines of artificial intelligence. Accurate and efficient inference within such graphical models is thus an important issue that impacts a wide number of communities. Inspired by the substantial impact of statistical relational learning (SRL) (Getoor and Taskar 2007), Markov logic (Richardson and Domingos 2006; Singla 2012) is a powerful formalism for graphical models that has made significant progress towards the goal of combining the powers of both first-order logic (Flach 2010) and probability. However, probabilistic inference represents a major bottleneck and can be problematic for learning when using it as a subroutine.

Loopy belief propagation (LBP) is a commonly used message-passing algorithm for performing approximate inference in graphical models in general, including models instantiated by an underlying Markov Logic. However, LBP often exhibits erratic behavior in practice. In particular, it is still not well understood when LBP will provide good approximations in the presence of cycles and when models possess both probabilistic and deterministic dependencies. Therefore, the development of more accurate and stable message passing based inference methods is of great theoretical and practical interest. Perhaps surprisingly, belief propagation achieves good results for coding theory problems with loopy graphs (Mceliece et al. 1998; Frey and MacKay 1998). In other applications, however, LBP often leads to convergence problems. In general LBP therefore has the following limitation:

Limitation 1 In the presence of cycles, LBP is not guaranteed to converge.

It is known that the local optima of the Bethe free energy correspond to local minima of LBP, and it has been proven that violating the uniqueness condition for the Bethe free energy generates several local minima (i.e., fixed points) in the space of LBP’s marginal distributions (Heskes 2004; Yedidia et al. 2005). From a variational perspective, it is known that if a factor graph has more than one cycle, then the convexity of the Bethe free energy is violated. A graph involving a single cycle has a unique local minimum and usually guarantees the convergence of LBP (Heskes 2004). From the viewpoint of a local search, LBP performs a gradient-descent/ascent search over the marginal space, endeavoring to converge to a local optimum (Heskes 2002). Heskes viewpoint is that the problem of non-convergence is related to the fact that LBP updates the unnormalized marginal of each variable by computing a coarse geometric average of the incoming messages received from its neighboring factors (Heskes 2002). Under Heskes’ line of analysis, LBP can make large moves in the space of the marginals and therefore it becomes more likely to overshoot the nearest local optimum. This produces an orbiting effect and increases the possibility of non-convergence. Other lines of analysis are based on the fact that messages in LBP may circulate around the cycles, which can lead to local evidence being counted multiple times (Pearl 1988). This, in turn, can aggravate the possibility of non-convergence. In practice, non-convergence occasionally appears as oscillatory behavior when updating the marginals (Koller and Friedman 2009).

Determinism plays a substantial role in reducing the effectiveness of LBP (Heskes 2004). For example, hard clauses in a Markov logic lead to deterministic dependencies in the corresponding factor graphs for groundings and therefore are particularly challenging for inference with LBP. It has been observed empirically that carrying out LBP on cyclic graphical models with determinism is more likely to result in a two-fold problem of non-convergence or incorrectness of the results (Mooij and Kappen 2005; Koller and Friedman 2009; Potetz 2007; Yedidia et al. 2005; Roosta et al. 2008). A second limitation of LBP could thus be formulated as:

Limitation 2 In the presence of determinism (a.k.a. hard clauses), LBP may deteriorate to inaccurate results.

In its basic form LBP also does not leverage the local structures of factors, handling them as black boxes. Using Markov logic as a concrete example, LBP often does not take into consideration the logical structures of the underlying clauses that define factors (Gogate and Domingos 2011). Thus, if some of these clauses are deterministic (e.g., hard clauses) or have extreme skewed probabilities, then LBP will be unable to reconcile the clauses. This, in turn, impedes the smoothing out of differences between the messages. The problem is particularly acute for those messages that pass through hard clauses which fall inside dense cycles. This can drastically elevate oscillations, making it difficult to converge to accurate results, and leading to the instability of the algorithm with respect to finding a local minimum (see pages 413–429 of Koller and Friedman 2009, for more details). On the flip side of this issue Koller and Friedman point out that one can prove that if the factors in a graph are less extreme—such that the skew of the network is sufficiently bounded—it can give rise to a contraction property that guarantees convergence (Koller and Friedman 2009). In our work here we are interested in taking advantage of determinism when it exists in the factors of an underlying graph in a way that does not increase the threat of non-convergence.

The literature available on LBP—which is perhaps the most widely used form of message-passing based inference—is heavily influenced by ideas from machine learning (ML) and constraint satisfaction (CS) among others. Although LBP has been scrutinized both theoretically and practically in various ways, most of the existing research either avoids the limitation of determinism when handling cycles, or does not take into consideration the limitation of cycles when handling determinism.

It is well known that techniques such as the junction tree algorithm (Lauritzen and Spiegelhalter 1988) are able to transform a graphical model into larger clusters of variables such that the clusters satisfy the running intersection property and that such a structure can then be used to obtain exact inference results. Such results also hold when the underlying graphical models possess deterministic dependencies. For many problems however, the tree width of the resulting junction tree may be so large that inference becomes intractable. More recent work has explored the interesting question of how to construct thin junction trees (Bach and Jordan 2001). However, many graphical models derived from a Markov logic or problems with complex constraints quickly lead to trees with large tree widths.

In this paper, one of our key objectives is to bring probabilistic Artificial Intelligence, Machine Learning and Constraint Programming techniques closer together through the lens of variational message-passing inference. That is, to address the limitations of LBP discussed above, we introduce Generalized arc-consistency Expectation-Maximization Message-Passing (GEM-MP), a novel approach to inference for graphical models based on variational message-passing and arc-consistency within extended factor graphs. In this work we have focused on Markov logic and Ising models, but our GEM-MP framework is applicable to other representations, including standard graphical models defined in terms of factor graphs. We achieve this by first re-parameterizing the factor graph in such a way that the inference task of computing the probability of unobserved variables given observed variables can be formulated as a variational message passing procedure using auxiliary variables in the extended and re-parameterized factor graph. We then take advantage of the fact that procedures such as variational inference and EM can be viewed in terms of free energy minimization equations. We formulate our Message-Passing approach as the E and M steps of a variational message passing technique reminiscent of classical variational EM procedures (Beal and Ghahramani 2003; Neal and Hinton 1999). This variational formulation leads to the synthesis of new rules that update an approximation to the joint conditional distribution, minimizing the Kullback–Leibler (KL) divergence in a way that also maximizes a lower bound on the true model evidence. Since the procedure monotonically decreases the KL divergence it alleviates Limitation 1 and leads to convergence of the lower bound and KL divergence to a fixed quantity. Furthermore, we exploit the logical structures within factors by applying a generalized arc-consistency concept (Rossi et al. 2006), and to use that to perform a variational mean-field approximation when updating the marginals. Since the procedure is cast within a variational framework, variational bounds apply which can ensure the algorithm converges to a local minimum in terms of the KL divergence.

We have organized the rest of the paper in the following manner. In Sect. 2, we review some key basic concepts in further detail including: Markov Logic, LBP, constraint propagation techniques, Variational Bounds, Expectation maximization (EM) and KL Divergences. In Sect. 3, we demonstrate the framework of GEM-MP variational inference. In Sect. 4 we then derive GEM-MP’s general update rule for Markov logic. In sect. 5, we generalize GEM-MP’s update rules to be applicable for MRFs. In Sect. 6, we conduct a thorough experimental study. This is followed by a discussion in Sect. 7. In Sect. 8 we examine related work. Finally, in Sect. 9, we present our conclusions and discuss directions for future research. The “Appendix” contains the proofs of all propositions used in the paper.

2 Preliminaries

To set the stage for our work here in this section we provide a more detailed discussion of: Markov logic; belief propagation; constraint satisfaction problems, constraint propagation and generalized arch consistency; and variational methods. We begin by reviewing Markov logic using a concrete explanatory example presented in Table 1. This example is an excerpt of the knowledge base for the Cora dataset. That is, suppose that we are given a citation database in which each citation has author, title, and venue fields. We need to know which pairs of citations refer to the same citation and the same authors (i.e. both the SameBib and SameAuthor relations are unknown). For simplicity, our objective will be to predict the SameBib ground atoms’ marginals. At this point, let us first express our basic notation.

Notation A first-order knowledge base (KB) is a set of formulas in first-order logic. Traditionally, as shown in Table 1, it is convenient to convert formulas to clausal form (CNF). After propositional grounding, we get a formula \({\mathcal {F}}\), which is a conjunction of m ground clauses. We use \(f \in {\mathcal {F}}\) to denote a ground clause which is a disjunction of literals built from \({\mathcal {X}}\), where \({\mathcal {X}} = \left\{ X_1, X_2, \ldots , X_n\right\} \) is a set of n Boolean random variables representing ground atoms. The set \({\mathcal {X}}_{f}\) corresponds to the variables appearing in the scope of a ground clause f. Both “\(+\)” and “−” will be used to denote the positive (true) and negative (false) appearance of the ground atoms. We use \(Y_i\) as a subset of satisfying (or valid) entries of ground clause \(f_i\), and \(y_k \in Y_i, \, k \in \left\{ 1,.., |Y_i| \right\} \) denotes each valid entry in \(Y_i\), where the local entry of a factor is valid if it has non-zero probability. We use \(f^s_i\) (resp. \(f^h_i\)) to indicate that the clause \(f_i\) is soft (resp. hard); the soft and the hard clauses are included in the two sets \({\mathcal {F}}^s\) and \({\mathcal {F}}^h\) respectively. The sets \({\mathcal {F}}_{X_j+}\) and \({\mathcal {F}}_{X_j-}\) include the clauses that contain positive and negative literals for ground atom \(X_j\), respectively. Thus \({\mathcal {F}}_{X_j}={\mathcal {F}}_{X_j+} \cup {\mathcal {F}}_{X_j-}\) denotes the whole of \(X_j\)’s clauses, and its cardinality as \(\left| {\mathcal {F}}_{X_j}\right| \). For each ground atom \(X_j\), we use \(\beta _{X_j} = \left[ \beta ^+_{X_j},\beta ^-_{X_j}\right] \) to denote its positive and negative marginal probabilities, respectively.

Markov logic (Richardson and Domingos 2006) is a set of first-order logic formulas (or CNF clauses), each of which is associated with a numerical weight w. Larger weights w reflect stronger dependencies, and thereby deterministic dependencies have the largest weight (\(w \rightarrow \infty \)), in the sense that they must be satisfied. We say that a clause has deterministic dependency if at least one of its entries has zero probability.

The power of Markov logic appears in its ability to bridge the gap between logic and probability theory. Thus it has become one of the preferred probabilistic graphical models for representing both probabilistic and deterministic knowledge, with deterministic dependencies (for short we say determinism) represented as hard clauses, and probabilistic ones represented as soft clauses.

Grounded (factor graph) obtained by applying clauses in Table 1 to the constants: \(\left\{ \text {Gilles(G), Chris(C)} \right\} \) for \(a_1\) and \(a_2\); \(\left\{ \text {Citation1}(C_1)\text { and Citation2}(C_2) \right\} \) for \(r_1\), \(r_2\), and \(r_3\). The factor graph involves: 12 ground atoms in which 4 are evidence (dark ovals) and 8 are non-evidence (non-dark ovals); 24 ground clauses wherein 8 are hard (\({\mathcal {F}}^h = \left\{ f_{1},\ldots ,f_{8}\right\} \)) and 16 are soft (\({\mathcal {F}}^s = \left\{ f_9,\ldots ,f_{24}\right\} \))

To understand the semantics of Markov logic, recall the explanatory example in Table 1. In this example, Markov logic enables us to model the KB by using rules such as the following: 1. Regularity rules of the type that say “if the authors are the same, then their records are the same.” This rule is helpful but innately uncertain (i.e., it is not true in all cases). Markov logic considers this rule as soft and attaches it to a weight (say, 1.1); 2. Transitivity rules that state “If one citation is identical to two other citations, then these two other citations are identical too.” These types of rules are important for handling non-unique names of citations. Therefore, we suppose that Markov logic considers these rules as hard and assigns them an infinite weight.Footnote 1

Subsequently, we will represent Markov logic as a factor graph after grounding it using a small set of typed constants (say, for example, \(a_1, a_2 \in \left\{ \text {Gilles(G), Chris(C)} \right\} \), and \(r_1, r_2, r_3 \in \left\{ \text {Citation1}(C_1), \text {Citation2}(C_2) \right\} \)). The output is a factor graph that is shown in Fig. 1, which is a bipartite graph \(\left( {\mathcal {X}},{\mathcal {F}}=\left\{ {\mathcal {F}}^h,{\mathcal {F}}^s\right\} \right) \) that has a variable node (oval) for each ground atom \(X_j \in {\mathcal {X}}\) (here \({\mathcal {X}}\) includes the ground atoms: SBib(\(C_1,C_1\)), SBib(\(C_2,C_1\)), SBib(\(C_2,C_2\)), SBib(\(C_1,C_2\)), Ar(\(C_1,G\)), Ar(\(C_2,G\)), Ar(\(C_1,C\)), Ar(\(C_2,C\)), SAr(C, C), SAr(C, G), SAr(G, C), and SAr(G, G)). If the truth value of the ground atom is known from the evidence database, we mark it as evidence (dark ovals). It also involves a factor node for each hard ground clause \(f^h_i \in {\mathcal {F}}^h\) (bold red square) and each soft ground clause \(f^s_i \in {\mathcal {F}}^s\) (non-bold blue square), with an edge linking node \(X_j\) to factor \(f_i\), if \(f_i\) involves \(X_j\). This factor graph compactly represents the joint distribution over \({\mathcal {X}}\) as:

where \(\lambda \) is the normalizing constant, \(f^s_i\) and \(f^h_i\) are soft and hard ground clauses respectively, and \(|{\mathcal {F}}^h|\) and \(|{\mathcal {F}}^s|\) are the number of hard and soft ground clauses, respectively. Note that, typically, the hard clauses are assigned the same weight (\(w \rightarrow \infty \)). But, without loss of accuracy, we can recast them as factors that allow \(\{0,1\}\) probabilities without recourse to infinity.

Loopy belief propagation The object of the inference task is to compute the marginal probability of the non-evidence atoms (e.g., SameBib) given some others as evidence (e.g., Author). One widely used approximate inference technique is loopy belief propagation (LBP) (Yedidia et al. 2005), which provides exact marginals of query atoms conditional on evidence ones when the factor graph is a tree or a forest, and approximate marginals if the factor graph has cycles. LBP proceeds by alternating the passing of messages between variable (ground atom) nodes and their neighboring factor (ground clause) nodes. The message from a variable \(X_j\) to a factor \(f_i\) is:

The message from a factor \(f_i\) to variable \(X_j\) is:

The messages are frequently initialized to 1, and the unnormalized marginal of a single variable \(X_j\) can be approximated by computing a coarse geometrical average of its incoming messages Footnote 2:

While there are different schedules for passing messages in graphs with loops, one of the most commonly used is synchronous scheduling, wherein all messages are simultaneously updated by using the messages from the previous iteration.

Now consider the atoms that we are interested in as a query [SBib(\(C_1,C_1\)), SBib(\(C_2,C_1\)), SBib(\(C_1,C_2\)), and SBib(\(C_2,C_2\))] on the factor graph represented in Fig. 1. Remarkably, these query atoms are involved in many cycles. This emphasizes, at least theoretically, the existence of more than one fixed point (or local optimum) which raises the threat of non-convergence (Limitation 1). In addition, six of these cycles (i.e., those represented with dashed orange lines) such as SBib(\(C_1,C_1\))—\(f_5\)—SBib(\(C_2,C_1\))—\(f_4\)— SBib(\(C_1,C_2\)) have no evidences (i.e., all the atoms in the cycles are queries). Therefore, the double counting problem is expected to happen (Limitation 1). Moreover the six cycles contain only hard clauses, which hinders the process of smoothing out the messages to converge to accurate results (Limitation 2).

Constraint propagation A Constraint Satisfaction Problem (Rossi et al. 2006) is a triple \(\big <{\mathcal {X}}, {\mathcal {D}}, {\mathcal {C}}\big>\) where \({\mathcal {X}}\) is an n-tuple of variables \({\mathcal {X}}=\big <X_1,\ldots ,X_n\big>\), \({\mathcal {D}}\) is a corresponding n-tuple of domains \({\mathcal {D}}=\big <D_1,\ldots ,D_n\big>\) such that \(X_j \in D_j\), and \({\mathcal {C}}\) is a m-tuple of constraints \({\mathcal {C}}=\big <c_1,\ldots ,c_m\big>\). A constraint \(c_i\) is a pair \(\big <{\mathcal {R}}_{{\mathcal {X}}_{c_i}},{\mathcal {X}}_{c_i}\big>\) where \({\mathcal {R}}_{{\mathcal {X}}_{c_i}}\) is a relation on the variables \({\mathcal {X}}_{c_i}=\text {scope}(c_i)\). A solution to the CSP is a complete assignment (or a possible world) \(s=\big <v_1,\ldots ,v_n\big>\) where \(v_j \in D_j\) and each \(c_i \in {\mathcal {C}}\) is satisfied in that \({\mathcal {R}}_{{\mathcal {X}}_{c_i}}\) holds on the projection of s onto the scope \({\mathcal {X}}_{c_i}\). S denotes the set of solutions to the CSP. Constraint propagation (Rossi et al. 2006) is the process of removing inconsistent values in the domains that violate some constraint in \({\mathcal {C}}\). One form of constraint propagation is to apply generalized arc consistency for each constraint \(c \in {\mathcal {C}}\) until a fixed point is reached.

Definition 1

(Generalized arc consistency (GAC)) Given a constraint \(c \in {\mathcal {C}}\) which is defined over the subset of variables \({\mathcal {X}}_{c}\), it is generalized arc consistent (GAC) iff for each variable \(X_j \in {\mathcal {X}}_{c}\) and for each value \(d \in {\mathcal {D}}_{X_j}\) in its domain, there exists a value \(d_k \in {\mathcal {D}}_{X_k}\) for each variable \(X_k \in {\mathcal {X}}_{c} {\setminus } \left\{ X_j\right\} \) that constitutes at least one valid tuple (or valid local entry) that satisfies c.

We can extend this CSP formalism to Weighted CSPs (Rossi et al. 2006) to include soft constraints. This too requires extending GAC to soft generalized arc consistency (soft GAC) to tackle the soft constraints (van Hoeve et al. 2006). At a high level, one can view GAC (or soft GAC) as a function that takes any variable \(X_j \in {\mathcal {X}}\) and returns all other consistent variables’ values that support the values of \(X_j\) with respect to the constraints \(c \in {\mathcal {C}}\). For instance, in our example of Cora in Fig. 1, applying GAC to the hard constraint (or clause) \(f_{6}: \lnot \text {SBib}(C_1,C_1) \vee \lnot \text {SBib}(C_1,C_2)\) with respect to ground atom assignment \(\text {SBib}(C_1,C_1) = true\) implies maintaining only the truth value “false” in the domain of \(\text {SBib}(C_1,C_2)\). This is because the only valid local entry of \(f_{6}\) that supports \(\text {SBib}(C_1,C_1) = true\) is \(\left\{ (true, false)\right\} \).

We can also apply GAC in a probabilistic form. For instance, probabilistic arc consistency (pAC) (Horsch and Havens 2000) performs BP in the form of arc consistency to compute the relative frequency of a variable taking on a particular value in all solutions for binary CSPs (Horsch and Havens 2000, for more details). pAC can be summarized as follows. We start by initializing all variables to have uniform distributions. At each step, each variable stores its previous solution probability distribution, then incoming messages from neighbouring variables are processed, and the results are maintained locally so that there is no need to send messages to all neighbours when no changes are made in the distribution. The new distribution is approximated by multiplying all information maintained from the recent message received from all neighbours. If the variable’s solution distribution has changed then a new message is sent to all neighbours.

Variational bounds, EM and KL divergences To derive a method with enhanced algorithmic behavior and theoretical semantics for BP, we shall be interested in a variational bound that is widely known in the context of variational expectation maximization algorithms (Beal and Ghahramani 2003; Neal and Hinton 1999). Suppose that we have a model \({\mathcal {M}}_\theta \) with parameters \(\theta \), observed data \({\mathcal {O}}=\{O_1,\ldots ,O_n\}\) and hidden variables \({\mathcal {H}}=\{H_1,\ldots ,H_n\}\). By introducing an approximation to our distribution over hidden variables given by \(\large {q}_{{\mathcal {H}}}({\mathcal {H}})\), we can leverage Jensen’s inequality to obtain a lower bound \({\mathcal {F}}_{{\mathcal {M}}_{\theta }}\) on the log marginal likelihood of the form \(\log \, P({\mathcal {O}} | {\mathcal {M}}_{\theta })\) as follows:

This lower bound \({\mathcal {F}}_{{\mathcal {M}}_{\theta }}\) in Eq. (5e) is called the free-energy. In Eq. (5d), \(\large {E}_{\large {q}_{{\mathcal {H}}}({\mathcal {H}})}\) is the expected log marginal likelihood and \(\large {H}\) is the shannon entropy term. Its role in variational EM (Beal and Ghahramani 2003) is that it justifies an iterative optimization algorithm for the lower bound whereby one performs the following steps: (the E-step) in which one makes the bound tighter by computing and updating \(\large {q}_{{\mathcal {H}}}({\mathcal {H}})\), and (the M-step) which uses the approximation to update the parameters of the model, which typically will increase the log marginal likelihood. If the exact posterior is used, or if the approximation to the posterior is exact, then the inequality is met with equality and the original EM algorithm is obtained. Both LBP and variational EM approaches share a similar objective which is to minimize a corresponding energy equation (Yedidia et al. 2005), the Gibbs free energy and variational free energy, respectively. Variational inference over hidden or unobserved variables in the E-step of traditional variational EM has an advantage in that it corresponds to minimizing the KL divergence of an approximation and our quantity of interest as we discuss below.

With a little more analysis it is possible to also determine that the free-energy is smaller than the log-marginal likelihood by an amount equal to the Kullback–Leibler (KL) divergence between \(\large {q}_{{\mathcal {H}}}({\mathcal {H}})\) and the posterior distribution of the hidden variables \(P({\mathcal {H}} | {\mathcal {O}}, {\mathcal {M}}_{\theta })\):

That is, since the marginal likelihood of the observed data is a fixed quantity, maximizing the lower bound (or the variational free energy) through variational inference over hidden variables is equivalent to minimizing the KL divergence between our approximation and the true distribution over hidden variables. Thus in the E-step of a variational EM algorithm one can perform iterative updates for \(\large {q}_{{\mathcal {H}}}({\mathcal {H}})\) in a class of distributions \({\mathcal {Q}}\) in a way that minimizes the KL divergence between the posterior \(P({\mathcal {H}} | {\mathcal {O}}, {\mathcal {M}}_{\theta })\) with the goal of obtaining

In our work here we will perform variational inference reminiscent of this approach.

3 GEM-MP framework

At a conceptual level our overall GEM-MP approach consists of the following three key elements. First, we extend the factor graph used to represent a given problem using mega-node random variables which behave identically to groups of variables participating in a factor. Second, we perform variational inference to update an approximation over the original variables and the mega-nodes. Third, we use a probabilistic form of generalized arc consistency to more efficiently make inferences about hard constraints. Unlike inference operations formulated using LBP, since we formulate inference using variational updates we directly minimize the KL divergence between our approximation for the joint conditional distribution and the true distribution of interest.

Before presenting the inference components of GEM-MP in detail, we will first examine a small concrete example, then present our more general approach for extending factor graphs. Let us consider a simple example factor graph \({\mathcal {G}}\) (Fig. 2 (left)), which is a fragment of the Cora example in Fig. 1, that involves factors \({\mathcal {F}} =\left\{ f_1,f_2,f_3,f_{4}\right\} \) and three random variables \(\left\{ X_1,X_2,X_3\right\} \) denoting query ground atoms \(\left\{ \text {SBib}(C_2,C_2), \text {SBib}(C_2,C_1), \text {SBib}(C_1,C_2)\right\} \) respectively.

In our GEM-MP framework the first thing we do is to modify the factor graph. Specifically, we need to re-parameterize the factor graph in such a way that carrying out a learning task on the new parameterization is equivalent to running an inference task on the original factor graph. That is, we modify the original factor graph \({\mathcal {G}}\) (depicted in Fig. 2 (left)) by transforming it into an extended factor graph \(\hat{{\mathcal {G}}}\) (depicted in Fig. 2 (right)) as follows:

An example factor graph \({\mathcal {G}}\) (left) which is a fragment of the Cora example in Fig. 1, that involves factors \({\mathcal {F}} = \left\{ f_1,f_2,f_3,f_{4}\right\} \) and three random variables \(\left\{ X_1,X_2,X_3\right\} \) representing query ground atoms \(\left\{ \text {SBib}(C_2,C_2), \text {SBib}(C_2,C_1), \text {SBib}(C_1,C_2)\right\} \). The extended factor graph \(\hat{{\mathcal {G}}}\) (right) which is a transformation of the original factor graph after adding auxiliary mega-node variables \({\mathcal {Y}} = \left\{ y_1,y_2,y_3,y_4\right\} \), and auxiliary activation-node variables \({\mathcal {O}} = \left\{ O_1,O_2,O_3,O_4\right\} \), which yields extended factors \(\hat{{\mathcal {F}}} = \left\{ \hat{f_1},\hat{f_2},\hat{f_3},\hat{f_{4}}\right\} \)

-

We attach an auxiliary mega-node \(Y_i\) (dashed oval) to each factor node \(f_i \in {\mathcal {F}}\). Each of these mega-nodes \(Y_i\) captures the local entries of its corresponding factor \(f_i\). Thus, it has a domain size that equals (at the most) the number of local entries in the factor \(f_i\) (i.e., the states of each mega-node correspond to a subset of the Cartesian product of the domains of the variables that are the arguments to the factor \(f_i\)). \({\mathcal {Y}} = \left\{ Y_i\right\} _{i=1}^m\) is the set of mega-nodes in the extended factor graph, where \(m=4\) in the example factor graph.

-

In addition, we connect an auxiliary activation node, \(O_i\) (dashed circle), to each factor \(f_i\). The auxiliary activation node \(O_i\) enforces an indicator constraint \({\mathbbm {1}}_{\left( Y_i,f_i\right) }\) for ensuring that the particular configuration of the variables that are the argument to the original factor \(f_i\) is identical to the state of the mega-node \(Y_i\):

$$\begin{aligned} {\mathbbm {1}}_{\left( Y_i,f_i\right) } = {\left\{ \begin{array}{ll} 1 &{} \quad \text {If the state of } Y_i \text { is identical to local entry of } f_i. \\ 0 &{} \quad \text {Otherwise} \end{array}\right. } \end{aligned}$$(8) -

Now, since we expand the arguments of each factor \(f_i\) by including both auxiliary mega-node and auxiliary activation node variables, then we get an extended factor \(\hat{f_i}\). \(\hat{{\mathcal {F}}} = \left\{ \hat{f_i}\right\} _{i=1}^m\) is the set of extended factors in the extended factor graph.

-

When the activation node \(O_i\) equals one, then it activates the indicator constraint in Eq. (8). If this indicator constraint is satisfied, then the extended factor graph \(\hat{f_i}\) preserves the same value of \(f_i\) for the configuration that is defined over the original input variables defining the factor \(f_i\). Thus, clearly, the following condition holds for each extended factor \(\hat{f_i}\) when a configuration, \((x_1,\ldots ,x_n)\), of \(f_i\) equals to state, \(y_i\), of mega-node, \(Y_i\):

$$\begin{aligned} \hat{f_i}\left( X_1=x_1,\ldots ,X_n=x_n,Y_i=y_i,\bar{O_i}\right) \Bigm |_{\bar{O_i} = 1} = f_i\left( X_1=x_1,\ldots ,X_n=x_n\right) . \end{aligned}$$(9)But if the indicator constraint in Eq. (8) is not satisfied then the extended factor graph \(\hat{f_i}\) assigns a value 0. Thus, this condition also holds for each extended factor \(\hat{f_i}\) when a configuration \((x_1,\ldots ,x_n)\) of \(f_i\) is not equal to state \(y_i\) of mega-node, \(Y_i\):

$$\begin{aligned} \hat{f_i}\left( X_1=x_1,\ldots ,X_n=x_n,Y_i=y_i,\bar{O_i}\right) \Bigm |_{\bar{O_i} = 1} = 0. \end{aligned}$$(10) -

Setting \(O_i=0\) effectively removes the impact of \(f_i\) from the model. That is, when the activation node \(O_i\) is not equal to one, then it deactivates the indicator constraint in Eq. (8). Here, the extended factor \(\hat{f_i}\) assigns a value 1 when the possible state of \(Y_i\) matches the configuration of variables that are the arguments to the factor \(f_i\). Otherwise it assigns a value 0. Note that by assigning the values in this way, all factors \(f_i \in {\mathcal {F}}\) will have identical values in their corresponding \(\hat{f_i} \in \hat{{\mathcal {F}}}\) when \(O_i=0\). This implies that the deactivation of their indicator constraint has no impact on the distribution from the inclusion of the factors \(f_i \in {\mathcal {F}}\).

Table 2 visualizes the expansion of factor \(f_1\), in the original factor graph, to its corresponding extended factor \(\hat{f_1}\) in the extended factor graph.

Proposition 1

In the extended factor graph \(\hat{{\mathcal {G}}}\), reducing each extended factor \(\hat{f_i}\) by evidencing its activation node with one, \(\bar{O_i}=1\), and then eliminating its auxiliary mega-node \(Y_i\) by marginalization yields its corresponding original factor \(f_i\) in the original factor graph \({\mathcal {G}}\).

Proof

see “Appendix”. \(\square \)

Proposition 2

Any arbitrary factor graph \({\mathcal {G}}\) is equivalent, i.e., defines an identical joint probability over variables \({\mathcal {X}}\), to its extended \(\hat{{\mathcal {G}}}\) iff the activation nodes in \(\hat{{\mathcal {G}}}\) are evidenced with one:

Proof

see “Appendix”. \(\square \)

Given this extended factor graph formulation we can now examine the task of performing inference over unobserved quantities given observed quantities through the lens of variational analysis and inference.

Let \({\mathcal {O}}= \left\{ O_i\right\} _{i=1}^m\) be the observed variables, represented as a binary vector (of 1’s), indicating the observation of the activation node variables \(\bar{O_i}=1,\,\forall O_i \in {\mathcal {O}}\). Let \({\mathcal {H}}=\left\{ {\mathcal {X}},{\mathcal {Y}}\right\} \) be the hidden variables, where \({\mathcal {X}} = \left\{ X_j\right\} _{j=1}^n\) is a set of variables (i.e., ground atoms) whose marginals we want to compute, and \({\mathcal {Y}}=\left\{ Y_i\right\} _{i=1}^m\) is the set of mega-nodes. Let \(\large {q}({\mathcal {X}},{\mathcal {Y}})\) be an auxiliary distribution over the set of hidden variables \({\mathcal {H}}\), satisfying that \(\sum _{\small {{\mathcal {X}},{\mathcal {Y}}}} \, \large {q}({\mathcal {X}},{\mathcal {Y}}) =1\). Now using the distribution \(\large {q}({\mathcal {X}},{\mathcal {Y}})\), we can leverage Jensen’s inequality to obtain a lower bound on the log-marginal likelihood of the form \(\log P({\mathcal {O}}|{\mathcal {M}})\) as follows:Footnote 3

where \({\mathcal {F}}_{\small {{\mathcal {M}}}}\) in Eq. (12e) is the negative of a quantity that represents the variational free energy functional of the free distribution \(\large {q}({\mathcal {X}},{\mathcal {Y}})\). As in Eq. (12d), it is a summation of two terms: \(\large {E}_{\large {q}({\mathcal {X}},{\mathcal {Y}})}\) which is the expected log marginal-likelihood with respect to the distributions, \(\large {q}({\mathcal {X}},{\mathcal {Y}})\), and the second term, \(\large {H}\big (\large {q}({\mathcal {X}},{\mathcal {Y}})\big )\), is the negative entropy of the distribution \(\large {q}({\mathcal {X}},{\mathcal {Y}})\) (Neal and Hinton 1999, for more details).

We can also easily see that similarly to other traditional settings the free-energy \({\mathcal {F}}_{\small {{\mathcal {M}}}}\) is smaller than the log-marginal likelihood by an amount equal to the Kullback–Leibler (KL) divergence between \(\large {q}({\mathcal {X}},{\mathcal {Y}})\) and the distribution over the hidden variables \(P({\mathcal {X}}, {\mathcal {Y}}|{\mathcal {O}},{\mathcal {M}})\):

Since the \(\textit{KL} \big [\large {q}({\mathcal {X}},{\mathcal {Y}}) \, || \, P({\mathcal {X}}, {\mathcal {Y}}|{\mathcal {O}},{\mathcal {M}}) \big ] \ge 0\) in Eq. (13c) and the log marginal probability under the model is a fixed quantity, then minimizing the KL divergence term is equivalent to maximizing the variational free energy \({\mathcal {F}}_{\small {{\mathcal {M}}}}\). That is, one could equivalently select either to maximize the lower bound (the variational free energy), or to minimize the KL divergence. Based on that, we now want to infer the distribution \(\large {q}({\mathcal {X}},{\mathcal {Y}})\) in a class of distributions \({\mathcal {Q}}\) that maximize the variational free energy:Footnote 4

One problem is that the target of the maximization of the variational free energy \({\mathcal {F}}_{\small {{\mathcal {M}}}}\) is unwieldy for direct optimization. The variational free energy requires an explicit summation over all possible instantiations of \({\mathcal {X}}\) and all valid local entries of the factors (i.e., ground clauses) involved in the model for \({\mathcal {Y}}\), which is an operation that is infeasible in practice.Footnote 5 Instead we constrain the auxiliary \(\large {q}({\mathcal {X}},{\mathcal {Y}})\) distribution to be a factorized (separable) approximation as:

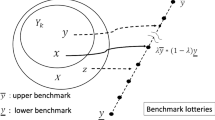

where \(\large {q}({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}})\) is an approximation of the true distribution \(P({\mathcal {X}}|{\mathcal {O}},{\mathcal {M}})\) over hidden variables, \({\mathcal {X}}\). This distribution is characterized by a set of variational parameters, \({\mathcal {B}}_{{\mathcal {X}}} = \left\{ \beta _{X_j}\right\} _{j=1}^n\). Since we use a fully factored distribution, these approximations are somewhat similar to the approximate marginal probabilities of variables \(X_j \in {\mathcal {X}}\), which one might obtain using standard loopy message-passing inference (e.g., LBP); however, unlike the situation with LBP, here we can subject these approximations to a variational analysis leading to an understanding of message-passing inference in terms of KL divergence minimization. The distribution \(\large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})\) is an approximation to the true distribution \(P({\mathcal {Y}}|{\mathcal {O}},{\mathcal {M}})\) over hidden mega-nodes, \({\mathcal {Y}}\), which is characterized by a set of variational parameters, \({\mathcal {T}}_{\small {{\mathcal {Y}}}} = \left\{ \alpha _{Y_i}\right\} _{i=1}^m\), for adapting the weights associated with the particular states of mega-nodes \(Y_i \in {\mathcal {Y}}\). As a particular formulation of how the \(\large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})\) distribution is parametrized, these variational parameters \(\alpha _{\small {Y_i}}\left( f_i\right) \) can be defined as:

where \(v_s\) (and \(v_u\)) are the values obtained from \(f_i\) when a particular state of \(Y_i\) satisfies (or unsatisfies) the factor \(f_i\) respectively. Note that \(v_s\) and \(v_u\) can be adapted using the distributions of the factor \(f_i\)’s argument variables and the weight associated with \(f_i\) (as will be explained in Sects. 4.1 and 4.2 for hard and soft factors respectively). Now, using Eq. (15), we can simply represent the lower bound as follows:

where \(\large {E}_{\large {q}({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}}) \large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})}\) is the expected log marginal-likelihood with respect to the distributions, \(\large {q}({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}})\) and \(\large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})\), and the negative of the second term, \(- \large {H}\big (\large {q}({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}}) \large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})\big )\), is the entropy. Hence, we can now set up our goal to find the distributions \(\large {q}({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}})\) and \(\large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})\) that maximize the lower bound \({\mathcal {F}}_{\small {{\mathcal {M}}}}\).

Now the role of the GEM-MP algorithm is to iteratively maximize the lower bound \({\mathcal {F}}_{\small {{\mathcal {M}}}}\) (or minimize the negative free energy \(- {\mathcal {F}}_{\small {{\mathcal {M}}}}\)) with respect to the distributions \(\large {q}({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}})\) and \(\large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})\) by applying two steps. In the first step, \(\large {q}({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}})\) is used to maximize \({\mathcal {F}}_{\small {{\mathcal {M}}}}\) with respect to \(\large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})\). Then in the second step, \(\large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})\) is used to maximize \({\mathcal {F}}_{\small {{\mathcal {M}}}}\) with respect to \(\large {q}({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}})\). That is, GEM-MP maximizes \({\mathcal {F}}_{\small {{\mathcal {M}}}}\) by performing two iterative updates

Note that the entropy term can be re-written as:

Now if we substitute the entropy \(\large {H}\big (\large {q}({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}}) \large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})\big )\) from Eq. (19) into Eq. (18a) and (18b), then we will have that \(\large {q}({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}})\) does not depend on the entropy \(\large {H}(\large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}}))\) when maximizing \({\mathcal {F}}_{\small {{\mathcal {M}}}}\) with respect to the variational parameters \({\mathcal {T}}_{\small {{\mathcal {Y}}}}\), and \(\large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})\) does not depend on \(\large {H}(\large {q}({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}}))\) when maximizing \({\mathcal {F}}_{\small {{\mathcal {M}}}}\) with respect to the variational parameters \(B_{\small {{\mathcal {X}}}}\).Footnote 6 We thus have

Therefore, the goal of GEM-MP can be expressed as that of maximizing a lower bound on the log marginal-likelihood by performing two steps, using superscript (t) to denote the iteration number:

-

GEM-MP “\(M_{q\small {({\mathcal {Y}})}}\)-step”: (for maximizing mega-nodes’ parameters distributions)

$$\begin{aligned}&\overbrace{{\mathcal {T}}^{\text {(t+1)}}_{\small {{\mathcal {Y}}}}}^{\text {Max. w.r.t }\large {q}_{\left( {\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}}\right) }} \nonumber \\&\quad = \underset{{\mathcal {T}}_{\small {{\mathcal {Y}}}}}{{\text {argmax}}} \, \overbrace{\large {E}_{\large {q}^{\text {(t)}}_{\left( {\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}}\right) } \large {q}^{\text {(t)}}_{\left( {\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}}\right) }} \big [\log P({\mathcal {O}}, {\mathcal {X}},{\mathcal {Y}} |{\mathcal {M}}) \big ]}^{\text { E-step}} + \large {H}\big (\large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})\big ) \end{aligned}$$(21) -

GEM-MP “\(M_{q\small {({\mathcal {X}})}}\)-step”: (for maximizing variable-nodes’ parameter distributions)

$$\begin{aligned}&\overbrace{{\mathcal {B}}^{\text {(t+1)}}_{\small {{\mathcal {X}}}}}^{\text {Max. w.r.t }\large {q}_{\left( {\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}}\right) }} \nonumber \\&\quad = \underset{{\mathcal {B}}_{\small {{\mathcal {X}}}}}{{\text {argmax}}} \, \overbrace{\large {E}_{\large {q}^{\text {(t)}}_{\left( {\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}}\right) } \large {q}^{\text {(t+1)}}_{\left( {\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}}\right) }} \big [\log P({\mathcal {O}}, {\mathcal {X}},{\mathcal {Y}} |{\mathcal {M}}) \big ]}^{\text { E-step}} + \large {H}\big (\large {q}({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}})\big ) \end{aligned}$$(22)

where here the arguments to Eqs. (21) and (22) are the \(E_{q\small {({\mathcal {X}})}}\)-step and \(E_{q\small {({\mathcal {Y}})}}\)-step corresponding to \(M_{q\small {({\mathcal {Y}})}}\)-step and \(M_{q\small {({\mathcal {X}})}}\)-step, respectively. In Eq. (21), the current value of \(\large {q}\left( {\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}}\right) \) and \(\large {q}\left( {\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}}\right) \) are used to optimize the mega-nodes’ variational parameters \({\mathcal {T}}_{\small {{\mathcal {Y}}}}\). This could result in maximizing the lower bound with respect to \(\large {q}\left( {\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}}\right) \). Next, in Eq. (22), the new value of \(\large {q}\left( {\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}}\right) \) and the current value of \(\large {q}\left( {\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}}\right) \) are used to optimize the nodes’ variational parameters \({\mathcal {B}}_{\small {{\mathcal {X}}}}\). This could result in maximizing the lower bound once again but this time with respect to \(\large {q}\left( {\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}}\right) \). Note that the difficulties in dealing with the expectation in Eqs. (21) and (22) depends on the properties of the distributions \(\large {q}\left( {\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}}\right) \) and \(\large {q}\left( {\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}}\right) \). That is if the inference is easy in these two distributions, then evaluating the expectation should be relatively easily. For the entropy terms, the choice of how to approximate the distributions \(\large {q}\left( {\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}}\right) \) and \(\large {q}\left( {\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}}\right) \) determines whether we can evaluate the entropy terms. As will be shown hereafter, using the variational mean-field for approximating the distributions \(\large {q}\left( {\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}}\right) \) and \(\large {q}\left( {\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}}\right) \) makes evaluating the entropy terms tractable.

Now, using a fully factored variational mean field approximation for \(\large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})\) implies that we create our approximation from independent distributions over the hidden (mega-node) variables as follows:

where \(\large {q}(Y_i;\alpha _{\small { Y_i}})\) is our complete approximation to the true probability distribution \(P(Y_i | {\mathcal {O}}, {\mathcal {X}}, {\mathcal {M}})\) of a randomly chosen valid local entry of mega-node \(Y_i\). Also, the variational mean-field approximation to \(\large {q}({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}})\) is similarly defined as a factorization of independent distributions over the hidden variables in \({\mathcal {X}}\), and can be expressed as follows:

where \(\large {q}(X_j;\beta _{\small {X_j}})\) is an approximate distribution for the true marginal probability distribution \(P(X_j | {\mathcal {O}}, {\mathcal {M}})\) of variable \(X_j\).

From Eqs. (23) and (24), we can write the expected log marginal-likelihood in Eqs. (21) and (22), as follows:

We now proceed to optimize the lower bound, through the use of Eqs. (21) and (22), using our variational mean-field approximations for both \(\large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})\) and \(\large {q}({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}})\).

We use Eqs. (25), (24) and (23) in Eq. (21). Hence, we have a maximization of the lower bound on the log marginal-likelihood as

One can then separate out the terms related to the updates of the variational parameters for each mega-node \(Y_i\) in Eq. (26). In addition, updating the parameter distribution of mega-node \(Y_i\) requires considering the distributions \(\{\large {q}(X_j;\beta _{\small {X_j}})\}\) of only the variables in \({\mathcal {X}}\) that are arguments to the extended factor \(\hat{f_i}\) (i.e., \(X_j \in {\mathcal {X}}_{\hat{f_i}}\))- where \(Y_i\) is attached to \(\hat{f_i}\). That is

where \(\hat{f_i}(O_i, {\mathcal {X}}_{\hat{f_i}}, Y_i |{\mathcal {M}})\) is the part of \(P({\mathcal {O}}, {\mathcal {X}},{\mathcal {Y}} |{\mathcal {M}})\) in the model with the factor associated with the mega-node \(Y_i\). This in fact allows optimizing the variational parameters of the distributions of each mega-node \(Y_i\) as

where \({\mathcal {Z}}_{Y_i}=\sum \large {E}_{\{\large {q}^{(t)}(X_k;\beta _{\small {X_k}}) \, | \, \small {X_k \ne X_j, \, X_j \in {\mathcal {X}}_{\hat{f_i}}}\}} \big [\log \hat{f_i}(O_i, {\mathcal {X}}_{\hat{f_i}}, Y_i |{\mathcal {M}}) \big ]\) is the normalization factor, and the expectation part can be written as

This update is similar in form to the simpler case of fully factored mean field updates in a model without the additional mega-nodes. See Winn (2004) for more details on the traditional mean field updates. Note that here by using Eqs. (29) and (28) in Eq. (27), we also have that

where \(\textit{KL}\big [\large {q}(Y_i;\alpha _{\small {Y_i}}) \, || \, \large {q}(Y_i;\alpha _{\small {Y_i}}^*) \big ]\) is the Kullback–Leibler divergence. The constant, in Eq. (30b), is simply the logarithm of the normalization factor representing the variables’ {\(\large {q}(X_j;\beta _{\small {X_j}})\)}, that are independent of \(\large {q}(Y_i;\alpha _{\small {Y_i}})\). Note that, from Eq. (30c), we maximize on the lower bound with respect to \(\large {q}(Y_i;\alpha _{\small {Y_i}})\) by minimizing the Kullback–Leibler divergence. This means that the lower bound can be maximized by setting \(\large {q}(Y_i;\alpha _{\small {Y_i}}) = \large {q}(Y_i;\alpha _{\small {Y_i}}^*)\).

Now, likewise, when updating the distribution of each variable \(X_j\) we only consider the updated distributions \(\{\large {q}(Y_i;\alpha _{\small {Y_i}})\}\) of mega-nodes attached to the extended factors on which \(X_j\) appears (i.e., \(\hat{f_i} \in \hat{{\mathcal {F}}}_{X_j}\)). That is

where \(\hat{F}(O, X_j, {\mathcal {Y}} |{\mathcal {M}})\) is the part of \(P({\mathcal {O}}, {\mathcal {X}},{\mathcal {Y}} |{\mathcal {M}})\) in the model with the factors associated with the node \(X_j\). This part involves only the mega-nodes’ qs in the Markov boundary of each \(X_j\), and the qs from the old iteration for the other variables \(X_k \ne X_j\) that are arguments to factors in which \(X_j\) appears.

At this point, we have paved the way for GEM-MP message-passing inference by transforming the inference task into an instance of an EM style approach often associated with learning tasks. The GEM-MP inference proceeds by iteratively sending two types of messages on the extended factor graph so as to compute the updated q distributions needed for the M-steps above. The \(E_q\) and \(M_q\) steps are alternated until converging to a local maximumFootnote 7 of \({\mathcal {F}}_{\small {{\mathcal {M}}}}(\large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}}), \large {q}({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}}))\). These messages are different from simply running the standard LBP algorithm, and they are formulated in the form of E (i.e., \(E_{q\small {({\mathcal {X}})}}\), \(E_{q\small {({\mathcal {Y}})}}\)) and M (i.e., \(M_{q\small {({\mathcal {Y}})}}\), \(M_{q\small {({\mathcal {X}})}}\)) steps outlined in Eqs. (21), (28), (22), and (31), where the E-steps can be computed through message passing procedures as outlined below:

-

\(E_{q\small {({\mathcal {X}})}}\)-step messages, \(\{\mu _{X_j \rightarrow \hat{f_i}} = \large {q}(X_j;\beta _{\small {X_j}})\}\), that are sent from variables \({\mathcal {X}}\) to factors \(\hat{{\mathcal {F}}}\) (as depicted in Fig. 3 (left)). The aim of sending these messages is to perform the GEM-MP’s \(M_{q\small {({\mathcal {Y}})}}\)-step in Eq. (21). That is, the setting of the distributions, \(\{\large {q}(X_j;\beta _{\small {X_j}})\}_{\small {\forall X_j \in {\mathcal {X}}}}\), are used for estimating the distributions, \(\{\large {q}(Y_i;\alpha _{\small {Y_i}})\}_{\small {\forall Y_i \in {\mathcal {Y}}}}\), that maximizes the lower bound on the log marginal-likelihood of Eq. (21). To do so, each variable \(X_j \in {\mathcal {X}}\) sends its current marginal probability \(\beta _{\small {X_j}}\) as an \(E_{q\small {({\mathcal {X}})}}\)-step message, \(\mu _{X_j \rightarrow \hat{f_i}} =\large {q}(X_j;\beta _{\small {X_j}})\), to its neighboring extended factors. Then, at the factors level, each extended factor \(\hat{f_i} \in \hat{{\mathcal {F}}}\) uses the relevant marginals from those received incoming messages of its argument variables, i.e., \(\{\large {q}(X_j;\beta _{\small {X_j}})\}_{\small {\forall X_j \in {\mathcal {X}}_{\hat{f_i}}}}\), to perform the computations of the \(E_{q\small {({\mathcal {X}})}}\)-step of Eq. (21). This implies updating the distribution \(\large {q}(Y_i;\alpha _{\small {Y_i}})\) of its mega-node \(Y_i\) by computing what we call the probabilistic generalized arc consistency (pGAC) (we will discuss pGAC in more detail in Sect. 4).

-

\(E_{q\small {({\mathcal {Y}})}}\)-step messages, \(\{\mu _{\hat{f_i} \rightarrow X_j} = \sum _{\small {Y_i:\forall y_k(X_j)}} \large {q}(Y_i;\alpha _{\small { Y_i}})\}\), that are sent from factors to variables (as depicted in Fig. 3 (right)). Sending these messages corresponds to the GEM-MP’s \(M_{q\small {({\mathcal {X}})}}\)-step in Eq. (22). Here, the approximation of the distributions, \(\{\large {q}(Y_i;\alpha _{\small {Y_i}})\}_{\small {\forall Y_i \in {\mathcal {Y}}}}\), obtained from the GEM-MP’s \(M_{q\small {({\mathcal {Y}})}}\)-step will be used to update the marginals, i.e., \(\{\large {q}(X_j;\beta _{\small {X_j}})\}_{\small {\forall X_j \in {\mathcal {X}}}}\), that maximizes the lower bound on the log marginal-likelihood in Eq. (22). Characteristically, each extended factor \(\hat{f_i} \in \hat{{\mathcal {F}}}\) sends a corresponding refinement of the pGAC distribution - that approximates the \(\large {q}(Y_i;\alpha _{\small { Y_i}})\) of its mega-node - as an \(E_{q\small {({\mathcal {Y}})}}\)-step message, \(\mu _{\hat{f_i} \rightarrow X_j} = \sum _{\small {Y_i:\forall y_k(X_j)}} \large {q}(Y_i;\alpha _{\small { Y_i}})\), to each of its argument variables, \(X_j \in {\mathcal {X}}_{\hat{f_i}}\). Now, at the variables level, each \(X_j \in {\mathcal {X}}\) uses the relevant refinement of pGAC distributions from those received incoming messages - which are the outgoing messages coming from its extended factors \(\hat{f_i} \in \hat{{\mathcal {F}}}_{X_j}\) - to perform the computations of the \(E_{q\small {({\mathcal {Y}})}}\)-step of Eq. (22). This implies updating its distribution \(\large {q}(X_j;\beta _{\small {X_j}})\) by summing these messages (as it will be discussed in more detail in Sect. 4).

Other work has empirically observed that asynchronous belief propagation scheduling often yields faster convergence than synchronous schemes (Elidan et al. 2006). In variational message passing schemes, the mathematical derivations lead to updates that are asynchronous in nature. In Sect. 4 we will derive a general update-rule for GEM-MP based on variational principles in more detail and we shall see it leads to an asynchronous scheduling of messages. However, messages can be passed in a structured form whereby variables \({\mathcal {X}}\) are able to send their \(E_{q\small {({\mathcal {X}})}}\)-step messages simultaneously to their factors (or mega-node variables \({\mathcal {Y}}\)). At the level of factors, the marginals are updated one at a time, then the factors send back \(E_{q\small {({\mathcal {Y}})}}\)-step messages simultaneously to their variables. Moreover, it should be noted that we do the asynchronous updating schedule between variables \({\mathcal {X}}\) and mega-nodes \({\mathcal {Y}}\) in a form that allows updates to potentially be computed in parallel. Thus the version of GEM-MP that we present here involves sending messages in parallel from mega-nodes to variables and variables to mega-nodes. Updates to the \(q\small {({\mathcal {X}}_j)}\)s approximations for variables \(X_j \in {\mathcal {X}}\) could be computed in parallel, and updates to the \(q\small {({\mathcal {Y}}_i)}\)s approximations for mega-nodes \(Y_i \in {\mathcal {Y}}\) could also be performed in parallel.

Theorem 1

(GEM-MP guarantees convergence) At each iteration of updating the marginals (i.e., variational parameters \({\mathcal {B}}_{\small {{\mathcal {X}}}}\)), GEM-MP increases monotonically the lower bound on the model evidence such that it never overshoots the global optimum or until converging naturally to some local optima.

Proof

Using Eq. (15) into Eq. (13c) implies that the maximization of the lower bound \({\mathcal {F}}_{\small {{\mathcal {M}}}}(\large {q}({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}}), \large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}}))\) is equivalent to the minimization of the Kullback–Leibler (\(\textit{KL}\)) divergence between \(\large {q}(\small {{\mathcal {X}}};{\mathcal {B}}_{\small {{\mathcal {X}}}})\) and \(\large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})\) and the true distribution, \(P({\mathcal {X}},{\mathcal {Y}}|{\mathcal {O}},{\mathcal {M}})\), over hidden variables:

Illustrating how each step of the GEM-MP algorithm is guaranteed to increase the lower bound on the log marginal-likelihood. In its “\(M_{q\small {({\mathcal {Y}})}}\)-step”, the variational distribution over hidden mega-node variables is maximized according to Eq. (21). Then, in its “\(M_{q\small {({\mathcal {X}})}}\)-step”, the variational distribution over hidden \({\mathcal {X}}\) variables is maximized according to Eq. (22)

Now assume that before and after a given iteration (t), we have \(\large {q}^{(t)}_{({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}})}\) and \(\large {q}^{(t+1)}_{({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}})}\) that denote the settings of \({\mathcal {B}}_{\small {{\mathcal {X}}}}\) respectively. Likewise for \(\large {q}^{(t)}_{({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})}\) and \(\large {q}^{(t+1)}_{({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})}\) with respect to \({\mathcal {T}}_{\small {{\mathcal {Y}}}}\), where one iteration is a run of GEM-MP “\(M_{q\small {({\mathcal {Y}})}}\)-step” followed by “\(M_{q\small {({\mathcal {X}})}}\)-step”. By construction, in the \(M_{q\small {({\mathcal {Y}})}}\)-step, \(\large {q}^{(t+1)}_{({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})}\) is chosen such that it maximizes \({\mathcal {F}}_{\small {{\mathcal {M}}}}(\large {q}^{(t)}_{({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}})},\large {q}^{(t+1)}_{({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})})\) given \(\large {q}^{(t)}_{({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}})}\). Then, in the \(M_{q\small {({\mathcal {X}})}}\)-step, \(\large {q}^{(t+1)}_{({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}})}\) is set by maximizing \({\mathcal {F}}_{\small {{\mathcal {M}}}}(\large {q}^{(t+1)}_{({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}})},\large {q}^{(t+1)}_{({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})})\) given \(\large {q}^{(t+1)}_{({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})}\), and we have (as shown in Fig. 4):

and similarly:

This implies that GEM-MP increases the lower bound monotonically.

Now since the exact log marginal-likelihood, \(\log \sum _{\small {{\mathcal {X}}},\small {{\mathcal {Y}}}} P({\mathcal {O}}, {\mathcal {X}},{\mathcal {Y}}|{\mathcal {M}})\), is a fixed quantity and the Kullback–Leibler divergence, \(\textit{KL} \ge 0\), is a non-negative quantity then this implies that GEM-MP never overshoots the global optimum of the variational free energy.

Since GEM-MP applies a variational mean-field approximation for \(\large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})\) and \(\large {q}({\mathcal {X}};{\mathcal {B}}_{\small {{\mathcal {X}}}})\) distributions [refer to Eqs. (24) and (23)] over both mega-nodes and variables nodes respectively, it inherits the guarantees of mean field to converge to a local minimum of the negative variational free energy free energy or KL divergence. \(\square \)

Note that the convergence behaviour of GEM-MP for inference task resembles the behaviour of the variational Bayesian expectation maximization approach proposed by Beal and Ghahramani (2003) for the Bayesian learning task. Both of them can be seen as a variational technique (forming a factorial approximation) that minimizes a free-energy-based function for estimating the marginal likelihood of the probabilistic models with hidden variables.

It is worth noting that when reaching the GEM-MP “\(M_{q\small {({\mathcal {Y}})}}\)-step”, one could select between a local or global approximation to distribution \(\large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})\). However, in this paper, we restricted ourselves to local approximations.Footnote 8 Furthermore, although GEM-MP represents a general template framework for applying variational inference to probabilistic graphical models, we concentrate on Markov logic models, where the variables will be ground atoms and the factors will be both hard and soft ground clauses (as will be explained in Sect. 4) and Ising models (as will be explained in Sect. 5).

4 GEM-MP general update rule for Markov logic

By substituting the local approximation for \(\large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})\) from \(M_{q\small {({\mathcal {Y}})}}\)-step into the \(M_{q\small {({\mathcal {X}})}}\)-step, we can synthesize update rules that tell us how to set the new marginal in terms of the old one. So, in practice the \(M_{q\small {({\mathcal {Y}})}}\)-step and the \(E_{q\small {({\mathcal {Y}})}}\)-step messages of GEM-MP can be expressed in the form of one set of messages (from atoms-to-atoms through clauses). This set of messages synthesizes a general update rule for GEM-MP, applicable to Markov logic. However, since the underlying factor graph often contains hard and soft clauses, then within the GEM-MP framework we will intentionally distinguish hard and soft clauses by using two variants of the general update rule (denoted as Hard-update-rule and Soft-update-rule) for tackling hard and soft clauses, respectively.

4.1 Hard update rule

For notational convenience, we explain the derivation of the hard update rule by considering untyped atoms; but extending it to the more general case is straightforward. Also, for clarity, we begin the derivation with the \(M_{q\small {({\mathcal {X}})}}\)-step rather than with the usual \(M_{q\small {({\mathcal {Y}})}}\)-step. So we assume that we have already constructed \(\large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})\). We also assume that all clauses are hard.

1. \(M_{q\small {({\mathcal {X}})}}\)-step: Recalling Sect. 3, our basic goal in this step is to use \(\large {q}({\mathcal {Y}};{\mathcal {T}}_{\small {{\mathcal {Y}}}})\) to estimate the marginals (i.e., parameters) \({\mathcal {B}}_{\small {{\mathcal {X}}}}\) that maximize the expected log-likelihood such that each \(\beta _{\small {X_j}} \in {\mathcal {B}}_{\small {{\mathcal {X}}}}\) is a proper probability distribution. Thus we have an optimization problem of the form:Footnote 9

To perform this optimization, we first express it as the Lagrangian function \({\varLambda }({\mathcal {B}}_{\small {{\mathcal {X}}}})\):

where \(T({\mathcal {B}}_{\small {{\mathcal {X}}}})\) is a constraint that ensures the marginals, \({\mathcal {B}}_{\small {{\mathcal {X}}}}\), are sound probability distributions. This constraint can be simply represented as follows:

where \(\lambda _{\small {X_j}}\) are Lagrange multipliers that allow a penalty if the marginal distribution \(\beta _{\small {X_j}}\) does not marginalize to exactly one.

Now, let us turn to the derivation of the expected log-likelihood. We have that:

Based on the (hidden) variables \(X_j \in {\mathcal {X}}\) and mega-nodes \(Y_i \in {\mathcal {Y}}\), we can then decouple the distribution, \(\log P({\mathcal {O}}, {\mathcal {Y}} | {\mathcal {X}}, {\mathcal {M}})\), into individual distributions corresponding to hard ground clauses, and we have:Footnote 10

where \(P(Y_i |{\mathcal {X}}, {\mathcal {M}})\) is the probability of randomly choosing a valid local entry in the mega-node \(Y_i\) given the marginal probabilities of the ground atoms, \({\mathcal {B}}_{\small {{\mathcal {X}}}}\). Now, we can proceed by decomposing \(P(Y_i |{\mathcal {X}}, {\mathcal {M}})\) into individual marginals of ground atoms that possess consistent truth values in the valid local entries of \(Y_i\). That is:

where \(\beta _{\small {X_j}} (Y_i(X_j))\) is the marginal probability of ground atom \(X_j\) at its consistent values with \(Y_i\).

It is important to note that the decomposition in Eq. (40) is a mean field approximation for \(P(Y_i |{\mathcal {X}}, {\mathcal {M}})\). It implies that the probability of valid local entries of \(Y_i\) for the ground clause \(f_i\) can be computed using individual marginals of the variables in the scope of \(f_i\) at their instantiations over such local entries. For instance, suppose that \(f_i(X_1,X_2,X_3)\) is defined over three Boolean variables \(\{X_1,X_2,X_3 \}\) with marginal probabilities \(\{\beta _{\small {X_1}},\beta _{\small {X_2}},\beta _{\small {X_3}}\}\). Now let (0, 0, 1) be a valid local entry in the mega-node \(Y_i\) of \(f_i\). To compute the probability \(P(0,0,1 | \beta _{\small {X_1}},\beta _{\small {X_2}},\beta _{\small {X_3}}, {\mathcal {M}})\), we can simply multiply the marginals of the three variables at their instantiations over this valid local entry as:

Where \(\beta ^{-}_{\small {X_1}}\), \(\beta ^{-}_{\small {X_2}}\) and \(\beta ^{+}_{\small {X_3}}\) are the marginal probabilities of \(X_1\), \(X_2\), and \(X_3\) at values 0, 0, and 1 respectively.

We apply Eq. (40) in Eq. (39), convert logarithms of products into sums of logarithms, exchange summations, and handle each hard ground clause \(f^h_i \in {\mathcal {F}}^h\) separately in a sum.

Subsequently we take the partial derivative of the Lagrangian function in Eq. (36) with respect to an individual ground atom positive marginal \(\beta ^+_{X_j}\) and equate it to zero:Footnote 11

where \(\sum _{\small {Y_i:\forall y_k(X_j)=``+''}} \large {q}(Y_i;\alpha _{\small {Y_i}})\) is the \(E_{q\small {({\mathcal {Y}})}}\)-step message that \(X_j\) will receive from each hard ground clause (\(f^h_i \in {\mathcal {F}}_{X_j}^h\)) conveying what it believes about \(X_j\)’s positive marginal. Each \(E_{q\small {({\mathcal {Y}})}}\)-step message is computed by adding a term for those valid local entries \((Y_i:\forall y_k(X_j)=``+'')\) which instantiate the current hard ground clause using the positive value \(``+''\) for ground atom \(X_j\).

Thus the sum of the \(E_{q\small {({\mathcal {Y}})}}\)-step messages that ground atom \(X_j\) will receive from its neighboring hard ground clauses represents a weight (i.e., \(\textit{Weight}^+_{X_j}\)) used to update its positive marginal.

Furthermore an analogous expression can be applied for a negative marginal \(\beta ^-_{X_j}\):

Finally we now move to solving \(\lambda _{\small {X_j}}\) as follows:

which shows that \(\lambda _{\small {X_j}}\) serves as a normalizing constant that converts such weights (i.e., \(\textit{Weight}^+_{X_j}\), and \(\textit{Weight}^-_{X_j}\)) into a sound marginal probability (i.e. \(\beta _{\small {X_j}} = \left[ \beta ^-_{\small {X_j}} , \beta ^+_{\small {X_j}}\right] \)).

Now to obtain the completed hard update rule, what remains is the \(M_{q\small {({\mathcal {Y}})}}\)-step, through which we need to substitute the distribution \(\large {q}(Y_i;\alpha _{\small {Y_i}})\) in Eqs. (41) and (42).

2. \(M_{q\small {({\mathcal {Y}})}}\)-step: The goal here is to produce the distribution \(\large {q}(Y_i;\alpha _{\small {Y_i}})\) by using the current setting of marginals \({\mathcal {B}}_{\small {{\mathcal {X}}}}\). However the summation \(\sum _{\small {Y_i:\forall y_k(X_j)=``-''}}\) involves enumerating all the valid local entries for each \(Y_i\), which is inefficient. Instead we approximate the distribution \(\sum _{\small {Y_i:\forall y_k(X_j)=``-''}} \large {q}(Y_i;\alpha _{\small {Y_i}})\) for each hard ground clause \(f^h_i \in {\mathcal {F}}^h\) by using a probability \(1-\xi (X_j,f^h_i)\), which we call the probabilistic generalized arc consistency (pGAC). At this point, let us pause to elaborate more on pGAC in the next subsection.

4.1.1 Note on the connection between pGAC and variational inference

According to the concept of generalized arc consistency, a necessary (but not sufficient) condition for a ground atom \(X_j\) to be assigned a value \(d \in \{+,-\}\), is for every other ground atom appearing in the ground clause \(f_i\) to be individually consistent in the support of this assignment, i.e., \(X_j=d\). Without loss of generality, suppose that \(X_j\) appears positively in \(f_i\): there is a probability that \(X_j=d\) is not generalized arc consistent with respect to \(f_i\) when those other ground atoms appearing in \(f_i\) are individually inconsistent with this assignment since \(X_j=d\) can belong to an invalid local entry of \(f_i\). This means that there is a probability that \(X_j=d\) is unsatisfiable with respect to \(f_i\) when all other ground atoms appearing in \(f_i\) are set unsatisfyingly. We use \(\xi (X_j,f_i)\) to denote this probability, we assume Independence and approximate it as:

As indicated in Eq. (44), \(\xi (X_j,f_i)\) is computed by iterating through all the other ground atoms in clause \(f_i\) and consulting their marginals toward the opposite truth value of their appearance in \(f_i\). In other words, the \(\xi (X_j,f_i)\) forms a product representing the probability that, except \(X_j\), all other ground atoms \({\mathcal {X}}_{f_i} {\setminus } \{X_j\}\) in \(f_i\) taking on particular values that constitute invalid local entries to \(f_i\). Such invalid local entries support \(X_j\) unsatisfying \(f_i\) and can be approximated based on the marginal distributions of those ground atoms (i.e., \({\mathcal {X}}_{f_i} \setminus \{X_j\}\)) at these particular values. It should be noted that \(f_i\) has those marginal distributions from the incoming \(E_{q\small {({\mathcal {X}})}}\)-step messages that are sent from its argument ground atoms \({\mathcal {X}}_{f_i}\) during the GEM-MP’s \(M_{q\small {({\mathcal {Y}})}}\)-step.

Hence, if \(\xi (X_j,f_i)\) is the probability of \(X_j=d\) unsatisfying \(f_i\) then \(1-\xi (X_j,f_i)\) is directly the probability of \(X_j=d\) satisfying the ground clause \(f_i\). It also represents the probability that \(X_j=d\) is GAC with respect to \(f_i\) because the event of \(X_j=d\) satisfying \(f_i\) implies that it must be GAC to \(f_i\). This interpretation entails a form of generalized arc consistency, adapted to CNF, in a probabilistic sense; we call it a Probabilistic Generalized Arc Consistency.

Definition 2

(Probabilistic generalized arc consistency (pGAC))

Given a ground clause \(f_i \in {\mathcal {F}}\) defined over ground atoms \({\mathcal {X}}_{f_i}\), and for every \(X_j \in {\mathcal {X}}_{f_i}\), let \(D_{X_j}=\{+,-\}\) be the domain of \(X_j\). A ground atom \(X_j\) assigned a truth value \(d \in D_{X_j}\) is said to be probabilistically generalized arc consistent (pGAC) to ground clause \(f_i\) if the probability of \(X_j=d\) belonging to a valid local entry of \(f_i\) is non-zero. That is to say, if there is a non-zero probability that \(X_j=d\) is GAC to \(f_i\). The pGAC probability of \(X_j=d\) can be approximated as:

The definition of the traditional GAC in Sect. 2 corresponds to the particular case of pGAC where \(\xi (X_j,f_i)=0\), meaning that the probability of \(X_j=d\) being GAC to \(f_i\) definitely occurs, and \(\xi (X_j,f_i)=1\) when it is never GAC to \(f_i\). Based on that, if \(f_i\) contains \(X_j\) positively then the pGAC probability of \(X_j=+\) equals 1 because it is always GAC to \(f_i\). In an analogous way, the pGAC probability is 1 for \(X_j=-\) when \(f_i\) contains \(X_j\) negatively.

From a probabilistic perspective, the pGAC probability of \(X_j=d\) represents the probability that \(X_j=d\) is involved in a valid local entry of \(f_i\). This is similar to the computation of the solution probability of \(X_j=d\) by using the probabilistic arc consistency (pAC) (presented by Horsch and Havens 2000, and summarized in Sect. 2). However, it should be noted that our pGAC applies mean-field approximation. This is because when computing \(\xi (X_j,f_i)\), as defined in Eq. (44), for each ground atom \(X_j \in {\mathcal {X}}_{f_i}\), we use the marginal probabilities of other ground atoms \(X_k \in {\mathcal {X}}_{f_i} {\setminus } \{X_j\}\) set unsatisfying in \(f_i\). Thus the main difference between our pGAC and pAC (Horsch and Havens 2000) appears in the usage of mean-field and BP for computing the probability that \(X_j=d\) belongs to valid local entry of \(f_i\) in pGAC and pAC, respectively. Furthermore, it should be noted that pAC is restricted to binary constraints whilst pGAC is additionally applicable to non-binary ones.

From the point of view of computational complexity, \(\xi (X_j,f_i)\) requires only linear computational time in the arity of the ground clause (as will be shown in Proposition 3). Thus, pGAC is an efficient form of GAC compared to pAC. In addition, pGAC guarantees the convergence of mean-field whereas pAC inherits the possibility of non-convergence from BP.

From a statistical perspective, the pGAC probability of \(X_j=+\) is a closed form approximation for a sample from the valid local entries of \(f_i\) that involve \(X_j=+\). Thus we have that:

And similarly the pGAC probability for \(X_j=-\):

Based on Eqs. (46) and (47), we can use pGAC for computing the two components of \(E_{q\small {({\mathcal {Y}})}}\)-step message, in Eqs. (41)and (42), that \(f_i\) sends to \(X_j\) as follows:

-

\([1,1-\xi (X_j,f_i)]\) if \(f_i\) contains \(X_j\) positively.

-

\([1-\xi (X_j,f_i),1]\) if \(f_i\) contains \(X_j\) negatively.

Note that computing the components of \(f_i\)’s \(E_{q\small {({\mathcal {Y}})}}\)-step message in this way above requires having in hand the marginals of all other ground atoms, \(X_k \in {\mathcal {X}}_{f_i} {\setminus } \{X_j\}\). Thus, one of the best choices is to simultaneously passing the \(E_{q\small {({\mathcal {X}})}}\)-step messages – which convey the marginals – from ground atoms \({\mathcal {X}}_{f_i}\) to ground clause \(f_i\). Additionally at \(f_i\)’s level we can sequentially update the marginals as: obtain the marginal of the first ground atom then use its new marginal in the updating process of the second atom’s marginal, then use the first and second atoms’ new marginals in the updating process of the third atom’s marginal, and so on. This sequential updating allows GEM-MP to use the latest available information of the marginals through the updating process. In addition, doing so enables a single update rule that performs both the E- and M- steps at the same time, by directly representing the \(M_{q\small {({\mathcal {Y}})}}\)-step within the rule we derived for the \(M_{q\small {({\mathcal {X}})}}\)-step.

4.1.2 Using pGAC in the derivation of the hard update rule

We now continue the derivation of the hard update rule by using pGAC to address the task of producing \(\sum _{\small {Y_i:\forall y_k(X_j)}} \large {q}(Y_i;\alpha _{\small {Y_i}})\) in Eqs. (41) and (42) as follows:

where in Eq. (48b) we first separate the summation into \(X_j\)’s positive and negative hard ground clauses to consider the two distinct situations of whether \(X_j\) appears as a positive ground atom versus the other situation where it appears as a negative ground atom. Further in Eq. (48c) in the first positive summation, we replaced the inner summation with the constant 1 (because all other atoms will be generalized arc consistent with \(X_j=``+''\) for the hard clauses that have a positive appearance of \(X_j\) - as explained in Sect. 4.1.1).

The end result, as in Eq. (48d), is the \(\textit{Weight}^+_{X_j}\) of ground atom \(X_j\) computed as the summation of all hard ground clauses that include \(X_j\) minus the summation of pGAC of hard ground clauses that involve \(X_j\) as a negative atom.

The interpretation of \(\textit{Weight}^+_{X_j}\) can be understood as reducing the positive probability of \(X_j\) according to the expectation of the probability that \(X_j\) is needed by its negative hard ground clauses. Such reductions are taken from a constant that represents the overall number of hard ground clauses that involve \(X_j\) (i.e. \(\left| {\mathcal {F}}_{X_j}^h\right| \)). Similarly we can obtain:

where \(\textit{Weight}^-_{X_j}\) has an analogous interpretation of \(\textit{Weight}^+_{X_j}\) for the negative probability of \(X_j\).

4.2 Soft update rule

To derive the update rule for soft ground clauses, what we need to do is to soften some restrictions on the weight parts (i.e. \(\textit{Weight}^+_{X_j}\), \(\textit{Weight}^-_{X_j}\)) of the hard update rule. This encompasses modifying the distributions, \(\large {q}(Y_i;\alpha _{\small {Y_i}})\), of hard ground clauses for soft ground clauses by applying two consecutive steps: softening and embedding.

For clarity, let us recall the example of the extended factor graph shown in Fig. 2 (right). In the softening step, we define the variational parameters \(\alpha _i\), of the distributions \(\large {q}(Y_i;\alpha _{\small {Y_i}})\), that are appended to the soft clauses to be different from those appended to hard clauses in a way that renders them suitable to the semantics of soft ground clauses. That is, we discriminate variational parameters of distributions \(\large {q}(Y_i;\alpha _{\small {Y_i}})\) for hard and soft ground clauses respectively as follows: