Abstract

The desire to support student learning and professional development, in combination with accreditation requirements, necessitates evaluation of the learning environment of educational programs. The Health Education Learning Environment Survey (HELES) is a recently developed global measure of the learning environment for health professions programs. This paper provides evidence of the applicability of the HELES for evaluating the learning environment across four health professions programs: medicine, nursing, occupational therapy and pharmaceutical sciences. Two consecutive years of HELES data were collected from each program at a single university (year 1 = 552 students; year 2 = 745 students) using an anonymous online survey. Reliability analyses across programs and administration years supported the reliability of the tool. Two-way factorial ANOVAs with program and administration year as the independent variables indicated statistically and practically significant differences across programs for four of the seven scales. Overall, these results support the use of the HELES to evaluate student perceptions of the learning environment across multiple health professions programs.


Introduction and background

The learning environment (LE) has been defined as the psychological, social, cultural and physical context in which learning occurs (Rusticus et al. 2019; Shochet et al. 2013). Moreover, it is co-created through the personal motivations and emotions of students as they engage in their learning and navigate interactions with their peers, faculty and other individuals within the environment, all taking place within particular physical settings amid various cultural and administrative norms (e.g., school policies). High-quality LEs are increasingly recognized as vital for health professions education and student success. Because the LE is a complex psycho-social-physical construct, the particular set of features or components that make it up and support measures of quality, such as program climate, the variety of learning opportunities, and available facilities, remains inconsistent and contested (Gruppen et al. 2019). Amongst LE researchers, this lack of consensus and the absence of theoretical frameworks for examining LE quality have led to the development of context-specific tools with limited uniformity and generalizability (Gruppen et al. 2019; Schönrock-Adema et al. 2012). Nevertheless, LE researchers consistently recognize its critical importance for student learning and development and the need for tools that adequately measure LE quality. Given the rigour and complexity of health professions programs today, we argue that the need to understand the effects of the LE on the student experience, student learning, and professional development has never been greater.

Examining the LE in clinical teaching settings in health care professions programs is particularly insightful. In addition to in-person or virtual classroom learning, much of students’ learning occurs in experiential settings, directly caring for people with health care needs of varying severity. Here, the stakes are high, and it is understandable that this setting affects the learning climate and student progress. Additionally, this environment is severely stretched for a number of reasons, including an over-emphasis on the biomedical model (Feo and Kitson 2016; Forber et al. 2015), a focus on completion of tasks (Ironside et al. 2014), high acuity, heavy workload, unpredictability of everyday practice (Lemaire et al. 2019), corporatization of, and neoliberal approaches to, healthcare (McGregor 2001), and unexpected events such as the current Covid-19 pandemic. Furthermore, the hierarchical structures embedded in health care and health care education programs (Hutchinson and Jackson 2015; Jackson et al. 2011) can greatly influence students’ ability to learn in these settings and can unexpectedly place them in vulnerable positions if issues arise. Of particular relevance in the nursing, medical and, to a much lesser extent, pharmacy programs is the abundance of literature outlining the high prevalence of mistreatment that students witness or experience (Birks et al. 2018; Clark et al. 2012; Jackson et al. 2011; Knapp et al. 2014; Seibel and Fehr 2018; Tee et al. 2016; Timm 2014; Vogel 2016), with the clinical setting cited as the most common place that it occurs in nursing education (Budden et al. 2017; Sidhu and Park 2018).

Given this complexity, it is not surprising that the evaluation of the quality of the LE in health professions programs has garnered much attention around the world, with studies being undertaken in North and South America, Europe, Africa and Asia (e.g., Aktas and Karabulut 2016; Belayachi and Razine 2015; Bergjan and Hertel 2013; Buhari et al. 2014; Kim et al. 2016; Veerapen and McAleer 2010). Importantly, these evaluations have tended to focus primarily on medical and nursing education programs, with comparatively little research being conducted on other health professions programs, such as occupational therapy or pharmaceutical sciences.

The need to examine and evaluate LE quality in health professions programs is often driven by leadership for a range of reasons, including the desire for greater context-specific understanding about what is and is not working within programs and in supporting student progress (Regehr 2010). By identifying elements within the LE that can support or hinder student learning, potential problems within the environment can be identified and changes can be implemented to facilitate more positive environments. Furthermore, elements that are identified as key strengths can continue to be supported and fostered. Accreditation requirements also hold health professions education programs accountable for the formation and maintenance of positive LEs and for the ongoing evaluation of the LE as part of continuous quality improvement efforts (BCCNP 2018; CACMS 2018; CAOT 2016; CCAPP 2018). High-quality LEs have been linked to many positive outcomes, such as greater student success, satisfaction and professional development, and less student stress, marginalization, exhaustion and burnout (e.g., Branch 2000; Cruess et al. 2014; Rusticus et al. 2014; Suchman et al. 2004).

A number of instruments, as noted earlier, have been developed to measure LE quality (Gruppen et al. 2019; Miles et al. 2012; Schönrock-Adema et al. 2012). Of those, the Dundee Ready Education Environment Measure (DREEM; Roff et al. 1997) appears to be the most common measure used worldwide in evaluations of the undergraduate learning environment. The DREEM has been used across a number of groups and settings, and for a variety of evaluation-related purposes, including comparison of different groups, comparison of the same group under different conditions, examination of the relationship between the LE and program outcomes such as student achievement, and continuous quality improvement. The limitations of the DREEM include cultural bias, the use of unfamiliar words in survey items, and a lack of uniformity and clarity about the best way to analyse and report DREEM data (Miles et al. 2012). Further, the DREEM has been criticized for a lack of sufficient validity evidence (Colbert-Getz et al. 2014) and theoretical foundation (Schönrock-Adema et al. 2012). Given the importance of the LE for students’ progress and educational institutions more broadly, researchers (Colbert-Getz et al. 2014; Schönrock-Adema et al. 2012) have suggested that well-validated and theoretically informed measures of the LE are needed to provide reliable and valid assessments of the environment for research, evaluation, and continuous quality-improvement purposes.

In response to this assertion, the Health Education Learning Environment Survey (HELES) was recently developed and preliminarily validated as a global measure of the LE for health professions students (Rusticus et al. 2019). HELES items were developed to reflect Moos’s learning environment framework and its three dimensions of personal development, relationships, and system maintenance and change (Insel and Moos 1974; Moos 1973). Briefly, these dimensions reflect a student’s personal motivation within the environment, the nature and quality of relationships with peers and faculty/staff, and the broader institutional setting, respectively. Initial validity evidence provided for this tool, using a sample of medical students, supported its factor structure and reliability (Rusticus et al. 2019). While the HELES was initially intended for use with undergraduate medical students, it was broadened during its development, in collaboration with other health professions (nursing, occupational therapy, pharmaceutical sciences), for wider applicability (Rusticus et al. 2019). Having a tool that is relevant to multiple health professions programs acknowledges the similarities and shared concerns regarding the LE across programs and can foster increased collaborations among programs to share their experiences, insights and challenges regarding program improvements.

To date, the HELES has only been administered to medical students and has not been used in other health professions programs. The purpose of the present pilot study was to administer the HELES across four health professions programs at a single Canadian university (undergraduate medicine, nursing, occupational therapy, and pharmaceutical sciences) to assess its reliability across the four different programs. Similarities and differences in student perceptions of the learning environment across programs were also explored. Although all students attended the same university, each program has its own school-specific learning and professional culture, as well as unique program-level learning experiences, all of which could result in differing perspectives of the learning environment. Demonstrating that the HELES can reliably assess student perceptions of the learning environment, and examining what students in each of these programs identify as the strengths and weaknesses of their programs, can promote interprofessional collaborations regarding program improvements and offer suggestions for enabling more positive LEs.

Methods

Context

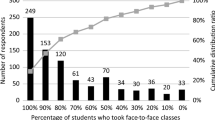

All four health professions programs participating in this study were from the same university in Western Canada. The undergraduate medical program (MED) is a fully distributed, 4-year program in which students complete their training at either the main campus or one of three smaller regional sites. The program admits 288 students annually, with approximately 1152 students in the program at any one time. The first 2 years of the program consist of classroom and laboratory-based training, with a focus on case-based learning. The second 2 years consist of training in a variety of hospital and community-based clinical settings. The nursing (NURS) program is a 20-month accelerated undergraduate program, located at the main campus, which integrates clinical practice, classroom and laboratory learning experiences throughout the entire program. The program admits 120 students per year, with up to 240 students in the program at any one time. The occupational therapy (OT) program is a 2-year graduate program at the main campus, and students are allocated various clinical placements throughout these 2 years. The program admits approximately 51 to 56 students per year, with just over 100 students in the program at any one time. The pharmaceutical sciences (PS) program is a new entry-to-practice Doctor of Pharmacy (PharmD) program comprising 2 years of prerequisite sciences followed by a 4-year pharmacy program. The program admits 224 students per year, with a maximum enrolment of 896 students at any one time. The first three program years include classroom-based courses and summer experiential practicums, and the fourth year involves placements in various practice settings. At the time of the study, the PharmD program was still in the early stages of implementation and evaluation.

Participants

All students in each of the four health professions education programs were invited to participate in this study. Two consecutive years of data were collected for each program. In year one of the study, 552 students completed the survey: MED = 347 (30% response rate); NURS = 36 (30% response rate); OT = 76 (85% response rate); PS = 93 (21% response rate). In year two of the study, 745 students completed the survey: MED = 463 (40% response rate); NURS = 22 (18% response rate); OT = 66 (73% response rate); PS = 194 (44% response rate). In year one of the study, only first- and second-year PS students were invited to participate while, in the second year, all four years of PS students received invitations to participate.
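Each response rate above is the number of respondents divided by the number of invited students. The Python sketch below checks the reported yearly totals and reproduces two of the rates under stated assumptions: it assumes the MED denominator is the approximate enrolment of 1152 (288 students × 4 years) given under Context, and that the year-one PS denominator is the two invited cohorts of 224 students each. Denominators for the remaining rates are not reported, so none are assumed here.

```python
# Respondent counts per program, as reported in the Participants section.
RESPONDENTS = {
    "year1": {"MED": 347, "NURS": 36, "OT": 76, "PS": 93},
    "year2": {"MED": 463, "NURS": 22, "OT": 66, "PS": 194},
}

# ASSUMED denominators (derived from the Context section, not stated with the rates):
MED_INVITED = 288 * 4        # ~1152 MED students, all four years invited
PS_INVITED_YEAR1 = 224 * 2   # only first- and second-year PS students invited in year one


def total_respondents(counts):
    """Sum respondents across the four programs for one administration year."""
    return sum(counts.values())


def response_rate(n_respondents, n_invited):
    """Response rate as a percentage of invited students."""
    return 100.0 * n_respondents / n_invited


for year, counts in RESPONDENTS.items():
    print(year, total_respondents(counts))
print("MED year 1 %:", round(response_rate(RESPONDENTS["year1"]["MED"], MED_INVITED)))
print("MED year 2 %:", round(response_rate(RESPONDENTS["year2"]["MED"], MED_INVITED)))
print("PS year 1 %:", round(response_rate(RESPONDENTS["year1"]["PS"], PS_INVITED_YEAR1)))
```

Running it prints totals of 552 and 745 and rounded rates of 30%, 40% and 21%, matching the figures reported above.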

Measure

All participants completed the 35-item Health Education Learning Environment Survey (HELES; Rusticus et al. 2019). The HELES consists of six subscales, two within each of the three broad LE domains of personal development, relationships and school culture (Insel and Moos 1974; Moos 1973; Rusticus et al. 2019). See Fig. 1 for an overview of the structure of the HELES, with an example item for each subscale. Within the personal development dimension are the Work-Life Balance and Clinical Skill Development subscales. The Work-Life Balance subscale (7 items) assesses perceptions of workload and stress; three of these items are reverse scored. The Clinical Skill Development subscale (4 items) assesses perceived opportunities to work with patients and to learn and practice clinical skills. Within the relationships dimension are the Peer Relationships and Faculty Relationships subscales. The Peer Relationships subscale (4 items) assesses the nature and degree of support from peers, whereas the Faculty Relationships subscale (8 items) assesses the nature and degree of support from faculty and clinical staff. Finally, within the school culture dimension are the Expectations and Educational Setting and Resources subscales. The Expectations subscale (4 items) assesses self and perceived faculty awareness of student abilities and expectations. The Educational Setting and Resources subscale (8 items) assesses perceptions of the overall school climate, including aspects of the physical environment, perceptions of diversity and available resources. The number of items within each scale was ultimately determined through pilot testing and factor analysis in the initial development of the tool with medical students (Rusticus et al. 2019). Each item is responded to on a 5-point scale ranging from 1 (Strongly disagree) to 5 (Strongly agree). All items are also averaged to yield a total learning environment score. For each of the subscales and the total score, a higher score indicates more positive perceptions of the learning environment.
Students were also asked to complete two additional open-ended questions about how the learning environment supported and hindered their learning. Responses to these two questions were not included in this study because they are not intended as a central element of the HELES.
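The scoring rules described above (reverse scoring on the 1 to 5 scale, subscale means, and an overall mean of all items) can be sketched in Python as follows. This is a minimal illustration, not the published scoring syntax: the item identifiers (`wlb1` to `wlb7` and so on) and the choice of which three Work-Life Balance items are reversed are hypothetical placeholders, and the sketch assumes reverse-scored items are recoded before entering both the subscale and the total score.

```python
from statistics import mean

# HYPOTHETICAL item identifiers: they only mirror the published subscale sizes
# (7 + 4 + 4 + 8 + 4 + 8 = 35 items); they are not the real HELES item numbers.
SUBSCALES = {
    "work_life_balance": [f"wlb{i}" for i in range(1, 8)],
    "clinical_skill_development": [f"csd{i}" for i in range(1, 5)],
    "peer_relationships": [f"peer{i}" for i in range(1, 5)],
    "faculty_relationships": [f"fac{i}" for i in range(1, 9)],
    "expectations": [f"exp{i}" for i in range(1, 5)],
    "educational_setting_resources": [f"esr{i}" for i in range(1, 9)],
}

# HYPOTHETICAL: which three Work-Life Balance items are reverse scored.
REVERSED = {"wlb2", "wlb4", "wlb6"}
SCALE_MIN, SCALE_MAX = 1, 5  # 1 = Strongly disagree, 5 = Strongly agree


def recode(item, value):
    """Reverse-score negatively worded items (1<->5, 2<->4, 3 stays 3)."""
    if not SCALE_MIN <= value <= SCALE_MAX:
        raise ValueError(f"{item}: response {value} is outside 1-5")
    return SCALE_MIN + SCALE_MAX - value if item in REVERSED else value


def score(responses):
    """Return each subscale mean plus a total score (mean of all 35 recoded items).

    Higher scores indicate more positive perceptions of the learning environment.
    Assumes a complete response set; missing-data handling is not shown.
    """
    recoded = {item: recode(item, value) for item, value in responses.items()}
    scores = {name: mean(recoded[i] for i in items) for name, items in SUBSCALES.items()}
    all_items = [i for items in SUBSCALES.values() for i in items]
    scores["total"] = mean(recoded[i] for i in all_items)
    return scores


# Example: a respondent who answers 4 ("Agree") to every item.
answers = {item: 4 for items in SUBSCALES.values() for item in items}
print(score(answers))
```

In this example, the respondent scores 4 on every subscale without reversed items but about 3.14 (22/7) on Work-Life Balance, because the three reversed items are recoded to 2.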

Procedure

After receiving ethics approval from the university’s Behavioral Research Ethics Board, the HELES was administered to each of the four health professions programs separately over the 2 years of the study using a secure online survey platform. For year one of the study, all surveys were administered in the spring/summer of 2017, towards the end of the students’ current year of training. For year two, surveys were administered in the spring/summer of 2018. Within each program, the survey remained open for approximately 3 weeks, with at least one reminder to participate sent during this time period. Participation in this study was anonymous and voluntary.

Analysis

Data from each program, for each of the two administration years, were transmitted to the first author, who merged the data into a single data set for analysis. First, a reliability analysis, split by program and administration year, was conducted to assess the consistency of the HELES across the programs. Second, a series of two-way analyses of variance (ANOVAs), with administration year and program as the independent variables, was conducted for each subscale and the total HELES score. Tukey post-hoc tests were conducted for the program variable. By conducting two-way ANOVAs, we were able to examine differences between programs on the HELES and also to assess whether perceptions of the learning environment differed across administration years. Effect sizes were measured using partial eta-squared values, interpreted as small = 0.01, medium = 0.06 and large = 0.14 (Cohen 1969). In line with Kirk (1996), results needed to be both statistically significant and achieve at least a medium effect size to be flagged as a practically meaningful difference.
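The decision rule above can be written out explicitly. The Python sketch below computes partial eta-squared from sums of squares (SS_effect / (SS_effect + SS_error)), labels it with the Cohen benchmarks quoted in the text, and flags a difference only when it is both statistically significant and at least medium in size. The significance level of 0.05 is an assumption, since the alpha level is not stated, and the sums of squares and p-value in the example are made-up illustrative values, not results from this study.

```python
def partial_eta_squared(ss_effect, ss_error):
    """Partial eta-squared = SS_effect / (SS_effect + SS_error)."""
    return ss_effect / (ss_effect + ss_error)


def cohen_label(eta_sq):
    """Label an eta-squared value with Cohen's benchmarks (0.01, 0.06, 0.14)."""
    if eta_sq >= 0.14:
        return "large"
    if eta_sq >= 0.06:
        return "medium"
    if eta_sq >= 0.01:
        return "small"
    return "below small"


def practically_meaningful(p_value, eta_sq, alpha=0.05, min_effect=0.06):
    """Flag a program difference only if it is significant AND at least medium-sized.

    alpha=0.05 is an ASSUMED significance level; the text does not state it.
    """
    return p_value < alpha and eta_sq >= min_effect


# Illustrative (made-up) sums of squares and p-value for one subscale.
eta = partial_eta_squared(ss_effect=15.0, ss_error=85.0)
print(round(eta, 2), cohen_label(eta), practically_meaningful(0.001, eta))
```

With these made-up values the sketch prints `0.15 large True`; a significant result with a partial eta-squared of 0.03 would be statistically but not practically significant under this rule.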

Results

Table 1 presents the reliabilities for the HELES by program and administration year. A coefficient alpha of 0.70 is considered the minimally accepted level of reliability (Furr and Bacharach 2014). For year 1, alphas ranged from 0.55 to 0.93, with all but three being above 0.70 (the exceptions were: OT, Work-Life Balance, 0.69; NURS, Expectations, 0.55; PS, Peer Relationships, 0.64). For year 2, alphas ranged from 0.68 to 0.96, with all but one being above 0.70 (the exception was: NURS, Peer Relationships, 0.68).
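For readers less familiar with coefficient alpha, the sketch below computes it from a respondents-by-items matrix with the standard formula alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores) and compares it with the 0.70 threshold used above. It is an illustration in plain Python, not the software used in the study (which the text does not name); the three-respondent, two-item data set is invented solely to show a value falling below 0.70.

```python
from statistics import pvariance

MIN_ALPHA = 0.70  # minimally accepted reliability cited in the text (Furr and Bacharach 2014)


def cronbach_alpha(rows):
    """Coefficient (Cronbach's) alpha.

    rows: one list of k item scores per respondent (complete cases only).
    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores).
    Population variances are used throughout; the n / (n - 1) correction would
    cancel in the ratio, so sample variances give the same alpha.
    """
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    columns = list(zip(*rows))  # one tuple of scores per item
    item_variance = sum(pvariance(col) for col in columns)
    total_variance = pvariance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_variance / total_variance)


# Invented example: three respondents answering two items on the 1-5 scale.
example = [[1, 2], [2, 1], [3, 3]]
alpha = cronbach_alpha(example)
print(round(alpha, 3), "acceptable" if alpha >= MIN_ALPHA else "below 0.70")
```

The invented data give alpha = 2/3 (printed as 0.667), which would be flagged as below the 0.70 cut-off, as the OT, NURS and PS coefficients listed above were.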

Table 2 presents the means and standard deviations for the HELES by administration year and program. Table 3 presents the results of the two-way ANOVAs. As seen in Table 3, statistically significant main effects of program were found for all the subscales and the total HELES score. Main effects for administration year and interactions between program and year were statistically nonsignificant for all scales. When statistical significance was combined with the requirement of at least a medium effect size to represent practical program differences, two of the subscales (Work-Life Balance and Educational Setting and Resources) and the total HELES score met these criteria. One additional subscale (Peer Relationships) fell just below the effect-size cut-off. Because the Peer Relationships subscale violated the homogeneity of variance assumption, and because administration year was not a significant variable, we conducted a follow-up one-way ANOVA for this scale with program as the only independent variable so that we could use a Games-Howell post-hoc test, which does not assume equal variances. This analysis resulted in a statistically significant main effect for program with an eta-squared effect size of 0.06; therefore, the post-hoc comparisons of the programs are discussed below for this subscale. Homogeneity of variance assumption violations were also found for the Educational Setting and Resources subscale and the total HELES score. To examine the influence of these violations, we conducted additional follow-up one-way ANOVAs with program as the independent variable and Games-Howell post-hoc tests. The one-way ANOVAs led to the same overall conclusions about statistical and practical significance for the program variable as the two-way ANOVAs, suggesting that the assumption violations did not materially affect the results.
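The text does not name the test used to check homogeneity of variance, so the sketch below assumes Levene's test, which is a one-way ANOVA on each score's absolute deviation from its group mean. The same helper returns the one-way eta-squared used in the follow-up analyses; in a one-way design this equals partial eta-squared, because SS_between + SS_within = SS_total. Only the test statistics are computed: p-values would need an F-distribution tail probability (for example, SciPy's `scipy.stats.f.sf`), and the Games-Howell procedure, which relies on the studentized range distribution, is not reproduced. The group data are invented.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F statistic, eta-squared) for a one-way ANOVA.

    groups: list of lists of scores, one list per program.
    """
    group_means = [mean(g) for g in groups]
    all_scores = [x for g in groups for x in g]
    grand_mean = mean(all_scores)
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)  # SS_between / SS_total
    return f_stat, eta_squared


def levene_w(groups):
    """Levene's W: one-way ANOVA F on absolute deviations from each group's mean.

    (Using group medians instead gives the Brown-Forsythe variant.)
    """
    deviations = [[abs(x - mean(g)) for x in g] for g in groups]
    return one_way_anova(deviations)[0]


# Invented scores for two groups with equal spread but different means.
groups = [[1, 2, 3], [4, 5, 6]]
f_stat, eta_sq = one_way_anova(groups)
print(f_stat, round(eta_sq, 3), levene_w(groups))
```

For these invented groups the sketch prints `13.5 0.771 0.0`: a large difference in means, but a Levene statistic of zero because both groups have identical spread.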

We next interpreted analyses with a significant main effect for program. For both the Work-Life Balance subscale and the total HELES score, MED and OT students had higher mean scores than NURS and PS students, indicating that MED and OT students reported a better balance between their professional and personal lives and more-positive perceptions of the learning environment overall, respectively. There were no statistically significant differences between MED and OT students or between NURS and PS students. Additionally, Work-Life Balance was the only subscale with means below the midpoint of the scale, for NURS and PS students (see Table 2), suggesting that these students may perceive their workload as too great.

For the Peer Relationships subscale, OT students reported more-positive relationships with their peers than MED students, who in turn reported more-positive relationships than PS students. NURS students also reported more-positive peer relationships than PS students. There were no other statistically significant differences for this subscale. Nevertheless, peer relationships were rated quite positively in all programs: the lowest mean across all programs and administration years, for PS students in administration year 2, was 3.99 out of 5.

Finally, for the Educational Setting and Resources subscale, MED students reported higher scores than both OT and PS students, who in turn reported higher scores than NURS students. There were no statistically-significant differences between OT and PS students.

Discussion

While the HELES was initially intended to be a measure of the LE for undergraduate medical students, collaborations with other health professions programs during its development broadened its applicability (Rusticus et al. 2019). In this study, we examined the feasibility of the HELES for assessing student perceptions of the LE across the four health professions programs of medicine, nursing, occupational therapy and pharmaceutical sciences. The HELES was administered in each of these programs for two consecutive years and reliability coefficients for the HELES subscales and total score were calculated for each year to assess the consistency of the tool in each program. Although a small number of reliability coefficients were flagged for being below the 0.70 cut-off (4 of 56 coefficients calculated over the scales, programs, and administration years), none of the scales were flagged in both years. It is likely that differences in the samples between these two years led to these differing coefficients (i.e., sampling error). Overall, these findings suggest that the reliability of the HELES was acceptable across the four programs.

When we examined similarities and differences across programs and administration years, the absence of main effects for administration year, and of program-by-year interactions, for any of the scales indicated that student perceptions of each element of the LE assessed by the HELES were consistent within programs. Across all programs, student ratings of these elements were above the midpoint of the scale (with the exception of work-life balance for NURS and PS students), suggesting that these students tended to perceive their learning environment as positive and supportive for meeting their educational needs.

We also found some differences across programs. In regard to work-life balance, both MED and OT students reported a more-positive balance than NURS and PS students. Furthermore, NURS and PS students reported scores that were below the midpoint of the scale, suggesting that these students may have difficulty managing their stress and workloads. Regarding the NURS program, both the short duration and the accelerated pace of learning in the 20-month program could be contributing to participants’ perceptions of lower work-life balance, a finding consistent with the nursing research literature. Stuenkel et al. (2011), for example, reported that, while workload is a concern for all nursing students, it is heightened by the shorter timeframe in accelerated programs. In addition, Hegge and Larson’s (2008) study revealed that over 60% of students in an accelerated program disclosed stress that was extensive to extreme, exacerbated by the voluminous learning required in a very limited timeframe. Another often-cited contributor to the lower work-life balance of NURS students could be the early inclusion of clinical practice learning, a source of considerable stress for nursing students (Chernomas and Shapiro 2013). Unlike other health professions programs, in which students might not engage in clinical practice for months or even years after program admission, accelerated nursing programs must begin clinical learning within a few weeks.

In the PS program, students begin their first experiential practicum in the summer following completion of their first year, affording a buffer not experienced by nursing students. However, as with nursing, PS student stress levels are receiving growing attention in the pharmacy education literature. Student stress has been increasing for many reasons (e.g., the financial burden of schooling, fear of debt, future job uncertainty and the rigours of the pharmacy curriculum) and has become particularly acute in newly developing programs (Hirsch et al. 2019). The PharmD program, under development at the time of the study, was particularly stressful for both faculty and students. Because the program employed contemporary learning-centered and competency-based education principles, with team-taught integrated modules based on disease states and/or body systems in place of traditional discipline-based courses, its development, implementation and delivery introduced many unfamiliar curriculum design, assessment and team-based teaching practices for faculty (Loewen et al. 2016). While empirical data are lacking, the low work-life balance scores of PS students seem plausible given the excessive workloads, changing curriculum and pedagogical practices, and evolving culture associated with the sharp learning curve for faculty.

While students in all programs reported very positive peer relationships, differences were found among the programs, with OT students reporting the most-positive peer relationship experiences and PS students the least positive. The OT program is one of the smallest in the country, providing an intimate context for students to create strong links with their peers. Students in the OT program are engaged in multiple simultaneous group projects and, given the small cohort, they have the opportunity to work with the same peers many times. In addition, students are engaged in many social activities that further help to build a sense of community. Therefore, it is not surprising that the OT students reported very positive peer relationship experiences. Interestingly, although PS students rated peer relationships lower than students in the other programs did, this was the highest-rated of all six subscales among PS students. This result might suggest the importance of peer relationships for navigating the rigours of the PharmD program and generating a sense of support and collegiality within the large student body.

Differences were also found regarding students’ overall perceptions of the educational setting and availability of resources. MED students rated this element the most positively, while NURS students rated it the least positively, although their ratings were still positive overall. Regarding the MED program, the LE has been identified as a priority area for attention and action by the leadership and the organization in recent years. This has resulted in a number of new initiatives focusing on enhancing the quality of the environment, including a transformed curriculum with innovative learning modalities, new mistreatment-reduction and learner-resilience strategies, additional learner wellness and support resources, a greater focus on diversity, equity and inclusion, and stricter adherence to policies related to the support of students’ learning experiences. As previously mentioned, the context of nursing education in an accelerated program also could account for some of the differences on this scale. In addition, models of clinical education differ across health professions education programs. For example, nursing programs in Canada usually employ a Clinical Instructor (CI) model until the final clinical placement preceptorship. The preceptorship model comprises a one-to-one mentor relationship between an RN and a senior student, whereas in the CI model one educator hired by the academic institution guides a group of six or seven students in clinical practice. Luhanga (2018) describes even higher student/CI ratios and found that, while faculty and CIs believe that this model has many strengths, it is also fraught with challenges that appear to outweigh the strengths. Of particular concern is the high student/teacher ratio, which resulted in “limited time for teaching, supervision and evaluation of students, missed learning opportunities, and difficult balancing [of] individual student learning” (p. 135).

Finally, differences were found for the HELES Total score, with MED and OT students reporting a more-positive learning environment overall compared with NURS and PS students. Some of the challenges mentioned above regarding the NURS and PS programs could explain the overall lower scores for these two programs.

What have we learned from engaging in this pilot study? As the first study of its kind amongst the health professions programs at this university, this collaborative project, building on the work of MED, has provided individual and collective results and insights that probably would not have emerged otherwise, particularly for OT, NURS and PS. In addition to establishing the reliability of the HELES across the four health professions disciplines (with each program now having a tool for assessing its LE), administration of this measure has provided an important mechanism for listening to students about their learning experiences, responding to their learning needs on a continuous basis and forming a greater context-specific understanding of, and evidence for, the impact of our curriculum and pedagogical practices (Regehr 2010). For example, PS not only has evidence of its efforts to address specific accreditation standards related to the learning environment, but can also use this evidence to address student stress and workload in curriculum and teaching practices. Findings from this study have helped highlight stress levels amongst students and opened up proactive strategies for addressing the issue, including strengthening the policies and practices of the Office of Student Services. This study has also highlighted the value of interprofessional collaboration for solving a variety of problems and complex issues (Green and Johnson 2015). This collaboration has allowed us to achieve more together than we would have individually, serve larger groups of students, and grow on individual and organizational levels. Through our discussions about the similarities and differences amongst our programs, we have learned strategies to foster strengths, address weaknesses, and work together for the betterment of all health professions students and programs.

Limitations

There are some limitations of this study that need to be mentioned. First, during the development of the HELES and the collaborations among the four programs, there were challenges in identifying language for some of the items that was appropriate for all programs. To address this concern, we added definitions for the terms ‘clinical staff’ and ‘scholarly interests’ at the beginning of the survey. However, this does raise some concerns regarding the appropriateness of the HELES across various health professions programs. Continued validation work on the ability of the HELES to assess the LE in each of these programs is still needed, especially confirmation of the factor structure of the HELES outside medicine and evidence of how the scales relate to other variables (e.g., convergent/discriminant validity). Second, response rates were low in all programs except OT, which raises some concerns about the generalizability of the results. However, the fact that the results were highly consistent across the two years of the study suggests a stable evaluation of these dimensions of the learning environment. Third, because these results are based on students from a single university, they might not generalize to other universities. Fourth, in this study, we focused on each of the programs as a whole. It is likely that differences in the LE exist across years within each program, as well as across experiential rotations within a year. Finally, because the HELES is a self-report measure, scores reflect student perceptions of the LE, which might not fully reflect actual LE quality. Furthermore, students’ perceptions are limited to the aspects of the LE with which they are familiar; there might be elements of the environment of which they are not fully aware and on which they are unable to comment. This is a limitation that the HELES shares with many other LE measures.

Conclusion

Overall, the results of this study support the use of the HELES to evaluate or study student perceptions of the LE across multiple health professions programs. The tool can identify how programs are similar to and different from one another in their LEs. Such data can be used to examine how positive LEs can be fostered, and can inform accreditation requirements and the continuous quality improvement of programs. Further, this study demonstrates how staff from different programs can collaborate to learn from one another and to enhance LEs across programs.

References

Aktas, Y. Y., & Karabulut, N. (2016). A survey on Turkish nursing students’ perception of clinical learning environment and its association with academic motivation and clinical decision making. Nurse Education Today, 36, 124–128. https://doi.org/10.1016/j.nedt.2015.08.015.

Belayachi, J., & Razine, R. (2015). Moroccan medical students’ perceptions of the educational environment. Journal of Educational Evaluation for Health Professions, 12(47), 1–4. https://doi.org/10.3352/jeehp.2015.12.47.

Bergjan, M., & Hertel, F. (2013). Evaluating students’ perception of their clinical placements—Testing the clinical learning environment and supervision and nurse teacher scale (CLES + T scale) in Germany. Nurse Education Today, 33(11), 1393–1398. https://doi.org/10.1016/j.nedt.2012.11.002.

Birks, M., Budden, L., Biedermann, N., Park, T., & Chapman, Y. (2018). A ‘rite of passage?’ Bullying experiences of nursing students in Australia. Collegian, 25(1), 45–50. https://doi.org/10.1016/j.colegn.2017.03.005.

Branch, W. T., Jr. (2000). The ethics of caring and medical education. Academic Medicine, 75(2), 127–132.

British Columbia College of Nursing Professionals (BCCNP). (2018; previously published by CRNBC, 2016). Guidelines for nursing education programs. Retrieved from https://www.bccnp.ca/becoming_a_nurse/Documents/RN_NP_Guidelines_for_NrsgEdProg_693.pdf.

Budden, L. M., Birks, M., & Bagley, T. (2017). Australian nursing students’ experience of bullying and/or harassment during clinical placement. Collegian, 24(2), 125–133.

Buhari, M. A., Nwannadi, I. A., Oghagbon, E. K., & Bello, J. M. (2014). Students’ perceptions of their learning environment at the College of Medicine, University of Ilorin, Southwest, Nigeria. West African Journal of Medicine, 33(2), 141–145.

Canadian Association of Occupational Therapists (CAOT). (2016). Accreditation. Retrieved from https://www.caot.ca/site/accred/accreditation?nav=sidebar.

Canadian Council for the Accreditation of Pharmacy Programs (CCAPP). (2018). Accreditation standards for Canadian first professional degree in pharmacy programs. Retrieved from http://ccapp-accredit.ca/.

Chernomas, W. M., & Shapiro, C. (2013). Stress, depression, and anxiety among undergraduate nursing students. International Journal of Nursing Education Scholarship, 10(1), 255–266. https://doi.org/10.1515/ijnes-2012-0032.

Clark, C., Kane, D., Rajacich, D., & Lafreniere, K. (2012). Bullying in undergraduate clinical education. Journal of Nursing Education, 51(5), 269–276. https://doi.org/10.3928/01484834-20120409-01.

Cohen, J. (1969). Statistical power analysis for the behavioral sciences. New York: Academic Press.

Colbert-Getz, J. M., Kim, S., Goode, V. H., Shochet, R. B., & Wright, S. M. (2014). Assessing medical students’ and residents’ perceptions of the learning environment: Exploring validity evidence for the interpretation of scores from existing tools. Academic Medicine, 89, 1687–1693. https://doi.org/10.1097/ACM.0000000000000433.

Committee on Accreditation of Canadian Medical Schools (CACMS). (2018). CACMS standards and elements. Retrieved from https://cacms-cafmc.ca/sites/default/files/documents/CACMS_Standards_and_Elements_-_AY_2019–2020.pdf.

Cruess, R. L., Cruess, S. R., Boudreau, D., Snell, L., & Steinert, Y. (2014). Reframing medical education to support professional identity formation. Academic Medicine, 89, 1446–1451. https://doi.org/10.1097/ACM.0000000000000427.

Feo, R., & Kitson, A. (2016). Promoting patient-centered fundamental care in acute healthcare systems. International Journal of Nursing Studies, 57, 1–11. https://doi.org/10.1016/j.ijnurstu.2016.01.006.

Forber, J., DiGiacomo, M., Davidson, P., Carter, B., & Jackson, D. (2015). The context, influences and challenges for undergraduate nurse clinical education: Continuing the dialogue. Nurse Education Today, 35, 1114–1118. https://doi.org/10.1016/j.nedt.2015.07.006.

Furr, R. M., & Bacharach, V. R. (2014). Psychometrics: An introduction (2nd ed.). Thousand Oaks: Sage.

Green, B. N., & Johnson, C. D. (2015). Interprofessional collaboration in research, education, and clinical practice: Working together for a better future. Journal of Chiropractic Education, 29(1), 1–10. https://doi.org/10.7899/JCE-14-36.

Gruppen, L. D., Irby, D. M., Durning, S. J., & Maggio, L. A. (2019). Conceptualizing learning environments in the health professions. Academic Medicine, 94, 969–974. https://doi.org/10.1097/ACM.0000000000002702.

Hegge, M., & Larson, V. (2008). Stressors and coping strategies of students in accelerated baccalaureate nursing programs. Nurse Educator, 33(1), 26–30. https://doi.org/10.1097/01.NNE.0000299492.92624.95.

Hirsch, J. D., Nemlekar, P., Phuong, P., Hollenbach, K. A., Lee, K. C., Adler, D. S., & Morello, C. M. (2019). Patterns of stress, coping and health-related quality of life in doctor of pharmacy students: A five year cohort study. American Journal of Pharmaceutical Education. Advance online publication.

Hutchinson, M., & Jackson, D. (2015). The construction and legitimation of workplace bullying in the public sector: Insight into power dynamics and organisational failures in health and social care. Nursing Inquiry, 22(1), 13–26. https://doi.org/10.1111/nin.12077.

Insel, P. M., & Moos, R. H. (1974). Psychological environments: Expanding the scope of human ecology. American Psychologist, 29, 179–188.

Ironside, P. M., McNelis, A. M., & Ebright, P. (2014). Clinical education in nursing: Rethinking learning in practice settings. Nursing Outlook, 62(3), 185–191. https://doi.org/10.1016/j.outlook.2013.12.004.

Jackson, D., Hutchinson, M., Everett, B., Mannix, J., Peters, K., Weaver, R., & Salamonson, Y. (2011). Struggling for legitimacy: Nursing students’ stories of organizational aggression, resilience and resistance. Nursing Inquiry, 18(2), 102–110. https://doi.org/10.1111/j.1440-1800.2011.00536.x.

Kim, H., Jeong, H., Jeon, P., Kim, S., Park, Y., & Kang, Y. (2016). Perception of traditional Korean medical students on the medical education using the Dundee Ready Educational Environment Measure. Evidence-Based Complementary and Alternative Medicine, 2016, Article 6042967. https://doi.org/10.1155/2016/6042967.

Kirk, R. E. (1996). Practical significance: A concept whose time has come. Educational and Psychological Measurement, 56(5), 746–759.

Knapp, K., Shane, P., Sasaki-Hill, D., Yoshizuka, K., Chan, P., & Vo, T. (2014). Bullying in the clinical training of pharmacy students. American Journal of Pharmaceutical Education, 78(6), Article 117. https://doi.org/10.5688/ajpe786117.

Lemaire, J. B., Shannon, D. W., Goelz, E., & Linzer, M. (2019). Best practices: Part 1: Chaos in medical practice: An important and remediable contributor to physician burnout. SGIM Forum, 42(5), 1–2.

Loewen, P. S., Gerber, P., McCormack, J., & MacDonald, G. (2016). Design and implementation of an integrated medication management curriculum in an entry-to-practice doctor of pharmacy program. Pharmacy Education, 16, 122–130.

Luhanga, F. L. (2018). The traditional-faculty supervised teaching model: Nursing faculty and clinical instructors’ perspectives. Journal of Nursing Education and Practice, 8(6), 124–137. https://doi.org/10.5430/jnep.v8n6p124.

McGregor, S. (2001). Neoliberalism and health care. International Journal of Consumer Studies, 25(2), 82–89.

Miles, S., Swift, L., & Leinster, S. J. (2012). The Dundee Ready Education Environment Measure (DREEM): A review of its adoption and use. Medical Teacher, 34, e620–e634. https://doi.org/10.3109/0142159X.2012.668625.

Moos, R. H. (1973). Conceptualizations of human environments. American Psychologist, 28, 652–665.

Regehr, G. (2010). It’s NOT rocket science: Rethinking our metaphors for research in health professions education. Medical Education, 44(1), 31–39. https://doi.org/10.1111/j.1365-2923.2009.03418.x.

Roff, S., McAleer, S., Harden, R. M., Al-Qahtani, M., Ahmed, A. U., Deza, H., et al. (1997). Development and validation of the Dundee Ready Education Environment Measure (DREEM). Medical Teacher, 19, 295–299. https://doi.org/10.3109/01421599709034208.

Rusticus, S., Wilson, D., Casiro, O., & Lovato, C. (2019). Evaluating the quality of the health professions learning environment: Development and validation of the Health Education Learning Environment Survey (HELES). Evaluation and the Health Professions. Advance online publication. https://doi.org/10.1177/0163278719834339.

Rusticus, S., Worthington, A., Wilson, D., & Joughin, K. (2014). The Medical School Learning Environment Survey: An examination of its factor structure and relationship to student performance and satisfaction. Learning Environments Research, 17, 423–435. https://doi.org/10.1007/s10984-014-9167-9.

Schönrock-Adema, J., Bouwkamp-Timmer, T., van Hell, E. A., & Cohen-Schotanus, J. (2012). Key elements in assessing the educational environment: Where is the theory? Advances in Health Sciences Education, 17(5), 727–742. https://doi.org/10.1007/s10459-011-9346-8.

Seibel, M., & Fehr, C. F. (2018). “They can crush you”: Nursing students’ experiences of bullying and the role of faculty. Journal of Nursing Education and Practice, 8(6), 66–76. https://doi.org/10.5430/jnep.v8n6p66.

Shochet, R. B., Colbert-Getz, J. M., Levine, R. B., & Wright, S. M. (2013). Gauging events that influence students’ perceptions of the medical school learning environment: Findings from one institution. Academic Medicine, 88(2), 246–252. https://doi.org/10.1097/ACM.0b013e31827bfa14.

Sidhu, S., & Park, T. (2018). Nursing curriculum and bullying: An integrative literature review. Nurse Education Today, 65, 169–176. https://doi.org/10.1016/j.nedt.2018.03.005.

Stuenkel, D., Nelson, D., Malloy, S., & Cohen, J. (2011). Challenges, changes, and collaboration: Evaluation of an accelerated BSN program. Nurse Educator, 36(2), 70–75. https://doi.org/10.1097/NNE.0b013e31820c7cf7.

Suchman, A. L., Williamson, P. R., Litzelman, D. K., Frankel, R. M., Mossbarger, D. L., & Inui, T. S. (2004). Toward an informal curriculum that teaches professionalism. Journal of General Internal Medicine, 19, 501–504. https://doi.org/10.1111/j.1525-1497.2004.30157.x.

Tee, S., Üzar Özçetin, Y. S., & Russell-Westhead, M. (2016). Workplace violence experienced by nursing students: A UK survey. Nurse Education Today, 41, 30–35. https://doi.org/10.1016/j.nedt.2016.03.014.

Timm, A. (2014). ‘It would not be tolerated in any other profession except medicine’: Survey reporting on undergraduates’ exposure to bullying and harassment in their first placement year. BMJ Open, 4(7), 1–7. https://doi.org/10.1136/bmjopen-2014-005140.

Veerapen, K., & McAleer, S. (2010). Students’ perception of the learning environment in a distributed medical programme. Medical Education Online. https://doi.org/10.3402/meo.v15i0.5168.

Vogel, L. (2016). Bullying still rife in medical training. Canadian Medical Association Journal, 188(5), 321–322.


Cite this article

Rusticus, S.A., Wilson, D., Jarus, T. et al. Exploring student perceptions of the learning environment in four health professions education programs. Learning Environ Res 25, 59–73 (2022). https://doi.org/10.1007/s10984-021-09349-y


DOI: https://doi.org/10.1007/s10984-021-09349-y