Abstract

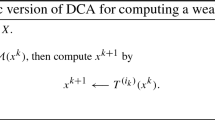

We consider a class of structured nonsmooth difference-of-convex minimization problems whose objective can be written as the difference of two possibly nonsmooth convex functions, the second being the maximum of finitely many smooth convex functions. We propose two proximal difference-of-convex algorithms with extrapolation, intended as a potential acceleration, that converge to a weak/standard d-stationary point of the structured nonsmooth problem, and we prove the linear convergence of these algorithms under a piecewise error bound assumption and a piecewise isocost condition. As a by-product, we refine the linear convergence analysis of sDCA and \(\varepsilon \)-DCA in a recent work of Dong and Tao (J Optim Theory Appl 189: 190–220, 2021) by removing the assumption of local linear regularity regarding the intersection of certain stationary sets and dominance regions. We also discuss sufficient conditions that guarantee these assumptions and show that several sparse learning models satisfy all of them. Finally, we conduct elementary numerical simulations on sparse recovery to verify the theoretical results empirically.
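To make the idea of a proximal DC step with extrapolation concrete, the following is a minimal sketch of the generic pDCA with extrapolation template (in the spirit of Wen, Chen, and Pong, listed in the references). It is not a reproduction of the two algorithms proposed in the article, which the abstract does not specify. The test problem is an assumption chosen for illustration: least squares with an \(\ell_1\) penalty minus the largest-\(k\) norm, where the subtracted term is a maximum of finitely many linear (hence smooth convex) functions. The function names, the fixed extrapolation weight `beta`, and the iteration count are likewise hypothetical.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal map of t * ||.||_1 (componentwise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def top_k_subgradient(x, k):
    """One subgradient of the largest-k norm (sum of the k largest |x_i|)."""
    g = np.zeros_like(x)
    idx = np.argsort(-np.abs(x))[:k]
    g[idx] = np.sign(x[idx])
    return g


def objective(A, b, lam, k, x):
    """0.5 * ||Ax - b||^2 + lam * (||x||_1 - largest-k norm of x)."""
    abs_x = np.abs(x)
    top_k = np.sort(abs_x)[::-1][:k].sum()
    return 0.5 * np.sum((A @ x - b) ** 2) + lam * (abs_x.sum() - top_k)


def pdca_e(A, b, lam, k, beta=0.5, iters=500):
    """Generic proximal DC iteration with a fixed extrapolation weight (assumed)."""
    L = np.linalg.norm(A, 2) ** 2  # Lipschitz constant of the least-squares gradient
    x_prev = np.zeros(A.shape[1])
    x = x_prev.copy()
    for _ in range(iters):
        y = x + beta * (x - x_prev)         # extrapolated point
        xi = lam * top_k_subgradient(x, k)  # subgradient of the subtracted convex part at x
        grad = A.T @ (A @ y - b)            # gradient of the smooth part at y
        # Prox step: argmin_z lam*||z||_1 + <grad - xi, z> + (L/2)*||z - y||^2
        x_prev, x = x, soft_threshold(y - (grad - xi) / L, lam / L)
    return x
```

A call such as `pdca_e(A, b, lam=0.1, k=5)` returns the final iterate, and `objective` can be used to compare it against the starting point. The fixed `beta` is a simplification; practical variants typically use a varying extrapolation weight with restarts.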

References

Ahn, M., Pang, J.S., Xin, J.: Difference-of-convex learning: Directional stationarity, optimality, and sparsity. SIAM J. Optim. 27(3), 1637–1665 (2017)

Bolte, J., Daniilidis, A., Lewis, A.: The Łojasiewicz inequality for nonsmooth subanalytic functions with applications to subgradient dynamical systems. SIAM J. Optim. 17(4), 1205–1223 (2007)

Dong, H.B., Tao, M.: On the linear convergence to weak/standard d-stationary points of DCA-based algorithms for structured nonsmooth DC programming. J. Optim. Theory Appl. 189(1), 190–220 (2021)

Drusvyatskiy, D., Lewis, A.S.: Error bounds, quadratic growth, and linear convergence of proximal methods. Math. Oper. Res. 43(3), 919–948 (2018)

Fan, J.Q., Li, R.Z.: Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 96(456), 1348–1360 (2001)

Gong, P., Zhang, C., Lu, Z., Huang, J.Z., Ye, J.: A general iterative shrinkage and thresholding algorithm for non-convex regularized optimization problems. Proc. Int. Conf. Mach. Learn. 28(2), 37–45 (2013)

Gotoh, J.Y., Takeda, A., Tono, K.: DC formulations and algorithms for sparse optimization problems. Math. Program. Ser. B 169(1), 141–176 (2018)

Harker, P.T., Pang, J.S.: Finite-Dimensional Variational Inequalities and Complementarity Problems, vol. II. Springer, New York (2003)

Li, G.Y., Pong, T.K.: Calculus of the exponent of Kurdyka-Łojasiewicz inequality and its applications to linear convergence of first-order methods. Found. Comput. Math. 18(5), 1199–1232 (2018)

Li, H., Lin, Z.: Accelerated proximal gradient methods for nonconvex programming. Adv. Neural. Inf. Process. Syst. 1, 379–387 (2015)

Liu, T.X., Pong, T.K., Takeda, A.: A refined convergence analysis of pDCA\(_{e}\) with applications to simultaneous sparse recovery and outlier detection. Comput. Optim. Appl. 73(1), 69–100 (2019)

Liu, T., Pong, T.K., Takeda, A.: A successive difference-of-convex approximation method for a class of nonconvex nonsmooth optimization problems. Math. Program. Ser. B 176, 339–367 (2019)

Liu, T., Pong, T.K.: Further properties of the forward-backward envelope with applications to difference-of-convex programming. Comput. Optim. Appl. 67, 489–520 (2017)

Lu, Z.S., Zhou, Z.R., Sun, Z.: Enhanced proximal DC algorithms with extrapolation for a class of structured nonsmooth DC minimization. Math. Program. Ser. B 176(1–2), 369–401 (2018)

Lu, Z.S., Zhou, Z.R., Sun, Z.: Nonmonotone enhanced proximal DC algorithms for a class of structured nonsmooth DC programming. SIAM J. Optim. 29(4), 2725–2752 (2019)

Luo, Z.Q., Tseng, P.: Error bound and convergence analysis of matrix splitting algorithms for the affine variational inequality problem. SIAM J. Optim. 2(1), 43–54 (1992)

Luo, Z.Q., Tseng, P.: On linear convergence of descent methods for convex essentially smooth minimization. SIAM J. Control. Optim. 30(2), 408–425 (1992)

Luo, Z.Q., Tseng, P.: Error bounds and convergence analysis of feasible descent methods: a general approach. Ann. Oper. Res. 46–47(1), 157–178 (1993)

Nakayama, S., Gotoh, J.Y.: On the superiority of PGMs to PDCAs in nonsmooth nonconvex sparse regression. Optim. Lett. 15, 2831–2860 (2021)

Pang, J.S., Razaviyayn, M., Alvarado, A.: Computing B-stationary points of nonsmooth DC programs. Math. Oper. Res. 42(1), 95–118 (2017)

Pham Dinh, T., Le Thi, H.A.: Convex analysis approach to DC programming: theory, algorithms and applications. Acta Math. Vietnam 22(1), 289–355 (1997)

Razaviyayn, M., Hong, M., Luo, Z.Q.: A unified convergence analysis of block successive minimization methods for nonsmooth optimization. SIAM J. Optim. 23(2), 1126–1153 (2013)

Rockafellar, R.T., Wets, R.J.-B.: Variational Analysis. Springer, Berlin (1998)

Le Thi, H.A., Pham Dinh, T.: The DC (difference of convex functions) programming and DCA revisited with DC models of real world nonconvex optimization problems. Ann. Oper. Res. 133(1–4), 23–46 (2005)

Le Thi, H.A., Pham Dinh, T.: DC programming and DCA: thirty years of developments. Math. Program. Ser. B 169(1), 5–68 (2018)

Le Thi, H.A., Huynh, V.N., Pham Dinh, T.: Convergence analysis of DC algorithm for DC programming with subanalytic data. J. Optim. Theory Appl. 179(1), 103–126 (2018)

Tseng, P., Yun, S.: A coordinate gradient descent method for nonsmooth separable minimization. Math. Program. Ser. B 117(1–2), 387–423 (2009)

Wen, B., Chen, X., Pong, T.K.: Linear convergence of proximal gradient algorithm with extrapolation for a class of nonconvex nonsmooth minimization problems. SIAM J. Optim. 27(1), 124–145 (2017)

Wen, B., Chen, X., Pong, T.K.: A proximal difference-of-convex algorithm with extrapolation. Comput. Optim. Appl. 69(2), 297–324 (2018)

Zhang, C.H.: Nearly unbiased variable selection under minimax concave penalty. Ann. Stat. 38(2), 894–942 (2010)

Zhou, Z., So, A.M.C.: A unified approach to error bounds for structured convex optimization problems. Math. Program. Ser. A 165(2), 689–728 (2017)

Additional information

Communicated by Aris Daniilidis.

Min Tao was partially supported by the Chinese National Natural Science Foundation Grant (No. 11971228), the National Key Research and Development Program of China (No. 2018AAA0101100) and JiangSu University QingLan Project.

About this article

Cite this article

Tao, M., Li, JN. Error Bound and Isocost Imply Linear Convergence of DCA-Based Algorithms to D-Stationarity. J Optim Theory Appl 197, 205–232 (2023). https://doi.org/10.1007/s10957-023-02171-x

DOI: https://doi.org/10.1007/s10957-023-02171-x