Abstract

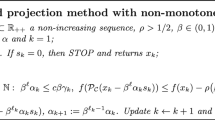

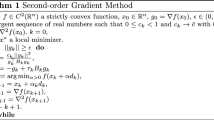

We propose a conjugate subgradient type method without any line search for minimizing convex non-differentiable functions. Unlike the customary methods of this class, it does not require a monotone decrease of the objective function, and it substantially reduces the implementation cost of each iteration. At the same time, its step-size procedure takes into account the behavior of the method along the iteration points. Preliminary results of computational experiments confirm the efficiency of the proposed modification.
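The abstract does not state the iteration itself, so the following is only an illustrative sketch of the general idea and not the algorithm of the article. It assumes a two-point aggregation of directions (the minimum-norm point on the segment between the previous direction and a new subgradient, in the spirit of conjugate subgradient methods) and a predetermined diminishing step `1 / (k + 1)` as a stand-in for the article's step-size rule. The test function and all names (`f`, `subgrad`, `min_norm_on_segment`, `nonmonotone_subgradient`) are hypothetical. Because no line search enforces descent, the objective value may increase between iterations, so the sketch keeps a record of the best point seen.

```python
import math


def f(x):
    """Hypothetical test function f(x) = |x1| + 2|x2| (convex, non-differentiable)."""
    return abs(x[0]) + 2.0 * abs(x[1])


def subgrad(x):
    """Return one subgradient of f at x (0 is chosen for a coordinate at a kink)."""
    def sign(t):
        return float((t > 0) - (t < 0))
    return [sign(x[0]), 2.0 * sign(x[1])]


def norm(v):
    return math.sqrt(sum(vi * vi for vi in v))


def min_norm_on_segment(p, g):
    """Point of smallest Euclidean norm on the segment between p and g."""
    d = [gi - pi for pi, gi in zip(p, g)]
    dd = sum(di * di for di in d)
    if dd == 0.0:
        return list(p)
    # Unconstrained minimizer of ||p + t d||^2, clipped to [0, 1].
    t = -sum(pi * di for pi, di in zip(p, d)) / dd
    t = min(1.0, max(0.0, t))
    return [pi + t * di for pi, di in zip(p, d)]


def nonmonotone_subgradient(x0, iters=200):
    """Subgradient iteration with an aggregated direction and no line search."""
    x = list(x0)
    best_x, best_f = list(x), f(x)
    p = None
    for k in range(iters):
        g = subgrad(x)
        if norm(g) < 1e-12:
            break  # zero subgradient: x is a minimizer
        # Aggregate the previous direction with the new subgradient.
        p = g if p is None else min_norm_on_segment(p, g)
        if norm(p) < 1e-12:
            p = g  # restart when the aggregate degenerates
        step = 1.0 / (k + 1)  # assumed diminishing rule, no line search
        x = [xi - step * pi / norm(p) for xi, pi in zip(x, p)]
        fx = f(x)
        if fx < best_f:  # f(x) need not decrease, so track the record value
            best_x, best_f = list(x), fx
    return best_x, best_f


if __name__ == "__main__":
    x_best, f_best = nonmonotone_subgradient([3.0, -2.0])
    print(x_best, f_best)
```

Tracking the record value is the usual way to report progress for a non-monotone scheme; the choice of step rule and aggregation here is a simplification made for the example.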

Acknowledgements

The results of this work were obtained within the state assignment of the Ministry of Science and Education of Russia, Project No. 1.460.2016/1.4. This work was supported by the Russian Foundation for Basic Research, Project No. 19-01-00431.

Additional information

Amir Beck.

About this article

Cite this article

Konnov, I. A Non-monotone Conjugate Subgradient Type Method for Minimization of Convex Functions. J Optim Theory Appl 184, 534–546 (2020). https://doi.org/10.1007/s10957-019-01589-6

DOI: https://doi.org/10.1007/s10957-019-01589-6

Keywords

- Convex minimization problems

- Non-differentiable functions

- Conjugate subgradient method

- Simple step-size choice

- Convergence properties