Abstract

We consider the storage properties of temporal patterns, i.e. cycles of finite length, in neural networks represented by (generally asymmetric) spin glasses defined on random graphs. Inspired by the observation that dynamics on sparse systems have more basins of attraction than dynamics on densely connected ones, we consider the attractors of a greedy dynamics in sparse topologies, taken as a proxy for the stored memories. We enumerate them using numerical simulations and extend the analysis to large system sizes using belief propagation. We find that the logarithm of the number of such cycles is a non-monotonic function of the mean connectivity, and we discuss the similarities with biological neural networks describing the memory capacity of the hippocampus.

References

Hwang, S., Folli, V., Lanza, E., Parisi, G., Ruocco, G., Zamponi, F.: On the number of limit cycles in asymmetric neural networks. J. Stat. Mech. 2019(5), 053402 (2019)

Hopfield, J.J.: Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. 79(8), 2554–2558 (1982)

McCulloch, W.S., Pitts, W.: A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biophys. 5(4), 115–133 (1943)

Pfeiffer, B.E., Foster, D.J.: Autoassociative dynamics in the generation of sequences of hippocampal place cells. Science 349(6244), 180–183 (2015)

Fuster, J.M., Alexander, G.E., et al.: Neuron activity related to short-term memory. Science 173(3997), 652–654 (1971)

Miyashita, Y.: Neuronal correlate of visual associative long-term memory in the primate temporal cortex. Nature 335(6193), 817–820 (1988)

Heisenberg, W.: Zur Theorie des Ferromagnetismus. Zeitschrift für Phys. 49(9), 619–636 (1928)

Amit, D.J., Gutfreund, H., Sompolinsky, H.: Spin-glass models of neural networks. Phys. Rev. A 32(2), 1007 (1985)

Amit, D.J., Gutfreund, H., Sompolinsky, H.: Storing infinite numbers of patterns in a spin-glass model of neural networks. Phys. Rev. Lett. 55(14), 1530 (1985)

Amit, D.J., Gutfreund, H., Sompolinsky, H.: Statistical mechanics of neural networks near saturation. Ann. Phys. 173(1), 30–67 (1987)

Amit, D.J.: Modeling Brain Function: The World of Attractor Neural Networks. Cambridge University Press, Cambridge (1992)

Tanaka, F., Edwards, S.F.: Analytic theory of the ground state properties of a spin glass. I. Ising spin glass. J. Phys. F 10(12), 2769 (1980)

Crisanti, A., Sompolinsky, H.: Dynamics of spin systems with randomly asymmetric bonds: Ising spins and Glauber dynamics. Phys. Rev. A 37, 4865–4874 (1988)

Bastolla, U., Parisi, G.: Attractors in fully asymmetric neural networks. J. Phys. A 30(16), 5613 (1997)

Gutfreund, H., Reger, J.D., Young, A.P.: The nature of attractors in an asymmetric spin glass with deterministic dynamics. J. Phys. A 21(12), 2775 (1988)

Bastolla, U., Parisi, G.: Relaxation, closing probabilities and transition from oscillatory to chaotic attractors in asymmetric neural networks. J. Phys. A 31(20), 4583 (1998)

Nutzel, K.: The length of attractors in asymmetric random neural networks with deterministic dynamics. J. Phys. A 24(3), L151 (1991)

Toyoizumi, T., Huang, H.: Structure of attractors in randomly connected networks. Phys. Rev. E 91, 032802 (2015)

Huang, H., Kabashima, Y.: Dynamics of asymmetric kinetic Ising systems revisited. J. Stat. Mech. 2014(5), P05020 (2014)

Molgedey, L., Schuchhardt, J., Schuster, H.G.: Suppressing chaos in neural networks by noise. Phys. Rev. Lett. 69, 3717–3719 (1992)

Schuecker, J., Goedeke, S., Helias, M.: Optimal sequence memory in driven random networks. Phys. Rev. X 8(4), 041029 (2018)

Tirozzi, B., Tsodyks, M.: Chaos in highly diluted neural networks. EPL (Europhys. Lett.) 14(8), 727 (1991)

Sompolinsky, H., Crisanti, A., Sommers, H.J.: Chaos in random neural networks. Phys. Rev. Lett. 61, 259–262 (1988)

Sompolinsky, H., Crisanti, A., Sommers, H.J.: Chaos in neural networks: chaotic solutions. preprint (1990)

Crisanti, A., Sompolinsky, H.: Path integral approach to random neural networks. Phys. Rev. E 98(6), 062120 (2018)

Stern, M., Sompolinsky, H., Abbott, L.F.: Dynamics of random neural networks with bistable units. Phys. Rev. E 90, 062710 (2014)

Folli, V., Gosti, G., Leonetti, M., Ruocco, G.: Effect of dilution in asymmetric recurrent neural networks. Neural Netw. 104, 50–59 (2018)

Derrida, B., Gardner, E., Zippelius, A.: An exactly solvable asymmetric neural network model. EPL (Europhys. Lett.) 4(2), 167 (1987)

Gardner, E., Derrida, B., Mottishaw, P.: Zero temperature parallel dynamics for infinite range spin glasses and neural networks. J. Phys. France 48(5), 741–755 (1987)

Baldassi, C., Braunstein, A., Zecchina, R.: Theory and learning protocols for the material tempotron model. J. Stat. Mech. 2013(12), P12013 (2013)

Mézard, M., Parisi, G., Virasoro, M.: Spin Glass Theory and Beyond: An Introduction to the Replica Method and Its Applications, vol. 9. World Scientific Publishing Company, Singapore (1987)

Mézard, M., Parisi, G.: The Bethe lattice spin glass revisited. Eur. Phys. J. B 20(2), 217–233 (2001)

Yedidia, J.S., Freeman, W.T., Weiss, Y.: Characterization of belief propagation and its generalizations. IT-IEEE 51, 2282–2312 (2001)

Yedidia, J.S., Freeman, W.T., Weiss, Y.: Generalized belief propagation. In: Advances in Neural Information Processing Systems, pp. 689–695 (2001)

Lokhov, A.Y., Mézard, M., Zdeborová, L.: Dynamic message-passing equations for models with unidirectional dynamics. Phys. Rev. E 91(1), 012811 (2015)

Rocchi, J., Saad, D., Yeung, C.H.: Slow spin dynamics and self-sustained clusters in sparsely connected systems. Phys. Rev. E 97(6), 062154 (2018)

Rolls, E.T., Webb, T.J.: Cortical attractor network dynamics with diluted connectivity. Brain Res. 1434, 212–225 (2012)

Rolls, E.T.: Advantages of dilution in the connectivity of attractor networks in the brain. Biol. Inspired Cognit. Architect. 1, 44–54 (2012)

Witter, M.P.: Connectivity of the hippocampus. In: Hippocampal Microcircuits, pp. 5–26. Springer (2010)

Acknowledgements

We thank Guilhem Semerjian for useful discussions related to this work and in particular for contributing to the development of the procedure discussed in Appendix A. J.R. and S.H. acknowledge the support of a grant from the Simons Foundation (No. 454941, Silvio Franz).

Additional information

Communicated by Bernard Derrida.

Appendices

Appendix A: BP Equations

A well-known technique for computing the partition function (see Eq. 4) on a sparse graph is the so-called cavity method [31, 32]. Given the problem under consideration, one can construct a factor graph by introducing a factor node \({\hat{i}}\) for each local constraint \( \psi _{i}({\underline{\sigma }}_{i}, {\underline{{\varvec{\sigma }}}}_{ {\partial i} } )\), see Fig. 6b. Even if the underlying graph \({\mathcal {G}}\) is tree-like, as illustrated in Fig. 6a, the resulting factor graph contains many short loops, as can be seen in Fig. 6b. This is a well-known obstacle to a naive implementation of the cavity equations, since the method is guaranteed to work well only on tree-like factor graphs.

Fig. 6 (a) Original graph topology \({\mathcal {G}}\) on which we consider a parallel dynamics. (b) Corresponding factor graph for the cycle-counting problem. For each node i, \({\hat{i}}\) is the factor imposing the cycle condition on node i and its neighbors \(j_1\), \(j_2\) and \(j_3\). Because the neighbor \(j_1\) also has its own factor \({\hat{j}}_1\) that links to i, the factor graph contains many short loops.

Fig. 7 An equivalent super-factor graph representation of the original problem in Fig. 6. To each node i of the original graph \({\mathcal {G}}\) we assign a super-factor \({\hat{i}}\), which enforces the cycle condition that node i has to satisfy. To each link (i, j) we assign a super-variable \(S_{ij} = ({\underline{\sigma }}_{ij}, {\underline{\sigma }}_{ji})\) that contains the spin configurations of both node i and node j, thereby doubling the dimension of the variables. The resulting graph retains the same structure as \({\mathcal {G}}\), and thus allows us to use the cavity method as long as the original graph \({\mathcal {G}}\) is tree-like. The redundancy due to the duplication of spin variables is handled by additional local constraints that force all the variables \({\underline{\sigma }}_{ik}\) sharing the same first index to be equal.

One way to overcome this problem is to consider a particular form of Generalized Belief Propagation (GBP) [33], which was proposed precisely to deal with loops. In the original formulation, the difficulty arising from the presence of loops is alleviated by considering messages between regions of nodes that have a tree-like structure. In the present case, this comes at the price of doubling the state space and considering the super-factor graph shown in Fig. 7.

In this representation we introduce a super-variable for each link (i, j) of \({\mathcal {G}}\) that holds two spin trajectories, \(S_{ij} = ({\underline{\sigma }}_{ij},{\underline{\sigma }}_{ji})\). Here, the variable \( {\underline{\sigma }}_{ij} \) is interpreted as a copy of the spin trajectory of node i, introduced because of the presence of the link (i, j). The redundancy due to doubling the spin dimension is then removed by adding delta-function constraints to each factor node \({\hat{i}}\), ensuring that all spin variables sharing the same first index i are equal, i.e., \({\underline{\sigma }}_{ij} = {\underline{\sigma }}_{i}\) for all \(j \in {\partial i} \). In this way we obtain a tree-like factor graph without losing the locality of the constraints. This trick has recently been used in similar contexts [35, 36].
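The loop structure of the two representations can be checked by counting independent loops. The following is a minimal sketch, assuming a connected graph and using the star graph of Fig. 6 as a toy example; the helper names (`cyclomatic_number`, `naive_factor_graph`, `super_factor_graph`) are hypothetical and are not taken from the paper.

```python
def cyclomatic_number(num_nodes, num_edges):
    """Number of independent loops of a connected graph: E - V + 1."""
    return num_edges - num_nodes + 1


def naive_factor_graph(n, links):
    """Naive factor graph: factor i_hat touches variable i and all its neighbours.

    Returns (number of nodes, number of edges) of the bipartite factor graph.
    """
    fg_edges = {(("f", i), ("v", i)) for i in range(n)}
    for i, j in links:
        fg_edges.add((("f", i), ("v", j)))
        fg_edges.add((("f", j), ("v", i)))
    return 2 * n, len(fg_edges)


def super_factor_graph(n, links):
    """Super-factor graph: one super-variable S_ij per link, touching i_hat and j_hat.

    Returns (number of nodes, number of edges) of the bipartite super-factor graph.
    """
    sf_edges = set()
    for i, j in links:
        s_ij = ("S", min(i, j), max(i, j))
        sf_edges.add((("f", i), s_ij))
        sf_edges.add((("f", j), s_ij))
    return n + len(links), len(sf_edges)


# Star graph: node 0 plays the role of i, nodes 1..3 the neighbours j_1, j_2, j_3.
links = [(0, 1), (0, 2), (0, 3)]
n = 4

loops_original = cyclomatic_number(n, len(links))
loops_naive = cyclomatic_number(*naive_factor_graph(n, links))
loops_super = cyclomatic_number(*super_factor_graph(n, links))
print(loops_original, loops_naive, loops_super)  # prints: 0 3 0
```

For a connected graph with N nodes and M links, the same counting gives 2M - N + 1 independent loops for the naive factor graph and M - N + 1 for the super-factor graph, which equals the loop count of the original graph.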

Having established a locally tree-like factor graph, it is straightforward to write the corresponding BP equations. Following the standard procedure, the message that factor \({\hat{i}}\) sends to node (ij) reads

where the first summation runs over all possible sub-trajectories of the spins in \( {\partial i \setminus j} \). Similarly, the messages from the super-variables to the super-factors are trivial to write, since each super-variable has only two links, namely

By combining Eqs. A.1 and A.2, we can define a single set of messages \(m_{i \rightarrow j} (\sigma _i, \sigma _j) \equiv \eta _{(ij) \rightarrow {\hat{j}}} (S_{ij}) \propto \nu _{{\hat{i}}\rightarrow (ij)} (S_{ij})\) satisfying Eq. 10.

Once the messages have been determined by iterating Eq. 10, or equivalently Eqs. A.1 and A.2, the partition function in Eq. 4 can be viewed as the sum of three different contributions coming from super-nodes, super-factors, and super-links, namely

where the first sum runs over all super-nodes, the second over all super-factors, and the third over all the links between super-nodes and super-factors. For a general factor graph, these factors are given in terms of \(\nu _{{\hat{i}}\rightarrow (ij)}\) and \(\eta _{(ij) \rightarrow {\hat{i}}}\), i.e.,

where \(\psi _{{\hat{i}}}^S ({\varvec{S}}_{\partial {\hat{i}}})\) denotes the constraint on the super graph,

In our setting, these equations are compactly written in terms of the messages in Eq. 10:

and

where we have introduced the notation \(z_i = z_{{\hat{i}}}\) to drop the hat from \({\hat{i}}\), which is possible because factor nodes and original nodes are in one-to-one correspondence \({\hat{i}}\leftrightarrow i\). Additionally, one can immediately establish the relations \( z_{{\hat{i}},(ij)} = z_{{\hat{j}},(ji)} \) and \(z_{(ij)}=z_{{\hat{i}},(ij)}\). Substituting these simplifications into Eq. A.3 yields Eq. 7.

Appendix B: Motifs Preventing Cycles of Lengths \(L <4\)

Fig. 8 Illustration of a graph motif that forbids cycles with \(L<4\). If the coupling matrix satisfies both conditions in Eqs. B.1 and B.2, the states of i and j are completely independent of the rest of the system. At the same time, these states can only form a periodic orbit of length 4, implying that the system only admits cycles whose length is a multiple of 4.

There exist certain motifs that forbid the existence of cycles of length \(L<4\). Let us consider the subgraph of \({\mathcal {G}}\) shown in Fig. 8, where two nodes i and j are connected. Now, let us suppose that the couplings satisfy the following condition

If this condition is met, Eq. 1 implies that the spin \(\sigma _i^t\) is determined solely by the state of spin j at time step \((t-1)\), namely \(\sigma _i^t = \sigma _j^{t-1}\). Additionally, let us impose a similar condition on node j, but with a negative sign:

Thus, it similarly follows that \( \sigma _j^{t} = - \sigma _i^{t-1} \). Such conditions imply that, even though the network is connected, some subsets of the network can evolve independently of the rest. In this particular case, the imposed conditions force the pair \((\sigma _i^t, \sigma _j^t)\) onto a periodic orbit of length 4, so the length of any cycle in this system must be a multiple of 4. Since all the graph ensembles discussed in this paper have a finite probability per node of containing such a motif as long as \(\epsilon >0\) and the connectivity is finite, cycles of length \(L<4\) cannot exist in the thermodynamic limit. Such motifs become more and more likely as \(\epsilon \) increases. Conversely, for small values of \(\epsilon \) the couplings tend to be more symmetric and occurrences of this motif are suppressed. In this case, cycles of length smaller than \(L=4\) are possible at finite sizes, as shown in Fig. 9.
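The period-4 orbit of the pair can be checked numerically. The sketch below is only illustrative and rests on two assumptions that are not spelled out in this appendix: that the parallel dynamics of Eq. 1 has the form \(\sigma _i^{t} = \mathrm{sign}(\sum _k J_{ik}\sigma _k^{t-1})\), and that Eqs. B.1 and B.2 amount to \(J_{ij}>0\) and \(J_{ji}<0\) each dominating the sum of the absolute values of the other couplings in its row. The function names are hypothetical.

```python
import itertools
import numpy as np


def parallel_step(J, s):
    """One synchronous update s_i <- sign(sum_k J_ik s_k); ties go to +1 (assumption)."""
    return np.where(J @ s >= 0, 1, -1)


def pair_period(J, s0, i, j, max_period=8):
    """Smallest p such that (s_i, s_j) returns to its initial value after p steps."""
    s = np.array(s0)
    start = (s[i], s[j])
    for p in range(1, max_period + 1):
        s = parallel_step(J, s)
        if (s[i], s[j]) == start:
            return p
    return None


rng = np.random.default_rng(0)
n, i, j = 6, 0, 1
J = 0.1 * rng.normal(size=(n, n))  # weak random background couplings
np.fill_diagonal(J, 0.0)

# Assumed form of the two conditions: J_ij > 0 and J_ji < 0 dominate their rows,
# so that s_i(t) = s_j(t-1) and s_j(t) = -s_i(t-1) whatever the other spins do.
J[i, j] = 1.0 + np.abs(J[i]).sum()
J[j, i] = -(1.0 + np.abs(J[j]).sum())

# Scan all 2^n initial conditions and collect the return times of the pair.
periods = {pair_period(J, s0, i, j) for s0 in itertools.product((-1, 1), repeat=n)}
print(periods)  # prints: {4}
```

Under these assumptions the pair follows \((a,b)\rightarrow (b,-a)\rightarrow (-a,-b)\rightarrow (-b,a)\rightarrow (a,b)\), so no initial condition can return after 1, 2 or 3 steps.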

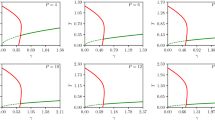

Fig. 9 Complexity of cycles of length \(L=1\) and \(L=2\) for a RR graph with \(c=6\) as a function of \(\epsilon \) for \(N=100\). As mentioned in the text, these cycles disappear as N increases. However, at finite N and small \(\epsilon \), the probability that the motif discussed in the text exists is small, and numerically the motif is not found.

If the two conditions defined in Eqs. B.1 and B.2 hold for every pair of linked nodes, cycles are skew symmetric. A generic 4-cycle

is skew symmetric if

Such symmetry may be conveniently described by the quantity Q:

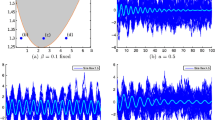

Because \(\sigma _i \in \{-1,1\}\), which means that \(\vert {\varvec{\sigma }}\vert ^2=N\), we have \( -1 \le Q< 1\). If a cycle is skew symmetric (\(\sigma _1=-\sigma _3\) and \(\sigma _2=-\sigma _4\)), then \(Q=-1\). Additionally, if we consider only limit cycles of length 4, Q cannot be equal to 1. At \(\epsilon =2\), the couplings are perfectly antisymmetric and our numerical results confirm that the limit cycles are of length \(L=4\), and that all of them have \(Q=-1\). We then ran simulations at \(\epsilon =1\) and checked the skew symmetry of limit cycles of length 4, to study how the Q values change with decreasing connectivity (from \(N=6\) to \(N=15\) and c ranging from 0.4 to 4). As an example, in Fig. 10 we report two histograms of the obtained Q values for \(N=10\), in the cases \(c=1\) and \(c=4\) (\(\epsilon =1\)). It is evident that the fraction of limit cycles with \(Q=-1\), which dominates for \(c=4\), tends to disappear for \(c=1\). To confirm this observation, Fig. 11 shows the fraction of limit cycles of length 4 for which \(Q = -1\), as a function of c. All curves decrease with decreasing connectivity, consistently with the results of the previous figure. It is worth noticing that, as N grows, two things become more frequent: situations like the one outlined in Fig. 8, which can be found only at sufficiently small values of c, and the presence of at least one pair of nodes with \({{\,\mathrm{sign}\,}}(J_{ij})={{\,\mathrm{sign}\,}}(J_{ji})\) (possible because \(\epsilon =1\)), which is sufficient to break the skew symmetry of the limit cycles of length 4. The second effect dominates over the first at low connectivities, and skew symmetry disappears.
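The statement about perfectly antisymmetric couplings can be reproduced by brute force on a small system. The following is a rough sketch, not the simulation code used for the figures. It assumes sign-type parallel dynamics, a dense Gaussian antisymmetric coupling matrix, and, since the formula for Q is not reproduced here, the hypothetical definition \(Q = ({\varvec{\sigma }}_1\cdot {\varvec{\sigma }}_3 + {\varvec{\sigma }}_2\cdot {\varvec{\sigma }}_4)/(2N)\), which is consistent with the properties of Q listed above.

```python
import itertools
import numpy as np


def parallel_step(J, s):
    """Synchronous update s_i <- sign(sum_k J_ik s_k); ties go to +1 (assumption)."""
    h = J @ np.array(s)
    return tuple(int(x) for x in np.where(h >= 0, 1, -1))


def limit_cycles(J):
    """Enumerate all limit cycles by following every one of the 2^N initial states."""
    n = J.shape[0]
    cycles = set()
    for s0 in itertools.product((-1, 1), repeat=n):
        seen, s, t = {}, s0, 0
        while s not in seen:  # iterate until a state repeats
            seen[s] = t
            s = parallel_step(J, s)
            t += 1
        ordered = sorted(seen, key=seen.get)
        cyc = ordered[seen[s]:]  # states visited from the first repeated one onwards
        m = cyc.index(min(cyc))  # rotate to a canonical starting state
        cycles.add(tuple(cyc[m:] + cyc[:m]))
    return cycles


def overlap_q(cycle):
    """Assumed definition: Q = (s1.s3 + s2.s4) / (2N) for a cycle of length 4."""
    s1, s2, s3, s4 = (np.array(s) for s in cycle)
    return float(s1 @ s3 + s2 @ s4) / (2 * len(s1))


rng = np.random.default_rng(0)
n = 8
A = rng.normal(size=(n, n))
J = A - A.T  # perfectly antisymmetric couplings, J_ij = -J_ji

cycles = limit_cycles(J)
lengths = {len(c) for c in cycles}
q_values = {overlap_q(c) for c in cycles if len(c) == 4}
print(lengths, q_values)
```

With antisymmetric couplings and non-vanishing local fields, every cycle found this way has length 4 and \(Q=-1\). Replacing `J` by a diluted matrix with partially asymmetric couplings is the natural way to explore the regime discussed in the text, but the ensemble parameters c and \(\epsilon \) are not implemented in this sketch.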

Appendix C: Additivity of Complexity in the Presence of Disconnected Clusters

In this appendix, we construct a simple relation for \(Z_L\) for the case of graphs with a finite number of connected clusters. Since each connected cluster is independent of the other ones, we have the following additivity property:

where \(N_C\) is the number of connected clusters and P(C) is the cluster size distribution. From the normalization condition, we have the following relation

If the clusters possess the same statistical properties and their sizes are sufficiently large, it is reasonable to assume that \(\ln Z_C\) follows the same asymptotic expansion as \(\ln Z_L\), i.e., \( \ln Z_C \sim C \Sigma _{L} + B_L + O(C^{-1})\). Under these conditions, the overall partition function reads

where we have used the normalization condition. Comparing this expression with Eq. (11), we find a mismatch at constant order, which implies \(B_L =0\). This relation may fail if \(N_C\) is extensive: in this case all the higher-order corrections in Eq. C.3 become of the same order as \(\Sigma _L\). Nevertheless, we found numerically that \(B_L\) is distributed close to our prediction \(B_L =0\).
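The independence of disconnected clusters, on which the additivity property rests, can be illustrated on a toy system. The sketch below assumes that \(Z_L\) counts the configurations that return to themselves after L parallel updates (the precise definition is given by Eq. 4 in the main text) and uses two small, arbitrarily chosen Gaussian clusters; `count_returning_states` is a hypothetical helper.

```python
import itertools
import numpy as np


def count_returning_states(J, L):
    """Count configurations s with F^L(s) = s under the update s <- sign(J s)."""
    n = J.shape[0]
    count = 0
    for s0 in itertools.product((-1, 1), repeat=n):
        s = np.array(s0)
        for _ in range(L):
            s = np.where(J @ s >= 0, 1, -1)  # ties go to +1 (assumption)
        count += int(np.array_equal(s, s0))
    return count


rng = np.random.default_rng(1)
J_a = rng.normal(size=(4, 4))  # couplings inside cluster A
J_b = rng.normal(size=(5, 5))  # couplings inside cluster B

# Block-diagonal coupling matrix: the two clusters do not interact.
J = np.zeros((9, 9))
J[:4, :4] = J_a
J[4:, 4:] = J_b

L = 4
z_a = count_returning_states(J_a, L)
z_b = count_returning_states(J_b, L)
z = count_returning_states(J, L)
print(z, z_a * z_b)  # the two numbers coincide
```

Since the count factorizes over clusters, its logarithm is a sum over clusters whenever every factor is non-zero, which is the form of additivity used in this appendix.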

Cite this article

Hwang, S., Lanza, E., Parisi, G. et al. On the Number of Limit Cycles in Diluted Neural Networks. J Stat Phys 181, 2304–2321 (2020). https://doi.org/10.1007/s10955-020-02664-3

DOI: https://doi.org/10.1007/s10955-020-02664-3