Abstract

We analyze the effect of additive fractional noise with Hurst parameter \(H > {1}/{2}\) on fast-slow systems. Our strategy is based on sample paths estimates, similar to the approach by Berglund and Gentz in the Brownian motion case. Yet, the setting of fractional Brownian motion does not allow us to use the martingale methods from fast-slow systems with Brownian motion. We thoroughly investigate the case where the deterministic system permits a uniformly hyperbolic stable slow manifold. In this setting, we provide a neighborhood, tailored to the fast-slow structure of the system, that contains the process with high probability. We prove this assertion by providing exponential error estimates on the probability that the system leaves this neighborhood. We also illustrate our results in an example arising in climate modeling, where time-correlated noise processes have become of greater relevance recently.


1 Introduction

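Fast-slow systems naturally arise in the modeling of several phenomena in natural sciences, when processes have widely differing rates [25, 27, 33]. The standard form of a fast-slow system of ordinary differential equations (ODEs) is given by

$$\begin{aligned} \frac{\mathrm {d}x}{\mathrm {d}s}&= f(x,y,\varepsilon ),\\ \frac{\mathrm {d}y}{\mathrm {d}s}&= \varepsilon \, g(x,y,\varepsilon ), \end{aligned}$$(1)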

where x are the fast variables, y are the slow variables, \(\varepsilon >0\) is a small parameter, and f, g are sufficiently smooth vector fields; for a more detailed technical introduction regarding the analysis of (1) we refer to Sect. 2.1. Here we just point out the basic aspects from the modeling perspective. First, note that if \(\varepsilon =0\), then (1) becomes a parametrized set of ODEs, where the y-variables are parameters. Taking this viewpoint, all bifurcation problems [23, 35] involving parameters naturally relate to fast-slow dynamics if the parameters vary slowly, which is often a natural assumption in applications. Second, in practice, we also want to couple many dynamical systems. The resulting large/complex system is often multiscale in time and space. For example, in the context of climate modeling [13, 28], coupled processes can evolve on temporal scales ranging from seconds to millennia. Third, fast-slow systems are the core class of dynamical problems for understanding singular perturbations [54]; roughly speaking, singular perturbation problems are problems with small parameters that degenerate, in the limit of the small parameter, into a different class of equations. Combining all these observations, it is not surprising that fast-slow systems have become an important tool in more theoretical as well as application-oriented parts of nonlinear dynamics [33].

However, when dealing with real-life phenomena, certain random influences have to be taken into account and quantified in a suitable way [18]. The most common stochastic process used to describe uncertainty is Brownian motion \(W=W_t\). One of its key features is the memory-less or Markov property, which means that the behavior of this process after a certain time \(T>0\) only depends on the situation at the current time T. In certain applications it may be desirable to model long-range dependencies and to take into account the evolution of the process up to time T. One of the most famous examples is fractional Brownian motion (fBm) \(W^H=W^H_t\); see [30] for its first use. An fBm is a centered Gaussian process with stationary increments, parameterized by the so-called Hurst index/parameter \(H\in (0,1)\). For \(H=1/2\) one recovers classical Brownian motion. However, for \(H\in (1/2,1)\) and \(H\in (0,1/2)\), fBm exhibits a totally different behavior compared to Brownian motion. Its increments are no longer independent, but positively correlated for \(H>1/2\) and negatively correlated for \(H<1/2\). The Hurst index does not only influence the structure of the covariance but also the regularity of the trajectories. Fractional Brownian motion has been used to model a wide range of phenomena such as network traffic [52], stock prices and financial markets [37, 50], activity of neurons [17, 45], dynamics of nerve growth [41], fluid dynamics [55], as well as various phenomena in geoscience [31, 38, 44]. However, the mathematical analysis of stochastic systems involving fBm is a very challenging task. Several well-known results for classical Brownian motion are not available. For instance, the distribution of the hitting time \(\tau _{a}\) of a level a is explicitly known for a Brownian motion, whereas for fBm, one has only an asymptotic statement, according to which

as t goes to infinity, see [39]. Furthermore, since fBm is not a semi-martingale, Itô calculus breaks down. Therefore, it is highly non-trivial to define an appropriate integral with respect to fBm. This issue has been intensively investigated in the literature. There are numerous approaches that exploit the regularity of the trajectories of fBm in order to develop a completely path-wise integration theory and to analyze differential equations. For more details, see [19, 21, 22, 26, 36] and the references specified therein. Another approach to defining stochastic integrals with respect to fBm relies on the stochastic calculus of variations (Malliavin calculus) developed in [11]. In summary, fBm is a natural candidate process for improving our understanding of correlated stochastic dynamics.

Our objective here is to combine the study of fast-slow systems and fBm by starting to study stochastic differential equations of the form

where we start with the case of additive noise for the fast variable(s) and assume there is a single regularly slowly-drifting variable y. For \(H=1/2\), i.e., for Brownian motion, there is a very detailed theory for analyzing stochastic fast-slow systems [33]. One particular building block, initially developed by Berglund and Gentz, uses a sample paths viewpoint [4]. This approach has recently been extended to broader classes of spatial stochastic fast-slow systems [20] and it has found many successful applications; see e.g. [3, 32, 47, 51]. Therefore, it is natural to also consider the case of correlated noise in the fast-slow setup [24, 57].

Our key goal is to derive sample paths estimates for fast-slow systems driven by fBm with Hurst index \(H\in (1/2,1)\). We restrict ourselves to the case of additive noise and establish the theory for the normally hyperbolic stable case. Due to the technical challenges mentioned above, we need to derive sharp estimates for the exit times for processes solving certain equations driven by fBm. Exploring various properties of general Gaussian processes, we propose two variants to obtain optimal sample paths estimates.

Then we are going to apply our theory to a stochastic climate model [16, 42] describing the North-Atlantic thermohaline circulation forced by fractional Brownian motion. In fact, it is well-established that just using white noise modelling in climate models can be insufficient. The simple reason is that neglecting spatial and temporal correlations does not represent the statistics of large classes of underlying climate measurement data including temperature time series [14, 29], historical climate data [1, 2, 10], as well as large-scale simulation data [6]. In all these cases, an elegant way to model temporal correlations in climate science is fractional Brownian motion [1, 10, 29, 49, 58]. The reasoning to use a time correlated process can also be understood in climate dynamics in various intuitive ways. For example, in a larger-scale climate model, stochastic terms often represent unresolved degrees of freedom or small-scale fluctuations. If we consider the weather as a short-lived smaller scale effect in terms of the global long-term climate, then models for the latter must include noise with (positive) time correlations as weather patterns are positively correlated in time on short scales [8, 56]. Similarly, if the noise terms represent external forcing, such as input from another climate subsystem on a macro-scale, then this input is also likely to be correlated in time as there are internal correlations of the long-term behaviour of each larger-scale climate subsystem. In summary, this has motivated us to consider a model from climate dynamics as one possible key application for fast-slow dynamical systems with fractional Brownian motion. As mentioned above, fractional Brownian motion also appears naturally in many other applications, so our modelling approach via fast-slow systems with fBm is even more broadly applicable.

This work is structured as follows. In Sect. 2 we introduce basic notions from the theory of fast-slow systems and fractional Brownian motion. Furthermore, we state important estimates for the exit times of Gaussian processes which will be required later on. In Sect. 3, we generalize the theory of [4] by first deriving an attracting invariant manifold of the variance using the fast-slow structure of the system. Based on this manifold we define a region where the linearization of the process is contained with high probability. In order to prove such statements, we first derive a suitable nonlocal Lyapunov-type equation for the covariance of the solution of a linear equation driven by fBm, the so-called fractional Ornstein–Uhlenbeck process. Thereafter we analyze two variants which entail sharp estimates for the exit times of this process. Furthermore, we provide extensions of our results to the non-linear case and to more complicated slow dynamics, and finally discuss the case of fully coupled dynamics. We apply our theory to a model for the North-Atlantic thermohaline circulation and provide some simulations. Sect. 4 generalizes the sample paths estimates to higher dimensions in the autonomous linear case. Our strategy is based on diagonalization techniques, which allow us to go back to the one-dimensional case and apply the results developed in Sect. 3. For completeness, we provide an appendix which contains a detailed proof regarding the limit superior of a non-autonomous fractional Ornstein–Uhlenbeck process. We conclude in Sect. 5 with an outlook on possible continuations of our results.

2 Background

2.1 Deterministic Fast-Slow Systems

In this section, we will briefly introduce the terminology of fast-slow systems. We restrict ourselves to the most important results tailored to our problem in the upcoming sections. For further details, see [33]. For the definition of the setting, all of the equations are to be understood formally. We will later add regularity assumptions sufficient to deduce important results. These also imply that the formal computations performed beforehand are valid.

Definition 2.1

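A fast-slow system is an ODE of the form

$$\begin{aligned} \frac{\mathrm {d}x}{\mathrm {d}s}&= f(x,y,\varepsilon ),\\ \frac{\mathrm {d}y}{\mathrm {d}s}&= \varepsilon \, g(x,y,\varepsilon ), \end{aligned}$$(3)

where \(x=x_s\), \(y=y_s\) are the unknown functions of the fast time variable s, the vector fields are \(f: {\mathbb {R}}^m \times {\mathbb {R}}^n \times {\mathbb {R}}\rightarrow {\mathbb {R}}^m, g: {\mathbb {R}}^m \times {\mathbb {R}}^n \times {\mathbb {R}}\rightarrow {\mathbb {R}}^n\), and \(\varepsilon > 0\) is a small parameter. The x variables are called the fast variables, while the y variables are called the slow variables. Transforming into another time scale by defining the slow time \(t = \varepsilon s\) yields the equivalent system

$$\begin{aligned} \varepsilon \frac{\mathrm {d}x}{\mathrm {d}t}&= f(x,y,\varepsilon ),\\ \frac{\mathrm {d}y}{\mathrm {d}t}&= g(x,y,\varepsilon ). \end{aligned}$$(4)

Depending on the situation, both formulations in fast and slow time may be of use. In particular, under certain assumptions, considering them for \(\varepsilon \rightarrow 0\) reveals a lot of information about the underlying dynamics for the case \( 0<\varepsilon \ll 1\). Passing to \(\varepsilon \rightarrow 0\) is called the singular limit. The singular limit of (3) for \(\varepsilon \rightarrow 0\)

$$\begin{aligned} \frac{\mathrm {d}x}{\mathrm {d}s}&= f(x,y,0),\\ \frac{\mathrm {d}y}{\mathrm {d}s}&= 0, \end{aligned}$$

is called the fast subsystem. The resulting system of the slow time formulation of the fast-slow system (4) for \(\varepsilon \rightarrow 0\)

$$\begin{aligned} 0&= f(x,y,0),\\ \frac{\mathrm {d}y}{\mathrm {d}t}&= g(x,y,0), \end{aligned}$$

is called the slow subsystem. The set

$$\begin{aligned} C_0 := \left\{ (x,y) \in {\mathbb {R}}^m \times {\mathbb {R}}^n : f(x,y,0) = 0 \right\} \end{aligned}$$

is called the critical set. If \(C_0\) is a manifold, it is also called the critical manifold. From now on, we assume that \(C_0\) is a manifold given by a graph of the slow variables, i.e.,

$$\begin{aligned} C_0 = \left\{ (x,y) \in {\mathbb {R}}^m \times {\mathbb {R}}^n : x = x^*(y),~ y \in {\mathcal {D}} \right\} , \end{aligned}$$

where \({\mathcal {D}} \subset {\mathbb {R}}^n\) is an open subset.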

Theorem 2.2

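(Fenichel–Tikhonov, [15, 27, 33, 53]) Let \(f,g \in {\mathcal {C}}^r({\mathbb {R}}^m \times {\mathbb {R}}^n \times {\mathbb {R}})\), \(1 \le r < \infty \), and let their derivatives up to order r be uniformly bounded. Assume that \(C_0\) is uniformly hyperbolic. Then, for some \(\varepsilon _0> 0\), there exists a locally invariant \({\mathcal {C}}^r\)-smooth manifold

$$\begin{aligned} C_{\varepsilon } = \left\{ (x,y) \in {\mathbb {R}}^m \times {\mathbb {R}}^n : x = {\bar{x}}(y,\varepsilon ),~ y \in {\mathcal {D}} \right\} \end{aligned}$$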

for all \(\varepsilon \in (0,\varepsilon _0]\), where \({\bar{x}}(y,\varepsilon ) = x^*(y) + {\mathcal {O}}(\varepsilon )\) with respect to the fast variables. Furthermore, the local stability properties of \(C_{\varepsilon }\) are the same as the ones for \(C_{0}\).
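The \({\mathcal {O}}(\varepsilon )\)-closeness of the slow manifold to the critical manifold can be observed numerically. The following minimal sketch uses a toy example that is not taken from this work: the scalar slow-time system \(\varepsilon \dot{x} = -(x - \sin t)\) with slow variable \(y = t\), so that \(x^*(t) = \sin t\) and \(\partial _x f = -1\). The function name `integrate_fast_variable` and the step-size choice are illustrative assumptions.

```python
import math

def integrate_fast_variable(eps, t_end, x0=0.0, steps_per_eps=20):
    """Classical RK4 for the toy system eps * dx/dt = -(x - sin(t)).

    The critical manifold of this toy example is x*(t) = sin(t); the step
    size is chosen as a fraction of eps so that the explicit scheme is stable.
    """
    def rhs(t, x):
        return -(x - math.sin(t)) / eps

    n = max(1, int(round(t_end * steps_per_eps / eps)))
    dt = t_end / n
    x = x0
    for i in range(n):
        t = i * dt
        k1 = rhs(t, x)
        k2 = rhs(t + 0.5 * dt, x + 0.5 * dt * k1)
        k3 = rhs(t + 0.5 * dt, x + 0.5 * dt * k2)
        k4 = rhs(t + dt, x + dt * k3)
        x += dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return x

if __name__ == "__main__":
    # The ratio |x(t) - x*(t)| / eps should stay bounded as eps decreases.
    for eps in (0.04, 0.02, 0.01):
        x_end = integrate_fast_variable(eps, 5.0)
        print(eps, abs(x_end - math.sin(5.0)) / eps)
```

For this linear toy problem the attracting solution is \((\sin t - \varepsilon \cos t)/(1+\varepsilon ^2)\), so the printed ratios are expected to remain bounded, in line with \({\bar{x}} = x^* + {\mathcal {O}}(\varepsilon )\).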

2.2 Fractional Brownian Motion

In this section we state important properties of fBm, which will be required later on. For further details see [5, 40] and the references specified therein. We fix a complete probability space \((\Omega , {\mathcal {F}}, {\mathbb {P}})\) and use the abbreviation a.s. for almost surely.

Definition 2.3

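Let \(H \in (0,1]\). A one-dimensional fractional Brownian motion (fBm) of Hurst index/parameter H is a continuous centered Gaussian process \((W_t^H)_{t \ge 0}\) with covariance

$$\begin{aligned} {\mathbb {E}}\left[ W_t^H W_s^H\right] = \frac{1}{2}\left( t^{2H} + s^{2H} - |t-s|^{2H}\right) , \quad s,t \ge 0. \end{aligned}$$

Note that for \(H>1/2\) the covariance of fBm satisfies

$$\begin{aligned} {\mathbb {E}}\left[ W_t^H W_s^H\right] = H(2H-1) \int _0^t \int _0^s |u-v|^{2H-2} \, \mathrm {d}v \, \mathrm {d}u. \end{aligned}$$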

We further observe that:

-

(1)

for \(H=1/2\) one obtains Brownian motion;

-

(2)

for \(H=1\) we have \(W^{H}_{t}= t W^{H}_{1}\) a.s. for all \(t\ge 0\). For this reason one always considers \(H\in (0,1)\).
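Since fBm is a centered Gaussian process, its law on a finite time grid is determined by the covariance function alone. The following minimal sketch samples fBm on a grid by a Cholesky factorization of the covariance matrix; this is one standard exact method, not a procedure described in this work. It assumes the usual covariance \(\frac{1}{2}(t^{2H} + s^{2H} - |t-s|^{2H})\), strictly positive and distinct grid points, and \(H \in (0,1)\); the function names are illustrative.

```python
import math
import random

def fbm_cov(s, t, H):
    """Covariance of fBm: 0.5 * (s^(2H) + t^(2H) - |t - s|^(2H))."""
    return 0.5 * (s ** (2 * H) + t ** (2 * H) - abs(t - s) ** (2 * H))

def cholesky(C):
    """Lower-triangular L with L * L^T = C for a positive definite matrix C."""
    n = len(C)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            acc = C[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(acc) if i == j else acc / L[j][j]
    return L

def sample_fbm(times, H, rng):
    """One sample of (W_t)_{t in times}; times must be positive and distinct."""
    C = [[fbm_cov(s, t, H) for t in times] for s in times]
    L = cholesky(C)
    z = [rng.gauss(0.0, 1.0) for _ in times]
    return [sum(L[i][k] * z[k] for k in range(i + 1)) for i in range(len(times))]

if __name__ == "__main__":
    grid = [0.05 * k for k in range(1, 21)]
    path = sample_fbm(grid, 0.75, random.Random(0))
    print(path[-1])
```

The two special cases above are visible directly in `fbm_cov`: for \(H = 1/2\) it reduces to \(\min \{s,t\}\), and for \(H = 1\) it equals \(st\), the covariance of \(tW_1\). The cost of the factorization grows cubically with the number of grid points, so this sketch is only meant for short grids.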

The following result regarding the structure of the covariance of fBm holds true, see [40, Section 2.3].

Proposition 2.4

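Let \(H>1/2\). Then, the covariance of fBm has the integral representation

$$\begin{aligned} {\mathbb {E}}\left[ W_t^H W_s^H\right] = \int _0^{\min \{s,t\}} K(s,r) K(t,r) \, \mathrm {d}r, \end{aligned}$$

where the integral kernel K is given by

$$\begin{aligned} K(t,r) = c_H \, r^{1/2-H} \int _r^t (u-r)^{H-3/2} u^{H-1/2} \, \mathrm {d}u, \quad t > r, \end{aligned}$$

for a positive constant \(c_{H}\) depending exclusively on the Hurst parameter.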

We remark that for suitable square integrable kernels, one obtains different stochastic processes, for instance the multi-fractional Brownian motion or the Rosenblatt process, see [9]. We now focus on the most important properties of fBm. For the complete proofs of the following statements, see [40, Chapter 2].

Proposition 2.5

(Correlation of the increments) Let \((W^{H}_{t})_{t\ge 0}\) be a fBm of Hurst index \(H\in (0,1)\). Then its increments are:

-

(1)

positively correlated for \(H>1/2\);

-

(2)

independent for \(H=1/2\);

-

(3)

negatively correlated for \(H<1/2\).

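In particular, for \(H>1/2\) fBm exhibits long-range dependence, i.e.

$$\begin{aligned} \sum _{n=1}^{\infty } {\mathbb {E}}\left[ W_1^H \left( W_{n+1}^H - W_n^H\right) \right] = \infty , \end{aligned}$$

whereas for \(H<1/2\)

$$\begin{aligned} \sum _{n=1}^{\infty } \left| {\mathbb {E}}\left[ W_1^H \left( W_{n+1}^H - W_n^H\right) \right] \right| < \infty . \end{aligned}$$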

Proposition 2.6

Let \((W^{H}_{t})_{t\ge 0}\) be a fBm of Hurst index \(H\in (0,1)\). Then:

-

(1)

[Self-similarity] For \(a\ge 0\)

$$\begin{aligned} (a^HW_t^H)_{t \ge 0} \overset{law}{=} (W_{at}^H)_{t \ge 0}, \end{aligned}$$(6)

i.e., fBm is self-similar with Hurst index H.

-

(2)

[Time inversion] \(\Big (t^{2H}W^{H}_{1/t} \Big )_{t>0} \overset{law}{=}(W^{H}_t)_{t>0}. \)

-

(3)

[Stationarity of increments] For all \(h>0\)

$$\begin{aligned} (W_{t+h}^H - W^{H}_{h})_{t \ge 0} \overset{law}{=} (W_{t}^H)_{t \ge 0}. \end{aligned}$$

-

(4)

[Regularity of the increments] fBm has a version which is a.s. Hölder continuous of any exponent \(\alpha <H\).
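The sign of the increment correlations in Proposition 2.5 and the scaling in Proposition 2.6 (1) can be read off from the covariance function. The sketch below is illustrative only; it assumes the standard fBm covariance \(\frac{1}{2}(t^{2H} + s^{2H} - |t-s|^{2H})\), and the helper names are hypothetical. It evaluates the covariance between the first unit increment and the n-th one, and the partial sums of these covariances.

```python
def fbm_cov(s, t, H):
    """Covariance of fBm: 0.5 * (s^(2H) + t^(2H) - |t - s|^(2H))."""
    return 0.5 * (s ** (2 * H) + t ** (2 * H) - abs(t - s) ** (2 * H))

def increment_cov(n, H):
    """Cov(W_1 - W_0, W_{n+1} - W_n) for n >= 1, using W_0 = 0."""
    return fbm_cov(1, n + 1, H) - fbm_cov(1, n, H)

def partial_sum(N, H):
    """Sum of increment_cov(n, H) over n = 1, ..., N."""
    return sum(increment_cov(n, H) for n in range(1, N + 1))

if __name__ == "__main__":
    for H in (0.25, 0.5, 0.75):
        # Sign of the correlation and behaviour of the partial sums.
        print(H, increment_cov(3, H), partial_sum(100, H), partial_sum(10000, H))
    # Self-similarity on the level of covariances:
    # Cov(W_{as}, W_{at}) = a^(2H) * Cov(W_s, W_t).
    a, H = 2.0, 0.7
    print(fbm_cov(a * 1.0, a * 3.0, H), a ** (2 * H) * fbm_cov(1.0, 3.0, H))
```

The partial sums telescope to \(\frac{1}{2}\left( (N+1)^{2H} - N^{2H} - 1\right) \), which grows without bound for \(H > 1/2\) and stays bounded for \(H < 1/2\).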

We conclude this section emphasizing the following result, which makes fBm very interesting from the point of view of applications, see [40, Section 2.4 and 2.5].

Proposition 2.7

Let \((W^{H}_{t})_{t\ge 0}\) be a fractional Brownian motion with Hurst index \(H\in (0,1/2)\cup (1/2,1)\). Then \((W^{H}_{t})_{t\ge 0}\) is neither a semi-martingale nor a Markov process.

2.2.1 Integration Theory for \(H > {1}/{2}\)

Since fBm is not a semi-martingale, the standard Itô calculus is not applicable. For this reason, the construction of a stochastic integral of a random function with respect to fBm has been a challenging question, see [5, 11] and the references specified therein. However, for deterministic integrands and for \(H>1/2\) the theory simplifies considerably. We deal exclusively with this case and, for the sake of completeness, outline the theory of Wiener integrals of deterministic functions with respect to fBm, see [11]. Let \(T>0\) and

be the set of step functions on [0, T]. For \(h \in {\mathcal {E}}\) define the linear mapping \(I(h;T): {\mathcal {E}} \rightarrow L^2(\Omega )\)

Observe that I(h; T) defines a Gaussian random variable with

where

The representation of the variance can be easily verified by noting the following identity

Note that \(H>1/2\) is crucial here. For \(p > {1}/{H}\) we can bound the \(L^2(\Omega )\)-norm of \(h \mapsto I(h;T)\) as follows

where we have obtained the estimate by applying Hölder’s inequality and Young’s inequality for convolutions [7, Theorem 3.9.4]. The boundedness claim now follows as \(\Vert \phi \Vert ^2_{L^{p/(2p-2)}(0,T)} < \infty \) for \(p > \frac{1}{H}\). This means that \(I(\cdot ,T)\) is a bounded linear operator defined on the dense subspace \({\mathcal {E}} \subset L^p(0,T)\), so it can be uniquely extended to a bounded operator

This discussion justifies the following definition:

Definition 2.8

For \(f \in L^p(0,T)\) and \(t \in [0,T]\) we set

The integral process \(\left( I_p(f\mathbb {1}_{[0,t]};T) \right) _{t \in [0,T]}\) is by construction centered Gaussian. Regarding (7), its covariance can be immediately computed as follows.

Proposition 2.9

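(Covariance of the integral) Let \(a,b>0\) and \(f, g \in L^{p}(0,T)\) for \(p>1/H\). Then

$$\begin{aligned} {\mathbb {E}}\left[ I_p(f\mathbb {1}_{[0,a]};T) \, I_p(g\mathbb {1}_{[0,b]};T)\right] = H(2H-1) \int _0^a \int _0^b f(u) g(v) |u-v|^{2H-2} \, \mathrm {d}v \, \mathrm {d}u. \end{aligned}$$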
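For step functions, the covariance of two Wiener integrals can be computed by hand from the covariance of fBm increments, which gives a simple way to sanity-check such formulas. The sketch below is illustrative and assumes the standard definition of the integral of a step function as the sum of its values times the corresponding fBm increments; the function names and the common-grid convention are assumptions made here.

```python
def fbm_cov(s, t, H):
    """Covariance of fBm: 0.5 * (s^(2H) + t^(2H) - |t - s|^(2H))."""
    return 0.5 * (s ** (2 * H) + t ** (2 * H) - abs(t - s) ** (2 * H))

def increment_cov(a, b, c, d, H):
    """Cov(W_b - W_a, W_d - W_c), expanded by bilinearity."""
    return fbm_cov(b, d, H) - fbm_cov(b, c, H) - fbm_cov(a, d, H) + fbm_cov(a, c, H)

def step_integral_cov(grid, f_vals, g_vals, H):
    """Covariance of the Wiener integrals of two step functions.

    f and g take the values f_vals[i], g_vals[i] on [grid[i], grid[i+1]).
    """
    total = 0.0
    for i, fi in enumerate(f_vals):
        for j, gj in enumerate(g_vals):
            total += fi * gj * increment_cov(
                grid[i], grid[i + 1], grid[j], grid[j + 1], H
            )
    return total

if __name__ == "__main__":
    H = 0.75
    # f = g = 1 on [0, 2]: the integral is W_2, whose variance is 2^(2H).
    print(step_integral_cov([0.0, 0.5, 1.2, 2.0], [1, 1, 1], [1, 1, 1], H), 2.0 ** (2 * H))
    # f = indicator of [0, 1), g = indicator of [0, 2): covariance of W_1 and W_2.
    print(step_integral_cov([0.0, 1.0, 2.0], [1, 0], [1, 1], H), fbm_cov(1.0, 2.0, H))
```

For \(H > 1/2\) each increment covariance equals the double integral of \(H(2H-1)|u-v|^{2H-2}\) over the corresponding rectangle, so this finite sum coincides with the kernel representation of the covariance for step functions.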

2.2.2 Stochastic Differential Equations Driven by Fractional Brownian Motion

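After establishing a suitable stochastic integral with respect to fractional Brownian motion, we consider stochastic differential equations (SDEs) given by

$$\begin{aligned} \mathrm {d}X_t = b(t,X_t) \, \mathrm {d}t + \sigma (t) \, \mathrm {d}W_t^H, \quad X_0 = x_0 \in {\mathbb {R}}. \end{aligned}$$(9)

The solution satisfies the integral formulation

$$\begin{aligned} X_t = x_0 + \int _0^t b(r,X_r) \, \mathrm {d}r + \int _0^t \sigma (r) \, \mathrm {d}W_r^H, \end{aligned}$$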

where the stochastic integral was constructed in Sect. 2.2.1. Under certain classical regularity assumptions, existence and uniqueness of solutions for (9) can be proven. For more details, see [5, Theorem D.2.4].

Theorem 2.10

Let \(b: [0, \infty ) \times {\mathbb {R}}\rightarrow {\mathbb {R}}\) be globally Lipschitz in both variables, \(\sigma \in {\mathcal {C}}^1([0,\infty ))\) with \(\sigma \) and \(\frac{\mathrm {d}}{\mathrm {d}t}\sigma \) globally Lipschitz. Then for every \(T > 0\) the SDE (9) has a unique continuous solution on [0, T] a.s.

One case we have to consider in this work is a time-dependent linear drift, i.e., \(b(t,\cdot ):{\mathbb {R}}\rightarrow {\mathbb {R}}\) is linear with \(b(t,x):=A(t)x\) for every \(t\in [0,\infty )\) and \(x\in {\mathbb {R}}\). In this case, the solution of (9) is given by the variation of constants formula/Duhamel’s formula and is called the non-autonomous fractional Ornstein–Uhlenbeck process.

Theorem 2.11

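(Non-autonomous Fractional Ornstein–Uhlenbeck Process) Let \(A, B : [0, \infty ) \rightarrow {\mathbb {R}}\). Suppose that A is globally Lipschitz and uniformly bounded, and \(B \in {\mathcal {C}}^1 ([0, \infty ))\) with B as well as \(\frac{\mathrm {d}}{\mathrm {d}t}B\) globally Lipschitz. Then there exists an a.s. unique solution to the stochastic differential equation

$$\begin{aligned} \mathrm {d}X_t = A(t) X_t \, \mathrm {d}t + B(t) \, \mathrm {d}W_t^H, \quad X_0 = x_0 \in {\mathbb {R}}, \end{aligned}$$(10)

which satisfies the variation of constants formula

$$\begin{aligned} X_t = e^{\int _0^t A(u) \, \mathrm {d}u} x_0 + \int _0^t e^{\int _r^t A(u) \, \mathrm {d}u} B(r) \, \mathrm {d}W_r^H,~~ \text{ a.s. } \end{aligned}$$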

Remark 2.12

Note that all the results discussed in this subsection extend to higher dimensions, since all previous steps can be done component-wise. Namely, for \(m\ge 1\) we note the following.

-

(R1)

We call \((W^{H}_{t})_{t\ge 0}\) an m-dimensional fractional Brownian motion if \(W^{H}_{t}:=\sum \limits _{k=1}^{m} W^{k,H}_{t} e_{k}\), where \((e_{k})_{k=1}^{m}\) is a basis of \({\mathbb {R}}^{m}\) and \((W^{k,H}_{t})_{t\ge 0}\), \(k=1,\ldots ,m\), are independent one-dimensional fractional Brownian motions with the same Hurst index H.

-

(R2)

Naturally, existence and uniqueness of solutions of SDEs in higher dimensions carry over from Theorem 2.10 under the corresponding assumptions. In particular, for coefficients \(A,B:[0,\infty )\rightarrow {\mathbb {R}}^{m}\) with \(m\ge 1\), satisfying the same assumptions as in Theorem 2.11, the solution of (10) is given by

$$\begin{aligned} X_t = \Phi (t,0) x_0 + \int _0^t \Phi (t,r) B(r) \mathrm {d}W_r^H,~~ \text{ a.s., } \end{aligned}$$

where \(\Phi \) denotes the fundamental solution of \(x_t' = A(t)x_t\) and \((W^{H}_{t})_{t\ge 0}\) is an m-dimensional fractional Brownian motion.

2.3 Useful Estimates of Gaussian Processes

The fact that fBm is not a semi-martingale restricts the repository of known inequalities (such as Doob or Burkholder–Davis–Gundy) available to establish sample paths estimates. A crucial property of fBm we shall exploit is its Gaussianity. In this section we will describe some useful estimates for exit times of certain Gaussian processes, which will be helpful for our analysis in the upcoming sections.

We first state the next auxiliary result regarding the Laplace transform of a Gaussian process. This was established in [12] by means of Malliavin calculus.

Lemma 2.13

(Proposition 3.5 [12]) Let \((Y_t)_{t\ge 0}\) be a centered Gaussian process with \(Y_0 = 0\) and covariance function \(R(s,t) := {\mathbb {E}}[Y_s Y_t]\) satisfying the following conditions:

-

(i)

\(\frac{\partial }{\partial s}R(s,t)\) exists and is continuous as a function on \([0, \infty ) \times [0, \infty )\),

-

(ii)

\(\frac{\partial }{\partial s}R(s,t) \ge 0\) for all \(t,s \ge 0\),

-

(iii)

\({\mathbb {E}}[\left| Y_t - Y_s \right| ^2] > 0\) for all \(t > s \ge 0\),

-

(iv)

\(\limsup _{t \rightarrow \infty } Y_t = \infty \) a.s.

Then for any \(\alpha >0\):

where \(V_{t} := R(t,t)\) and \(\tau _{c} := \inf \{r > 0: Y_r \ge c \}\).

In addition, we require the following form of Chebychev’s inequality.

Lemma 2.14

Let \(\varphi : {\mathbb {R}}\rightarrow [0, \infty )\) be measurable, Z a random variable and \(A \in {\mathcal {B}}({\mathbb {R}})\). Then

Proof

Under these assumptions we have

Taking expectation in the above inequality yields the result. \(\square \)

Lemma 2.15

Let \(c>0\) and \((Y_{t})_{t\ge 0}\) be a centered Gaussian process with \(Y_0=0\) satisfying the assumptions (i)–(iv) of Lemma 2.13. Then, for its exit time \(\tau _{c} := \inf \{r > 0: Y_r \ge c \}\), the following estimate holds:

Proof

Applying Lemma 2.14 for \(Z:=\tau _c\), \(\varphi (r) := \exp \left( \alpha V_r\right) \) and \(A := (0,t)\) we can bound the probability \({\mathbb {P}}( \tau _c < t)\) together with (11) as follows:

for all \(\alpha > 0\). Optimizing over \(\alpha \) and noticing that \(\sup _{0< r < t} V_r = \mathrm {Var}(Y_t)\) proves the statement. \(\square \)
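The optimization over \(\alpha \) in the proof above can be made explicit. The sketch below rests on an assumption about the displayed formulas, which are not reproduced in this text: we assume that (11) has the form \({\mathbb {E}}[\exp (-\alpha V_{\tau _c})] \le \exp (-c\sqrt{2\alpha })\), as in [12], so that the Chebyshev step yields the exponent \(\alpha V - c\sqrt{2\alpha }\) with \(V = \mathrm {Var}(Y_t)\). Under this assumption, the minimizer is \(\alpha ^* = c^2/(2V^2)\) and the minimal exponent is \(-c^2/(2V)\). All function names are hypothetical.

```python
import math

def exponent(alpha, c, V):
    """Assumed exponent alpha * V - c * sqrt(2 * alpha) of the bound on P(tau_c < t)."""
    return alpha * V - c * math.sqrt(2.0 * alpha)

def optimal_alpha(c, V):
    """Stationary point of alpha -> exponent(alpha, c, V): V = c / sqrt(2 * alpha)."""
    return c * c / (2.0 * V * V)

def grid_minimum(c, V, n=20000):
    """Crude grid search over alpha in (0, 4 * alpha*] as an independent check."""
    a_star = optimal_alpha(c, V)
    return min(exponent(4.0 * a_star * k / n, c, V) for k in range(1, n + 1))

if __name__ == "__main__":
    c, V = 2.0, 0.5
    print(exponent(optimal_alpha(c, V), c, V), -c * c / (2.0 * V), grid_minimum(c, V))
```

The resulting bound \(\exp (-c^2/(2V))\) has the same exponent as the one-point Gaussian tail estimate, which is why such an inequality is referred to as being of Bernstein type.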

The previous lemma established a Bernstein-type inequality solely relying on certain properties of the covariance function of Gaussian processes. Another useful estimate is given by [43, Theorem D.4], which is based on Slepian’s Lemma [48].

Theorem 2.16

Let \(T>0\) and \((Y_t)_{t\in [0,T]}\) be a centered Gaussian process with a.s. continuous trajectories. Assume that \((Y_t)_{t\in [0,T]}\) is a.s. mean-square Hölder continuous, i.e. there are constants G and \(\gamma \) such that

Then there exists a constant \(K := K(G,\gamma )\) such that for \(c > 0\) and \(A \subset [0,T]\)

where \(\sigma ^2(A) := \sup _{t \in A} \mathrm {Var}\left( Y_t\right) \).

This estimate can be sharpened if we restrict ourselves to the interval of interest.

Corollary 2.17

Let \(T>0\) and \((Y_t)_{t\in [0,T]}\) be a centered Gaussian process with a.s. continuous trajectories. Assume that \((Y_t)_{t\in [0,T]}\) is a.s. mean-square Hölder continuous, i.e. there are constants G and \(\gamma \), such that

Then there exists a constant \(K := K(G,\gamma )\) such that for \(c > 0\) and \(0 \le a < b \le T\)

where \(\sigma ^2 := \sup _{a \le t < b} \mathrm {Var}\left( Y_t\right) \).

Proof

\((Z_t)_t\) with \(Z_t := Y_{t+a}\) satisfies the assumptions of Theorem 2.16 on \([0,b-a]\). \(\square \)

3 The One-Dimensional Case

In this section, we investigate the dynamics of a planar stochastic fast-slow system driven by fractional Brownian motion \((W_s^H)_{s\ge 0}\) with Hurst parameter \(H >\frac{1}{2}\):

Its equivalent formulation in slow time, i.e. for \(t = \varepsilon s\) is

using the self-similarity of fBm (6). We are interested in the normally hyperbolic stable case and therefore make the following assumptions.

Assumption 3.1

Stable case

-

(1)

Regularity: The functions \(f \in {\mathcal {C}}^2({\mathbb {R}}\times [0,\infty )^2;{\mathbb {R}})\) and \(F \in {\mathcal {C}}^1([0,\infty );(0,\infty ))\), as well as all their existing derivatives up to order two are uniformly bounded on an interval \(I = [0, \infty )\) or \(I = [0,T]\), \(T > 0\), by a constant \(M > 0\).

-

(2)

Critical manifold: There is an \(x^*: [0,\infty ) \rightarrow {\mathbb {R}}\) such that

$$\begin{aligned} f(x^*(t),t,0) = 0 \end{aligned}$$

for all \(t \in [0,\infty )\).

-

(3)

Stability: For \(a(t) := \partial _x f(x^*(t),t,0)\) there is \(a > 0\) such that

$$\begin{aligned} a(t) \le -a \end{aligned}$$

for all \(t \in [0,\infty )\).

Under these assumptions, (12) has a unique global solution according to Theorem 2.10. Furthermore, the deterministic system, i.e., for \(\sigma = 0\), given by

has an asymptotically stable slow manifold \({\bar{x}}(t, \varepsilon ) = x^*(t) + {\mathcal {O}}(\varepsilon )\) for \(\varepsilon > 0\) small enough due to Fenichel–Tikhonov (Theorem 2.2). We expect that, given small noise \(0 < \sigma \ll 1\), the trajectories of (12) starting sufficiently close to \({\bar{x}}(0, \varepsilon )\) remain in a properly chosen neighborhood of \({\bar{x}}(t, \varepsilon )\) for a long time with high probability. Our goal will be to make this idea rigorous by pursuing the following steps. We first linearize the system around the slow manifold to get an SDE describing the deviations induced by the noise. This helps us obtain a simple description of a suitable neighborhood by using the fast-slow structure inherited by the variance of the system. Then, using this neighborhood, we deduce sample paths estimates for the linear case starting on the slow manifold. To complete the discussion we generalize the result to the non-linear case starting sufficiently close to the slow manifold, that is, such that in the deterministic case solutions are still attracted by the slow manifold. This general strategy is inspired by [4], where a similar system driven by Brownian motion (Hurst parameter \(H = \frac{1}{2}\)) is analyzed. Yet, several techniques used in [4] do not generalize to fBm.

3.1 The Linearized System

The deterministic system

has an asymptotically stable slow manifold \({\bar{x}}(t, \varepsilon ) = x^*(t) + {\mathcal {O}}(\varepsilon )\) due to Fenichel–Tikhonov (Theorem 2.2). As already outlined, our first step is to examine the behavior of the linearized system around \({\bar{x}}(t, \varepsilon )\). For a solution \((x_t)_{t \in I}\) of (12) we set \(\xi _t: = x_t - {\bar{x}}(t, \varepsilon )\). Then \((\xi _t)_{t \in I}\) satisfies the equation

where

by Taylor’s remainder theorem. Due to the uniform boundedness of the derivatives of f one can show that the \({\mathcal {O}}(\varepsilon )\)-term is negligible on finite time scales as \(\varepsilon \rightarrow 0.\) Therefore, we restrict ourselves without loss of generality to the analysis of the linearization

Examining the process starting on the slow manifold now corresponds to investigating the unique explicit solution of (14) for initial value \(\xi _0 = 0\), which is given by the fractional Ornstein–Uhlenbeck process (recall Theorem 2.11)

where \(\alpha (t,u) := \int _u^t a(r) \mathrm {d}r\). In order to define a proper neighborhood, where the fractional Ornstein–Uhlenbeck process \((\xi _t)_{t \in I}\) is going to stay with high probability, we use the variance \(\mathrm {Var}(\xi _t)\) as an indicator for the deviations at time t. According to Proposition 2.9, the variance is given by

Since we are interested in the dynamics of \(t \mapsto \mathrm {Var}(\xi _t)\), we rescale it by \(\frac{1}{\sigma ^2}\), i.e., we set \(w(t) := \frac{1}{\sigma ^2}\mathrm {Var}(\xi _t)\), to get rid of the small parameter \(\sigma \ll 1\), which only changes the order of magnitude of the system. It turns out that \(t \mapsto w(t)\) inherits the fast-slow structure from the SDE, which yields a particularly simple approximation of the variance.
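To get a feeling for the size of the rescaled variance, one can evaluate it numerically in the simplest situation. The following sketch is an illustration under assumptions that are not part of the analysis: the coefficients are constant, \(a(t) \equiv -|a|\) and \(F(t) \equiv F\), and the variance of the fractional Ornstein–Uhlenbeck process is taken to be \(\sigma ^2 \varepsilon ^{-2H} H(2H-1)F^2\) times the double integral of \(e^{-|a|(2t-u-v)/\varepsilon }|u-v|^{2H-2}\) over \([0,t]^2\). Reducing the double integral to a single one in \(r = |u-v|\) and substituting \(r = x^{1/(2H-1)}\) removes the integrable singularity at \(r = 0\). The function names are hypothetical.

```python
import math

def renormalized_variance(t, a_abs, F, H, eps, n=20000):
    """w(t) = Var(xi_t) / sigma^2 for constant coefficients, H in (1/2, 1).

    Uses w(t) = F^2 * eps^(-2H) * H * int_0^{t^(2H-1)} g(x^(1/(2H-1))) dx with
    g(r) = exp(-lam * r) * (1 - exp(-2 * lam * (t - r))) / lam, lam = a_abs / eps,
    evaluated by the midpoint rule.
    """
    lam = a_abs / eps
    p = 1.0 / (2.0 * H - 1.0)
    x_max = t ** (2.0 * H - 1.0)
    dx = x_max / n
    total = 0.0
    for i in range(n):
        r = ((i + 0.5) * dx) ** p
        total += math.exp(-lam * r) * (1.0 - math.exp(-2.0 * lam * (t - r))) / lam
    return F * F * eps ** (-2.0 * H) * H * total * dx

def limiting_level(a_abs, F, H):
    """Candidate limit F^2 * H * Gamma(2H) / |a|^(2H) of w(t) for t >> eps."""
    return F * F * H * math.gamma(2.0 * H) / a_abs ** (2.0 * H)

if __name__ == "__main__":
    for t in (0.05, 0.2, 2.0):
        print(t, renormalized_variance(t, 1.0, 1.0, 0.75, 0.1), limiting_level(1.0, 1.0, 0.75))
```

In this constant-coefficient setting the computed values approach \(F^2 H \Gamma (2H)/|a|^{2H}\) on a time scale of order \(\varepsilon \), independently of \(\varepsilon \) itself, which is the kind of fast relaxation to a slowly varying level that a fast-slow structure of the variance suggests.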

Proposition 3.2

The so-called renormalized variance w satisfies the fast-slow ODE

In particular, there is a (globally) asymptotically stable slow manifold of the system of the form

Proof

Differentiating \(t \mapsto w(t)\) yields

In order to be able to take the singular limit \(\varepsilon \rightarrow 0\) and apply Fenichel–Tikhonov (Theorem 2.2) we need to prove sufficient regularity in \(\varepsilon = 0\); continuous differentiability will be enough for the approximation of the slow manifold with the critical manifold up to order \({\mathcal {O}}(\varepsilon )\). To do this, rewrite the integral by substituting \(v = \frac{t-u}{\varepsilon }\)

To see that the right hand side of (15) is continuously differentiable in \(\varepsilon = 0\) it is sufficient to check it for the integral term

which has an existing limit for \(\varepsilon \rightarrow 0,\) because the exponential term goes to 0 faster than the polynomial term diverges. Now taking the singular limit \(\varepsilon \rightarrow 0\) gives the slow subsystem

The critical manifold is hence given by

Using integration by parts we can rewrite \((2H-1)\Gamma (2H-1) = \Gamma (2H)\), so that the critical manifold can also be written as

By Theorem 2.2, the ODE (15) has a solution of the form

which is asymptotically stable due to Assumption 3.1 (3). This stability property is even global in this case because the ODE (15) is linear. \(\square \)

As expected, the critical manifold depends on the Hurst parameter H. Note that as \(\Gamma (x) \rightarrow 1\) for \(x \rightarrow 1\), the slow subsystem for \(H \rightarrow \frac{1}{2}\) reads

which coincides with the slow subsystem we would obtain in the case of Brownian motion noise, which exactly corresponds to \(H = \frac{1}{2}\).
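The dependence of the critical manifold on H and its Brownian limit can be checked with a few lines of code. The sketch below uses the expression \(\frac{F(t)^2}{|a(t)|^{2H}} H \Gamma (2H)\) for the critical manifold and compares it, at \(H = 1/2\), with the value \(F(t)^2/(2|a(t)|)\); the latter is the equilibrium of the variance equation \(\varepsilon \dot{v} = 2a(t)v + F(t)^2\) known from the Brownian case and is stated here as an assumption, since the corresponding display is not reproduced in this text. The function names are hypothetical.

```python
import math

def critical_manifold(F_t, a_t, H):
    """w*(t) = F(t)^2 * H * Gamma(2H) / |a(t)|^(2H)."""
    return F_t ** 2 * H * math.gamma(2.0 * H) / abs(a_t) ** (2.0 * H)

def brownian_level(F_t, a_t):
    """Assumed equilibrium F(t)^2 / (2 |a(t)|) in the Brownian case H = 1/2."""
    return F_t ** 2 / (2.0 * abs(a_t))

if __name__ == "__main__":
    F_t, a_t = 1.5, -2.0
    for H in (0.5, 0.6, 0.75, 0.9):
        print(H, critical_manifold(F_t, a_t, H), brownian_level(F_t, a_t))
```

Since \(H \Gamma (2H) = \frac{1}{2}\Gamma (1) = \frac{1}{2}\) at \(H = 1/2\), the two expressions agree there, consistent with the limit discussed above.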

Remark 3.3

The proof of Proposition 3.2 only shows that \(\zeta \) is \({\mathcal {C}}^1\) in \(\varepsilon \) and \({\mathcal {C}}^1\) in the time t. Depending on the properties of f, we expect \(\zeta \) to even have higher regularity. However, this fact is not required in the following considerations.

Proposition 3.2 already states that the slow manifold is a good indicator for the size of the set we are looking for as \(t \mapsto \frac{1}{\sigma ^2} \mathrm {Var}(\xi _t)\) (as a solution of (15) with initial datum \(w(0) = 0\)) is attracted by the slow manifold. In this particular case we can explicitly state the exponentially fast approach due to the structure of the linear equation

where \(\alpha (t) := \alpha (t,0)\). Even more is known about the properties of \(\zeta \). Due to the uniform boundedness assumption on f and F, the difference between \(\zeta \) and \(\frac{F(t)^2}{\left| a(t)\right| ^{2H}} H \Gamma (2H)\) is actually of order \({\mathcal {O}}(\varepsilon )\) uniformly in t. This implies that for \(\varepsilon \) small enough there are \(\zeta ^+\) and \(\zeta ^-\) such that

The goal is now to prove that the stochastic process \((\xi _t)_{t \in I}\) is concentrated in sets of the form

To get a better understanding of what to expect, note that the probability that \(\xi \) leaves \({\mathcal {B}}(h)\) at time t can be bounded by using the inequality \({\mathbb {P}}(X>c) \le \exp \left( - \frac{c^2}{2\mathrm {Var}(X)}\right) \), which holds for any centered Gaussian random variable X. This further leads to

Of course, the probability that \((\xi _t)_{t \in I}\) has exited \({\mathcal {B}}(h)\) in the interval [0, t] at least once

is larger, where \(\tau _{{\mathcal {B}}(h)} := \inf \{ r > 0: (\xi _r,r) \notin {\mathcal {B}}(h)\}\) is the first time \((\xi _t)_{t \in I}\) has exited \({\mathcal {B}}(h)\). In the following, we present a few approaches to estimate this probability, using the inequalities established in Sect. 2.3. The increase of probability compared to (18) is simply indicated by the prefactor of \(\exp \left( - \frac{h^2}{2\sigma ^2}\right) \).
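The one-point Gaussian inequality \({\mathbb {P}}(X>c) \le \exp \left( - \frac{c^2}{2\mathrm {Var}(X)}\right) \) used above is easy to check against the exact tail, which is available through the complementary error function. The sketch below is purely illustrative; the final lines additionally assume that the boundary of \({\mathcal {B}}(h)\) sits at level \(h\sqrt{\zeta (t)}\) and that \(\mathrm {Var}(\xi _t) \approx \sigma ^2 \zeta (t)\), which is how the exponent \(-h^2/(2\sigma ^2)\) would arise. The function names and the numerical values are hypothetical.

```python
import math

def gaussian_tail(c, var):
    """Exact P(X > c) for a centered Gaussian X with variance var."""
    return 0.5 * math.erfc(c / math.sqrt(2.0 * var))

def exponential_bound(c, var):
    """Upper bound exp(-c^2 / (2 var)), valid for c >= 0."""
    return math.exp(-c * c / (2.0 * var))

if __name__ == "__main__":
    for c in (0.0, 0.5, 1.0, 3.0):
        print(c, gaussian_tail(c, 1.0), exponential_bound(c, 1.0))
    # Assumed scaling: level h * sqrt(zeta), variance sigma^2 * zeta.
    h, sigma, zeta = 0.3, 0.1, 0.7
    print(exponential_bound(h * math.sqrt(zeta), sigma ** 2 * zeta),
          math.exp(-h ** 2 / (2.0 * sigma ** 2)))
```

Under the stated scaling the value of \(\zeta \) cancels, so the bound depends only on the ratio \(h/\sigma \).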

Remark 3.4

It could also have been possible to define the neighborhood \({\mathcal {B}}(h)\) by considering the critical manifold \(w^*\), i.e. to define

This yields the same bounds on the exit times as the ones we establish in the following, because the difference between \(\zeta (t)\) and \(w^*(t)\) is only in \({\mathcal {O}}(\varepsilon ),\) which is of the same order as the error we obtain by approximating with the slow manifold \(\zeta \) anyway.
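The approach of the renormalized variance toward its limiting value \(\frac{F(t)^2}{\left| a(t)\right| ^{2H}} H \Gamma (2H)\) can be illustrated in the simplest setting. The sketch below rests on assumptions that are not taken from the text: constant coefficients \(a(t) \equiv -a_0\) and \(F \equiv 1\), and a linear equation of the form \(\mathrm {d}\xi _t = -\frac{a_0}{\varepsilon }\xi _t \,\mathrm {d}t + \frac{\sigma }{\varepsilon ^H}\,\mathrm {d}W^H_t\) with \(\xi _0 = 0\). Under these assumptions the variance reduces to a one-dimensional integral, which is evaluated with a midpoint rule after a substitution that removes the weak singularity of the fBm kernel. The function names are hypothetical.

```python
import math

def renormalized_variance(t, eps, a0=1.0, hurst=0.7, n=20000):
    """Var(xi_t) / sigma^2 for the assumed constant-coefficient linear equation.

    The double integral against the kernel H(2H-1)|u-v|^(2H-2) is reduced to
    (H/a0) * int_0^{T^(2H-1)} exp(-a0 w) (1 - exp(-2 a0 (T - w))) dz,
    with T = t/eps and w = z^(1/(2H-1)); a midpoint rule in z is then used.
    """
    big_t = t / eps                      # time measured on the fast scale
    p = 1.0 / (2.0 * hurst - 1.0)
    z_max = big_t ** (2.0 * hurst - 1.0)
    dz = z_max / n
    total = 0.0
    for k in range(n):
        w = ((k + 0.5) * dz) ** p
        total += math.exp(-a0 * w) * (1.0 - math.exp(-2.0 * a0 * (big_t - w)))
    return hurst / a0 * total * dz

def limiting_variance(a0=1.0, hurst=0.7):
    """Leading-order value H * Gamma(2H) / |a|^(2H) with F = 1."""
    return hurst * math.gamma(2.0 * hurst) / a0 ** (2.0 * hurst)

if __name__ == "__main__":
    eps = 0.01
    for t in (0.001, 0.01, 0.05, 1.0):
        gap = limiting_variance() - renormalized_variance(t, eps)
        print(f"t={t:5.3f}  gap to limit={gap:.3e}")
```

In this simplified setting the gap to the limiting value shrinks rapidly once t exceeds a few multiples of \(\varepsilon \), in line with the attraction toward the slow manifold described above.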

3.1.1 Variant 1

The first approach is based on the result on exit times of Gaussian processes with sufficiently regular and increasing covariance function, as stated in Lemma 2.15.

Theorem 3.5

Let \(t \in I.\) Then under Assumption 3.1 for any \(h>0\) the following estimate holds true for \(\varepsilon >0\) sufficiently small

Proof

In order to apply the estimate given in Lemma 2.15 to our problem, observe that \(\xi \) may not satisfy all the assumptions. First of all, we need well-definedness of the Ornstein–Uhlenbeck process over the whole non-negative real line \([0,\infty )\); this is guaranteed by Assumption 3.1. In addition, we consider the process given by \(X_t = e^{-\alpha (t)/\varepsilon }\xi _t\). Note that the event that \(\xi _t\) exceeds a certain level \(c \ge 0\) corresponds exactly to the event of \(X_t\) exceeding \(e^{-\alpha (t)/\varepsilon }c\), that is \(\{ \xi _t \ge c\} = \{ X_t \ge e^{-\alpha (t)/\varepsilon }c\}\). Unfortunately, this observation does not carry over to \(\sup _{0 \le r \le t} X_r\) and \(\sup _{0 \le r \le t} \xi _r\). In fact, a priori we only have the relation \(\{ \sup _{0 \le r \le t} \xi _r \ge c\} \subset \{ \sup _{0 \le r \le t} X_r \ge c\}\), which yields an estimate that is too crude in the end, as we are increasing the variance by an exponentially increasing factor while maintaining the same exit level. A way to overcome this is to partition the interval [0, t] into suitable subintervals \([t_i,t_{i+1})\) to obtain the relation \(\{\sup _{t_i \le r \le t_{i+1}} \xi _r \ge c\} \subset \{ \sup _{t_i \le r \le t_{i+1}} X_r \ge e^{-\alpha (t_i)/\varepsilon }c\}\). This partition will also turn out to be useful to control the variance with the slow manifold in the exponential of the estimate. The covariance of \((X_t)_{t \in I}\) is given by

By the theorem on differentiation of parameter-dependent integrals we deduce that \(\frac{\partial }{\partial t} R(t,r)\) exists and is continuous in \([0,\infty )\times [0,\infty )\). Furthermore, \(\frac{\partial }{\partial t} R(t,r) \ge 0\) for all \(t,r \ge 0\) is an immediate consequence of the fundamental theorem of calculus, as the integrand is always nonnegative. This already implies assumptions (i) and (ii) of Lemma 2.15. For assumption (iii) it suffices to observe that

is a Gaussian process with nonzero variance. Assumption (iv) follows by Corollary A.2. This implies that \((e^{-\alpha (t)/\varepsilon }\xi _t)_{t \in I}\) satisfies a Bernstein-type inequality due to Lemma 2.15

After having established this result, we can proceed as in the proof of [4, Proposition 3.1.5]. For \(\gamma \in (0, 1/2)\) let \(0 = t_0< t_1< \ldots < t_N\) be a partition covering the interval [0, t] such that

(Note that \(t_N \ge t\) is possible. But this only increases the estimate on the probability slightly. As we would like to optimize over \(\gamma \) in the end, it does not make sense to fix it to obtain \(t_N = t\).) For each \(i \in \{0, \ldots , N-1\}\) we have

where the last inequality follows by \({\dot{\zeta }}(r) = {\mathcal {O}}(1)\), which is proven in Lemma 3.6. Now by subadditivity of the probability measure

where the last inequality is due to \(e^{-2\gamma } \ge 1 - 2\gamma \). Due to monotonicity of \(\lceil \cdot \rceil \), it suffices to minimize

in order to find the minimal value of this estimate. Optimizing over \(\gamma \) hence yields

which finishes the proof. \(\square \)
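The optimization over \(\gamma \) in the proof above can be made explicit in a schematic setting. The following worked computation assumes, as a labeled assumption and not as a statement taken from the text, that the number of subintervals contributes a factor proportional to \(1/\gamma \) and that the exponent is weakened by the factor \(1 - 2\gamma \), so that the quantity to be minimized has the form \(g(\gamma )\) below.

```latex
% Assumed schematic form of the quantity to be minimized:
\[
  g(\gamma) = \frac{1}{\gamma}\exp\!\left(\gamma\,\frac{h^2}{\sigma^2}\right),
  \qquad \gamma \in (0, 1/2).
\]
% First-order condition:
\[
  g'(\gamma)
  = \left(\frac{h^2}{\sigma^2} - \frac{1}{\gamma}\right)
    \frac{1}{\gamma}\exp\!\left(\gamma\,\frac{h^2}{\sigma^2}\right) = 0
  \quad\Longleftrightarrow\quad
  \gamma^* = \frac{\sigma^2}{h^2}.
\]
% Value at the minimizer:
\[
  g(\gamma^*) = e\,\frac{h^2}{\sigma^2}.
\]
```

Since \(g(\gamma ) \rightarrow \infty \) both for \(\gamma \rightarrow 0\) and for \(\gamma \rightarrow \infty \), the critical point is a minimum. It lies in \((0,1/2)\) only if \(h^2 > 2\sigma ^2\), which is compatible with the regime \(h \gg \sigma \).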

Lemma 3.6

The slow manifold \(\zeta (t)\) satisfies \({\dot{\zeta }}(t) = {\mathcal {O}}(1)\).

Proof

Note that

satisfies the corresponding invariance equation for the fast-slow ODE (15) up to error \({\mathcal {O}}(\varepsilon ).\) This implies that \(\zeta (t) = w^*(t,\varepsilon ) + {\mathcal {O}}(\varepsilon )\). Plugging this representation of \(\zeta \) into the ODE (15) yields directly \(\varepsilon {\dot{\zeta }}(t) = {\mathcal {O}}(\varepsilon )\). \(\square \)

3.1.2 Variant 2

The second approach uses the fact that \((\xi _t)_{t \in I}\) is mean-square Hölder continuous. This enables us to control the deviations by means of Theorem 2.16.
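Mean-square Hölder continuity with exponent H is the regularity of the driving noise itself: for standard fractional Brownian motion with covariance \(\frac{1}{2}\left( s^{2H} + t^{2H} - |t-s|^{2H}\right) \) one has \({\mathbb {E}}|W^H_t - W^H_s|^2 = |t-s|^{2H}\). The following minimal sketch verifies this identity and the positive correlation of increments for \(H > 1/2\); the function names are illustrative.

```python
def fbm_cov(s: float, t: float, hurst: float) -> float:
    """Covariance E[W_s W_t] of standard fractional Brownian motion."""
    return 0.5 * (s ** (2 * hurst) + t ** (2 * hurst) - abs(t - s) ** (2 * hurst))

def increment_variance(s: float, t: float, hurst: float) -> float:
    """E|W_t - W_s|^2 computed from the covariance function."""
    return fbm_cov(t, t, hurst) + fbm_cov(s, s, hurst) - 2.0 * fbm_cov(s, t, hurst)

def unit_increment_cov(hurst: float) -> float:
    """Cov(W_1 - W_0, W_2 - W_1), which equals 2^(2H-1) - 1."""
    return (fbm_cov(1.0, 2.0, hurst) - fbm_cov(1.0, 1.0, hurst)
            - fbm_cov(0.0, 2.0, hurst) + fbm_cov(0.0, 1.0, hurst))

if __name__ == "__main__":
    for hurst in (0.5, 0.6, 0.7, 0.8):
        print(hurst, increment_variance(0.3, 0.8, hurst), unit_increment_cov(hurst))
```

For \(H = 1/2\) the increment covariance vanishes (Brownian motion), while for \(H > 1/2\) it is strictly positive.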

Theorem 3.7

Let \(t \in I.\) Then under Assumption 3.1 there is a constant \(K = K(t,\varepsilon ,\sigma ,H) > 0\), such that for any \(h>0\) the following estimate holds true for \(\varepsilon >0\) sufficiently small

where \(F_+ := \sup _{r \in [0,\infty )} F(r) < \infty .\)

Proof

Let \(t>0\). In order to apply Theorem 2.16 we have to prove mean-square Hölder continuity of \(\left( \xi _r\right) _{r\in [0,t]}\). For \(t\ge r_1 > r_2 \ge 0\)

Lipschitz continuity of \(r \mapsto e^{\alpha (r,u)/\varepsilon }\) for arbitrary \(u \ge 0\) (with Lipschitz constant \(L = \frac{a_+}{\varepsilon }\), where \(a_+ := \sup _{r \in I} |a(r)|\)) yields for (20)

Similarly we can show for (21)

Last but not least (22) can be estimated as follows

By combining the three estimates we obtain that, for a constant \(G = G(t,\varepsilon ,\sigma ,H) > 0\), it holds

Let \(0 = t_0< t_1< \ldots < t_N = t\) be a partition of the interval [0, t] such that

For each \(i \in \{0, \ldots , N-1\}\) we have by Corollary 2.17 for \(K = K(G, 2H)\)

where the last inequality follows by \({\dot{\zeta }}(r) = {\mathcal {O}}(1)\), see Lemma 3.6. This yields by the subadditivity of the probability measure

Therefore, the proof is finished. \(\square \)

3.2 Comparison of the Two Variants

In this section, we will compare the two variants in view of varying the noise intensity given by \(\sigma > 0\) and the time scale parameter \(\varepsilon > 0\). In order to better understand what to expect under these variations we first heuristically describe their effect on the underlying SDE

We can directly see that a smaller \(\sigma \) reduces the intensity of the fractional Brownian motion noise. In particular, as \(\sigma \) decreases, the probability that \((\xi _r)_{r \in I}\) exits \({\mathcal {B}}(h)\) on some interval [0, t] should become smaller. For a smaller \(\varepsilon \) the attraction towards the slow manifold becomes stronger; however, the noise intensity also increases. We expect small deviations if \(\frac{\sigma }{\varepsilon ^H}\) is sufficiently small.

Suppose we are in the situation of Theorems 3.5 and 3.7. To simplify the comparison, the results of both variant 1

and variant 2

where \(K = K(t,\varepsilon ,\sigma ,H) > 0\), are displayed here. Unfortunately, we do not know the dependence of K on the other parameters, so we can only do a qualitative comparison to some extent. By looking at the proof of Theorem 3.7 we conjecture that K is increasing in G, and we therefore assume for the forthcoming analysis that K is increasing in \(\sigma \), decreasing in \(\varepsilon \) and increasing in t. In variant 1, we see the same interplay of \(\sigma \) and h as already observed in the analysis of (18) because the exponential dominates the linear term in the prefactor, and the same holds true for variant 2 as long as \(\varepsilon \ll \frac{\sigma ^2}{h^2}\) (for \(\exp \left( \frac{h^2}{2\sigma ^2}{\mathcal {O}}(\varepsilon )\right) \) to remain relatively small), which is true in many applications. For \(\varepsilon \rightarrow 0\) the estimate in variant 1 becomes larger, whereas in variant 2 it does not seem to have a huge effect on the bound. However, the increase might be hidden in K. As the time t increases, it obviously becomes more likely that \(\xi _t\) has already exited \({\mathcal {B}}(h)\) at least once. In variant 1 this increase is displayed linearly in t as \(r \mapsto a(r)\) is uniformly bounded and thus \(\alpha (t) = \Theta (t)\). Variant 2 shows an increase which is at least linear in t because K might be increasing in t as well. This means that in variant 1 we have to pick h large enough such that \(\frac{h^2}{\sigma ^2}\) is significantly larger than \(\ln \left( \frac{t}{\varepsilon }\right) \). For variant 2 we have to choose h such that \(\frac{h^2}{\sigma ^2}\) is larger than \(\frac{h^2}{\sigma ^2}{\mathcal {O}}(\varepsilon ) + \frac{1}{H}\ln (h) + \ln (Kt)\). Although we cannot prove it, it seems that variant 1 yields a sharper bound. Last but not least, note that the estimate in variant 1 coincides with the estimate derived for the Brownian motion case, see [4, Proposition 3.1.5]. The dependence on the Hurst parameter H is completely hidden in the structure of the neighborhood \({\mathcal {B}}(h)\), which depends on the slow manifold. This also intuitively makes sense because we are “almost” dividing by the variance. Furthermore, in the Brownian motion case this estimate is quite close to the actual distribution of the exit time \(\tau _{{\mathcal {B}}(h)}\), see [4, Theorem 3.1.6] and the comments below.

3.3 Back to the Original System

Now that we have convinced ourselves that the most promising estimate is given in Theorem 3.5 it remains to generalize the result to different scenarios which may be of interest.

3.3.1 The Nonlinear Case

Recall that we have rewritten the SDE (13) satisfied by the deviations around the slow manifold \(\xi _t = x_t - {\bar{x}}(t, \varepsilon )\)

with a being the linear drift term and b containing the (possible) nonlinearities of the equation satisfying \(\left| b(x,t,\varepsilon ) \right| \le M \left| x \right| ^2\). As we expect that the nonlinear term b does not influence the deviations too strongly near the critical manifold, we use the same neighborhood \({\mathcal {B}}(h)\). This in particular implies that we keep the same simple description of it, which we have derived in Proposition 3.2. The bound on b will help us to control it inside of \({\mathcal {B}}(h)\). For the case that \((x_t)_{t \in I}\) is starting on the slow manifold, i.e. \(\xi _0 = 0\) we obtain:

Theorem 3.8

Let \(t \in I\). For h sufficiently small it holds

where \(\kappa = 1 - {\mathcal {O}}(h)\).

Proof

As motivated before, we treat the nonlinear drift term b as a perturbation of the linear system, i.e. we split the solution of (13)

where \(\xi ^0_t\) is a solution to the linear system, which we have already studied in detail, and

Then for \(h = h_0 + h_1\), \(\,h_0, h_1 \ge 0\) we consider

It remains to prove that \(P_1(h_1)\) is small. Observe that due to continuity of \((\xi _t)_{t \in I}\) we have for \(r < \tau _{{\mathcal {B}}(h)}\)

This enables us to control \(\frac{\left| \xi ^1_r \right| }{\sqrt{\zeta (r)}}\) inside of \({\mathcal {B}}(h)\) thanks to the bound on the Taylor remainder term b

Hence, choosing \(h_1 = 2 \frac{Mh^2}{a}\frac{\zeta ^+}{\sqrt{\zeta ^-}}\) results in \(P_1(h_1) = 0\). Note that this choice is possible as long as \(h \le \frac{a}{2M} \frac{\sqrt{\zeta ^-}}{\zeta ^+}\), which is in \({\mathcal {O}}(1)\), so requiring \(h \gg \sigma \) is possible. Indeed, the choice of h is usually even “smaller” than \({\mathcal {O}}(1)\), so that \(h_0 = h - h_1 = h(1-{\mathcal {O}}(h))\). Applying Theorem 3.5 to \(h_0\) now yields the claim. \(\square \)
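The splitting \(h = h_0 + h_1\) used in the proof can be made concrete. The following minimal sketch computes \(h_1\) and \(h_0\) from the formula above and enforces the admissibility condition on h; the function name and the numerical values in the usage lines are hypothetical.

```python
import math

def split_level(h, a, M, zeta_minus, zeta_plus):
    """Return (h0, h1) with h1 = 2 M h^2 zeta_plus / (a sqrt(zeta_minus)).

    Raises ValueError if h exceeds a sqrt(zeta_minus) / (2 M zeta_plus),
    the largest level for which h0 = h - h1 remains nonnegative.
    """
    h_max = a / (2.0 * M) * math.sqrt(zeta_minus) / zeta_plus
    if not 0.0 < h <= h_max:
        raise ValueError(f"h must lie in (0, {h_max:.4g}]")
    h1 = 2.0 * M * h * h / a * zeta_plus / math.sqrt(zeta_minus)
    return h - h1, h1

if __name__ == "__main__":
    # Illustrative constants, not taken from the text.
    for h in (0.01, 0.05, 0.1, 0.3):
        h0, h1 = split_level(h, a=1.0, M=1.0, zeta_minus=0.5, zeta_plus=0.6)
        print(f"h={h:4.2f}  h0/h={h0 / h:.4f}")
```

The printed ratio \(h_0/h\) equals \(1 - h/h_{\max }\) with \(h_{\max } = \frac{a}{2M} \frac{\sqrt{\zeta ^-}}{\zeta ^+}\), which makes the relation \(h_0 = h(1-{\mathcal {O}}(h))\) explicit.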

Remark 3.9

With this approach we lose some accuracy (\(\kappa = 1 - {\mathcal {O}}(h)\) instead of \(\kappa = 1\)) in the exponential. A loss in the exponent increases the probability bound more than a comparable loss in the prefactor would. To overcome this difficulty it might be better to adapt the neighborhood depending on the nonlinearities.

3.3.2 Behavior Close to the Slow Manifold

For the deterministic system we get in the case of a uniformly asymptotically stable slow manifold that solutions starting close to it are attracted exponentially fast. Given low enough noise intensity a similar behavior can be observed in the noisy system, i.e., solutions have small deviations around the deterministic solution and after some (small) time \(t_0\) we can again observe small deviations around the slow manifold.

Theorem 3.10

Let \(t > 0\), \(d>0\). There is \(\delta > 0\) and some time \(t_0 > 0\) such that the solutions \((x_t)_{t \in I}\) of (12) with initial condition \(x_0\) satisfying

are attracted by the slow manifold. That is, up to time \(t_0\) the solution \((x_t)_{t \in I}\) is close to the deterministic solution \(x^{\det }\)

where the tilde denotes the modified quantities obtained by linearizing around \(x^{\det }\) instead of the slow manifold \({\bar{x}}(t,\varepsilon )\). After \(t_0\) we obtain almost the same behavior as in the case where \(x_0 = {\bar{x}}(0,\varepsilon )\), i.e. for \(t \ge t_0\)

Proof

Exponentially fast attraction means that there are constants \(\delta ,C,\kappa > 0\) such that for \(x_0 \in {\mathbb {R}}\) with \(\left| x_0 - {\bar{x}}(0,\varepsilon ) \right| < \delta \) it holds

Consider an initial value \(x_0 \in {\mathbb {R}}\) with \(\left| x_0 - {\bar{x}}(0,\varepsilon ) \right| < \delta \) and denote by \(x^{\det }\) the solution to the deterministic system (\(\sigma = 0\)) in (12). Instead of linearizing (12) around \({\bar{x}}(t,\varepsilon )\), as we did in (13), we linearize it around \(x^{\det }.\) This procedure yields qualitatively the same linearization, with \({\tilde{a}}(t) := \partial _x f(x_t^{\det }, t, 0)\) instead of \(a(t,\varepsilon ),\) or respectively a(t), see the discussion before, and \({\tilde{\zeta }}\) adapted accordingly. In particular, even for the nonlinear case we obtain by Theorem 3.8

where \({\tilde{\alpha }}(t) = \int _0^t {\tilde{a}}(r) \mathrm {d}r\) and \(\kappa = 1 - {\mathcal {O}}(h).\) Choose \(t_0\) such that for distance d

that is \(t_0 \ge \frac{\varepsilon }{\kappa } \ln \left( \frac{C\delta }{d}\right) \). Furthermore, we have by the mean value theorem

for some \({\tilde{x}} = \lambda x_t + (1- \lambda ) {\bar{x}}(t,\varepsilon ), \, \lambda \in [0,1].\) So that for \(a(t,\varepsilon ) = \partial _x f({\bar{x}}(t,\varepsilon ),t,\varepsilon )\)

We want this distance to be of order at most \(\varepsilon \), so that in total \(t_0 \ge \max \left\{ \frac{\varepsilon }{\kappa } \ln \left( \frac{C\delta }{d}\right) , \frac{\varepsilon }{\kappa } \ln \left( \frac{CM\delta }{\varepsilon }\right) \right\} .\) Then, up to time \(t_0\), we can use the estimate above for \((x_t)_{t \in I}\) close to its deterministic solution. And after \(t_0\) the process is already close to the slow manifold and its dynamics, so it makes sense to look at the deviations around \({\bar{x}}(r,\varepsilon )\). Splitting again \(h = h_0 + h_1, \, h_0, h_1 \ge 0\)

Choosing \(h_1 = d\) we obtain \({\mathbb {P}}\left( \sup _{t_0 \le r < t} \frac{\left| {\bar{x}}(r,\varepsilon ) - x_r^{\det } \right| }{\sqrt{\zeta (r)}} \ge h_1 \right) = 0.\) Furthermore, since \(h_0 = h - d\), we have

which finishes the proof. \(\square \)
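The lower bound on the relaxation time \(t_0\) derived in the proof can be evaluated directly. The following minimal sketch implements \(t_0 \ge \max \left\{ \frac{\varepsilon }{\kappa } \ln \left( \frac{C\delta }{d}\right) , \frac{\varepsilon }{\kappa } \ln \left( \frac{CM\delta }{\varepsilon }\right) \right\} \), where \(\kappa \) denotes the exponential attraction rate from the beginning of the proof; the function name and the sample constants are hypothetical, and the clipping at zero is an added convenience for the case where both logarithms are negative.

```python
import math

def relaxation_time(eps, kappa, C, delta, d, M):
    """Smallest t0 allowed by the two logarithmic conditions in the proof."""
    t_distance = eps / kappa * math.log(C * delta / d)        # distance at most d
    t_order_eps = eps / kappa * math.log(C * M * delta / eps)  # distance of order eps
    return max(t_distance, t_order_eps, 0.0)

if __name__ == "__main__":
    # Illustrative constants, not taken from the text.
    for eps in (0.1, 0.01, 0.001):
        t0 = relaxation_time(eps, kappa=1.0, C=1.0, delta=0.1, d=0.05, M=1.0)
        print(f"eps={eps:6.3f}  t0={t0:.5f}")
```

In this form it is visible that \(t_0\) is of order \(\varepsilon \ln (1/\varepsilon )\) for small \(\varepsilon \), so the initial transient is short on the slow time scale.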

3.3.3 More Complicated Slow Dynamics

So far, we have considered the case where the slow dynamics is completely uniform and regular, i.e.,

However, in applications many interesting systems contain more complicated slow variables. In fact, this is particularly relevant if one wants to reduce the dynamics to the slow manifold. The reduced equation usually qualitatively describes the dynamics of the slow variables around the slow manifold quite well. This section will clarify that the theory developed so far can be extended to more complicated slow dynamics in two steps. We first generalize our result to deterministic slow dynamics, which may also influence the diffusion term and then consider a fully coupled system.

3.3.4 Deterministic Slow Dynamics

Hence, we consider systems of the form

We have to adapt the assumptions a bit.

Assumption 3.11

Stable case, non-trivial slow dynamics

1. Regularity: The functions \(f \in {\mathcal {C}}^2({\mathcal {D}} \times [0, \infty );{\mathbb {R}})\), \(g \in {\mathcal {C}}^2(\pi _2({\mathcal {D}})\times [0, \infty );{\mathbb {R}})\) and \(F \in {\mathcal {C}}^1(\pi _2({\mathcal {D}});(0,\infty ))\), as well as their derivatives up to order 2 are uniformly bounded on an open subset \({\mathcal {D}} \subset {\mathbb {R}}^2\) by a constant \(M \ge 0\). Here \(\pi _2\) is the projection onto the second coordinate.

2. Critical manifold: There is an \(x^*: {\mathcal {D}}_0 \rightarrow {\mathbb {R}}\) for \({\mathcal {D}}_0 \subset \pi _2({\mathcal {D}})\) open such that

$$\begin{aligned} C_0 = \{ (x,y) \in {\mathcal {D}}: ~ y \in {\mathcal {D}}_0, \, x = x^*(y)\} \end{aligned}$$

is a critical manifold of the system (23).

3. Stability: For \(a(y) := \partial _x f(x^*(y),y, 0)\) there is \(a > 0\) such that

$$\begin{aligned} a(y) \le -a \end{aligned}$$

for all \(y \in {\mathcal {D}}_0\).

4. Global existence: The solutions (x, y) of (23) are defined for all \(t \in [0, \infty )\).

Under these assumptions the system (23) has an attracting slow manifold

where \({\bar{x}}(y, \varepsilon ) = x^*(y) + {\mathcal {O}}(\varepsilon )\) due to Theorem 2.2 (Fenichel–Tikhonov). (Again, this \({\mathcal {O}}(\varepsilon )\) is uniform in y.) We linearize the fast variable around \(C_\varepsilon \). For a solution (x, y) of (23) set \(\xi _t = x_t - {\bar{x}}(y_t, \varepsilon )\), then \((\xi ,y)\) satisfies the equation

where due to Taylor’s remainder theorem

Now we can proceed as in the case for trivial slow dynamics by first considering solutions starting on the slow manifold, i.e. \((\xi _0, y_0) = (0, y_0), \,y_0 \in {\mathcal {D}}_0\), and using the terms

This way we obtain the same qualitative bound (also for the nonlinear case) as before, which also coincides with intuition, as more involved dynamics on the slow manifold should not influence the attracting behavior of it.

3.3.5 Fully Coupled Dynamics

Now that we have seen the idea how to generalize to more complicated dynamics we give an exposition of the more general case, where the slow variables may even be random, particularly be perturbed by fBms with different Hurst parameters \(H_1, H_2 \in (\frac{1}{2},1)\). We consider the following system

which will turn out to be interesting in applications, see Sect. 3.4. The following assumptions will suffice to obtain a qualitatively similar result to the one-dimensional case, analyzed in Sect. 3.

Assumption 3.12

Stable case, fully coupled system

1. Regularity: The functions \(f \in {\mathcal {C}}^2({\mathcal {D}} \times [0, \infty );{\mathbb {R}})\) and \(g \in {\mathcal {C}}^2({\mathcal {D}}\times [0, \infty );{\mathbb {R}})\) as well as their derivatives up to order 2 are uniformly bounded on an open subset \({\mathcal {D}} \subset {\mathbb {R}}^2\).

2. Critical manifold: There is an \(x^*: {\mathcal {D}}_0 \rightarrow {\mathbb {R}}\) for \({\mathcal {D}}_0 \subset \pi _2({\mathcal {D}})\) open such that

$$\begin{aligned} C_0 = \{ (x,y) \in {\mathcal {D}}: ~ y \in {\mathcal {D}}_0, \, x = x^*(y)\} \end{aligned}$$

is a critical manifold of the system (25). Here \(\pi _2\) is the projection onto the second coordinate.

3. Stability: For \(a(y) := \partial _x f(x^*(y),y, 0)\) there is \(a > 0\) such that

$$\begin{aligned} a(y) \le -a \end{aligned}$$

for all \(y \in {\mathcal {D}}_0\).

4. Global existence: The solutions (x, y) of (25) are defined for all \(t \in [0, \infty )\).

Remark 3.13

Note that the theory discussed in Sect. 2.2.1 does not provide the technical details regarding the existence and uniqueness of solutions for coupled systems driven by fractional Brownian motion. However, this can be extended to systems of the form (25). We refer to [40, Theorem 3.3] for further details.

Similarly as before the system (25) has an attracting slow manifold given by

where \({\bar{x}}(y, \varepsilon ) = x^*(y) + {\mathcal {O}}(\varepsilon )\) due to Theorem 2.2, where again the \({\mathcal {O}}(\varepsilon )\)-term is uniform in y. The strategy to establish sample paths estimates for (25) is to successively linearize both fast and slow variables around their deterministic counterpart (i.e. the solution for \(\sigma _1 = \sigma _2 = 0\)) denoted by \(y_t^{\det }\), \({\bar{x}}(y_t^{\det },\varepsilon )\). The deviations are then described by

They satisfy the following SDE, whose form is obtained by successively applying Taylor’s theorem (\(\mathrm {int.}\) always stands for the appropriate intermediate value)

where

so that \(\left| b(x, y,t,\varepsilon ) \right| \le M_1 (x^2+|xy|),\) where \(M_1\) is uniform in the variables due to the uniform boundedness assumption.

where

In particular the nonlinearity term satisfies for some \(M_2 \ge 0\) (again uniform in the variables)

In order to prove that \(x_t\) is concentrated in the neighborhood \({\mathcal {B}}(h) := \{ (x,y) \in {\mathbb {R}}^2: \left| x \right| < h \sqrt{\zeta (y)}\}\) around the slow manifold \(C_{\varepsilon }\) with high probability define the exit times

Then we decompose the exit event of \(x_t\) in the following way

Note that the first probability is of the form

By the same technique used to prove Theorem 3.8 we get

which is valid as long as \(h \zeta ^+ + \sqrt{\zeta ^-} {\tilde{h}} \le \frac{a \sqrt{\zeta ^-}}{2M_1}.\) It remains to estimate \({\mathbb {P}}\left( \tau _{\eta ,{\tilde{h}}} \le t \wedge \tau _{{\mathcal {B}}(h)} \right) \). This issue is however tightly linked to investigating the behavior of non-stable or even non-hyperbolic dynamics under fractional noise because we have no additional assumptions on the slow dynamics. In the event \(\{\tau _{\eta ,{\tilde{h}}} \le t\}\) we conjecture that a reduction to the slow variables should be possible. The reduced equation is then given by

which will be illustrated by the simulations presented at the end of Sect. 3.4.

3.4 Example

We consider the climate model analyzed in [4, Section 6.2.1]. It is a simple model describing the difference of temperature \(\Delta T = T_1 - T_2\) and salinity \(\Delta S = S_1 - S_2\) between low latitude (\(T_1\), \(S_1\)) and high latitude (\(T_2\), \(S_2\)) by a system of coupled differential equations

Here \(\tau _r\) stands for the relaxation time of \(\Delta T\) to its reference value \(\theta \), F is the freshwater flux, H the depth of the ocean, \(S_0\) a reference salinity. Furthermore, \(\tau _d\) is the diffusion timescale, q the Poiseuille transport coefficient and V the volume of the box the system is contained in. The influence of external sources, internal fluctuations, and/or microscopic effects can be incorporated into the model via noise terms. For example, daily weather variations certainly influence the temperature T and salinity S. Yet, a precise and detailed modeling of these terms would be far too expensive computationally and would make the model intractable analytically. As discussed in the introduction, white noise generally does not represent temperature fluctuations correctly, since these are usually positively correlated. Since we have no further basic knowledge of the stochastic process, it is quite natural to start by considering fBm with Hurst index \(H>1/2\). This allows us to model Gaussianity and positive correlations in time. After transforming our model into dimensionless variables \(x = \Delta T / \theta \), \(y = \alpha _S \Delta S / (\alpha _T \theta )\), rescaling time by \(\tau _d\) and taking into consideration fractional noise with Hurst parameter \(H > \frac{1}{2}\), this yields the system

where \(\varepsilon = \tau _r/\tau _d\), \(\eta ^2 = \tau _d(\alpha _T \theta )^2 q / V\), and \(\mu = F \cdot \alpha _S S_0 \tau _d / (\alpha _T \theta H)\). Note that the previous system is of the form (25). We consider the solution on a bounded time interval [0, T], \(T >0\) to ensure the uniform boundedness of the corresponding functions, as imposed in Assumption 3.12.
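The nondimensionalization described above can be written out as a small helper. The following is a minimal sketch of the stated formulas for \(x\), \(y\), \(\varepsilon \), \(\eta ^2\) and \(\mu \); the function names are hypothetical, the ocean depth H is called `depth` to avoid a clash with the Hurst parameter, and the numbers in the usage lines are arbitrary placeholders, not physical values.

```python
def dimensionless_parameters(tau_r, tau_d, alpha_T, alpha_S, theta, q, V, F, S0, depth):
    """Return (eps, eta_sq, mu) as defined in the text."""
    eps = tau_r / tau_d                                   # time scale separation
    eta_sq = tau_d * (alpha_T * theta) ** 2 * q / V       # rescaled transport coefficient
    mu = F * alpha_S * S0 * tau_d / (alpha_T * theta * depth)  # rescaled freshwater flux
    return eps, eta_sq, mu

def rescale_state(delta_T, delta_S, alpha_T, alpha_S, theta):
    """Return the dimensionless variables (x, y)."""
    x = delta_T / theta
    y = alpha_S * delta_S / (alpha_T * theta)
    return x, y

if __name__ == "__main__":
    # Placeholder inputs chosen only so the arithmetic is easy to follow.
    eps, eta_sq, mu = dimensionless_parameters(
        tau_r=1.0, tau_d=100.0, alpha_T=2.0, alpha_S=4.0, theta=5.0,
        q=3.0, V=300.0, F=0.5, S0=10.0, depth=20.0)
    print(eps, eta_sq, mu)
    print(rescale_state(2.5, 1.0, alpha_T=2.0, alpha_S=4.0, theta=5.0))
```

A small ratio \(\tau _r/\tau _d\) is what places the model in the fast-slow regime with x as the fast variable.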

The slow subsystem of the deterministic system is given by

In particular, it has a normally hyperbolic critical manifold, namely

which is even stable, as \(\frac{\mathrm {d}}{\mathrm {d}x} \left( -(x-1)\right) = -1.\) By Theorem 2.2 there exists an invariant manifold

In order to apply the estimate from Theorem 3.5 note that \(f(x,y,\varepsilon ) = -(x-1) - \varepsilon x (1+ \eta ^2(x - y)^2)\), so that

Hence we have

By Proposition 3.2 there is an attracting slow manifold for the variance of the form

We conclude that, in the case that \(y_t\) is deterministic, sample paths starting on the slow manifold \({\bar{x}}(y,\varepsilon )\) are concentrated in the set

or, more precisely, for \(0 < t \le T\) and initial data \((x_0, y_0) = ({\bar{x}}(y_0,\varepsilon ),y_0)\)

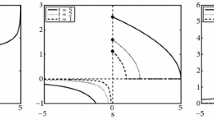

Figure 1 indicates that for small enough noise the dynamics around the slow manifold should be governed by the equation

Fig. 1 Equation (27) simulated for Hurst parameter \(H=0.7\), \(\varepsilon = 0.01\), \(\sigma _2 = 0\) and different \(\sigma _1.\) The stochastic solution is displayed in red, the deterministic one in blue, the critical manifold in green and the neighborhood \({\mathcal {B}}(h)\) for \(h = 0.2\) in black (Color figure online)

In (28) \(\eta ^2\) is a fixed parameter, while \(\mu \) is proportional to the freshwater flux. It can hence be treated as a slowly varying parameter compared to the rescaled salinity. By setting \(X := y\) and \(Y := \mu \) we obtain another fast-slow system subject to some noise

where \(g \in {\mathcal {C}}^2({\mathbb {R}}^2;{\mathbb {R}}).\) In particular, we can apply our theory again on the two stable branches of the new slow manifold. We now simulate the reduced equation, similarly to [32, Section 7.1], where the same system was considered with Brownian motion. The results for two different Hurst parameters (i.e. \(H=0.6\) and \(H=0.8\)) are illustrated in Figs. 2 and 3.

Fig. 2 Equation (29) simulated for Hurst parameter \(H=0.6\), \(\varepsilon = 0.01\) and different noise intensities. The stochastic solution is displayed in red, the deterministic one in blue, the critical manifold in green and the neighborhood \({\mathcal {B}}(h)\) for varying h in black (Color figure online)

Fig. 3 Equation (29) simulated for Hurst parameter \(H=0.8\), \(\varepsilon = 0.01\) and different noise intensities. The stochastic solution is displayed in red, the deterministic one in blue, the critical manifold in green and the neighborhood \({\mathcal {B}}(h)\) for varying h in black (Color figure online)

4 The Multi-dimensional Case

In this section, we make the first steps towards extending our theory to the multi-dimensional case. Note that we keep the same notation as for the one-dimensional objects. We start again with uniform slow dynamics and consider the fast-slow system in slow time

under the following assumptions. Let \(m\ge 1\).

Assumption 4.1

Stable autonomous multi-dimensional case

1. Regularity: The function \(f \in {\mathcal {C}}^2({\mathbb {R}}^m \times [0,\infty )^2;{\mathbb {R}})\), as well as its derivatives up to order 2 are uniformly bounded by a constant \(M \ge 0\) on an interval \(I = [0, \infty )\) or \(I = [0,T]\), \(T > 0\).

2. Critical manifold: There is an \(x^*: [0,\infty ) \rightarrow {\mathbb {R}}^m\) such that

$$\begin{aligned} f(x^*(t),t,0) = 0 \end{aligned}$$

for all \(t \in [0,\infty )\).

3. Stability: The critical manifold is asymptotically stable, i.e. the Jacobian matrix

$$\begin{aligned} A(t) := \partial _x f(x^*(t),t,0) \end{aligned}$$

only contains eigenvalues with negative real part. In addition, its linearization is independent of time, i.e. \(A(t) \equiv A.\)

4. Noise: \((W^{H}_{t})_{t\ge 0}\) is an m-dimensional fractional Brownian motion.

These assumptions guarantee the existence and uniqueness of solutions of (30) due to Remark 2.12(R2). Furthermore, recall that under these assumptions there is a slow manifold

due to Fenichel–Tikhonov (Theorem 2.2). We start again by examining the behavior of the linearized system around \(C_{\varepsilon }\). For a solution \((x_t)_{t \in I}\) of (30) set \(\xi _t := x_t - {\bar{x}}(t, \varepsilon )\), then \((\xi _t)_{t \in I}\) satisfies the equation

where

with \(\left\| \cdot \right\| _{2}\) being the operator norm with respect to the Euclidean norm. For simplicity, we analyze the linearization with A being the drift term instead of \(A + {\mathcal {O}}(\varepsilon )\), i.e. we consider

The solution for \(\xi _0 = 0\) (\((x_t)_{t \in I}\) starting on the slow manifold \(C_{\varepsilon }\)) is given by

Its covariance (matrix) can be computed as

In the same way as in the one-dimensional case the rescaled covariance \(t \mapsto \Xi (t)\) inherits the fast-slow structure.

Proposition 4.2

The so-called renormalized covariance \(\Xi (t)\) satisfies the fast-slow ODE

where

In particular, there is an (even globally) asymptotically stable slow manifold of the system of the form

where

Proof

We again differentiate \(t \mapsto \Xi (t)\) to obtain the ODE

where

In order to be able to take the singular limit \(\varepsilon \rightarrow 0\) and apply Fenichel–Tikhonov (Theorem 2.2), we need to prove at least once continuous differentiability at \(\varepsilon = 0\). To do this, rewrite \(\frac{1}{\varepsilon ^{2H-1}}P(t)\) by substituting \(v = \frac{t-u}{\varepsilon }\)

This implies continuity at \(\varepsilon = 0.\) To see that the right-hand side of (34) is continuously differentiable at \(\varepsilon = 0,\) it is sufficient to check this for the integral P(t)

where the limit for \(\varepsilon \rightarrow 0\) exists because the exponential term dominates the polynomial term in \(\varepsilon .\) The slow subsystem hence reads

where

This is a Lyapunov equation, and according to Lemma 4.4 it has the unique solution

By Fenichel–Tikhonov (Theorem 2.2) we conclude that there is an asymptotically stable manifold of the form

Note again that the stability property, which carries over from the critical manifold, is even global due to linearity of the ODE (34). \(\square \)

Remark 4.3

We need the linearization (32) to be autonomous in this section in order to take the singular limit in (36). In the non-autonomous case we need to compute the limit of \(\Phi (t,t-\varepsilon v)\) for \(\varepsilon \rightarrow 0.\) We suspect that \(\Phi (t,t-\varepsilon v) \rightarrow e^{A(t)v}.\)

In order to investigate a multi-dimensional Lyapunov equation, we rely on the following result, see [4, Lemma 5.1.2].

Lemma 4.4

(Lyapunov Equation) Let \(A \in {\mathbb {R}}^{p \times p}\) and \(B \in {\mathbb {R}}^{q \times q}\) with eigenvalues \(a_1, \dots , a_p\) and \(b_1, \dots , b_q.\) Then the operator \(L: {\mathbb {R}}^{p\times q} \rightarrow {\mathbb {R}}^{p\times q}\) defined by

has eigenvalues of the form \(\left\{ a_i + b_j \right\} _{i=1,\dots ,p,j=1,\dots ,q}.\) In particular, L is invertible if and only if A and \(-B\) do not have any common eigenvalue. Moreover, if all eigenvalues of A and B have negative real part, then for any \(C \in {\mathbb {R}}^{p \times q}\) the unique solution of the so-called Lyapunov equation \(AX + XB + C = 0\) is of the form

Note again that due to the linearity of the operator \(LX = AX + XA^\top \) the rescaled covariance \(t \mapsto \frac{1}{\sigma ^2}\mathrm {Cov}(\xi _t)\) as solution of (34) with starting value \(\frac{1}{\sigma ^2}\mathrm {Cov}(\xi _0) = 0\) satisfies the following equation

which explicitly depicts the exponentially fast approach of the covariance towards the slow manifold, as it could have been already concluded by Fenichel–Tikhonov (Theorem 2.2). This justifies the choice of our neighborhood this time, depending on the critical manifold

As mentioned in Remark 3.4, choosing the neighborhood depending on the critical manifold instead of the slow manifold does not worsen our estimates in the one-dimensional case, and we expect the same to be true in the higher-dimensional case. Therefore, we use the critical manifold \(X^*\) (which is time-independent in our case) here, because our strategy depends on diagonalizing it, and we do not spell out the additional technical details regarding the \({\mathcal {O}}(\varepsilon )\)-term.
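The Lyapunov equation \(AX + XB + C = 0\) from Lemma 4.4 is a linear system in the entries of X and can be solved directly for small matrices. The following minimal sketch, in plain Python without external libraries, assembles the operator \(X \mapsto AX + XB\) entry by entry and solves the resulting system by Gaussian elimination; the function names and the example matrices are illustrative and not taken from the text.

```python
def solve_linear(mat, rhs):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(mat)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    sol = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = aug[r][n] - sum(aug[r][c] * sol[c] for c in range(r + 1, n))
        sol[r] = s / aug[r][r]
    return sol

def solve_lyapunov(A, B, C):
    """Solve A X + X B + C = 0 for X (A is p x p, B is q x q, C is p x q)."""
    p, q = len(A), len(B)
    op = [[0.0] * (p * q) for _ in range(p * q)]
    for i in range(p):
        for j in range(q):
            row = i * q + j
            for k in range(p):      # (A X)_{ij} = sum_k A_{ik} X_{kj}
                op[row][k * q + j] += A[i][k]
            for l in range(q):      # (X B)_{ij} = sum_l X_{il} B_{lj}
                op[row][i * q + l] += B[l][j]
    vec_x = solve_linear(op, [-C[i][j] for i in range(p) for j in range(q)])
    return [vec_x[i * q:(i + 1) * q] for i in range(p)]

def residual(A, B, C, X):
    """Largest absolute entry of A X + X B + C."""
    p, q = len(A), len(B)
    return max(
        abs(sum(A[i][k] * X[k][j] for k in range(p))
            + sum(X[i][l] * B[l][j] for l in range(q)) + C[i][j])
        for i in range(p) for j in range(q))

if __name__ == "__main__":
    A = [[-1.0, 1.0], [0.0, -2.0]]      # eigenvalues -1 and -2
    A_T = [[-1.0, 0.0], [1.0, -2.0]]    # transpose of A
    C = [[1.0, 0.0], [0.0, 1.0]]
    X = solve_lyapunov(A, A_T, C)
    print(X, residual(A, A_T, C, X))
```

For diagonal A and B the solution reduces to \(X_{ij} = -C_{ij}/(a_i + b_j)\), which reflects the eigenvalue structure \(\{a_i + b_j\}\) of the operator L stated in the lemma.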

4.1 Estimates on the Deviations

4.1.1 No Restrictions on the Linearization

The proof of Theorem 3.7 can be immediately extended to the multi-dimensional case by proving the mean-square Hölder continuity in each component of the covariance.

Lemma 4.5

Let \(t \ge r_1 > r_2 \ge 0\), then there is a constant \(G = G(t,\varepsilon ,\sigma ,H)>0\) such that

Proof

Let \(t \ge r_1 > r_2 \ge 0\), then

Since A only has eigenvalues with negative real part we have for \(r \ge u \ge 0\)

and for \(r_1 \ge u \ge 0\), \(r_2 \ge u \ge 0\)

where \(a := \max \{ |\lambda |: \lambda \text { eigenvalue of } A\}.\) This enables us to prove the result similarly as in the one-dimensional case, i.e., a straightforward calculation using the last result now shows the required Hölder bounds by estimating (38)–(41). \(\square \)

The mean-square Hölder continuity of \((\xi _t)_{t \in I}\) implies the same for each component. Hence, we can establish the following qualitative result.

Theorem 4.6

Let \(t \in I.\) Then under Assumption 4.1 there is a constant \(K = K(t,\varepsilon ,\sigma ,H) >0\) such that for \(h >0\) the following estimate holds true for \(\varepsilon >0\) small enough

where \(\lambda _k \ge 0\) with \(\sum _{k=1}^m \lambda _k = 1\) and \(d^*_k\) denote the (time-independent) eigenvalues of \(X^*.\)

Proof

Note that the critical manifold \(X^*\) is symmetric and, in the autonomous case, also time-independent. This implies that it is diagonalizable by an orthogonal matrix O (independent of time). Let \(O^\top = \left( O_1^\top , \dots , O_m^\top \right) \), where \(O_k\) denotes the k-th row of O, and let \(d^*_k(r) \equiv d^*_k\) be the corresponding eigenvalues. This enables us to reduce the problem to the estimate of the one-dimensional problem, using the notation \(D^*(r) = \mathrm {diag}(d_k^*),\)

We have already proven that \((\xi _t)_{t \in I}\) is mean-square Hölder continuous in Lemma 4.5, which directly implies the same property for the components in the O-coordinate system. This means that we can apply Theorem 2.16 for \(O_k \xi \). This leads to

Now note that (37) written in the k-th component in the O-coordinate system reads as

This further implies

Summing over the dimensions yields

and this finishes the proof. \(\square \)
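The reduction to one-dimensional problems in the proof above rests on the orthogonal diagonalization of \(X^*\). The following minimal sketch works under the assumption, not restated in this section, that the neighborhood is of the form \(\{ \xi : \langle \xi , (X^*)^{-1}\xi \rangle < h^2\} \). It checks numerically that the quadratic form splits into the sum \(\sum _k (O_k\xi )^2/d_k^*\), and that whenever this sum is at least \(h^2\), at least one component satisfies \((O_k\xi )^2/d_k^* \ge \lambda _k h^2\) for weights \(\lambda _k \ge 0\) with \(\sum _k \lambda _k = 1\). The matrix \(X^*\), the weights and the sampled points are hypothetical.

```python
import math
import random

def rotation(theta):
    # Orthogonal 2x2 matrix whose rows O_k are orthonormal eigenvectors.
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]

O = rotation(0.4)
d = [0.5, 2.0]  # illustrative positive eigenvalues d_k^* of X^*

# X^* = O^T diag(d) O and its explicit 2x2 inverse.
X = [[sum(O[k][i] * d[k] * O[k][j] for k in range(2)) for j in range(2)]
     for i in range(2)]
det = X[0][0] * X[1][1] - X[0][1] * X[1][0]
X_inv = [[X[1][1] / det, -X[0][1] / det], [-X[1][0] / det, X[0][0] / det]]

def quadratic_form(xi):
    # <xi, (X^*)^{-1} xi> in the original coordinates.
    return sum(xi[i] * X_inv[i][j] * xi[j] for i in range(2) for j in range(2))

def component_terms(xi):
    # (O_k xi)^2 / d_k^* for each k, i.e. the same form in O-coordinates.
    return [sum(O[k][i] * xi[i] for i in range(2)) ** 2 / d[k] for k in range(2)]

rng = random.Random(0)
h, lam = 1.5, [0.3, 0.7]  # hypothetical radius and weights with sum 1
max_gap, exits, implication_holds = 0.0, 0, True
for _ in range(1000):
    xi = [rng.gauss(0.0, 2.0), rng.gauss(0.0, 2.0)]
    terms = component_terms(xi)
    max_gap = max(max_gap, abs(quadratic_form(xi) - sum(terms)))
    if sum(terms) >= h ** 2:
        exits += 1
        implication_holds &= any(terms[k] >= lam[k] * h ** 2 for k in range(2))
print(max_gap, exits, implication_holds)
```

This inclusion of events is what allows the exit probability to be bounded by a sum of one-dimensional probabilities via subadditivity.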

When A is normal, the \(d_k^*\) admit an explicit description. The corresponding k-th component of the diagonalized critical manifold results in

which simplifies even further if all eigenvalues are real

4.1.2 Symmetric Linearization

From now on, we consider the case when A is a symmetric matrix (i.e. \(A = A^\top \)). The reason for this restriction is that, in the following, the proof of the bound on the probability of \((\xi _t)_{t \in I}\) exiting the neighborhood up to time t

is based on linearizing the underlying system and understanding the structure of the eigenvalues of the covariance. For this strategy to work we actually require normality of A, since this is sufficient to use the functional equation of the matrix exponential. Furthermore, \(e^A\) inherits the normality structure, which is a necessary and sufficient criterion to characterize the eigenvalues of \(e^{A\lambda }e^{A^\top \mu },\) \(\lambda ,\mu \ge 0\). To be able to generalize the result of variant 1 it is crucial that the eigenvalues of A are all real. These two criteria together already imply that A is symmetric. In particular, we see that the critical manifold is of the form

Thanks to the discussion above, we can consider the diagonalization \( D^*(t) := UX^*(t)U^\top \). Its k-th (\(k=1,\dots ,m\)) diagonal component is given by

Similarly we can rewrite the covariance

and diagonalize it with respect to the same U and consider its k-th (\(k=1,\dots ,m\)) component

We obtain the following result

Theorem 4.7

Let \(t \in I.\) Then under Assumption 4.1 and if A is in addition symmetric with real eigenvalues \(a_1, \dots , a_m\) there is a constant such that for \(h >0\) the following estimate holds for \(\varepsilon >0\) small enough

where \(a_{+} = \max \{|\mu |: \mu \text { eigenvalue of }A\}.\)

Proof

Let \(U^\top = \begin{pmatrix} U_1^\top&\cdots&U_m^\top \end{pmatrix}\), where \(U_k\) denotes the k-th row of U. Now

for \(\lambda _k \ge 0\) with \(\sum \limits _{k=1}^m \lambda _k = 1\)

Due to the normality of \(e^{A(t-u)}\) (inherited by A) the Gaussian process \(U_k \xi \) has variance

In particular, we can show that the process \(\left( e^{-a_kt}U_k\xi _t\right) _{t \ge 0}\) satisfies the assumptions of Lemma 2.15, so that we can apply the Bernstein-type inequality. (The proof is completely analogous to the one-dimensional case, see the proof of Theorem 3.5.) To relate the k-th eigenvalue of the diagonalized covariance to the corresponding component of the critical manifold, consider (37) in the U-coordinate system

as \(d^*(t) \equiv d^*\) is actually independent of time in our case. Now we can use the same strategy as in variant 1 of the one-dimensional case for each k. For \(\gamma \in (0, 1/2)\) let \(0 = t_0< t_1< \ldots < t_N\) be a partition covering the interval [0, t] such that

We start by estimating the probability of the exit time on \([t_i,t_{i+1})\) for \(i \in \{0, \dots , N-1 \}\)

Applying Lemma 2.15

Taking the union of the events that \(U_k\xi \) has exited \({\mathcal {B}}(h)\) in \([t_i,t_{i+1})\) and using the subadditivity of the probability measure yields

Finding the minimal bound with respect to \(\gamma \) now corresponds to optimizing

due to the monotonicity of \(\lceil \cdot \rceil \). The optimal value is achieved for

Plugging this in the estimate gives the bound for the k-th component

Summing over the dimensions

where \(a_+ := \max \{|\mu |: \mu \text { eigenvalue of }A\}.\) The optimal value is now attained by choosing \(\lambda _k = \frac{1}{m}.\) This yields

The proof is complete. \(\square \)

Due to the symmetry of A we could have diagonalized the SDE at the beginning (i.e. considered it in the U-coordinate system) and carried out the whole theory established in Chapter 2 to get the existence of a slow manifold \(\zeta _k(t)\) for \(\mathrm {Var}(U_k \xi _t)\), which is of the form

However, we decided to use the results on the higher dimensional systems as much as possible to clearly indicate which steps of the proof can be generalized to more general classes of matrices beyond symmetric ones.

5 Outlook

This work provides a first step towards the investigation of fast-slow systems driven by fBm using sample paths estimates. So far we have examined the behavior close to a normally hyperbolic attracting invariant manifold in finite dimensions. Numerous extensions could be considered as next steps.

Having covered the uniformly attracting case, it is natural to conjecture that there are scaling laws for the fluctuations as fast subsystem bifurcation points are approached, i.e., when hyperbolicity is lost. Such results are available in the fast-slow Brownian motion case [32]. Moreover, even when the fast dynamics is dominated by nonlinear terms [46], or when one considers fast-slow maps with bounded noise [34], many results can be retained by using modified proofs and additional technical tools. This robustness of the scaling laws near the loss of normal hyperbolicity leads one to conjecture that it will still be possible to prove such results for the fast-slow fBm case when \(H\in (1/2,1)\).

However, the analysis of fast-slow systems for \(H \in (0,1/2)\) is expected to be more complicated for several reasons. First of all, a different integration theory has to be considered, see for instance [5, 11]. Furthermore, the kernel (8) we have used to develop an approximation of the variance by means of the slow manifold has a non-integrable singularity for \(H<1/2\). Last but not least, Bernstein-type inequalities as established in Lemma 2.15 no longer hold, since the increments of the fractional Brownian motion are negatively correlated in this regime. Consequently, one has to develop completely different techniques in this case. Another related extension would be to analyze the dynamics of fast-slow systems driven by multiplicative noise. This issue, however, requires a more general theory than Itô calculus because the fractional Brownian motion is not a semi-martingale.
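The change of regime at \(H = 1/2\) can be made concrete through the covariance of two adjacent increments of fBm. The following minimal sketch uses the standard covariance function \(R_H(s,t) = \frac{1}{2}\left( s^{2H} + t^{2H} - |t-s|^{2H}\right) \). The function names and the chosen time points are illustrative. For the increments over [0, 1] and [1, 2] one obtains \(2^{2H-1} - 1\), which is positive for \(H > 1/2\), zero for \(H = 1/2\) and negative for \(H < 1/2\).

```python
def fbm_cov(s, t, H):
    # Covariance function of fractional Brownian motion with Hurst parameter H.
    return 0.5 * (s ** (2 * H) + t ** (2 * H) - abs(t - s) ** (2 * H))

def increment_cov(H):
    # Covariance of the increments over [0, 1] and [1, 2], expanded bilinearly:
    # E[(B_1 - B_0)(B_2 - B_1)] = R(1,2) - R(1,1) - R(0,2) + R(0,1).
    return (fbm_cov(1.0, 2.0, H) - fbm_cov(1.0, 1.0, H)
            - fbm_cov(0.0, 2.0, H) + fbm_cov(0.0, 1.0, H))

for H in (0.25, 0.5, 0.75):
    print(f"H={H}: increment covariance = {increment_cov(H):+.4f}")
```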

Furthermore, one could consider other stochastic processes with memory. More precisely, one could think of other stochastic processes whose covariance functions are represented by

for suitable square integrable kernels K, recall (5). Beyond fBm, further examples in this sense are the multi-fractional Brownian motion or the Rosenblatt process [9]. However, the analysis of fast-slow systems in this case is a challenging question, since these processes do not in general have stationary increments or are no longer Gaussian (as, e.g., the Rosenblatt process).

Finally, one can also broaden the scope of the applications. Although climate dynamics is certainly a very important topic, where time-correlated noise is well-motivated by data such as temperature measurements, it is not the only possible application. Another area where fast-slow systems with fBm could be considered is financial markets. For example, assets could be modelled as fast variables influenced by fBm stochastic forcing, while the slow variables are political/social factors influencing the market, which change on a much slower time scale in many cases. Similar remarks apply, and examples of concrete applications likely also exist, in many contexts in neuroscience, ecology and epidemiology, where stochastic fast-slow systems with Brownian motion are already used frequently.

References

Ashkenazy, Y., Baker, D.R., Gildor, H., Havlin, S.: Nonlinearity and multifractality of climate change in the past 420,000 years. Geophys. Res. Lett. (2003). https://doi.org/10.1029/2003GL018099

Barboza, L., Li, B., Tingley, M.P., Viens, F.G.: Reconstructing past temperatures from natural proxies and estimated climate forcings using short-and long-memory models. Ann. Appl. Stat. 8(4), 1966–2001 (2014)

Berglund, N., Gentz, B.: The effect of additive noise on dynamical hysteresis. Nonlinearity 15(3), 605–632 (2002)

Berglund, N., Gentz, B.: Noise-Induced Phenomena in Slow-Fast Dynamical Systems. Springer, London (2006)

Biagini, F., Hu, Y., Øksendal, B., Zhang, T.: Stochastic Calculus for Fractional Brownian Motion and Applications. Springer, London (2008)

Blender, R., Fraedrich, K.: Long time memory in global warming simulations. Geophys. Res. Lett. (2003). https://doi.org/10.1029/2003GL017666

Bogachev, V.I.: Measure Theory, vol. 1. Springer, Berlin (2007)

Chekroun, M.D., Liu, H., Wang, S.: Stochastic parameterizing manifolds and non-Markovian reduced equations: stochastic manifolds for nonlinear SPDEs II. Springer, New York (2014)

Čoupek, P., Maslowski, B.: Stochastic evolution equations with Volterra noise. Stochastic Process. Appl. 127(3), 877–900 (2017)

Davidsen, J., Griffin, J.: Volatility of unevenly sampled fractional Brownian motion: an application to ice core records. Phys. Rev. E 81(1), 016107 (2010)

Decreusefond, L., et al.: Stochastic analysis of the fractional Brownian motion. Potential Anal. 10(2), 177–214 (1999)

Decreusefond, L., Nualart, D.: Hitting times for Gaussian processes. Ann. Probab. 36(1), 319–330 (2008)

Dijkstra, H.A.: Nonlinear Climate Dynamics. Cambridge University Press, Cambridge (2013)

Eichner, J.F., Koscielny-Bunde, E., Bunde, A., Havlin, S., Schellnhuber, H.J.: Power-law persistence and trends in the atmosphere: a detailed study of long temperature records. Phys. Rev. E 68(4), 046133 (2003)

Fenichel, N.: Geometric singular perturbation theory for ordinary differential equations. J. Differ. Equat. 31(1), 53–98 (1979)

Franzke, C.L., O’Kane, T.J., Berner, J., Williams, P.D., Lucarini, V.: Stochastic climate theory and modeling. Wiley Interdiscip. Rev. 6(1), 63–78 (2015)

Gao, F.-Y., Kang, Y.-M., Chen, X., Chen, G.: Fractional Gaussian noise-enhanced information capacity of a nonlinear neuron model with binary signal input. Phys. Rev. E 97(5), 052142 (2018)

Gardiner, C.: Stochastic Methods, 4th edn. Springer, Berlin (2009)

Garrido-Atienza, M.J., Lu, K., Schmalfuss, B.: Local pathwise solutions to stochastic evolution equations driven by fractional Brownian motions with Hurst parameter \({H} \in (1/3, 1/2] \). Discret. Cont. Dyn. B 20, 11 (2014)

Gnann, M., Kuehn, C., Pein, A.: Towards sample path estimates for fast-slow SPDEs. Euro. J. Appl. Math. 30(5), 1004–1024 (2019)

Gubinelli, M., Lejay, A., Tindel, S.: Young integrals and SPDEs. Potential Anal. 25(4), 307–326 (2006)

Gubinelli, M., Tindel, S., et al.: Rough evolution equations. Ann. Probab. 38(1), 1–75 (2010)

Guckenheimer, J., Holmes, P.: Nonlinear Oscillations, Dynamical Systems, and Bifurcations of Vector Fields. Springer, New York (1983)

Hairer, M., Li, X.-M.: Averaging dynamics driven by fractional Brownian motion. Ann. Probab. (2019) (to appear)

Hek, G.: Geometric singular perturbation theory in biological practice. J. Math. Biol. 60(3), 347–386 (2010)

Hesse, R., Neamţu, A.: Local mild solutions for rough stochastic partial differential equations. J. Differential Equat. 267(11), 6480–6538 (2019)