Abstract

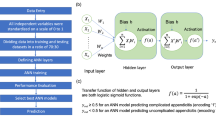

Accurate diagnosis of acute appendicitis at an early stage is often difficult, and decision support tools that improve diagnostic accuracy may be needed. This study compared the accuracy of artificial neural network (ANN) models and logistic regression models for the diagnosis of acute appendicitis. Data from 169 patients presenting with acute abdomen were analyzed, and nine variables were used to evaluate the accuracy of the two models. The constructed models were validated by the .632+ bootstrap method. Diagnostic accuracy was compared by error rate and by area under the receiver operating characteristic curve. The ANN models were more accurate than the logistic regression models on both indices, especially when categorical or normalized variables were used. The most accurate diagnosis was obtained by the ANN model using normalized variables.
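The two accuracy indices named in the abstract can be illustrated with a short sketch. This is not the authors' MATLAB program. It is a minimal Python illustration of the commonly stated form of the .632+ error estimate (Efron and Tibshirani) and of the area under the ROC curve computed as the probability that a diseased case scores higher than a non-diseased one. The function names, argument names, and the numbers in the demo are assumptions chosen for illustration only, and the apparent and out-of-bootstrap error rates are assumed to have been computed elsewhere.

```python
from collections import Counter


def no_information_rate(y_true, y_pred):
    """Error rate expected if predictions were independent of the true labels."""
    n = len(y_true)
    observed = Counter(y_true)    # counts of true classes
    predicted = Counter(y_pred)   # counts of predicted classes
    return sum((observed[c] / n) * (1 - predicted[c] / n) for c in observed)


def err_632_plus(err_app, err_oob, gamma):
    """Combine apparent and out-of-bootstrap error into a .632+ style estimate.

    err_app: error on the data used for fitting (resubstitution error)
    err_oob: mean error on cases left out of each bootstrap sample
    gamma:   no-information error rate
    """
    err_oob = min(err_oob, gamma)              # cap at the no-information rate
    if err_oob > err_app and gamma > err_app:
        r = (err_oob - err_app) / (gamma - err_app)   # relative overfitting rate
    else:
        r = 0.0
    w = 0.632 / (1 - 0.368 * r)                # weight moves from .632 toward 1
    return (1 - w) * err_app + w * err_oob


def auc(pos_scores, neg_scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties count as half a correct ranking.
    """
    wins = 0.0
    for p in pos_scores:
        for q in neg_scores:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


if __name__ == "__main__":
    # Hypothetical values, not taken from the study.
    gamma = no_information_rate([1, 1, 0, 0], [1, 0, 0, 0])
    print(err_632_plus(err_app=0.10, err_oob=0.20, gamma=gamma))
    print(auc([0.9, 0.8, 0.4], [0.5, 0.3]))
```

When the out-of-bootstrap error does not exceed the apparent error, the weight stays at 0.632 and the estimate reduces to the ordinary .632 bootstrap. As overfitting grows, the weight moves toward 1 and the estimate leans on the out-of-bootstrap error.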

Acknowledgements

We thank Ms. Akane Inaizumi, Ms. Atsuko Sugiyama and Mr. Toshikazu Abe for valuable assistance in the acquisition of the data and references. We also thank Dr. Ralph Grams for his kind suggestions.

Additional information

The MATLAB program that we used to construct the ANN models is available to interested readers on request by e-mail: toyabe@med.niigata-u.ac.jp.

About this article

Cite this article

Sakai, S., Kobayashi, K., Toyabe, Si. et al. Comparison of the Levels of Accuracy of an Artificial Neural Network Model and a Logistic Regression Model for the Diagnosis of Acute Appendicitis. J Med Syst 31, 357–364 (2007). https://doi.org/10.1007/s10916-007-9077-9

DOI: https://doi.org/10.1007/s10916-007-9077-9