Abstract

We propose two implicit numerical schemes for the low-rank time integration of stiff nonlinear partial differential equations. Our approach uses the preconditioned Riemannian trust-region method of Absil, Baker, and Gallivan, 2007. We demonstrate the efficiency of our method for solving the Allen–Cahn and the Fisher–KPP equations on the manifold of fixed-rank matrices. Our approach avoids the time-step restriction typical of methods that use fixed-point iteration to solve the inner nonlinear equations. Finally, we demonstrate the efficiency of the preconditioner on the same variational problems presented in Sutti and Vandereycken, 2021.

1 Introduction

The topic of this paper is the efficient solution of large-scale variational problems arising from the discretization of partial differential equations (PDEs), both time-independent and time-dependent. In the first part of the paper, we use the preconditioned Riemannian trust-region (RTR) method of Absil, Baker, and Gallivan [1] to solve the nonlinear equation derived from an implicit scheme for numerical time integration. All the calculations are performed on a low-rank manifold, which allows us to approximate the solution with significantly fewer degrees of freedom. In the second part of the paper, we solve variational problems derived from the discretization of elliptic PDEs. These are large-scale finite-dimensional optimization problems arising from the discretization of infinite-dimensional problems. Variational problems of this type have been considered as benchmarks in several nonlinear multilevel algorithms [26, 29, 69, 76].

A common way to speed up numerical computations is to approximate large matrices with low-rank methods. This is particularly useful for high-dimensional problems, which can be solved using low-rank matrix and tensor methods. The earliest examples are low-rank solvers for the Lyapunov equation, \(AX+XA^{\top }= C\), and other matrix equations; see, e.g., [38, 40, 64]. The low-rank approximation properties for these problems are also reasonably well understood from a theoretical point of view. Grasedyck [25, Remark 1] showed that the solution X to a Sylvester equation \( AX - XB + C = 0 \) can be approximated up to a relative accuracy of \( \varepsilon \) using a rank \( r = {{\mathcal {O}}}(\log _{2}(\textrm{cond}_{2}(A)) \log _{2}(1/\varepsilon ) ) \), under some hypotheses on A, B, and C. Typically, a low-rank approximation of the unknown solution X is obtained with an iterative method that constructs the low-rank approximation directly. This work obtains the low-rank approximation through Riemannian optimization [2, 10, 21]. To ensure that critical points have a low-rank representation, the optimization problem (which may be reformulated from the original one) is restricted to the manifold \({{\mathcal {M}}}_{r}\) of matrices with fixed rank r. Some early references on this manifold include [27, 34, 74]. Retraction-based optimization on \( {{\mathcal {M}}}_{r}\) was studied in [62, 63]. Optimization on \( {{\mathcal {M}}}_{r}\) has gained a lot of momentum during the last decade; examples include [50, 66, 74] for matrix and tensor completion, [63] for metric learning, [37, 52, 75] for matrix and tensor equations, and [57, 58] for eigenvalue problems. When they arise from discretized PDEs, these optimization problems are ill-conditioned, which makes simple first-order methods such as gradient descent excessively slow.

1.1 Riemannian Preconditioning

This work employs preconditioning techniques on Riemannian manifolds, which are similar to preconditioning techniques in the unconstrained case; see, e.g., [56]. Several authors have tackled preconditioning in the Riemannian optimization framework; the following overview is not meant to be exhaustive. In [37, 58, 75], for example, the gradient is preconditioned with the inverse of the local Hessian. These Hessian equations are solved by a preconditioned iterative scheme, mimicking quasi-Newton or truncated Newton methods. We also refer to [72] for a recent overview of geometric methods for obtaining low-rank approximations. The work most closely related to the present paper is [75], which proposed a preconditioner for the manifold of symmetric positive semidefinite matrices of fixed rank. Boumal and Absil [11] developed a preconditioner for Riemannian optimization on the Grassmann manifold. Mishra and Sepulchre [51] investigated the connection between quadratic programming and Riemannian gradient optimization, particularly on quotient manifolds. The method of [51] proved efficient, especially in quadratic optimization with orthogonality and rank constraints. Related to this preconditioned-metric approach are [52, 55] and, more recently, [14], which extends the preconditioned metric from the matrix case to the tensor case using the tensor train (TT) format for the tensor completion problem. On tensor manifolds, [37] developed preconditioned versions of Riemannian gradient descent and of the Richardson method, using the Tucker and TT formats.

1.2 Trust-Region Methods

The Riemannian trust-region (RTR) method of [1] embeds an inner truncated conjugate gradient (tCG) method to solve the so-called trust-region minimization subproblem. The tCG solver naturally lends itself to preconditioning, and the preconditioner is typically a symmetric positive definite operator that approximates the inverse of the Hessian matrix. Ideally, it should also be cheap to compute and apply. Preconditioning with the projected Euclidean Hessian was done for symmetric positive semidefinite matrices of fixed rank [75]. In contrast, we develop it here for general, i.e., typically non-symmetric, fixed-rank matrices.

We follow the steps outlined in [75] to find the preconditioner, namely: compute the Euclidean Hessian; derive the Riemannian Hessian operator; vectorize it to obtain the Hessian matrix, which is linear and symmetric; take the inverse of this matrix, which should make a good candidate for a preconditioner; and apply the preconditioner.

1.3 Low-Rank Approximations for Time-Dependent PDEs

Various approaches have been employed to address the low-rank approximation of time-dependent partial differential equations (PDEs). One such method is the dynamical low-rank approximation (DLRA) [34, 48], which optimally evolves a low-rank approximation of the solution for common time-dependent PDEs. For example, suppose we are given a discretized dynamical system as a first-order ordinary differential equation (ODE), namely,

\[ \dot{W}(t) = G(W(t)). \]

The DLRA idea is to replace the time derivative \( \dot{W} \) in the ODE with the tangent vector in the tangent space to \({{\mathcal {M}}}_{r}\) at W, \( \textrm{T}_{W}{{\mathcal {M}}}_{r}\), that is closest to the right-hand side G(W), i.e., with the orthogonal projection of G(W) onto \( \textrm{T}_{W}{{\mathcal {M}}}_{r}\). Recent developments of the DLRA include, but are not limited to, [9, 15, 16, 32, 33, 54].

Another approach is given by the dynamically orthogonal Runge–Kutta schemes of [45, 46] and their more recent developments [17, 18, 22, 23, 61, 71].

The step-truncation methods of Rodgers, Dektor, and Venturi [59, 60] form another class of methods for the low-rank approximation of time-dependent problems. In [60], they study implicit rank-adaptive algorithms involving rank truncation onto a tensor or matrix manifold where the discrete implicit integrator is obtained by fixed-point iteration. To accelerate convergence, this iteration is warm started with one time step of a conventional time-stepping scheme.

Recently, Massei et al. [49] also investigated the low-rank numerical integration of the Allen–Cahn equation. However, their approach is very different from ours since they use hierarchical low-rank matrices.

1.4 Contributions and Outline

The most significant contributions of this paper are two implicit numerical time integration schemes for solving stiff nonlinear time-dependent PDEs. As in [60], we employ an implicit time-stepping scheme for the time evolution; however, instead of using a fixed-point iteration to solve the nonlinear equations arising from the time integration scheme, we use a preconditioned RTR method (named PrecRTR) on the manifold of fixed-rank matrices. Our preconditioner for the RTR subproblem on the manifold of fixed-rank matrices can be regarded as an extension of the preconditioner of [75] for the manifold of symmetric positive semidefinite matrices of fixed rank. We apply our low-rank implicit numerical time integration schemes to two time-dependent, stiff nonlinear PDEs: the Allen–Cahn and Fisher–KPP equations. Additionally, we consider the two variational problems already studied in [26, 29, 69, 76]. The numerical experiments demonstrate the efficiency of the preconditioned algorithm compared with the non-preconditioned one.

The remaining part of this paper is organized as follows. Section 2 introduces the problem settings and the objective functions studied in this work. In Sect. 3, we recall some preliminaries on the Riemannian optimization framework and the RTR method and give an overview of the geometry of the manifold of fixed-rank matrices. In Sect. 4, we recall more algorithmic details of the RTR method. Sections 5 and 6 present the core contribution of this paper: implicit Riemannian low-rank schemes for the numerical integration of two stiff nonlinear time-dependent PDEs, the Allen–Cahn equation and the Fisher–KPP equation. Other numerical experiments on the two variational problems from [69] are presented and discussed in Sect. 7. Finally, we draw some conclusions and propose a research outlook in Sect. 8. The discretization details for the Allen–Cahn and the Fisher–KPP equations are provided in Appendices A and B, respectively. More details about the derivation of the preconditioner for the RTR method on the manifold of fixed-rank matrices are given in Appendix C.

1.5 Notation

The space of \( n \times r \) matrices is denoted by \( {\mathbb {R}}^{n \times r} \). By \( X_{\perp } \in {\mathbb {R}}^{n \times (n-r)}\) we denote an orthonormal matrix whose columns span the orthogonal complement of \( \textrm{span}(X) \), i.e., \( X^{\top }_{\perp } X = 0 \) and \( X_{\perp }^{\top }X_{\perp } = I_{n-r} \). In the formulas throughout the paper, we typically use the Roman capital script for operators and the italic capital script for matrices. For instance, \( \text {P}_{X}\) indicates a projection operator, while \(P_{X}\) is the corresponding projection matrix.

The directional derivative of a function f at x in the direction of \( \xi \) is denoted by \( {{\,\textrm{D}\,}}\! f(x)[\xi ] \). With \( \Vert \cdot \Vert _{\textrm{F}} \), we indicate the Frobenius norm of a matrix.

Even though we do not use a multilevel algorithm in this work, we want to maintain consistency with the notation used in [69], which was adopted there because of the multilevel nature of the Riemannian multigrid line-search (RMGLS) algorithm. Consequently, we denote by \( \ell \) the discretization level, so that the total number of grid points on a two-dimensional square domain is \( 2^{2\ell } \). In contrast to [69], we omit the subscripts \( \cdot _{h} \) and \( \cdot _{H} \), which were also due to the multilevel nature of RMGLS. We use \( {\varDelta } \) to denote the Laplacian operator, and the spatial discretization parameter is denoted by \( h_{x} \). The time step is represented by \( h\).

2 The Problem Settings and Cost Functions

In this section, we present the optimization problems studied in this paper. The first two problems are time-dependent, stiff PDEs: the Allen–Cahn and the Fisher–KPP equations. The last two problems are the same as those considered in [69].

The Allen–Cahn equation in its simplest form reads

\[ \frac{\partial w}{\partial t} = \varepsilon {\varDelta } w + w - w^{3}, \tag{1} \]

where \( w \equiv w({\varvec{x}}, t) \), \( {\varvec{x}} \in \varOmega = [-\pi , \pi )^{2} \), with periodic boundary conditions, and \( t\ge 0 \). We reformulate it as a variational problem, which leads us to consider

The second problem considered is the Fisher–KPP equation with homogeneous Neumann boundary conditions, for which we construct the cost function

We emphasize that this is the only example we do not formulate as a variational problem. We refer the reader to Sect. 6 for the details about this cost function.

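Thirdly, we study the following variational problem, considered in [26, 29, 76] and called LYAP in [69, Sect. 5.1],

\[ \min _{w} \ {{\mathcal {F}}}(w) = \int _{\varOmega } \Big( \frac{1}{2} \Vert \nabla w \Vert ^{2} - \gamma \, w \Big) \, {{\,\textrm{d}\,}}{\varvec{x}}, \tag{2} \]

where \( \nabla = \big ( \frac{\partial }{\partial x}, \frac{\partial }{\partial y} \big ) \), \( \varOmega = [0,1]^{2} \) and \( \gamma \) is the source term. The variational derivative (Euclidean gradient) of \( {{\mathcal {F}}}\) is

\[ \frac{\delta {{\mathcal {F}}}}{\delta w} = -{\varDelta } w - \gamma . \]
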
A critical point of (2) is thus also a solution of the elliptic PDE \( -{\varDelta } w = \gamma \). We refer the reader to [69, Sect. 5.1.1] or [67, Sect. 7.4.1.1] for the details about the discretization.

Finally, we consider the variational problem from [69, Sect. 5.2]:

For \( \gamma \), we choose

which is the same right-hand side adopted in [69]. The variational derivative of \( {{\mathcal {F}}}\) is

Regardless of the specific form of the functional \( {{\mathcal {F}}}\), all the problems studied in this paper have the general formulation

\[ \min _{W} \ F(W) \quad \text {s.t.} \quad W \in {{\mathcal {M}}}_{r}, \]

where F denotes the discretization of the functional \( {{\mathcal {F}}}\).

More details about each problem are provided later in the dedicated sections.

3 Riemannian Optimization Framework and Geometry

As anticipated above, in this paper we use the Riemannian optimization framework [2, 21]. This approach exploits the underlying geometric structure of the low-rank constrained problems, thereby allowing the constraints to be explicitly taken into account. In practice, the optimization variables in our discretized problems are constrained to a smooth manifold, and we perform the optimization on the manifold.

Specifically, in this paper, we use the RTR method of [1]. A more recent presentation of the RTR method can be found in [10]. In the next subsection, we introduce some fundamental geometric concepts used in Riemannian optimization, which are needed to formulate the RTR method, whose pseudocode is provided in Sect. 4.

3.1 Geometry of the Manifold of Fixed-Rank Matrices

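The manifold of fixed-rank matrices is defined as

\[ {{\mathcal {M}}}_{r} = \{ X \in {\mathbb {R}}^{m \times n} :\ \textrm{rank}(X) = r \}. \]

Using the singular value decomposition (SVD), one has the equivalent characterization

\[ {{\mathcal {M}}}_{r} = \{ U \varSigma V^{\top } :\ U \in \textrm{St}^{m}_{r},\ V \in \textrm{St}^{n}_{r},\ \varSigma = {{\,\textrm{diag}\,}}(\sigma _{1}, \sigma _{2}, \ldots , \sigma _{r} ),\ \sigma _{1} \ge \sigma _{2} \ge \cdots \ge \sigma _{r} > 0 \}, \]

where \( \textrm{St}^{m}_{r}\) is the Stiefel manifold of \( m \times r \) real matrices with orthonormal columns, and \( {{\,\textrm{diag}\,}}(\sigma _{1}, \sigma _{2}, \ldots , \sigma _{r} ) \) is a square matrix with \( \sigma _{1}, \sigma _{2}, \ldots , \sigma _{r} \) on its main diagonal.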

3.1.1 Tangent Space and Metric

The following proposition shows that \( {{\mathcal {M}}}_{r}\) is a smooth manifold with a compact representation for its tangent space.

Proposition 1

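([74, Prop. 2.1]) The set \( {{\mathcal {M}}}_{r}\) is a smooth submanifold of dimension \( (m+n-r)r \) embedded in \( {\mathbb {R}}^{m \times n}\). Its tangent space \( \textrm{T}_{X}{{\mathcal {M}}}_{r}\) at \( X = U\varSigma V^{\top }\in {{\mathcal {M}}}_{r}\) is given by

\[ \textrm{T}_{X}{{\mathcal {M}}}_{r} = \begin{bmatrix} U & U_{\perp } \end{bmatrix} \begin{bmatrix} {\mathbb {R}}^{r \times r} & {\mathbb {R}}^{r \times (n-r)} \\ {\mathbb {R}}^{(m-r) \times r} & 0_{(m-r) \times (n-r)} \end{bmatrix} \begin{bmatrix} V & V_{\perp } \end{bmatrix}^{\top }. \tag{5} \]

In addition, every tangent vector \(\xi \in \textrm{T}_{X}{{\mathcal {M}}}_{r}\) can be written as

\[ \xi = U M V^{\top } + U_{\textrm{p}} V^{\top } + U V_{\textrm{p}}^{\top }, \tag{6} \]

with \(M \in {\mathbb {R}}^{r \times r}\), \(U_{\textrm{p}}\in {\mathbb {R}}^{m\times r}\), \(V_{\textrm{p}}\in {\mathbb {R}}^{n \times r}\) such that \(U_{\textrm{p}}^{\top }U = V_{\textrm{p}}^{\top }V = 0\).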

The orthogonality conditions \(U_{\textrm{p}}^{\top }U = V_{\textrm{p}}^{\top }V = 0\) are also known as gauging conditions [72, §9.2.3]. Since \( {{\mathcal {M}}}_{r}\subset {\mathbb {R}}^{m \times n}\), we represent tangent vectors in (5) and (6) as matrices of the same dimensions.

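The Riemannian metric is the restriction of the Euclidean metric on \( {\mathbb {R}}^{m \times n}\) to the submanifold \( {{\mathcal {M}}}_{r}\), i.e.,

\[ \langle \xi , \eta \rangle _{X} = \textrm{tr}(\xi ^{\top } \eta ), \quad \xi , \eta \in \textrm{T}_{X}{{\mathcal {M}}}_{r}. \]
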
3.1.2 Projectors

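Defining \( P_{U} = UU^{\top }\) and \( P_{U}^{\perp } = I - P_{U} \) for any \( U \in \textrm{St}^{m}_{r}\), and analogously \( P_{V} \) and \( P_{V}^{\perp } \) for \( V \in \textrm{St}^{n}_{r}\), the orthogonal projection onto the tangent space at \( X = U\varSigma V^{\top }\) is [74, (2.5)]

\[ \text {P}_{X}(Z) = P_{U} Z P_{V} + P_{U}^{\perp } Z P_{V} + P_{U} Z P_{V}^{\perp }, \quad Z \in {\mathbb {R}}^{m \times n}. \]

Since this projector is a linear operator, we can represent it as a matrix. For square matrices (\( m = n \)), the projection matrix \(P_{X}\in {\mathbb {R}}^{n^{2} \times n^{2}}\) representing the operator \(\text {P}_{X}\) acting on \( \textrm{vec}(Z) \) can be written as

\[ P_{X} = P_{V} \otimes P_{U} + P_{V} \otimes P_{U}^{\perp } + P_{V}^{\perp } \otimes P_{U}. \]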

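As a numerical sanity check of the operator and matrix forms of the projector, the following NumPy sketch applies \( \text {P}_{X}\) using only the thin factors U and V, and builds the corresponding Kronecker matrix for column-major vectorization. It is an illustrative sketch, not the paper's MATLAB implementation; the function names are hypothetical, and it covers rectangular \( m \times n \) matrices, so the matrix has size \( mn \times mn \) (\( n^{2} \times n^{2} \) when \( m = n \)).

```python
import numpy as np


def project_tangent(U, V, Z):
    """Apply P_X(Z) = P_U Z P_V + P_U^perp Z P_V + P_U Z P_V^perp at X = U S V^T.

    The three terms simplify to Z P_V + P_U Z - P_U Z P_V, which only needs
    products with the thin factors U (m x r) and V (n x r).
    """
    ZV = Z @ V
    UtZ = U.T @ Z
    return ZV @ V.T + U @ UtZ - U @ (UtZ @ V) @ V.T


def projection_matrix(U, V):
    """Matrix of P_X acting on vec(Z), where vec stacks columns (column-major)."""
    m, n = U.shape[0], V.shape[0]
    PU, PV = U @ U.T, V @ V.T
    # vec(Z P_V) = (P_V kron I_m) vec(Z), vec(P_U Z) = (I_n kron P_U) vec(Z),
    # vec(P_U Z P_V) = (P_V kron P_U) vec(Z), using that P_U and P_V are symmetric.
    return np.kron(PV, np.eye(m)) + np.kron(np.eye(n), PU) - np.kron(PV, PU)
```

Forming the Kronecker matrix costs \( {{\mathcal {O}}}(m^{2}n^{2}) \) memory, so it is only meant for checks on small examples; the factored form is what one would use in practice.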
3.1.3 Riemannian Gradient

The Riemannian gradient of a smooth function \( f :{{\mathcal {M}}}_{r}\rightarrow {\mathbb {R}}\) at \( X \in {{\mathcal {M}}}_{r}\) is defined as the unique tangent vector \( {{\,\textrm{grad}\,}}f(X) \) in \( \textrm{T}_{X}{{\mathcal {M}}}_{r}\) such that

\[ \langle {{\,\textrm{grad}\,}}f(X), \xi \rangle _{X} = {{\,\textrm{D}\,}}\! f(X)[\xi ] \quad \text {for all } \xi \in \textrm{T}_{X}{{\mathcal {M}}}_{r}, \]

where \( {{\,\textrm{D}\,}}\! f(X)[\xi ] \) denotes the directional derivative of f at X in the direction \( \xi \). More concretely, for embedded submanifolds, the Riemannian gradient is given by the orthogonal projection onto the tangent space of the Euclidean gradient of f seen as a function on the embedding space \( {\mathbb {R}}^{m \times n}\); see, e.g., [2, (3.37)]. Then, denoting by \( \nabla f(X) \) the Euclidean gradient of f at X, the Riemannian gradient is given by

\[ {{\,\textrm{grad}\,}}f(X) = \text {P}_{X}\big ( \nabla f(X) \big ). \]

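In practice, the projected gradient need not be stored as a dense \( m \times n \) matrix: it can be kept as the triple \( (M, U_{\textrm{p}}, V_{\textrm{p}}) \) of the tangent-vector representation (6). The NumPy sketch below computes this triple from a Euclidean gradient G, assuming for simplicity that G is available as a dense array (structured gradients would only need the products \( GV \) and \( G^{\top }U \)); the function names are hypothetical.

```python
import numpy as np


def riemannian_gradient_factors(U, V, G):
    """Factors (M, Up, Vp) of P_X(G) = U M V^T + Up V^T + U Vp^T, with X = U S V^T.

    The gauging conditions Up^T U = 0 and Vp^T V = 0 hold by construction.
    """
    GV = G @ V            # m x r
    GtU = G.T @ U         # n x r
    M = U.T @ GV          # r x r, equals U^T G V
    Up = GV - U @ M       # (I - P_U) G V
    Vp = GtU - V @ M.T    # (I - P_V) G^T U
    return M, Up, Vp


def tangent_to_dense(U, V, M, Up, Vp):
    """Assemble the dense m x n tangent vector from its factors (for checking only)."""
    return U @ M @ V.T + Up @ V.T + U @ Vp.T
```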
3.1.4 Riemannian Hessian

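The Riemannian Hessian is defined by (see, e.g., [2, def. 5.5.1], [10, def. 5.14])

\[ {{\,\textrm{Hess}\,}}f(x)[\xi _{x}] = \nabla _{\xi _{x}} {{\,\textrm{grad}\,}}f(x), \quad \xi _{x} \in \textrm{T}_{x}{{\mathcal {M}}}, \]

where \( \nabla _{\xi _{x}} \) is the Levi-Civita connection. If \({{\mathcal {M}}}\) is a Riemannian submanifold of a Euclidean space, as is the case for the manifold of fixed-rank matrices embedded in \( {\mathbb {R}}^{m \times n}\), it follows that [10, cor. 5.16]

\[ {{\,\textrm{Hess}\,}}f(x)[\xi _{x}] = \text {P}_{x}\big ( {{\,\textrm{D}\,}}\overline{{{\,\textrm{grad}\,}}f}(x)[\xi _{x}] \big ), \]

where \( \overline{{{\,\textrm{grad}\,}}f} \) is any smooth extension of \( {{\,\textrm{grad}\,}}f \) to a neighborhood of \( {{\mathcal {M}}}\) in the embedding space.
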
In practice, this is what we use in the calculations.

3.1.5 Retraction

To map the updates in the tangent space onto the manifold, we make use of so-called retractions. A retraction \( {{\,\textrm{R}\,}}_{X} \) is a smooth map from the tangent space to the manifold, \( {{\,\textrm{R}\,}}_{X}:\textrm{T}_{X}{{\mathcal {M}}}_{r}\rightarrow {{\mathcal {M}}}_{r}\), used to map tangent vectors to points on the manifold. It is, essentially, any smooth first-order approximation of the exponential map of the manifold; see, e.g., [3]. To establish convergence of the Riemannian algorithms, it is sufficient for the retraction to be defined only locally. An excellent survey on low-rank retractions is given in [4]. In our setting, we have chosen the metric projection, which is provided by a truncated SVD.
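When the tangent vector is stored as \( (M, U_{\textrm{p}}, V_{\textrm{p}}) \) as in (6), \( X + \xi \) has rank at most 2r, so the metric projection can be evaluated without forming any \( m \times n \) matrix: thin QR factorizations of \( U_{\textrm{p}} \) and \( V_{\textrm{p}} \) followed by an SVD of a \( 2r \times 2r \) core. The NumPy sketch below illustrates this standard construction under the assumption that \( U_{\textrm{p}} \) and \( V_{\textrm{p}} \) have full column rank (otherwise the stacked bases may fail to be orthonormal); the function name is hypothetical.

```python
import numpy as np


def retract_svd(U, S, V, M, Up, Vp):
    """Best rank-r approximation of X + xi, with X = U S V^T and xi as in (6).

    Uses X + xi = [U Qu] [[S + M, Rv^T], [Ru, 0]] [V Qv]^T, where Up = Qu Ru
    and Vp = Qv Rv are thin QR factorizations.
    """
    r = S.shape[0]
    Qu, Ru = np.linalg.qr(Up)
    Qv, Rv = np.linalg.qr(Vp)
    core = np.block([[S + M, Rv.T], [Ru, np.zeros((r, r))]])
    Uc, s, Vct = np.linalg.svd(core)          # SVD of the small 2r x 2r core
    U_new = np.hstack([U, Qu]) @ Uc[:, :r]    # keep the r dominant triplets
    V_new = np.hstack([V, Qv]) @ Vct[:r, :].T
    return U_new, np.diag(s[:r]), V_new
```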

4 The RTR Method

As we anticipated above, to solve the implicit equation resulting from the time-integration scheme, we employ the RTR method of [1]. For reference, we provide the pseudocode for RTR in Algorithm 1. Step 4 in Algorithm 1 uses the truncated conjugate gradient (tCG) of [65, 70]. This method lends itself very well to being preconditioned.

Algorithm 1 RTR method of [1]

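The overall structure of a trust-region method with a preconditioned tCG inner solver can be sketched as follows. To keep the example self-contained, it is written for the Euclidean case: the retraction is simply \( x + s \), the metric is the standard inner product, and the trust-region radius is measured in the Euclidean norm even when a preconditioner is used, whereas the preconditioned tCG used with RTR measures it in the norm induced by the preconditioner. The acceptance threshold, the radius update rule, the round-off regularization of \( \rho \), and the test problem are illustrative choices, not the settings of the paper or of Manopt; all names are hypothetical.

```python
import numpy as np


def boundary_step(s, d, delta):
    """Return tau >= 0 with ||s + tau d|| = delta (s lies strictly inside the region)."""
    sd, dd, ss = s @ d, d @ d, s @ s
    return (-sd + np.sqrt(sd**2 + dd * (delta**2 - ss))) / dd


def tcg(g, hess, delta, precond, max_iter=100, kappa=0.1, theta=1.0):
    """Preconditioned Steihaug-Toint truncated CG for
    min_s g.s + 0.5 s.H s subject to ||s|| <= delta."""
    s = np.zeros_like(g)
    r = g.copy()
    z = precond(r)
    d = -z
    rz = r @ z
    r0 = np.linalg.norm(r)
    for _ in range(max_iter):
        Hd = hess(d)
        dHd = d @ Hd
        if dHd <= 0:                                  # negative curvature: go to boundary
            return s + boundary_step(s, d, delta) * d
        alpha = rz / dHd
        if np.linalg.norm(s + alpha * d) >= delta:    # full CG step leaves the region
            return s + boundary_step(s, d, delta) * d
        s = s + alpha * d
        r = r + alpha * Hd
        if np.linalg.norm(r) <= r0 * min(r0**theta, kappa):   # inexact Newton stop
            break
        z = precond(r)
        rz_new = r @ z
        d = -z + (rz_new / rz) * d
        rz = rz_new
    return s


def trust_region(f, grad, hess_at, x, precond_at=None, delta=1.0,
                 delta_max=10.0, rho_prime=0.1, tol=1e-10, max_iter=200):
    """Euclidean trust-region loop; on M_r, x + s would be replaced by a retraction R_x(s)."""
    for _ in range(max_iter):
        g = grad(x)
        if np.linalg.norm(g) < tol:
            break
        H = hess_at(x)
        P = precond_at(x) if precond_at is not None else (lambda v: v)
        s = tcg(g, H, delta, P)
        x_new = x + s
        # Regularize rho so that round-off does not dominate near convergence.
        reg = 1e3 * np.finfo(float).eps * max(1.0, abs(f(x)))
        pred = -(g @ s + 0.5 * (s @ H(s)))            # decrease predicted by the model
        rho = (f(x) - f(x_new) + reg) / (pred + reg)
        if rho < 0.25:
            delta *= 0.25
        elif rho > 0.75 and np.linalg.norm(s) >= 0.99 * delta:
            delta = min(2.0 * delta, delta_max)
        if rho > rho_prime:
            x = x_new
    return x
```

For instance, with \( f(x) = \tfrac{1}{2} x^{\top }Ax - b^{\top }x + \tfrac{1}{4}\sum _{i} x_{i}^{4} \) and a Jacobi-type preconditioner built from the diagonal of the Hessian, both the plain and the preconditioned runs reach the same minimizer.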
4.1 Riemannian Gradient and Riemannian Hessian

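In general, in the case of Riemannian submanifolds, the full Riemannian Hessian of an objective function f at \( x \in {{\mathcal {M}}}\) is given by the projected Euclidean Hessian plus a curvature part,

\[ {{\,\textrm{Hess}\,}}f(x)[\xi ] = P_{x} \, \nabla ^{2} f(x)[\xi ] + P_{x} \big ( {{\,\textrm{D}\,}}P_{x}[\xi ] \, \nabla f(x) \big ), \]

which follows by differentiating \( {{\,\textrm{grad}\,}}f(x) = P_{x} \nabla f(x) \) and projecting. This suggests basing the preconditioner in the RTR scheme on \( P_{x} \, \nabla ^{2} f(x) \, P_{x} \), i.e., applying its inverse on the tangent space; see [75, §6.1] for further details.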

For the LYAP problem, the Riemannian gradient is given by

The directional derivative of the gradient, i.e., the Euclidean Hessian applied to \( \xi \in \textrm{T}_{X}{{\mathcal {M}}}_{r}\), is

The orthogonal projection of the Euclidean Hessian followed by vectorization yields

where the second \( P_{X}\) is inserted for symmetrization. From here we can read the symmetric \(n^{2}\)-by-\(n^{2}\) matrix

The inverse of this matrix (10) should be a good candidate for a preconditioner. In Appendix C, we present the derivation of the preconditioner for the tCG subsolver on the manifold of fixed-rank matrices.
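To make the construction concrete on a small example, the NumPy sketch below assembles \( P_{X}(I \otimes A + A \otimes I)P_{X} \) and applies its pseudo-inverse to a tangent vector. It assumes, for illustration only, that the discretized LYAP gradient has the form \( AX + XA - \varGamma \) with A the symmetric positive definite matrix of the negative 1D Dirichlet Laplacian, so that the Euclidean Hessian is \( \xi \mapsto A\xi + \xi A \); the scaling and boundary treatment in [69] may differ, and all names are hypothetical. A practical implementation would avoid forming this \( n^{2} \times n^{2} \) matrix.

```python
import numpy as np


def dirichlet_laplacian(n):
    """Negative 1D Laplacian with homogeneous Dirichlet BCs, h_x = 1/(n+1) (assumed)."""
    hx = 1.0 / (n + 1)
    return (2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / hx**2


def lyap_hessian_matrix(A, U, V):
    """Return H = P_X (I kron A + A kron I) P_X and P_X, for X = U S V^T (square case)."""
    n = A.shape[0]
    I = np.eye(n)
    PU, PV = U @ U.T, V @ V.T
    P = np.kron(PV, I) + np.kron(I, PU) - np.kron(PV, PU)   # column-major vec
    L = np.kron(I, A) + np.kron(A, I)   # vec(A Z + Z A) = L vec(Z), as A = A^T
    return P @ L @ P, P
```

On the tangent space H is symmetric positive definite, so applying the pseudo-inverse to a vectorized tangent vector returns another tangent vector, which is the role of a preconditioner in tCG.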

In general, the preconditioner from above cannot be efficiently inverted because of the coupling with the nonlinear terms. Nonetheless, numerical experiments in Sect. 7 show that it remains an efficient preconditioner even for problems with a (mild) nonlinearity.

5 The Allen–Cahn Equation

The Allen–Cahn equation is a reaction-diffusion equation originally studied for modeling the phase separation process in multi-component alloy systems [5, 6]. It later turned out that the Allen–Cahn equation has a much wider range of applications. Recently, [78] provided a good review. Applications include mean curvature flows [41], two-phase incompressible fluids [77], complex dynamics of dendritic growth [44], image inpainting [20, 47], and image segmentation [7, 43].

The Allen–Cahn equation in its simplest form is given by (1). It is a stiff PDE with a low-order polynomial nonlinearity and a diffusion term \( \varepsilon {\varDelta } w \). As in [60], we set \( \varepsilon = 0.1 \) and solve (1) on the square domain \([-\pi ,\pi )^{2}\) with periodic boundary conditions. We also use the same initial condition as in [60, (77)–(78)], namely,

where

We emphasize that with this choice, the matrix \( W_{0} \) which discretizes the initial condition (11) has no low-rank structure and will be treated as a dense matrix. Nonetheless, thanks to the Laplacian’s smoothing effect as time evolution progresses, the solution W can be well approximated by low-rank matrices [49, §4.2]. In particular, for large simulation times, the solution converges to either \(-1\) or 1 in most of the domain, giving rise to four flat regions that can be well approximated by low rank; see panels (e) and (f) of Fig. 1.

5.1 Spatial Discretization

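We discretize (1) in space on a uniform grid with \( 256 \times 256 \) points. In particular, we use central finite differences to discretize the Laplacian with periodic boundary conditions. This results in the matrix ODE

\[ \dot{W}(t) = \varepsilon \left( A W + W A \right) + W - W^{\circ 3}, \tag{12} \]

where \(W :[0, T] \rightarrow {\mathbb {R}}^{256 \times 256} \) is a matrix that depends on t, \( W^{\circ 3} \) denotes the elementwise cube of W (the so-called Hadamard power, defined by \( W^{\circ \alpha } = [w_{ij}^{\alpha }] \)), and A is the second-order periodic finite difference differentiation matrix

\[ A = \frac{1}{h_{x}^{2}} \begin{bmatrix} -2 & 1 & & & 1 \\ 1 & -2 & 1 & & \\ & \ddots & \ddots & \ddots & \\ & & 1 & -2 & 1 \\ 1 & & & 1 & -2 \end{bmatrix} \in {\mathbb {R}}^{256 \times 256}, \quad h_{x} = \frac{2\pi }{256}. \tag{13} \]
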
This matrix ODE is an initial value problem (IVP) in the form of [72, (48)]

\[ \dot{W}(t) = G(W(t)), \quad W(0) = W_{0}, \tag{14} \]

where \( G :=\varepsilon \left( A W + W A \right) + W - W^{\circ 3} \) is the right-hand side of (12).
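The discrete operators above can be assembled in a few lines. The NumPy sketch below builds the periodic matrix A on the uniform grid of \([-\pi ,\pi )\) and evaluates the right-hand side \( G(W) = \varepsilon (AW + WA) + W - W^{\circ 3} \). It is an illustrative sketch, not the paper's MATLAB code; the function names are hypothetical.

```python
import numpy as np


def periodic_laplacian(n, length=2 * np.pi):
    """Second-order periodic finite difference matrix on n uniform points, h_x = length/n."""
    hx = length / n
    A = -2.0 * np.eye(n) + np.eye(n, k=1) + np.eye(n, k=-1)
    A[0, -1] = A[-1, 0] = 1.0          # periodic wrap-around entries
    return A / hx**2


def allen_cahn_rhs(W, A, eps=0.1):
    """G(W) = eps (A W + W A) + W - W**3, where W**3 is the elementwise cube."""
    return eps * (A @ W + W @ A) + W - W**3
```

Since the rows of A sum to zero, the constant states \( W \equiv \pm 1 \) are equilibria of G, consistent with the flat regions near \(-1\) and 1 observed for large times.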

5.2 Reference Solution

To get a reference solution \( W_{\textrm{ref}} \), we solve the (full-rank) IVP (14) with the classical explicit fourth-order Runge–Kutta method (ERK4) and time step \(h= 10^{-4} \). Figure 1 illustrates the time evolution of the solution to the Allen–Cahn equation at six different simulation times. The solution evolves from an initial condition with many peaks and valleys to a solution with four flat regions occupying most of the domain.

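For reference, a generic ERK4 step for \( \dot{W} = G(W) \) is sketched below in Python. It works for scalars and NumPy arrays alike, so G can be any function returning an array of the same shape as W. It is a textbook sketch, not the authors' code, and the helper names are hypothetical.

```python
def rk4_step(G, W, h):
    """One step of the classical explicit fourth-order Runge-Kutta method (ERK4)."""
    k1 = G(W)
    k2 = G(W + 0.5 * h * k1)
    k3 = G(W + 0.5 * h * k2)
    k4 = G(W + h * k3)
    return W + (h / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)


def integrate(G, W0, h, num_steps):
    """Advance W0 by num_steps ERK4 steps of size h."""
    W = W0
    for _ in range(num_steps):
        W = rk4_step(G, W, h)
    return W
```

On the scalar test problem \( y' = -y \), halving h reduces the error at \( t = 1 \) by roughly a factor of 16, as expected for a fourth-order method.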
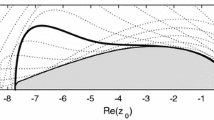

As a preliminary study, we monitor the discrete \( L^{2} \)-norm of the right-hand side of (1) for this reference solution and the numerical rank history of \( W_{\textrm{ref}} \). Panel (a) of Fig. 2 shows that after \( t \approx 13 \), \( \Vert \partial w / \partial t \Vert _{L^{2}(\varOmega )} \le 10^{-3} \), which indicates that the solution w has become nearly stationary; see also the last two panels of Fig. 1. Panel (b) of Fig. 2 plots the numerical rank of \( W_{\textrm{ref}} \) versus time, computed with a relative singular value tolerance of \( 10^{-10} \). The numerical rank decays rapidly up to \( t \approx 2 \) and then varies between 13 and 17 for the rest of the simulation. The rank decreases as the diffusion term comes to dominate the system.

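The numerical rank with a relative singular value tolerance, as used for panel (b) of Fig. 2, can be computed as in the following NumPy sketch (function name hypothetical). Note that this relative criterion differs from MATLAB's default `rank` tolerance.

```python
import numpy as np


def numerical_rank(W, rel_tol=1e-10):
    """Number of singular values larger than rel_tol times the largest one."""
    s = np.linalg.svd(W, compute_uv=False)   # returned in descending order
    if s.size == 0 or s[0] == 0.0:
        return 0
    return int(np.count_nonzero(s > rel_tol * s[0]))
```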
5.3 Low-Rank Implicit Time-Stepping Scheme

We employ the implicit Euler method for the time integration of (12), which gives

\[ W_{k+1} = W_{k} + h\left( \varepsilon \left( A W_{k+1} + W_{k+1} A \right) + W_{k+1} - W_{k+1}^{\circ 3} \right), \tag{15} \]

where, additionally, we want each \( W_{k} \) to be of low rank. This is achieved by using our preconditioned RTR method (PrecRTR) on the manifold of fixed-rank matrices to solve the nonlinear equation (15) for \( W_{k+1} \).

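To illustrate what one step of (15) involves, the sketch below solves it on a coarse grid with plain Newton iterations and a dense Kronecker Jacobian. This is a swapped-in technique for illustration only: the paper solves (15) with PrecRTR on \( {{\mathcal {M}}}_{r}\), which also keeps the iterate low rank and globalizes the iteration with a trust region, while plain Newton has no such safeguard. The grid size, the time step used in the check, and the function name are hypothetical.

```python
import numpy as np


def implicit_euler_newton(W_prev, A, h, eps=0.1, tol=1e-10, max_iter=20):
    """Solve W - h*(eps*(A W + W A) + W - W**3) = W_prev for W with Newton's method.

    Dense, full-rank illustration; vec stacks columns (column-major) and A = A^T.
    """
    n = W_prev.shape[0]
    I = np.eye(n)
    L = np.kron(I, A) + np.kron(A, I)             # vec(A W + W A) = L vec(W)
    W = W_prev.copy()
    for _ in range(max_iter):
        F = W - h * (eps * (A @ W + W @ A) + W - W**3) - W_prev
        if np.linalg.norm(F) < tol:
            break
        w = W.flatten(order="F")
        J = np.eye(n * n) - h * (eps * L + np.eye(n * n) - np.diag(3.0 * w**2))
        dw = np.linalg.solve(J, -F.flatten(order="F"))
        W = W + dw.reshape(n, n, order="F")
    return W
```

For \( h < 1 \) the Jacobian is symmetric positive definite, so each Newton step is well defined; the Jacobian grows as \( n^{2} \times n^{2} \), which is why this dense approach is only viable on very small grids.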
Since our strategy is optimization, and since we wish to maintain some coherence with the LYAP and NPDE problems presented in Sect. 2 and [69], we build a variational problem whose first-order optimality condition will be exactly (15). This leads us to consider the problem

It is interesting to note that this cost function is very similar to the NPDE functional [69, (5.11)]. Here, \( {\widetilde{w}} \) is the solution at the previous time step and plays a similar role as \( \gamma \) in the NPDE functional (it is constant w.r.t. w). The only additional term w.r.t. the NPDE problem is the term \( h/ 4 \, w^{4} \). Moreover, in contrast to LYAP and NPDE, we need to solve this optimization problem many times, i.e., at every time step, to describe the time evolution of w.
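To see why such a variational formulation exists, consider the continuous counterpart of the implicit Euler step (15) with periodic boundary conditions,

\[ w - h\left( \varepsilon {\varDelta } w + w - w^{3} \right) = \widetilde{w} \quad \text {in } \varOmega . \]

One functional with exactly this first-order optimality condition, consistent with the description above (\( \widetilde{w} \) enters linearly like \( \gamma \), and the extra term is \( h/4 \, w^{4} \)), is

\[ {{\mathcal {F}}}(w) = \int _{\varOmega } \Big( \frac{h\varepsilon }{2} \Vert \nabla w \Vert ^{2} + \frac{1-h}{2} w^{2} + \frac{h}{4} w^{4} - \widetilde{w}\, w \Big) \, {{\,\textrm{d}\,}}{\varvec{x}} . \]

For a periodic perturbation v, integration by parts produces no boundary terms, so

\[ {{\,\textrm{D}\,}}{{\mathcal {F}}}(w)[v] = \int _{\varOmega } \big ( -h\varepsilon {\varDelta } w + (1-h) w + h w^{3} - \widetilde{w} \big ) \, v \, {{\,\textrm{d}\,}}{\varvec{x}} , \]

and \( {{\,\textrm{D}\,}}{{\mathcal {F}}}(w)[v] = 0 \) for all v is equivalent to the continuous step above. This derivation is given as an illustration and may differ from the paper's normalization of (16). For \( h < 1 \), every term except the linear one is convex and the quadratic term is strictly convex, so \( {{\mathcal {F}}}\) is strictly convex; for \( h \ge 1 \), the coefficient \( (1-h)/2 \) is nonpositive and convexity is no longer guaranteed.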

We aim to obtain good low-rank approximations on the whole interval [0, T]. However, our preliminary study (panel (b) of Fig. 2) shows that at the beginning of the time evolution the numerical solution is not low rank, because of the chosen initial condition. For this reason, in our numerical experiments, we evolve the dense matrix up to \( t_{0} = 0.5 \), and only then do we start our rank-adaptive method. Indeed, according to the rank history of the reference solution (panel (b) of Fig. 2), at \( t=0.5 \) the numerical rank has already dropped to 23, which we deemed a good starting time for our low-rank algorithm. Our procedure is summarized in Algorithm 2.

The discretizations of the objective function \( {{\mathcal {F}}}(w) \) and its gradient are detailed in Appendix A.

5.4 Numerical Experiments

The algorithm was implemented in MATLAB and is publicly available at https://github.com/MarcoSutti/PrecRTR. The RTR method of [1] was executed using solvers from the Manopt package [12] with the Riemannian embedded submanifold geometry from [74]. We conducted our experiments on a desktop machine with Ubuntu 22.04.1 LTS and MATLAB R2022a installed, with Intel Core i7-8700 CPU, 16GB RAM, and Mesa Intel UHD Graphics 630.

For the time integration, we use the time steps \( h\in \lbrace 0.05, 0.1, 0.2, 0.5, 1 \rbrace \), and we monitor the error \( \Vert w - w_{\textrm{ref}} \Vert _{L^{2}(\varOmega )} \). Figure 3 reports the results. Panel (a) shows the time evolution of the error \( \Vert w - w_{\textrm{ref}} \Vert _{L^{2}(\varOmega )} \), while panel (b) shows that the error decays linearly in \( h\), as expected for the first-order implicit Euler method.

Figure 4 reports the numerical rank history for the preconditioned low-rank evolution of the Allen–Cahn equation, with \( h= 0.05 \).

5.5 Discussion/Comparison with Other Solvers

From the results reported in Fig. 3, it is evident that even with very large time steps, we can still obtain relatively good low-rank approximations of the solution, especially at the final time \( T = 15 \). For example, compare with Fig. 4 in [60], where the largest time step is \( h= 0.01 \), i.e., one hundred times smaller than our largest time step. Moreover, factorized formats are not mentioned in [60]. In contrast, we always work with the factors to reduce computational costs.

In [60], the authors study implicit rank-adaptive algorithms based on performing one time step with a conventional time-stepping scheme, followed by an implicit fixed-point iteration step involving a rank truncation operation onto a tensor or matrix manifold. Here, we also employ an implicit time-stepping scheme for the time evolution. However, instead of using a fixed-point iteration method for solving the nonlinear equations, we use our preconditioned RTR (PrecRTR) on the manifold of fixed-rank matrices. In this way, we obtain a preconditioned dynamical low-rank approximation of the Allen–Cahn equation.

In general, implicit methods are much more effective for stiff problems, but they are also more expensive than their explicit counterparts since solutions of nonlinear systems replace function evaluations. Nonetheless, the additional computational overhead of the implicit method is compensated by the fact that we can afford a larger time step, as demonstrated by Fig. 3. Moreover, the cost of solving the inner nonlinear equations remains moderately low thanks to our preconditioner.

Another known issue with explicit methods is that the time step needs to be proportional to the smallest singular value of the solution [72, §9.5.3]. When using fixed-point iterations, on the other hand, one still obtains a condition on the time step size, which depends on the Lipschitz constant of the right-hand side term, namely

where A is the matrix of the coefficients defining the stages (the Butcher tableau); see, e.g., [36, (3.13)] and [19]. This condition is a restriction on the time step that is no better than the stability restrictions for explicit methods. This shows that (quoting from [36, §3.2]) “fixed point iterations are unsuitable for solving the nonlinear system defining the stages. For solving the nonlinear system, other methods like the Newton method should be used”. A similar condition also holds for the method of Rodgers and Venturi, see [60, (31)]:

Their paper states: “Equation (31) can be seen as a stability condition restricting the maximum allowable time step \( h\) for the implicit Euler method with fixed point iterations.” This makes a case for using the Newton method instead of fixed point iteration to find a solution to the nonlinear equation.
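The effect of such a condition is easy to reproduce on the linear test equation \( y' = -\lambda y \), whose right-hand side has Lipschitz constant \( \lambda \). The fixed-point map for one implicit Euler step, \( y \mapsto y_{\textrm{prev}} - h\lambda y \), is a contraction only if \( h\lambda < 1 \), whereas Newton's method solves the (here linear) step in one iteration for any h. The Python sketch below is a minimal illustration with hypothetical function names.

```python
def implicit_euler_fixed_point(y_prev, lam, h, iters=50):
    """Fixed-point iteration y <- y_prev - h*lam*y for one implicit Euler step.

    The iteration map has Lipschitz constant h*lam, so it converges only if h*lam < 1.
    """
    y = y_prev
    for _ in range(iters):
        y = y_prev - h * lam * y
    return y


def implicit_euler_newton_scalar(y_prev, lam, h):
    """One Newton step on r(y) = y - y_prev + h*lam*y, exact because r is linear."""
    y = y_prev
    residual = y - y_prev + h * lam * y
    return y - residual / (1.0 + h * lam)
```

With \( \lambda = 100 \) and \( h = 0.1 \) (so \( h\lambda = 10 \)), the fixed-point iterates blow up, while Newton returns \( y_{\textrm{prev}}/(1 + h\lambda) \) immediately; with \( h = 0.005 \), both agree.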

As we observed from the MATLAB profiler, the computation of the preconditioner becomes dominant in the running time as \( \ell \) increases. This is the best possible situation, since the overall cost is then dominated by the preconditioner rather than by other parts of the algorithm.

Finally, we emphasize that low-rank Lyapunov solvers (see [64] for a review) cannot be used to solve this kind of problem because of the nonlinear term in the Hessian, and that PrecRTR proves much more effective than the RMGLS method of [69]. However, the latter may remain useful for problems for which no effective preconditioner is available.

Of course, our method is efficient only when the rank is low and the time step is not too small. If these conditions are not met, there is no advantage over using full-rank matrices.

6 The Fisher–KPP Equation

The Fisher–KPP equation is a nonlinear reaction-diffusion PDE, which in its simplest form reads [53, (13.4)]

where \( w \equiv w(x, t; \omega ) \) and \( r( \omega ) \) is the reaction rate, or growth rate, of a species. It is called the “stochastic” Fisher–KPP equation in the recent work [18].

It was originally studied in 1937 in two independent, pioneering works. Fisher [24] studied a deterministic version of a stochastic model for the spread of a favored gene in a population in a one-dimensional habitat, with a “logistic” reaction term. Kolmogorov, Petrowsky, and Piskunov provided a rigorous study of the two-dimensional equation and obtained some fundamental analytical results, with a general reaction term. We refer the reader to [35] for an English translation of their original work.

The Fisher–KPP equation can be used to model several phenomena in physics, chemistry, and biology. For instance, it can describe the dynamics of biological populations or chemical reactions with diffusion. It has also been used in combustion theory to study flame propagation, and in the theory of nuclear reactors; see [53, §13.2] for a comprehensive review.

6.1 Boundary and Initial Conditions

Here, we adopt the same boundary and initial conditions as in [18]. The reaction rate is modeled as a uniformly distributed random variable, \( r \sim {{\mathcal {U}}}\left[ 1/4, 1/2 \right] \). We consider the spatial domain \( x \in [0, 40] \) and the time domain \( t \in [0, 10] \). We impose homogeneous Neumann boundary conditions, i.e.,

\[ \frac{\partial w}{\partial x}(0, t; \omega ) = \frac{\partial w}{\partial x}(40, t; \omega ) = 0, \quad t \in [0, 10]. \]

These boundary conditions represent the physical condition of zero diffusive fluxes at the two boundaries. The initial condition is “stochastic”, of the form

where \( a \sim {{\mathcal {U}}}\left[ 1/5, \ 2/5\right] \) and \( b \sim {{\mathcal {U}}}\left[ 1/10, \ 11/10\right] \). The random variables a, b, and r are all independent, and we consider \( N_{r} = 1000 \) realizations.

6.2 Reference Solution with the IMEX-CNLF Method

To obtain a reference solution, we use the implicit-explicit Crank–Nicolson leapfrog (IMEX-CNLF) scheme for time integration [30, Example IV.4.3]. This scheme treats the linear diffusion term implicitly with the Crank–Nicolson method, whereas the nonlinear reaction term is treated explicitly with the leapfrog scheme, an explicit two-step method also known as the explicit midpoint rule.

For the space discretization, we consider 1000 grid points in x, while for the time discretization, we use 1601 points in time, so that the time step is \( h= 10/(1601 - 1) = 0.00625 \).

Let \( w^{(i)} \) denote the spatial discretization of the ith realization. At a given time t, each realization is stored as a column of our solution matrix, i.e.,

Moreover, let \( R_{\omega } \) be the diagonal matrix whose ith diagonal entry is the reaction-rate coefficient \( r_{(\omega )}^{(i)} \) of the ith realization, \( i = 1, 2, \ldots , N_{r} \), i.e.,

The IMEX-CNLF scheme applied to (17) gives the algebraic equation

where A is the matrix that discretizes the Laplacian with a second-order centered finite difference stencil and homogeneous Neumann boundary conditions, i.e.,

For ease of notation, we define \( M_{\textrm{m}} = I - hA \) and \( M_{\textrm{p}} = I + hA \), so that (18) becomes
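The reference update can be sketched for a single realization, i.e., one column of W. The Python code below is a minimal sketch under stated assumptions, not the authors' implementation: it assumes the logistic reaction term \( r\,w(1-w) \), unit diffusion, a three-point Laplacian whose first and last rows use the one-sided Neumann stencil \( [-1, 1]/h_{x}^{2} \), and the CNLF form of [30, Example IV.4.3], \( M_{\textrm{m}} w^{(n+1)} = M_{\textrm{p}} w^{(n-1)} + 2h\, r \big ( w^{(n)} - (w^{(n)})^{\circ 2} \big ) \). Because \( M_{\textrm{m}} \) is tridiagonal, each step costs O(n) with the Thomas algorithm. The grid spacing \( h_{x} = 40/999 \), the Gaussian initial profile, the reaction rate 0.3, and all function names are hypothetical; a genuine leapfrog run also needs a start-up step for the second time level, which is not shown.

```python
import math


def apply_neumann_laplacian(w, dx):
    """Apply the assumed three-point Laplacian A with one-sided Neumann rows."""
    n = len(w)
    out = [0.0] * n
    out[0] = (w[1] - w[0]) / dx**2        # assumed Neumann row [-1, 1] / dx^2
    out[-1] = (w[-2] - w[-1]) / dx**2     # assumed Neumann row [1, -1] / dx^2
    for i in range(1, n - 1):
        out[i] = (w[i - 1] - 2.0 * w[i] + w[i + 1]) / dx**2
    return out


def thomas_solve(sub, diag, sup, rhs):
    """Solve a tridiagonal system; sub[i] couples x[i-1] and sup[i] couples x[i+1]."""
    n = len(diag)
    c, d = [0.0] * n, [0.0] * n
    c[0], d[0] = sup[0] / diag[0], rhs[0] / diag[0]
    for i in range(1, n):                 # forward elimination
        denom = diag[i] - sub[i] * c[i - 1]
        c[i] = sup[i] / denom
        d[i] = (rhs[i] - sub[i] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):        # back substitution
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def cnlf_step(w_prev, w_curr, r, h, dx):
    """Assumed step: (I - hA) w_next = (I + hA) w_prev + 2h r (w_curr - w_curr**2)."""
    n = len(w_curr)
    a_prev = apply_neumann_laplacian(w_prev, dx)
    rhs = [w_prev[i] + h * a_prev[i] + 2.0 * h * r * (w_curr[i] - w_curr[i] ** 2)
           for i in range(n)]
    k = h / dx**2
    sub = [0.0] + [-k] * (n - 1)          # subdiagonal of M_m = I - hA
    sup = [-k] * (n - 1) + [0.0]          # superdiagonal of M_m
    diag = [1.0 + 2.0 * k] * n
    diag[0] = diag[-1] = 1.0 + k          # diagonal entries of the Neumann rows
    return thomas_solve(sub, diag, sup, rhs)


h = 10 / (1601 - 1)                       # time step of Sect. 6.2, i.e., 0.00625
n = 1000
dx = 40 / (n - 1)                         # assumed uniform grid on [0, 40]
x = [i * dx for i in range(n)]
w0 = [0.3 * math.exp(-0.5 * xi**2) for xi in x]   # hypothetical initial profile
w1 = cnlf_step(w0, w0, r=0.3, h=h, dx=dx)         # demo only: w_prev = w_curr = w0
```

For \( N_{r} \) realizations, the same tridiagonal solve applies to every column of W, with the reaction term scaled column by column through \( R_{\omega } \).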

Panels (a) and (b) of Fig. 5 show the 1000 realizations at \( t = 0 \) and \( t = 10 \), respectively. Panel (c) shows the history of the numerical rank, computed with MATLAB’s default tolerance, which is about \(10^{-11}\) here.

6.3 Low-Rank Crank–Nicolson Leapfrog (LR-CNLF) Scheme

To obtain a low-rank solver for the Fisher–KPP PDE, we proceed as for the low-rank solution of the Allen–Cahn equation. We build a cost function F(W) whose minimization gives the solution to (20), i.e.,

Expanding and keeping only the terms that depend on W, we get the cost function

Appendix B provides further details about the low-rank formats of (21) and of its gradient and Hessian.
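Equation (21) itself is given in the paper. Purely as an illustration, and assuming a least-squares form consistent with the appearance of \( M_{\textrm{m}}^{\top }M_{\textrm{m}} \) in Appendix B, suppose \( F(W) = \tfrac{1}{2}\Vert M_{\textrm{m}} W - B\Vert _{\textrm{F}}^{2} \), where B is the known right-hand side of (20), written as \( M_{\textrm{m}} W = B \). Expanding and dropping the constant \( \tfrac{1}{2}\Vert B\Vert _{\textrm{F}}^{2} \) leaves only W-dependent terms, and the Euclidean gradient is \( M_{\textrm{m}}^{\top }(M_{\textrm{m}} W - B) \). The Python sketch below checks this gradient against central finite differences on a tiny dense example; the toy matrices and function names are hypothetical.

```python
def matmul(A, B):
    """Dense product of list-of-rows matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    return [list(col) for col in zip(*A)]


def cost(M, W, B):
    """Assumed cost F(W) = 1/2 ||M W - B||_F^2."""
    R = matmul(M, W)
    return 0.5 * sum((R[i][j] - B[i][j]) ** 2
                     for i in range(len(R)) for j in range(len(R[0])))


def grad(M, W, B):
    """Euclidean gradient M^T (M W - B) of the assumed cost."""
    R = matmul(M, W)
    res = [[R[i][j] - B[i][j] for j in range(len(R[0]))] for i in range(len(R))]
    return matmul(transpose(M), res)


# Toy data: M stands in for M_m, B for the known right-hand side.
M = [[1.5, -0.5, 0.0], [-0.5, 2.0, -0.5], [0.0, -0.5, 1.5]]
W = [[0.2, 0.1], [0.4, -0.3], [0.1, 0.5]]
B = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

G = grad(M, W, B)
eps = 1e-6
fd = [[0.0] * 2 for _ in range(3)]
for i in range(3):
    for j in range(2):
        Wp = [row[:] for row in W]
        Wm = [row[:] for row in W]
        Wp[i][j] += eps
        Wm[i][j] -= eps
        fd[i][j] = (cost(M, Wp, B) - cost(M, Wm, B)) / (2 * eps)   # central difference
```

Because the assumed cost is quadratic, central differences match the gradient up to rounding.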

6.4 Numerical Experiments

We monitor the following quantities:

- the numerical rank of the solution \( W_{\text {LR-CNLF}} \) given by the low-rank solver;

- the discrete \( L^{2} \)-norm of the error

$$\begin{aligned} \Vert w_{\text {LR-CNLF}} - w_{\textrm{CNLF}} \Vert _{L^{2}(\varOmega )} = \sqrt{h_{x}} \cdot \Vert W_{\text {LR-CNLF}} - W_{\textrm{CNLF}} \Vert _{\textrm{F}}, \end{aligned}$$

where \( h_{x} \) is the spatial grid spacing.
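The error measure above is straightforward to evaluate: the factor \( \sqrt{h_{x}} \) turns the sum of squares over grid points into a Riemann-sum approximation of the spatial integral. A minimal Python sketch on hypothetical data, with a hypothetical function name:

```python
import math


def discrete_l2_error(W_lr, W_ref, hx):
    """sqrt(h_x) * ||W_lr - W_ref||_F for matrices stored as lists of rows."""
    fro_sq = sum((a - b) ** 2
                 for row_lr, row_ref in zip(W_lr, W_ref)
                 for a, b in zip(row_lr, row_ref))
    return math.sqrt(hx) * math.sqrt(fro_sq)


# Hypothetical 2-by-2 example: the difference has Frobenius norm 5.
err = discrete_l2_error([[3.0, 0.0], [0.0, 4.0]], [[0.0, 0.0], [0.0, 0.0]], hx=0.25)
# err == 2.5
```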

As for the reference solution in Sect. 6.2, we consider 1000 realizations. We apply our technique with rank adaptation and a rank-truncation tolerance of \( 10^{-8} \). The inner PrecRTR is halted once the gradient norm is less than \( 10^{-8} \). Figure 6 reports the numerical experiments. Panel (a) shows the history of the numerical rank of \( W_{\text {LR-CNLF}} \), together with the numerical rank of the reference solution \( W_{\textrm{CNLF}} \) from Sect. 6.2 for comparison. Panel (b) shows the discrete \( L^{2} \)-norm of the error versus time for several values of \( h\). The low-rank approximation improves for smaller values of \(h\), but this improvement is partly offset by an error that accumulates as the simulation progresses.
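The truncation rule behind the tolerance \( 10^{-8} \) is not spelled out in this section. As a purely illustrative assumption, the Python sketch below keeps the singular values larger than the tolerance times the largest one; other rules, such as bounding the Frobenius norm of the discarded tail, are equally common. The function name and the singular values are hypothetical.

```python
def truncated_rank(sigma, tol=1e-8):
    """Hypothetical rule: count singular values exceeding tol * max(sigma)."""
    if not sigma:
        return 0
    threshold = tol * max(sigma)
    return sum(1 for s in sigma if s > threshold)


sigma = [3.0, 1.0e-2, 5.0e-7, 2.0e-8, 1.0e-12]   # hypothetical singular values
new_rank = truncated_rank(sigma)                  # threshold 3e-8 keeps 3 values
```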

7 Numerical Experiments for LYAP and NPDE

This section focuses on the numerical behavior of PrecRTR, our preconditioned RTR method on the manifold of fixed-rank matrices, when applied to the variational problems from [69] recalled in Sect. 2. These are large-scale finite-dimensional optimization problems arising from the discretization of infinite-dimensional problems, and they have been used as benchmarks in several nonlinear multilevel algorithms [26, 29, 76]. For further information on the theoretical aspects of variational problems, we refer to [13, 42].

We consider two scenarios: in the first, we let Manopt [12] choose the maximum trust-region radius \( {\bar{\varDelta }} \) automatically, while in the second, we fix \( {\bar{\varDelta }} = 0.5 \). The tolerance on the gradient norm in the trust-region method is set to \( 10^{-12} \), and the maximum number of outer iterations is \( n_{\text {max outer}} = 300 \).

7.1 Tables

Tables 1, 2, 3 report the numerical results of the LYAP problem, while Tables 4, 5, 6 correspond to the NPDE problem. In all the tables, \( \ell \) indicates the level of discretization, i.e., the total number of grid points on a two-dimensional square domain equals \(2^{2\ell }\). The quantities \( \Vert \xi ^{(\textrm{end})} \Vert _{\textrm{F}} \) and \( r(W^{(\textrm{end})}) \) are the Frobenius norm of the gradient and the residual, respectively, both evaluated at the final iteration of the simulation, \(W^{(\textrm{end})}\).
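For orientation, level \( \ell \) corresponds to \( n = 2^{\ell } \) grid points per direction. An n-by-n matrix of rank r lies on \( {{\mathcal {M}}}_{r}\), whose dimension is r(2n − r), compared with \( n^{2} \) entries for the full matrix. A small Python check of these counts for \( \ell = 12 \), the level used in Tables 3 and 6, with a hypothetical rank r = 10 and a hypothetical function name:

```python
def problem_sizes(level, rank):
    """Grid size per direction, full matrix size, and dim of M_r for an n-by-n matrix."""
    n = 2 ** level                          # so that n * n = 2^(2 * level) grid points
    full = n * n                            # degrees of freedom of a full matrix
    manifold_dim = rank * (2 * n - rank)    # dimension of the fixed-rank-r manifold
    return n, full, manifold_dim


n, full, dim = problem_sizes(level=12, rank=10)
# n == 4096, full == 16777216, dim == 81820
```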

Tables 1 and 4 report the monitored quantities \( \Vert \xi ^{(\textrm{end})} \Vert _{\textrm{F}} \) and \( r(W^{(\textrm{end})}) \) for our PrecRTR, for the LYAP and NPDE problems, respectively. CPU times are in seconds and were obtained as an average over 10 runs.

When the maximum number of outer PrecRTR iterations \( n_{\text {max outer}} = 300 \) is reached, we indicate this in bold. We also limit the cumulative number of inner iterations \( \sum n_{\textrm{inner}} \): the inner tCG solver is stopped when \( \sum n_{\textrm{inner}} \) first exceeds \( 30\,000 \). This case is also highlighted in bold in the following tables.

Tables 2 and 5 report the effect of preconditioning as the problem size \( \ell \) increases for LYAP and NPDE, respectively. Preconditioning substantially reduces the number of inner tCG iterations, as the comparison between the non-preconditioned (rows 3–5) and the preconditioned (last three rows) versions shows. Moreover, for the preconditioned method (last three rows in the tables), both tables show that \( n_{\textrm{outer}} \) and \( \sum n_{\textrm{inner}} \) depend only mildly on the problem size \( \ell \), while \( \max n_{\textrm{inner}} \) is essentially constant.

For NPDE, in both the non-preconditioned and preconditioned methods, the numbers of iterations are typically higher than those for the LYAP problem, which is plausibly due to the nonlinearity of the problem.

Finally, Tables 3 and 6 report the results for varying rank and fixed problem size \( \ell = 12 \), with the same stopping criteria as above. Remarkably, for PrecRTR on the LYAP problem, all three monitored quantities are essentially independent of the rank. For NPDE, the dependence on the rank is stronger but still moderate.

8 Conclusions and Outlook

In this paper, we have shown how to combine an efficient preconditioner with optimization on low-rank manifolds. Unlike classical Lyapunov solvers, our optimization strategy can handle nonlinearities. Moreover, compared to iterative methods that perform rank truncation at every step, our approach allows for much larger time steps because it does not need to satisfy the Lipschitz-type time-step restriction of a fixed-point iteration. We illustrated this technique on two time-dependent nonlinear PDEs: the Allen–Cahn and the Fisher–KPP equations. In addition, the numerical experiments for two time-independent variational problems demonstrate that good low-rank approximations can be computed with a number of tCG iterations in the trust-region subsolver that is almost independent of the problem size.

Future research may focus on higher-order methods, such as more accurate implicit schemes. We may also explore higher-dimensional problems, applications in biology, and stochastic PDEs.

Data Availability

The code and datasets generated and analyzed during the current study are available in the PrecRTR repository, https://github.com/MarcoSutti/PrecRTR.

Notes

Not reported here, but reproducible with the distributed code.

“Stochastic” may be too strong a term, since no Brownian motion is involved: the problem is simply a PDE with a random initial condition and a random reaction rate.

Sometimes known as the forward difference matrix.

We use the MATLAB notation \( (:,i) \) to denote the ith column extraction from a matrix.

References

Absil, P.A., Baker, C.G., Gallivan, K.A.: Trust-region methods on Riemannian manifolds. Found. Comput. Math. 7, 303–330 (2007). https://doi.org/10.1007/s10208-005-0179-9

Absil, P.A., Mahony, R., Sepulchre, R.: Optimization Algorithms on Matrix Manifolds. Princeton University Press, Princeton, NJ (2008)

Absil, P.A., Malick, J.: Projection-like retractions on matrix manifolds. SIAM J. Optim. 22(1), 135–158 (2012). https://doi.org/10.1137/100802529

Absil, P.A., Oseledets, I.V.: Low-rank retractions: a survey and new results. Comput. Optim. Appl. 62(1), 5–29 (2015). https://doi.org/10.1007/s10589-014-9714-4

Allen, S.M., Cahn, J.W.: Ground state structures in ordered binary alloys with second neighbor interactions. Acta Metall. 20(3), 423–433 (1972). https://doi.org/10.1016/0001-6160(72)90037-5

Allen, S.M., Cahn, J.W.: A correction to the ground state of FCC binary ordered alloys with first and second neighbor pairwise interactions. Scr. Metall. 7(12), 1261–1264 (1973). https://doi.org/10.1016/0036-9748(73)90073-2

Beneš, M., Chalupecký, V., Mikula, K.: Geometrical image segmentation by the Allen-Cahn equation. Appl. Numer. Math. 51(2), 187–205 (2004). https://doi.org/10.1016/j.apnum.2004.05.001

Benzi, M., Golub, G.H., Liesen, J.: Numerical solution of saddle point problems. Acta Numer. 14, 1–137 (2005). https://doi.org/10.1017/S0962492904000212

Billaud-Friess, M., Falcó, A., Nouy, A.: A new splitting algorithm for dynamical low-rank approximation motivated by the fibre bundle structure of matrix manifolds. BIT Numer. Math. 62(2), 387–408 (2022). https://doi.org/10.1007/s10543-021-00884-x

Boumal, N.: An Introduction to Optimization on Smooth Manifolds. Cambridge University Press, Cambridge (2023). https://doi.org/10.1017/9781009166164

Boumal, N., Absil, P.A.: Low-rank matrix completion via preconditioned optimization on the Grassmann manifold. Linear Algebra Appl. 475, 200–239 (2015). https://doi.org/10.1016/j.laa.2015.02.027

Boumal, N., Mishra, B., Absil, P.A., Sepulchre, R.: Manopt, a Matlab toolbox for optimization on manifolds. J. Mach. Learn. Res. 15, 1455–1459 (2014)

Brenner, S., Scott, R.: The Mathematical Theory of Finite Element Methods. Texts in Applied Mathematics. Springer, New York (2007)

Cai, J.F., Huang, W., Wang, H., Wei, K.: Tensor completion via tensor train based low-rank quotient geometry under a preconditioned metric (2022)

Ceruti, G., Kusch, J., Lubich, C.: A rank-adaptive robust integrator for dynamical low-rank approximation. BIT Numer. Math. 62(4), 1149–1174 (2022). https://doi.org/10.1007/s10543-021-00907-7

Ceruti, G., Lubich, C.: An unconventional robust integrator for dynamical low-rank approximation. BIT Numer. Math. 62(1), 23–44 (2022). https://doi.org/10.1007/s10543-021-00873-0

Charous, A., Lermusiaux, P.: Dynamically orthogonal differential equations for stochastic and deterministic reduced-order modeling of ocean acoustic wave propagation. In: OCEANS 2021: San Diego – Porto, pp. 1–7 (2021). https://doi.org/10.23919/OCEANS44145.2021.9705914

Charous, A., Lermusiaux, P.F.J.: Stable rank-adaptive dynamically orthogonal Runge–Kutta schemes (2022)

Dieudonné, J.: Foundations of Modern Analysis. Academic Press, New York (1960)

Dobrosotskaya, J.A., Bertozzi, A.L.: A wavelet-Laplace variational technique for image deconvolution and inpainting. IEEE Trans. Image Process. 17(5), 657–663 (2008). https://doi.org/10.1109/TIP.2008.919367

Edelman, A., Arias, T.A., Smith, S.T.: The geometry of algorithms with orthogonality constraints. SIAM J. Matrix Anal. Appl. 20(2), 303–353 (1998). https://doi.org/10.1137/S0895479895290954

Feppon, F., Lermusiaux, P.F.J.: Dynamically orthogonal numerical schemes for efficient stochastic advection and Lagrangian transport. SIAM Rev. 60(3), 595–625 (2018). https://doi.org/10.1137/16M1109394

Feppon, F., Lermusiaux, P.F.J.: A geometric approach to dynamical model order reduction. SIAM J. Matrix Anal. Appl. 39(1), 510–538 (2018). https://doi.org/10.1137/16M1095202

Fisher, R.A.: The wave of advance of advantageous genes. Ann. Eug. 7(4), 355–369 (1937). https://doi.org/10.1111/j.1469-1809.1937.tb02153.x

Grasedyck, L.: Existence of a low rank or \({\cal{H} }\)-matrix approximant to the solution of a Sylvester equation. Numer. Linear Algebra with Appl. 11(4), 371–389 (2004). https://doi.org/10.1002/nla.366

Gratton, S., Sartenaer, A., Toint, P.L.: Recursive trust-region methods for multiscale nonlinear optimization. SIAM J. Optim. 19(1), 414–444 (2008). https://doi.org/10.1137/050623012

Helmke, U., Moore, J.B.: Optimization and Dynamical Systems. Springer-Verlag, London (1994). https://doi.org/10.1007/978-1-4471-3467-1

Henderson, H.V., Searle, S.R.: The vec-permutation matrix, the vec operator and Kronecker products: a review. Linear Multilinear Algebra 9(4), 271–288 (1981). https://doi.org/10.1080/03081088108817379

Henson, V.E.: Multigrid methods nonlinear problems: an overview. In: Bouman, C.A., Stevenson, R.L. (eds.) Computational Imaging, vol. 5016, pp. 36–48. International Society for Optics and Photonics, SPIE, US (2003). https://doi.org/10.1117/12.499473

Hundsdorfer, W., Verwer, J.: Numerical Solution of Time-Dependent Advection-Diffusion-Reaction Equations, 1st edn. Springer, Berlin, Heidelberg (2003). https://doi.org/10.1007/978-3-662-09017-6

Khatri, C.G., Rao, C.R.: Solutions to some functional equations and their applications to characterization of probability distributions. Sankhyā Ser. A 30(2), 167–180 (1968)

Kieri, E., Lubich, C., Walach, H.: Discretized dynamical low-rank approximation in the presence of small singular values. SIAM J. Numer. Anal. 54(2), 1020–1038 (2016). https://doi.org/10.1137/15M1026791

Kieri, E., Vandereycken, B.: Projection methods for dynamical low-rank approximation of high-dimensional problems. Comput. Methods Appl. Math. 19(1), 73–92 (2019). https://doi.org/10.1515/cmam-2018-0029

Koch, O., Lubich, C.: Dynamical low-rank approximation. SIAM J. Matrix Anal. Appl. 29(2), 434–454 (2007). https://doi.org/10.1137/050639703

Kolmogorov, A.N., Petrowsky, I.G., Piskunov, N.S.: Studies of the diffusion with the increasing quantity of the substance; its application to a biological problem. In: O.A. Oleinik (ed.) I.G. Petrowsky Selected Works. Part II: Differential Equations and Probability Theory, Classics of Soviet Mathematics, vol. 5, first edn., chap. 6, pp. 106–132. CRC Press, London (1996). https://doi.org/10.1201/9780367810504

Kressner, D.: Advanced numerical analysis (2015). https://www.epfl.ch/labs/anchp/wp-content/uploads/2018/05/AdvancedNA2015.pdf

Kressner, D., Steinlechner, M., Vandereycken, B.: Preconditioned low-rank Riemannian optimization for linear systems with tensor product structure. SIAM J. Sci. Comput. 38(4), A2018–A2044 (2016). https://doi.org/10.1137/15M1032909

Kressner, D., Tobler, C.: Preconditioned low-rank methods for high-dimensional elliptic PDE eigenvalue problems. Comput. Methods Appl. Math. 11(3), 363–381 (2011). https://doi.org/10.2478/cmam-2011-0020

Kressner, D., Tobler, C.: Algorithm 941: htucker–a MATLAB toolbox for tensors in hierarchical Tucker format. ACM Trans. Math. Softw. 40(3), 22:1-22:22 (2014). https://doi.org/10.1145/2538688

Kürschner, P.: Efficient low-rank solution of large-scale matrix equations. Ph.D. thesis, Aachen (2016)

Laux, T., Simon, T.M.: Convergence of the Allen-Cahn equation to multiphase mean curvature flow. Commun. Pure Appl. Math. 71(8), 1597–1647 (2018). https://doi.org/10.1002/cpa.21747

Le Dret, H., Lucquin, B.: Partial Differential Equations: Modeling, Analysis and Numerical Approximation. Birkhäuser, Basel (2016)

Lee, D., Lee, S.: Image segmentation based on modified fractional Allen-Cahn equation. Math. Probl. Eng. 2019, 3980181 (2019). https://doi.org/10.1155/2019/3980181

Lee, H.G., Kim, J.: An efficient and accurate numerical algorithm for the vector-valued Allen-Cahn equations. Comput. Phys. Commun. 183(10), 2107–2115 (2012). https://doi.org/10.1016/j.cpc.2012.05.013

Lermusiaux, P.: Evolving the subspace of the three-dimensional multiscale ocean variability: Massachusetts Bay. J. Mar. Syst. 29(1), 385–422 (2001). https://doi.org/10.1016/S0924-7963(01)00025-2. (Three-Dimensional Ocean Circulation: Lagrangian measurements and diagnostic analyses)

Lermusiaux, P.F.J., Robinson, A.R.: Data assimilation via error subspace statistical estimation. Part I: theory and schemes. Mon. Weather Rev. 127(7), 1385–1407 (1999). https://doi.org/10.1175/1520-0493(1999)127<1385:DAVESS>2.0.CO;2

Li, Y., Jeong, D., Choi, J., Lee, S., Kim, J.: Fast local image inpainting based on the Allen-Cahn model. Digit. Signal Process. 37, 65–74 (2015). https://doi.org/10.1016/j.dsp.2014.11.006

Lubich, C., Oseledets, I.V.: A projector-splitting integrator for dynamical low-rank approximation. BIT Numer. Math. 54(1), 171–188 (2014). https://doi.org/10.1007/s10543-013-0454-0

Massei, S., Robol, L., Kressner, D.: Hierarchical adaptive low-rank format with applications to discretized partial differential equations. Numer. Linear Algebra Appl. 29(6), e2448 (2022). https://doi.org/10.1002/nla.2448

Mishra, B., Meyer, G., Bach, F., Sepulchre, R.: Low-rank optimization with trace norm penalty. SIAM J. Optim. 23(4), 2124–2149 (2013)

Mishra, B., Sepulchre, R.: Riemannian preconditioning. SIAM J. Optim. 26(1), 635–660 (2016). https://doi.org/10.1137/140970860

Mishra, B., Vandereycken, B.: A Riemannian approach to low-rank algebraic Riccati equations. In: 21st International Symposium on Mathematical Theory of Networks and Systems, pp. 965–968. Groningen, The Netherlands (2014)

Murray, J.D.: Mathematical Biology I. An Introduction, 3rd edn. Springer, New York, NY (2002). https://doi.org/10.1007/b98868

Musharbash, E., Nobile, F., Vidličková, E.: Symplectic dynamical low rank approximation of wave equations with random parameters. BIT Numer. Math. 60(4), 1153–1201 (2020). https://doi.org/10.1007/s10543-020-00811-6

Ngo, T., Saad, Y.: Scaled Gradients on Grassmann Manifolds for Matrix Completion. In: Pereira, F., Burges, C., Bottou, L., Weinberger, K. (eds.) Adv. Neural Inf. Process. Syst., vol. 25. Curran Associates Inc., USA (2012)

Nocedal, J., Wright, S.J.: Numerical Optimization, 2nd edn. Springer, New York, NY (2006). https://doi.org/10.1007/978-0-387-40065-5

Rakhuba, M., Novikov, A., Oseledets, I.: Low-rank Riemannian eigensolver for high-dimensional Hamiltonians. J. Comput. Phys. 396, 718–737 (2019)

Rakhuba, M., Oseledets, I.: Jacobi-Davidson method on low-rank matrix manifolds. SIAM J. Sci. Comput. 40(2), A1149–A1170 (2018)

Rodgers, A., Dektor, A., Venturi, D.: Adaptive integration of nonlinear evolution equations on tensor manifolds. J. Sci. Comput. 92(2), 39 (2022). https://doi.org/10.1007/s10915-022-01868-x

Rodgers, A., Venturi, D.: Implicit step-truncation integration of nonlinear PDEs on low-rank tensor manifolds. J. Sci. Comput. 97(2), 33 (2022). https://doi.org/10.48550/ARXIV.2207.01962. arXiv:2207.01962

Sapsis, T.P., Lermusiaux, P.F.: Dynamically orthogonal field equations for continuous stochastic dynamical systems. Phys. D: Nonlinear Phenom. 238(23), 2347–2360 (2009). https://doi.org/10.1016/j.physd.2009.09.017

Shalit, U., Weinshall, D., Chechik, G.: Online Learning in The Manifold of Low-Rank Matrices. In: Lafferty, J., Williams, C., Shawe-Taylor, J., Zemel, R., Culotta, A. (eds.) Adv. Neural Inf. Process. Syst., vol. 23. Curran Associates Inc., USA (2010)

Shalit, U., Weinshall, D., Chechik, G.: Online learning in the embedded manifold of low-rank matrices. J. Mach. Learn. Res. 13, 429–458 (2012)

Simoncini, V.: Computational methods for linear matrix equations. SIAM Rev. 58(3), 377–441 (2016). https://doi.org/10.1137/130912839

Steihaug, T.: The conjugate gradient method and trust regions in large scale optimization. SIAM J. Numer. Anal. 20(3), 626–637 (1983). https://doi.org/10.1137/0720042

Steinlechner, M.: Riemannian optimization for high-dimensional tensor completion. SIAM J. Sci. Comput. 38(5), S461–S484 (2016)

Sutti, M.: Riemannian algorithms on the Stiefel and the fixed-rank manifold. Ph.D. thesis, University of Geneva (2020). ID: unige:146438

Sutti, M., Vandereycken, B.: RMGLS: A MATLAB algorithm for Riemannian multilevel optimization. Available online (2020). https://doi.org/10.26037/yareta:zara3a5aivcsfk6uhq4oovjxhe

Sutti, M., Vandereycken, B.: Riemannian multigrid line search for low-rank problems. SIAM J. Sci. Comput. 43(3), A1803–A1831 (2021). https://doi.org/10.1137/20M1337430

Toint, P.L.: Towards an efficient sparsity exploiting Newton method for minimization. In: Sparse Matrices and Their Uses, pp. 57–88. Academic Press, London, England (1981)

Ueckermann, M., Lermusiaux, P., Sapsis, T.: Numerical schemes for dynamically orthogonal equations of stochastic fluid and ocean flows. J. Comput. Phys. 233, 272–294 (2013). https://doi.org/10.1016/j.jcp.2012.08.041

Uschmajew, A., Vandereycken, B.: Geometric methods on low-rank matrix and tensor manifolds, chap. 9, pp. 261–313. Springer International Publishing, Cham (2020). https://doi.org/10.1007/978-3-030-31351-7_9

Van Loan, C.F.: The ubiquitous Kronecker product. J. Comput. Appl. Math. 123(1–2), 85–100 (2000)

Vandereycken, B.: Low-rank matrix completion by Riemannian optimization. SIAM J. Optim. 23(2), 1214–1236 (2013). https://doi.org/10.1137/110845768

Vandereycken, B., Vandewalle, S.: A Riemannian optimization approach for computing low-rank solutions of Lyapunov equations. SIAM J. Matrix Anal. Appl. 31(5), 2553–2579 (2010). https://doi.org/10.1137/090764566

Wen, Z., Goldfarb, D.: A line search multigrid method for large-scale nonlinear optimization. SIAM J. Optim. 20(3), 1478–1503 (2009). https://doi.org/10.1137/08071524X

Yang, X., Feng, J.J., Liu, C., Shen, J.: Numerical simulations of jet pinching-off and drop formation using an energetic variational phase-field method. J. Comput. Phys. 218(1), 417–428 (2006). https://doi.org/10.1016/j.jcp.2006.02.021

Yoon, S., Jeong, D., Lee, C., Kim, H., Kim, S., Lee, H.G., Kim, J.: Fourier-spectral method for the phase-field equations. Mathematics 8(8), 1385 (2020). https://doi.org/10.3390/math8081385

Acknowledgements

The authors would like to thank the anonymous referee whose valuable comments contributed to improving an earlier version of this paper. M.S. would also like to thank Jhih-Huang Li for the occasional discussions, which significantly contributed to overcoming obstacles encountered while working on this project.

Funding

Open access funding provided by University of Geneva. The work of M.S. was supported by the National Center for Theoretical Sciences and the National Science and Technology Council of Taiwan (R.O.C.), under the contracts 111-2124-M-002-014- and 112-2124-M-002-009-. The work of B.V. was supported by the Swiss National Science Foundation (grant number 192129).


Ethics declarations

Conflict of interest

The authors confirm that the manuscript has been read and approved by all named authors and that there are no other persons who satisfied the criteria for authorship but are not listed. The authors further confirm that the order of authors listed in the manuscript has been approved by all of them. The authors also hereby certify that there is no actual or potential conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Low-Rank Formats for the Allen–Cahn Equation (ACE)

A.1 Objective Functional

Discretizing (16) as in [69, §5.2.1], we obtain

To obtain the factored format of the discretized objective functional, we consider the factorizations \( W = U\varSigma V^{\top }\), and \( {\widetilde{W}} = {\widetilde{U}} {\widetilde{\varSigma }} {\widetilde{V}}^{\top }\).

The first and fourth terms in (22) have the same factorized form as in [69, §5.2.1]. The only difference comes from the periodic boundary conditions adopted here: the matrix L that discretizes the first-order derivatives (Footnote 3) with periodic boundary conditions becomes

Note the unit entry in the lower-left corner, which accounts for the periodicity. The reader can easily verify that \( A = L^{\top }L \), where A is the matrix (13) obtained by discretizing the Laplacian with central finite differences and periodic boundary conditions. We recall from [69] that, given this matrix, the first-order derivatives of W can be computed as
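The identity \( A = L^{\top }L \) can be checked mechanically. The Python sketch below assumes unit grid spacing, so that L has −1 on the diagonal, +1 on the superdiagonal, and +1 in the lower-left corner; with spacing \( h_{x} \), L carries a factor \( 1/h_{x} \) and A a factor \( 1/h_{x}^{2} \). The product is the periodic stencil [−1, 2, −1] with −1 in both corners, a positive semidefinite matrix. Function names are hypothetical.

```python
def periodic_forward_difference(n):
    """L (unit spacing): -1 on the diagonal, +1 on the superdiagonal and lower-left corner."""
    L = [[0] * n for _ in range(n)]
    for i in range(n):
        L[i][i] = -1
        L[i][(i + 1) % n] = 1     # i = n-1 gives the lower-left corner entry L[n-1][0]
    return L


def gram(L):
    """L^T L with exact integer arithmetic."""
    n = len(L)
    return [[sum(L[k][i] * L[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def periodic_stencil(n):
    """Periodic [-1, 2, -1] matrix with -1 in the two corners (n >= 3)."""
    A = [[0] * n for _ in range(n)]
    for i in range(n):
        A[i][i] = 2
        A[i][(i + 1) % n] = -1
        A[i][(i - 1) % n] = -1
    return A


n = 6
A = gram(periodic_forward_difference(n))
# A == periodic_stencil(n)
```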

For the second term in (22), we have

For the third term, it is easier to consider the full-rank format

We set \( {\widetilde{G}} = {\widetilde{U}}{\widetilde{\varSigma }} \) and \( G = U \varSigma \). Finally, the discretized objective functional in factorized matrix form is

Table 7 summarizes the asymptotic complexities for the ACE cost function. In this and the following similar tables, we indicate the sizes of the matrices in the order in which they appear in the product. If all the matrices in a term are the same size, we only indicate that once. Matrices without any specific structure are stored as dense, unless otherwise specified.

A.2 Gradient

The gradient of \( {{\mathcal {F}}}\) (16) is the variational derivative

The discretized Euclidean gradient in matrix form is given by

with A as in (13).

For the term \( W^{\circ 2} = W \odot W \), we perform the element-wise multiplication in factorized form as explained in [39, §7] and store the result in the format \( U_{\circ 2} \varSigma _{\circ 2} V_{\circ 2}^{\top }\), i.e.,

where \( *^{\top }\) denotes a transposed variant of the Khatri–Rao product [31]. Then for \( W^{\circ 3} = W \odot W \odot W \) we consider the factorized format
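The transposed Khatri–Rao product is the row-wise Kronecker (face-splitting) product: row i of \( U_{1} *^{\top } U_{2} \) is the Kronecker product of the ith rows of \( U_{1} \) and \( U_{2} \). With it, \( (U_{1}\varSigma _{1}V_{1}^{\top }) \odot (U_{2}\varSigma _{2}V_{2}^{\top }) = (U_{1} *^{\top } U_{2})(\varSigma _{1}\otimes \varSigma _{2})(V_{1} *^{\top } V_{2})^{\top } \), so the rank grows from r to \( r^{2} \) for \( W^{\circ 2} \) and to \( r^{3} \) for \( W^{\circ 3} \). The Python sketch below verifies this identity on small hypothetical factors; it does not re-orthogonalize or recompress the factors, which a practical implementation such as [39] may do. Function names are hypothetical.

```python
def row_kron(X, Y):
    """Transposed Khatri-Rao (face-splitting) product: row i is kron(X[i], Y[i])."""
    return [[x * y for x in xr for y in yr] for xr, yr in zip(X, Y)]


def hadamard_factored(U1, s1, V1, U2, s2, V2):
    """Factors of (U1 diag(s1) V1^T) o (U2 diag(s2) V2^T); the rank becomes r1 * r2."""
    s = [a * b for a in s1 for b in s2]          # diagonal of kron(Sigma1, Sigma2)
    return row_kron(U1, U2), s, row_kron(V1, V2)


def assemble(U, s, V):
    """Dense U diag(s) V^T."""
    return [[sum(U[i][k] * s[k] * V[j][k] for k in range(len(s))) for j in range(len(V))]
            for i in range(len(U))]


# Hypothetical rank-2 factors of a 3-by-4 matrix W.
U = [[0.5, 1.0], [-1.0, 0.25], [2.0, 0.0]]
s = [3.0, 0.5]
V = [[1.0, 0.0], [0.5, -1.0], [0.0, 2.0], [-0.25, 1.0]]
W = assemble(U, s, V)

U2, s2, V2 = hadamard_factored(U, s, V, U, s, V)      # W o W, rank 4
U3, s3, V3 = hadamard_factored(U2, s2, V2, U, s, V)   # W o W o W, rank 8
```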

Substituting the formats \( W = U\varSigma V^{\top }\), \( W^{\circ 3} = U_{\circ 3}\varSigma _{\circ 3}V_{\circ 3}^{\top }\), and \( {\widetilde{W}} = {\widetilde{U}} {\widetilde{\varSigma }} {\widetilde{V}}^{\top }\), we get the factorized form of the Euclidean gradient \( G = U_{G} \varSigma _{G} V_{G}^{\top }\), where

and

The gradient G is just an augmented matrix, analogously to the discretized gradient in factored format for the NPDE problem; see [69, §5.2.2]. The operations needed to form G are summarized in Table 8.

A.3 Hessian

The discretized Euclidean Hessian is (compare [68, §7.4.2.3])

The factored form of the discretized Euclidean Hessian is \( H_{W}[\eta ] = U_{H_{W}[\eta ]} S_{H_{W}[\eta ]} V_{H_{W}[\eta ]}^{\top }\), with

where \( \eta = U_{\eta } S_{\eta } V_{\eta }^{\top }\) is a tangent vector in \( \textrm{T}_{W}{{\mathcal {M}}}_{r}\), and \( W^{\circ 2} \odot \eta = U_{\odot } \varSigma _{\odot } V_{\odot }^{\top }\) (Table 9).

Remark 1

We have the following relationships between the cost function, the gradient, and their discretized counterparts:

i.e., discretization and differentiation commute: discretizing \( {{\mathcal {F}}}\) to obtain F and then taking the Euclidean gradient of F gives G, which is the same as computing the variational derivative \( \frac{\delta {{\mathcal {F}}}}{\delta w} \) first and then discretizing it.

Appendix B: Low-Rank Formats for the Fisher–KPP Equation (FKPPE)

B.1 Cost Function

The FKPPE cost function (21) involves the quantities W, \(W^{(n)}\), and \(W^{(n-1)}\). In low-rank matrix format, these are factorized as

where all the U and V factors have size n-by-r, while the \( \varSigma \) factors are stored as sparse diagonal r-by-r matrices. As in Appendix A for the Allen–Cahn equation, the square Hadamard power of \( W^{(n)} \) is factorized as \( \big ( W^{(n)} \big )^{\circ 2} = U_{\circ 2}\varSigma _{\circ 2}V_{\circ 2}^{\top }\), where \( U_{\circ 2} \), \( V_{\circ 2} \in {\mathbb {R}}^{n \times r^{2}} \), and \( \varSigma _{\circ 2} \) is a sparse diagonal \( r^{2} \)-by-\( r^{2} \) matrix.

We denote the products \( G_{W} = U\varSigma \), \( G^{(n)} = U^{(n)} \varSigma ^{(n)} \), \( G^{(n-1)} = U^{(n-1)} \varSigma ^{(n-1)} \), and \( G_{\odot } = U_{\odot } \varSigma _{\odot } \). We point out that, since \( M_{\textrm{m}} \) is tridiagonal, \( M_{\textrm{m}}^{\top }M_{\textrm{m}} \) is a symmetric sparse banded (pentadiagonal) matrix with bandwidth 2. Hence, its number of nonzero elements is \( 2(n-2) + 2(n-1)+ n = 5n - 6 \ll n^{2} \), which allows for efficient matrix-matrix products.
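The nonzero count can be checked directly on a small example. The Python sketch below assumes, for illustration only, that \( M_{\textrm{m}} = I - hA \) is tridiagonal with one-sided Neumann first and last rows, and writes \( k = h/h_{x}^{2} \); the count \( 5n - 6 \) relies only on \( M_{\textrm{m}} \) being tridiagonal with nonzero off-diagonal entries, and for k > 0 no cancellations occur. The dense product is formed only for this tiny structural check; function names are hypothetical.

```python
def build_m_minus(n, k):
    """Dense M_m = I - hA with k = h / h_x**2 and assumed one-sided Neumann rows."""
    M = [[0.0] * n for _ in range(n)]
    for i in range(n):
        M[i][i] = 1.0 + 2.0 * k
        if i > 0:
            M[i][i - 1] = -k
        if i < n - 1:
            M[i][i + 1] = -k
    M[0][0] = M[n - 1][n - 1] = 1.0 + k
    return M


def gram(M):
    """M^T M, formed densely only for this small structural check."""
    n = len(M)
    return [[sum(M[l][i] * M[l][j] for l in range(n)) for j in range(n)] for i in range(n)]


n = 8
G = gram(build_m_minus(n, k=0.5))
nonzeros = [(i, j) for i in range(n) for j in range(n) if G[i][j] != 0.0]
nnz = len(nonzeros)                                  # expected 5n - 6 = 34
bandwidth = max(abs(i - j) for i, j in nonzeros)     # expected 2
```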

Refer to Table 10 for details on the computational costs for evaluating (21) in low-rank format.

B.2 Gradient

The Euclidean gradient of the FKPPE cost function F(W) is

In low-rank format we have \( G = U_{G} \varSigma _{G} V_{G}^{\top }\), whose factors are

Table 11 summarizes the asymptotic complexities for the FKPPE factorized gradient.

B.3 Hessian

The discretized Euclidean Hessian of (21) is

The factored form of the discretized Euclidean Hessian is

where \( \eta = U_{\eta } S_{\eta } V_{\eta }^{\top }\) is a tangent vector in \( \textrm{T}_{W}{{\mathcal {M}}}_{r}\) in an SVD-like format. The only operation needed is the product \( \big ( M_{\textrm{m}}^{\top }M_{\textrm{m}} \big ) U_{\eta } \), whose cost is \( {{\mathcal {O}}}(nr) \) thanks to the banded structure of \( M_{\textrm{m}}^{\top }M_{\textrm{m}} \).
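By associativity, \( (M_{\textrm{m}}^{\top }M_{\textrm{m}})\,U_{\eta } S_{\eta } V_{\eta }^{\top } = \big [(M_{\textrm{m}}^{\top }M_{\textrm{m}})U_{\eta }\big ] S_{\eta } V_{\eta }^{\top } \), so only the left factor changes. Applying the banded matrix as a tridiagonal product followed by its transpose costs O(nr). The Python sketch below assumes \( M_{\textrm{m}} \) is stored by its three diagonals; the diagonals in the demo are hypothetical and deliberately nonsymmetric so that the transpose is exercised, and a dense product is formed only to check the result. Function names are hypothetical.

```python
def tri_apply(sub, diag, sup, X):
    """Y = T X for tridiagonal T with T[i][i-1] = sub[i], T[i][i+1] = sup[i]; X is n-by-r."""
    n, r = len(X), len(X[0])
    Y = [[diag[i] * X[i][j] for j in range(r)] for i in range(n)]
    for i in range(n):
        for j in range(r):
            if i > 0:
                Y[i][j] += sub[i] * X[i - 1][j]
            if i < n - 1:
                Y[i][j] += sup[i] * X[i + 1][j]
    return Y


def tri_apply_transpose(sub, diag, sup, X):
    """Y = T^T X, using (T^T)[i][i-1] = sup[i-1] and (T^T)[i][i+1] = sub[i+1]."""
    n = len(diag)
    sub_t = [0.0] + [sup[i - 1] for i in range(1, n)]
    sup_t = [sub[i + 1] for i in range(n - 1)] + [0.0]
    return tri_apply(sub_t, diag, sup_t, X)


def hessian_left_factor(sub, diag, sup, U_eta):
    """(M_m^T M_m) U_eta in O(n r); S_eta and V_eta of the tangent vector are reused as-is."""
    return tri_apply_transpose(sub, diag, sup, tri_apply(sub, diag, sup, U_eta))


sub = [0.0, -0.5, -0.25, -1.0]
diag = [1.5, 2.0, 2.0, 1.5]
sup = [-0.5, -0.75, -0.5, 0.0]
U_eta = [[1.0, 0.0], [0.5, 1.0], [0.0, -1.0], [2.0, 0.5]]
left = hessian_left_factor(sub, diag, sup, U_eta)

# Dense check on this tiny example only.
n = len(diag)
Mm = [[0.0] * n for _ in range(n)]
for i in range(n):
    Mm[i][i] = diag[i]
    if i > 0:
        Mm[i][i - 1] = sub[i]
    if i < n - 1:
        Mm[i][i + 1] = sup[i]
dense = [[sum(Mm[l][i] * Mm[l][m] * U_eta[m][j] for l in range(n) for m in range(n))
          for j in range(2)] for i in range(n)]
```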

Appendix C: Derivation of the Preconditioner

As mentioned in Sect. 4, the tCG trust-region subsolver can be preconditioned with the inverse of (10). However, inverting the matrix \( H_{X} \) directly would be too expensive: when \( H_{X} \) is represented as an \(n^{2}\)-by-\(n^{2}\) matrix acting on vectorized n-by-n matrices, a dense inversion costs \({{\mathcal {O}}}((n^{2})^{3}) = {{\mathcal {O}}}(n^{6})\). A suitable preconditioner avoids this cost while still reducing the number of iterations required by the tCG solver. This appendix provides the derivation of such a preconditioner.

C.1 Applying the Preconditioner

In practice, applying the preconditioner at \( X \in {{\mathcal {M}}}_{r}\) means solving, without explicitly inverting the matrix, for \( \xi \in \textrm{T}_{X}{{\mathcal {M}}}_{r}\) the system

where \( H_{X} \) is defined in (10) and \(\eta \in \textrm{T}_{X}{{\mathcal {M}}}_{r}\) is a known tangent vector. This equation is equivalent to

Using definition (7) of the orthogonal projector onto \( \textrm{T}_{X}{{\mathcal {M}}}_{r}\), we obtain

which is equivalent to the system

The main difference with respect to [75] is that, here, the tangent vectors are in general not symmetric. Using the matrix representations (6) of the tangent vectors \( \xi \) and \( \eta \) at \( X = U\varSigma V^{\top }\)

with \(M_{\xi } \in {\mathbb {R}}^{r \times r}\), \(U_{\textrm{p}}^{\xi }\in {\mathbb {R}}^{m\times r}\), and \(V_{\textrm{p}}^{\xi }\in {\mathbb {R}}^{n \times r}\) such that \((U_{\textrm{p}}^{\xi })^{\top }U = (V_{\textrm{p}}^{\xi })^{\top }V = 0\). Analogously, for the tangent vector \(\eta \), we have \(M_{\eta } \in {\mathbb {R}}^{r \times r}\), \( U_{\textrm{p}}^{\eta }\in {\mathbb {R}}^{m\times r}\), and \(V_{\textrm{p}}^{\eta }\in {\mathbb {R}}^{n \times r}\) such that \((U_{\textrm{p}}^{\eta })^{\top }U = (V_{\textrm{p}}^{\eta })^{\top }V = 0\). These orthogonality constraints are also called gauging conditions [72, §9.2.3].
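For experimentation, the standard orthogonal projection onto \( \textrm{T}_{X}{{\mathcal {M}}}_{r}\) (see, e.g., [74]) produces exactly such gauged components from an arbitrary m-by-n matrix Z: \( M = U^{\top } Z V \), \( U_{\textrm{p}} = (I - UU^{\top }) Z V \), and \( V_{\textrm{p}} = (I - VV^{\top }) Z^{\top } U \). The Python sketch below, on hypothetical small U and V with orthonormal columns, checks the gauging conditions and that projecting the assembled tangent vector returns the same components. Function names are hypothetical.

```python
import math


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    return [list(col) for col in zip(*A)]


def minus(A, B):
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def plus(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def tangent_components(U, V, Z):
    """Components (M, Up, Vp) of the projection of Z onto T_X M_r at X = U Sigma V^T."""
    ZV = matmul(Z, V)                          # m-by-r
    ZtU = matmul(transpose(Z), U)              # n-by-r
    M = matmul(transpose(U), ZV)               # U^T Z V, r-by-r
    Up = minus(ZV, matmul(U, M))               # (I - U U^T) Z V
    Vp = minus(ZtU, matmul(V, transpose(M)))   # (I - V V^T) Z^T U
    return M, Up, Vp


def assemble_tangent(U, V, M, Up, Vp):
    """xi = U M V^T + Up V^T + U Vp^T."""
    Vt = transpose(V)
    return plus(plus(matmul(matmul(U, M), Vt), matmul(Up, Vt)), matmul(U, transpose(Vp)))


c = 1.0 / math.sqrt(2.0)
U = [[1.0, 0.0], [0.0, c], [0.0, c]]                  # 3-by-2, orthonormal columns
V = [[c, 0.0], [c, 0.0], [0.0, 1.0], [0.0, 0.0]]      # 4-by-2, orthonormal columns
Z = [[1.0, 2.0, 0.0, -1.0], [3.0, -1.0, 2.0, 0.5], [0.0, 1.0, -2.0, 4.0]]

M, Up, Vp = tangent_components(U, V, Z)
xi = assemble_tangent(U, V, M, Up, Vp)
```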

After some manipulations (see Appendix C.3), system (25) can be written as

where \( M_{\xi } \), \( U_{\textrm{p}}^{\xi }\), and \( V_{\textrm{p}}^{\xi }\) are the unknown matrices.

System (26) is solved as follows. From the second and third equations of (26), we express \( U_{\textrm{p}}^{\xi }\) and \( V_{\textrm{p}}^{\xi }\) in terms of \( M_{\xi } \); we then insert these expressions into the first equation and solve for \( M_{\xi } \).

We introduce orthogonal matrices Q and \( {\widetilde{Q}}\) to diagonalize \( U^{\top }\! A U \) and \( V^{\top }\! A V \), respectively,
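In MATLAB, such a Q can be obtained with eig. As a self-contained illustration only, the Python sketch below computes an orthogonal Q with \( Q^{\top } S Q \) diagonal by the cyclic Jacobi method, a swapped-in technique not necessarily used in the paper, where S stands for the small symmetric r-by-r matrix \( U^{\top }\! A U \) or \( V^{\top }\! A V \). Since r is small, this cost is negligible compared with operations on the n-by-r factors. The function name and the test matrix are hypothetical.

```python
import math


def jacobi_eigh(S, tol=1e-12, max_sweeps=50):
    """Cyclic Jacobi for a small symmetric S: returns (d, Q) with Q^T S Q = diag(d)."""
    n = len(S)
    A = [row[:] for row in S]
    Q = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(max_sweeps):
        off = sum(A[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol ** 2:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if A[p][q] == 0.0:
                    continue
                # Rotation angle that zeroes A[p][q] (smaller root for stability).
                theta = (A[q][q] - A[p][p]) / (2.0 * A[p][q])
                t = (1.0 if theta >= 0 else -1.0) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                # P = identity except P[p][p] = P[q][q] = c, P[p][q] = s, P[q][p] = -s.
                for k in range(n):                  # A <- A P (columns p and q)
                    akp, akq = A[k][p], A[k][q]
                    A[k][p], A[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):                  # A <- P^T A (rows p and q)
                    apk, aqk = A[p][k], A[q][k]
                    A[p][k], A[q][k] = c * apk - s * aqk, s * apk + c * aqk
                for k in range(n):                  # Q <- Q P
                    qkp, qkq = Q[k][p], Q[k][q]
                    Q[k][p], Q[k][q] = c * qkp - s * qkq, s * qkp + c * qkq
    return [A[i][i] for i in range(n)], Q


S = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]   # stands in for U^T A U
d, Q = jacobi_eigh(S)
n = len(S)
```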

and use them to define the following matrices

With these transformations, the first equation in (26) becomes (see Appendix C.3.1 for the details)

By using the same transformations, we can also rewrite the second equation in (26) as (see Appendix C.3.2 for the details)

with the condition \( {\widehat{U}}^{\top }{\widehat{U}}_{\textrm{p}}^{\xi }= 0 \). The ith column of this equation is

where \({\widetilde{d}}_{i}\), for \( i = 1, \ldots , r\), are the diagonal entries of \( {\widetilde{D}}\). We rewrite this equation as a saddle-point system

for all \( y \in {\mathbb {R}}^{r} \). This saddle-point system can be efficiently solved with the techniques described in Sect. C.2.

Let us define

The solution of (30) is given by

where the notation (1 : n) means that we keep only the first n entries of the vector. In other words, we have

Here, \({\mathcal {T}}_{i}^{-1}\) denotes solving for \( {\widehat{U}}_{\textrm{p}}^{\xi }(:,i) \) the ith saddle-point system, corresponding to (30).
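To make the notation (1 : n) concrete, the sketch below assembles the ith saddle-point system as a sparse block matrix, solves it, and keeps only the first n entries of the solution. It assumes, consistently with the Schur complement \( S_{i} \) of Sect. C.2, that the system matrix has the block form \( \begin{bmatrix} A + {\widetilde{d}}_{i}I & {\widehat{U}}\\ {\widehat{U}}^{\top } & 0 \end{bmatrix} \); the test matrix, the right-hand side b, and the function name are hypothetical. The final check confirms that the result satisfies the constraint \( {\widehat{U}}^{\top } x = 0 \) and the projected equation \( P_{U}^{\perp } (A + {\widetilde{d}}_{i}I) x = P_{U}^{\perp } b \).

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import spsolve

def saddle_solve_assembled(A, U_hat, d, b):
    """Solve [[A + d I, U_hat], [U_hat^T, 0]] [x; y] = [b; 0] and return x only,
    i.e., entries (1:n) of the solution vector; y is the Lagrange multiplier."""
    n, r = U_hat.shape
    Ad = (A + d * sp.identity(n, format="csr")).tocsr()
    Ub = sp.csr_matrix(U_hat)
    kkt = sp.bmat([[Ad, Ub], [Ub.T, None]], format="csc")   # (n + r) x (n + r)
    rhs = np.concatenate([b, np.zeros(r)])
    sol = spsolve(kkt, rhs)
    return sol[:n]

rng = np.random.default_rng(2)
n, r, d = 40, 3, 0.5
A = sp.diags([-1.0, 2.0, -1.0], [-1, 0, 1], shape=(n, n), format="csr")  # hypothetical A
U_hat, _ = np.linalg.qr(rng.standard_normal((n, r)))
b = rng.standard_normal(n)                                              # placeholder right-hand side

x = saddle_solve_assembled(A, U_hat, d, b)
P_perp = np.eye(n) - U_hat @ U_hat.T
print(np.allclose(U_hat.T @ x, 0))                          # constraint
print(np.allclose(P_perp @ (A @ x + d * x), P_perp @ b))    # projected equation
```

Assembling the full \( (n + r) \)-by-\( (n + r) \) matrix is convenient for checking; the Schur complement approach of Sect. C.2 avoids it by eliminating the first block.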

For the third equation in (26), we proceed analogously; see Appendix C.3.3 for the details. After some manipulations, we obtain the saddle-point system

for all \( z \in {\mathbb {R}}^{r} \). The solution is

Here, \(\widetilde{{\mathcal {T}}}_{i}^{-1}\) denotes solving for \( {\widehat{V}}_{\textrm{p}}^{\xi }(:,i) \) the ith saddle-point system, corresponding to (32).

We now go back to the first equation in (26), in its form given in (28). To treat the term \( {\widehat{U}}^{\top }\! A {\widehat{U}}_{\textrm{p}}^{\xi }\) appearing in (28), let us define the vectors

We emphasize that the vector \( v_{i} \) is known, while \( w_{i} \) is not, because \( {\widehat{M}}_{\xi }\) is unknown. With these definitions and (31), one can verify that

Similarly, to treat the term \( {\widehat{V}}^{\top }\! A {\widehat{V}}_{\textrm{p}}^{\xi }\), we define the vectors

then

Inserting (34) and (35) into (28), we obtain

The vectors \( w_{i} \) and \( {\widetilde{w}}_{i} \) contain the unknown matrix \( {\widehat{M}}_{\xi }\), so we keep them on the left-hand side; since \( v_{i} \) and \( {\widetilde{v}}_{i} \) are known, we move them to the right-hand side. Defining \( K_{i} :={\widetilde{d}}_{i}I_{r} - {\widehat{U}}^{\top }\! A {\mathcal {T}}_{i}^{-1} \big ( P_{U}^{\perp } A {\widehat{U}}\big ) \) and \( {\widetilde{K}}_{i}:=d_{i} I_{r} - {\widehat{V}}^{\top }\! A \widetilde{{\mathcal {T}}}_{i}^{-1} \big ( P_{V}^{\perp } A {\widehat{V}}\big ) \) for \( i = 1, \ldots , r \), we get

where the matrix on the right-hand side is defined by

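Each \( K_{i} \) can be formed with r saddle-point solves, one per column of \( P_{U}^{\perp } A {\widehat{U}} \). The dense sketch below assumes the same block form of \( {\mathcal {T}}_{i} \) as above, uses \( P_{U}^{\perp } = I - {\widehat{U}}{\widehat{U}}^{\top } \) (valid because \( {\widehat{U}} \) and U span the same space), and takes \( {\widetilde{d}}_{i} \) from the eigenvalues of \( V^{\top } A V \). The matrix A and the function names are hypothetical; \( {\widetilde{K}}_{i} \) would follow analogously with \( {\widehat{V}} \), \( d_{i} \), and \( \widetilde{{\mathcal {T}}}_{i} \), under the corresponding assumption on its block form.

```python
import numpy as np

def saddle_solve(A, U_hat, d, B):
    """Dense sketch of T^{-1}: for each column b of B, solve
    [[A + d I, U_hat], [U_hat^T, 0]] [x; y] = [b; 0] and keep x."""
    n, r = U_hat.shape
    kkt = np.block([[A + d * np.eye(n), U_hat],
                    [U_hat.T, np.zeros((r, r))]])
    rhs = np.vstack([B, np.zeros((r, B.shape[1]))])
    return np.linalg.solve(kkt, rhs)[:n, :]

def K_block(A, U_hat, d_tilde_i):
    """K_i = d_tilde_i I_r - U_hat^T A T_i^{-1}(P_U^perp A U_hat)."""
    r = U_hat.shape[1]
    AU = A @ U_hat
    B = AU - U_hat @ (U_hat.T @ AU)            # P_U^perp A U_hat, with P_U^perp = I - U_hat U_hat^T
    X = saddle_solve(A, U_hat, d_tilde_i, B)   # r saddle-point solves, one per column of B
    return d_tilde_i * np.eye(r) - U_hat.T @ (A @ X)

rng = np.random.default_rng(5)
n, r = 30, 2
A = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)   # hypothetical symmetric A
U, _ = np.linalg.qr(rng.standard_normal((n, r)))
V, _ = np.linalg.qr(rng.standard_normal((n, r)))
d, Q = np.linalg.eigh(U.T @ A @ U)
d_tilde, _ = np.linalg.eigh(V.T @ A @ V)                  # d_tilde_i: diagonal entries of D_tilde
U_hat = U @ Q
Ks = [K_block(A, U_hat, d_tilde[i]) for i in range(r)]    # K_1, ..., K_r, each r x r
```

Since every \( K_{i} \) is only r-by-r, the dominant cost is the saddle-point solves, not the small blocks themselves.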
Now, we need to isolate \( {\widehat{M}}_{\xi }\) in (36). We vectorize the first term on the left-hand side of (36) and get

where \( {{\mathcal {K}}}= {{\,\textrm{blkdiag}\,}}(K_{1}, \ldots , K_{r}) \), a block-diagonal matrix with the \( K_{i} \) on the main diagonal. Vectorizing the second term on the left-hand side of (36), we obtain

where \( \widetilde{{\mathcal {K}}}= {{\,\textrm{blkdiag}\,}}({\widetilde{K}}_{1}, \ldots , {\widetilde{K}}_{r}) \in {\mathbb {R}}^{r^{2} \times r^{2}} \) is a block-diagonal matrix, and \(\varPi \) is the perfect shuffle matrix defined by \( {{\,\textrm{vec}\,}}(X^{\top }) = \varPi {{\,\textrm{vec}\,}}(X) \) [28, 73]. Wrapping it up, from (36) we obtain the vectorized equation

The matrix \( {{\mathcal {K}}}+ \varPi \widetilde{{\mathcal {K}}}\varPi \) is of size \(r^{2}\)-by-\(r^{2}\), so the system can be solved directly at low cost when the rank r is small (a dense factorization costs \({{\mathcal {O}}}(r^{6})\) operations). We solve this equation for \( {\widehat{M}}_{\xi }\) and then use (31) and (33) to find \( {\widehat{U}}_{\textrm{p}}^{\xi }\) and \( {\widehat{V}}_{\textrm{p}}^{\xi }\), respectively. Finally, undoing the transformations by Q and \( {\widetilde{Q}}\), we find the components of \(\xi \)

and thus the tangent vector \( \xi \) such that (24) is satisfied.
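The vectorization step can be checked numerically. The sketch below builds the perfect shuffle \( \varPi \) for r-by-r matrices, solves \( ({{\mathcal {K}}}+ \varPi \widetilde{{\mathcal {K}}}\varPi ) {{\,\textrm{vec}\,}}({\widehat{M}}_{\xi }) = {{\,\textrm{vec}\,}}(R) \) with a dense solve, reshapes the result, and verifies that \( {{\mathcal {K}}} \) applies \( K_{i} \) to the ith column of \( {\widehat{M}}_{\xi } \), while \( \varPi \widetilde{{\mathcal {K}}}\varPi \) applies \( {\widetilde{K}}_{i} \) to its ith row. The blocks and the right-hand side R are random placeholders, not the matrices of the paper, and vec is taken column-major, as in MATLAB.

```python
import numpy as np
from scipy.linalg import block_diag

def vec(X):
    """Column-major vectorization, stacking the columns of X."""
    return X.reshape(-1, order="F")

def perfect_shuffle(r):
    """Permutation matrix Pi such that vec(X^T) = Pi vec(X) for r x r matrices X."""
    Pi = np.zeros((r * r, r * r))
    for i in range(r):
        for j in range(r):
            # X[i, j] is entry i + j*r of vec(X) and entry j + i*r of vec(X^T)
            Pi[j + i * r, i + j * r] = 1.0
    return Pi

def solve_core(Ks, Kts, R):
    """Solve (blkdiag(Ks) + Pi blkdiag(Kts) Pi) vec(M) = vec(R) for the r x r matrix M."""
    r = R.shape[0]
    Pi = perfect_shuffle(r)
    L = block_diag(*Ks) + Pi @ block_diag(*Kts) @ Pi     # r^2 x r^2
    return np.linalg.solve(L, vec(R)).reshape((r, r), order="F")

rng = np.random.default_rng(6)
r = 3
Ks = [rng.standard_normal((r, r)) + 5.0 * np.eye(r) for _ in range(r)]   # placeholder K_i
Kts = [rng.standard_normal((r, r)) + 5.0 * np.eye(r) for _ in range(r)]  # placeholder K~_i
R = rng.standard_normal((r, r))                                          # placeholder right-hand side
M_hat = solve_core(Ks, Kts, R)

# Matrix form of the same equation: K_i acts on column i, K~_i on row i
col_part = np.column_stack([Ks[i] @ M_hat[:, i] for i in range(r)])
row_part = np.vstack([Kts[i] @ M_hat[i, :] for i in range(r)])
print(np.allclose(col_part + row_part, R))
```

For square matrices \( \varPi \) is a symmetric involution, so \( \varPi ^{-1} = \varPi \), which is why the same matrix appears on both sides of \( \widetilde{{\mathcal {K}}} \).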

C.2 Efficient Solution of the Saddle-Point System

We use a Schur complement approach, following the techniques described in [8], to solve the saddle-point problem (30) efficiently, that is, to apply \( {\mathcal {T}}_{i}^{-1} \). Let

The system \( {\mathcal {T}}_{i}(X) = B_{i} \) can be solved for X by block elimination, which involves the (negative) Schur complement \( S_{i} = {\widehat{U}}^{\top }(A + {\widetilde{d}}_{i}I)^{-1} {\widehat{U}}\). This gives

We solve the small system (38) with a Cholesky factorization of \( S_{i} \), which is symmetric positive definite whenever \( A + {\widetilde{d}}_{i}I \) is. For (39), we compute \( (A + {\widetilde{d}}_{i}I)^{-1} B_{i} \) and \( (A + {\widetilde{d}}_{i}I)^{-1} {\widehat{U}}\) with a sparse solver, for example, MATLAB backslash \( (A + {\widetilde{d}}_{i}I) \backslash B_{i} \) and \( (A + {\widetilde{d}}_{i}I) \backslash {\widehat{U}}\). Equation (37) is a linear system of size \( r^{2} \).
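A minimal SciPy sketch of this block elimination follows. A sparse LU factorization (`scipy.sparse.linalg.splu`) plays the role of MATLAB backslash, and `scipy.linalg.cho_factor`/`cho_solve` handle the small Schur complement. It assumes the block form \( \begin{bmatrix} A + {\widetilde{d}}_{i}I & {\widehat{U}}\\ {\widehat{U}}^{\top } & 0 \end{bmatrix} \) for \( {\mathcal {T}}_{i} \), with \( A + {\widetilde{d}}_{i}I \) symmetric positive definite; the test matrix and the function name are hypothetical.

```python
import numpy as np
import scipy.sparse as sp
from scipy.linalg import cho_factor, cho_solve
from scipy.sparse.linalg import splu

def saddle_solve_schur(A, U_hat, d, B):
    """Solve [[A + d I, U_hat], [U_hat^T, 0]] [X; Y] = [B; 0] by block elimination.

    A: sparse n x n, U_hat: dense n x r with orthonormal columns, B: dense n x k.
    Returns (X, Y), where Y holds the Lagrange multipliers.
    """
    n = A.shape[0]
    lu = splu((A + d * sp.identity(n)).tocsc())   # factorize A + d I once, reuse for both solves
    AinvB = lu.solve(B)                           # (A + d I)^{-1} B
    AinvU = lu.solve(U_hat)                       # (A + d I)^{-1} U_hat
    S = U_hat.T @ AinvU                           # Schur complement, r x r, SPD here
    Y = cho_solve(cho_factor(S), U_hat.T @ AinvB) # small Cholesky solve for the multipliers
    X = AinvB - AinvU @ Y                         # back-substitution for the primal block
    return X, Y

rng = np.random.default_rng(4)
n, r, k, d = 60, 3, 2, 0.7
A = sp.diags([-1.0, 2.0, -1.0], [-1, 0, 1], shape=(n, n), format="csr")  # hypothetical SPD A
U_hat, _ = np.linalg.qr(rng.standard_normal((n, r)))
B = rng.standard_normal((n, k))                                         # placeholder right-hand sides
X, Y = saddle_solve_schur(A, U_hat, d, B)
```

Reusing one factorization of \( A + {\widetilde{d}}_{i}I \) for both right-hand sides keeps the cost per index i close to that of a single sparse solve with r + k columns.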

C.3 Algebraic Manipulations

C.3.1 First Equation in (25)

The first equation in system (25) becomes

Using the gauging conditions \( (U_{\textrm{p}}^{\xi })^{\top }U = (V_{\textrm{p}}^{\xi })^{\top }V = 0 \) and \( (U_{\textrm{p}}^{\eta })^{\top }U = (V_{\textrm{p}}^{\eta })^{\top }V = 0 \), we obtain

Left-multiplying by \( U^{\top }\) and right-multiplying by V, we get the first equation in (26), i.e.,

With the transformations introduced in (27), it becomes

which is the first equation in system (26) in the form (28).

C.3.2 Second Equation in (25)

Analogously for the second equation in (25), i.e.,

we obtain

then, using \( P_{U}^{\perp } = I - UU^{\top }\),

Right-multiplying by V, we get the second equation in system (26), i.e.,

With the transformations introduced in (27), this becomes

which is the second equation in (26) in the form (29).

C.3.3 Third Equation in (25)

The third equation in (25)

becomes

Using the gauging conditions \( (U_{\textrm{p}}^{\xi })^{\top }U = (V_{\textrm{p}}^{\xi })^{\top }V = 0 \), we obtain

Left-multiplying by \( U^{\top }\) and noting that \( (V_{\textrm{p}}^{\eta })^{\top }P_{V}^{\perp } = (V_{\textrm{p}}^{\eta })^{\top }\), we obtain the third equation in system (26), namely,

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sutti, M., Vandereycken, B. Implicit Low-Rank Riemannian Schemes for the Time Integration of Stiff Partial Differential Equations. J Sci Comput 101, 3 (2024). https://doi.org/10.1007/s10915-024-02629-8


DOI: https://doi.org/10.1007/s10915-024-02629-8

Keywords

- Implicit methods

- Numerical time integration

- Riemannian optimization

- Stiff PDEs

- Manifold of fixed-rank matrices

- Variational problems

- Preconditioning

- Trust-region method

- Allen–Cahn equation

- Fisher–KPP equation