Abstract

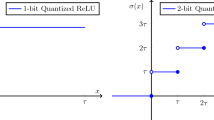

Quantized neural networks are a common way to improve the inference and memory efficiency of deep learning methods. However, training them is a highly nonlinear and nonconvex optimization problem, which makes it challenging to obtain solutions that generalize well. This paper proposes an algorithm for training one-bit quantized neural networks based on sharpness-aware minimization (Foret et al., Sharpness-aware minimization for efficiently improving generalization, 2021) with two types of gradient approximation. The idea is to improve generalization by finding a local minimum that lies in a flat region of the loss landscape of both the continuous and the quantized neural network. The convergence theory is partially established under a one-bit quantization setting. Experiments on the CIFAR-10 (Krizhevsky, Learning multiple layers of features from tiny images, 2009) and SVHN (Netzer et al., Reading digits in natural images with unsupervised feature learning, 2011) datasets show that the proposed algorithm generalizes better than other state-of-the-art quantized training methods.
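The abstract does not spell out the update rule, so the following is only a minimal sketch of how a sharpness-aware step might be combined with one-bit weights. It assumes a toy least-squares loss, sign binarization with zero mapped to +1, a perturbation applied at the binary point, and a straight-through style update of latent float weights. The function names (`binarize`, `loss_and_grad`, `sam_binary_step`) and the parameters `lr` and `rho` are hypothetical and are not taken from the paper, whose two gradient approximations may differ from this one.

```python
import numpy as np

def binarize(w):
    """One-bit quantization: map each weight to +1 or -1 (zero maps to +1)."""
    return np.where(w >= 0, 1.0, -1.0)

def loss_and_grad(w, X, y):
    """Toy least-squares loss 0.5 * mean((Xw - y)^2) and its gradient in w."""
    r = X @ w - y
    return 0.5 * np.mean(r ** 2), X.T @ r / len(y)

def sam_binary_step(w, X, y, lr=0.1, rho=0.05):
    """One SAM-style update of the latent float weights w (assumed variant)."""
    q = binarize(w)
    # Gradient of the loss evaluated at the binary weights.
    _, g = loss_and_grad(q, X, y)
    # SAM ascent direction: move a distance rho along the normalized gradient.
    eps = rho * g / (np.linalg.norm(g) + 1e-12)
    # Gradient at the perturbed point, used as the descent direction.
    _, g_sam = loss_and_grad(q + eps, X, y)
    # Straight-through assumption: apply that gradient to the latent weights,
    # then clip so they stay in [-1, 1].
    return np.clip(w - lr * g_sam, -1.0, 1.0)

# Tiny deterministic example: identity design matrix, target weights (+1, -1).
X = np.eye(2)
y = np.array([1.0, -1.0])
w = np.array([0.5, 0.5])
w_next = sam_binary_step(w, X, y)
```

In this example only the second coordinate moves, because the binary weights already match the target in the first coordinate. The latent weights stay continuous throughout, and `binarize` would be applied again whenever the network is evaluated.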

Data Availability

Enquiries about data availability should be directed to the authors.

References

Ashok, A., Rhinehart, N., Beainy, F., Kitani, K. M.: N2n learning: Network to network compression via policy gradient reinforcement learning. arXiv preprint arXiv:1709.06030 (2017)

Fallah, A., Mokhtari, A., Ozdaglar, A.: On the convergence theory of gradient-based model-agnostic meta-learning algorithms. Proceedings of PMLR 108, 1082–1092 (2020)

Krizhevsky, A.: Learning multiple layers of features from tiny images. Technical Report (2009)

Krizhevsky, A., Sutskever, I., Hinton, G. E.: Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. (2012)

Yazdanbakhsh, A., Elthakeb, A., Pilligundla, P., Mireshghallah, F., Esmaeilzadeh, H.: Releq: An Automatic Reinforcement Learning Approach for Deep Quantization of Neural Networks. arXiv preprint arXiv:1811.01704 (2018)

Tang, C.Z., Kwan, H.K.: Multilayer feedforward neural networks with single powers-of-two weights. IEEE Trans. Signal Process. 41(8), 2724–2727 (1993)

Kingma, D. P., Ba, J.: Adam: A Method for Stochastic Optimization. arXiv e-prints, art. arXiv:1412.6980 (2014)

Fiesler, E., Choudry, A., John Caulfield, H.: Weight discretization paradigm for optical neural networks. Opt. Int Networks 1281, 164–173 (1990)

Park, E., Ahn, J., Yoo, S.: Weighted-Entropy-Based Quantization for Deep Neural Networks. In: Proceedings of CVPR pp. 5456–5464 (2017)

Finn, C., Abbeel, P., Levine, S.: Model-agnostic meta-learning for fast adaptation of deep networks. Proceedings of PMLR 70, 1126–1135 (2017)

Li, F., Zhang, B., Liu, B.: Ternary Weight Networks. preprint, arXiv:1605.04711 (2016)

Lacey, G., Taylor, G. W., Areibi, S.: Stochastic Layer-Wise Precision in Deep Neural Networks. preprint, arXiv:1807.00942 (2018)

Li, H., Xu, Z., Taylor, G., Studer, C., Goldstein, T.: Visualizing the Loss Landscape of Neural Nets. In: NeurIPS (2018)

Liu, H., Simonyan, K., Yang, Y.: Darts: Differentiable Architecture Search. arXiv preprint arXiv:1806.09055 (2018)

Qin, H., Gong, R., Liu, X., Bai, X., Song, J., Sebe, N.: Binary Neural Networks: A Survey. arXiv preprint arXiv:2004.03333v1 (2020)

Hubara, I., Courbariaux, M., Soudry, D., El-Yaniv, R., Bengio, Y.: Binarized Neural Networks. In: NeurIPS (2016)

Lin, J., Rao, Y., Lu, J., Zhou, J.: Runtime Neural Pruning. In: NeurIPS. pp. 2181–2191 (2017)

He, K., Zhang, X., Ren, S., Sun, J.: Deep Residual Learning for Image Recognition. In: Proceedings of CVPR (2016)

Nogueira, K., Penatti, O.A.B., dos Santos, J.A.: Towards better exploiting convolutional neural networks for remote sensing scene classification. Pattern Recogn. 61, 539–556 (2017)

Simonyan, K., Zisserman, A.: Very Deep Convolutional Networks for Large-Scale Image Recognition. In: ICLR (2015)

Liu, L., Fieguth, P.W., Guo, Y., Wang, X., Pietikainen, M.: Local binary features for texture classification: Taxonomy and experimental study. Pattern Recognit. 62, 135–160 (2017)

Rosasco, L., Villa, S., Vu, B.C.: Convergence of stochastic proximal gradient algorithm. Appl. Math. Optim. 82, 891–917 (2020)

Perpinan, M. C., Idelbayev, Y.: Model Compression as Constrained Optimization, with Application to Neural Nets. Part II: quantization, preprint arXiv:1707.04319 (2017)

Courbariaux, M., Bengio, Y., David, J. P.: Binaryconnect: Training Deep Neural Networks with Binary Weights During Propagations. In: NeurIPS (2015)

Marchesi, M., Orlandi, G., Piazza, F., Uncini, A.: Fast neural networks without multipliers. IEEE Trans. Neural Networks 4(1), 53–62 (1993)

Rastegari, M., Ordonez, V., Redmon, J., Farhadi, A.: Xnor-net: Imagenet Classification using Binary Convolutional Neural Networks. In: ECCV (2016)

Tan, M., Le, Q. V.: Efficientnet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv e-prints, art. arXiv:1905.11946 (2019)

Keskar, N. S., Mudigere, D., Nocedal, J., Smelyanskiy, M., Tang, P. T.: On Large-Batch Training for Deep Learning: Generalization Gap and Sharp Minima. arXiv e-prints, arXiv:1609.04836 (2016)

Foret, P., Kleiner, A., Mobahi, H., Neyshabur, B.: Sharpness-Aware Minimization for Efficiently Improving Generalization. arXiv preprint arXiv:2010.01412v3 (2021)

Yin, P., Zhang, S., Lyu, J., Osher, S., Qi, Y., Xin, J.: Binaryrelax: A relaxation approach for training deep neural networks with quantized weights. SIAM J. Imaging Sci. 11(4), 2205–2223 (2018)

Yin, P., Zhang, S., Qi, Y., Xin, J.: Quantization and training of low bit-width convolutional neural networks for object detection. J. Comput. Math. 37, 349–359 (2019)

Liu, R., Gao, J., Zhang, J., Meng, D., Lin, Z.: Investigating bi-level Optimization for Learning and Vision from a Unified Perspective: A Survey and Beyond. arXiv preprint arXiv:2101.11517 (2021)

Ren, S., He, K., Girshick, R., Sun, J.: Faster r-cnn: Towards Real-Time Object Detection with Region Proposal networks. In: NeurIPS pp. 91–99 (2015)

Balzer, W., Takahashi, M., Ohta, J., Kyuma, K.: Weight quantization in boltzmann machines. Neural Netw. 4(3), 405–409 (1991)

Huang, Y., Cheng, Y., Bapna, A., Firat, O., Chen, M., Chen, D., Lee, H. J., Ngiam, J., Le, Q. V., Wu, Y., Chen, Z.: Gpipe: Efficient Training of Giant Neural Networks using Pipeline Parallelism. arXiv e-prints, arXiv:1811.06965 (2018)

Jiang, Y., Neyshabur, B., Mobahi, H., Krishnan, D., Bengio, S.: Fantastic Generalization Measures and Where to find Them. arXiv preprint arXiv:1912.02178 (2019)

LeCun, Y., Bottou, L., Bengio, Y., Haffner, P.: Gradient-based learning applied to document recognition. Proc. IEEE 86, 2278–2324 (1998)

LeCun, Y., Bengio, Y., Hinton, G.: Deep learning. Nature 521, 436–444 (2015)

Netzer, Y., Wang, T., Coates, A., Bissacco, A., Wu, B., Ng, A. Y.: Reading Digits in Natural Images with Unsupervised Feature Learning. In: NeurIPS (2011)

Acknowledgements

We would like to thank the anonymous referee for the careful reading and valuable comments, which greatly improved the quality of this manuscript. We also thank the Student Innovation Center at Shanghai Jiao Tong University for providing us with computing services. X. Zhang was supported by the National Natural Science Foundation of China (No. 12090024) and the Shanghai Municipal Science and Technology Major Project 2021SHZDZX0102. F. Bian was also partly supported by the outstanding Ph.D. graduates development scholarship of Shanghai Jiao Tong University.

Funding

National Natural Science Foundation of China (No. 12090024); Shanghai Municipal Science and Technology Major Project 2021SHZDZX0102.

Ethics declarations

Conflict of interest

The authors have not disclosed any competing interests.

About this article

Cite this article

Liu, R., Bian, F. & Zhang, X. Binary Quantized Network Training With Sharpness-Aware Minimization. J Sci Comput 94, 16 (2023). https://doi.org/10.1007/s10915-022-02064-7

DOI: https://doi.org/10.1007/s10915-022-02064-7