Abstract

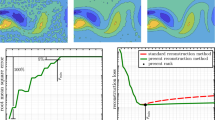

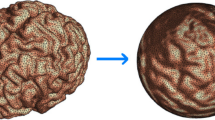

The log-sum penalty is often adopted as a replacement for the \(\ell _0\) pseudo-norm in compressive sensing and low-rank optimization. The proximity operator of the \(\ell _0\) penalty, i.e., the hard-thresholding operator, plays an essential role in applications; similarly, we require an efficient method for evaluating the proximity operator of the log-sum penalty. Due to the nonconvexity of this function, its proximity operator is commonly computed through the iteratively reweighted \(\ell _1\) method, which replaces the log-sum term with its first-order approximation. This paper reports that the proximity operator of the log-sum penalty actually has an explicit expression. With it, we show that the iteratively reweighted \(\ell _1\) solution disagrees with the true proximity operator in certain regions. As a by-product, the iteratively reweighted \(\ell _1\) solution is precisely characterized in terms of the chosen initialization. We also give the explicit form of the proximity operator for the composition of the log-sum penalty with the singular value function, as seen in low-rank applications. These results should be useful in the development of efficient and accurate algorithms for optimization problems involving the log-sum penalty. We present applications to solving compressive sensing problems and to mixed additive Gaussian white noise and impulse noise removal.
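The disagreement between the iteratively reweighted \(\ell _1\) solution and the true proximity operator can be seen in a scalar toy problem. The sketch below is illustrative only and is not taken from the paper: it assumes the scalar penalty \(\lambda \log (1+|x|/\epsilon )\) (the scaling used in the article may differ), and the function names `prox_logsum_scalar` and `irl1_scalar` are hypothetical. The global minimizer is found by comparing the objective at \(x=0\) with its value at the larger root of the stationarity equation \(x^2+(\epsilon -|z|)x+(\lambda -|z|\epsilon )=0\); the reweighted scheme repeatedly soft-thresholds with weight \(\lambda /(\epsilon +|x_k|)\).

```python
import math


def logsum_objective(x, z, lam, eps):
    """0.5*(x - z)^2 + lam*log(1 + |x|/eps); the scaling is an assumption."""
    return 0.5 * (x - z) ** 2 + lam * math.log(1.0 + abs(x) / eps)


def prox_logsum_scalar(z, lam, eps):
    """Global minimizer of the scalar objective (hypothetical helper).

    On x >= 0 the only candidates are x = 0 and the larger root of
    x^2 + (eps - |z|) x + (lam - |z| eps) = 0; the smaller root is a local
    maximum. At an exact tie the minimizer is not unique and 0 is returned.
    """
    a = abs(z)
    disc = (a + eps) ** 2 - 4.0 * lam
    candidates = [0.0]
    if disc >= 0.0:
        root = 0.5 * ((a - eps) + math.sqrt(disc))
        if root > 0.0:
            candidates.append(root)
    best = min(candidates, key=lambda x: logsum_objective(x, a, lam, eps))
    return math.copysign(best, z)


def soft_threshold(z, t):
    """Proximity operator of t*|x|."""
    return math.copysign(max(abs(z) - t, 0.0), z)


def irl1_scalar(z, lam, eps, x0, iters=200):
    """Iteratively reweighted l1: linearize the log term at the current x."""
    x = x0
    for _ in range(iters):
        weight = lam / (eps + abs(x))
        x = soft_threshold(z, weight)
    return x


if __name__ == "__main__":
    lam, eps = 1.0, 0.5
    for z, x0 in [(2.0, 0.0), (2.0, 2.0), (1.55, 1.55)]:
        print(z, x0, prox_logsum_scalar(z, lam, eps), irl1_scalar(z, lam, eps, x0))
```

With \(\lambda =1\) and \(\epsilon =0.5\), the reweighted iteration started at \(x_0=0\) stays at zero for \(z=2\) although the global minimizer is nonzero, while for \(z=1.55\) the iteration started at \(x_0=z\) settles at a nonzero stationary point although the global minimizer is zero. This is consistent with the abstract's remark that the reweighted solution depends on the chosen initialization.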

Data Availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

Acknowledgements

This work was funded in part by Air Force Office of Scientific Research (AFOSR) grant 21RICOR035. The work of L. Shen was supported in part by the National Science Foundation under grant DMS-1913039, 2020 U.S. Air Force Summer Faculty Fellowship Program, and the 2020 Air Force Visiting Faculty Research Program funded through AFOSR grant 18RICOR029. Any opinions, findings and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the U.S. Air Force Research Laboratory. Cleared for public release 08 Jan 2021: Case number AFRL-2021-0024.

Funding

The authors have not disclosed any funding.

Ethics declarations

Code Availability

The code generated for numerical experiments in this article is available from the corresponding author on reasonable request.

Competing interests

The authors have not disclosed any competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Prater-Bennette, A., Shen, L. & Tripp, E.E. The Proximity Operator of the Log-Sum Penalty. J Sci Comput 93, 67 (2022). https://doi.org/10.1007/s10915-022-02021-4

DOI: https://doi.org/10.1007/s10915-022-02021-4